The Stream Processing Model Behind Google Cloud Dataflow | by Vu Trinh | Apr, 2024

At the time the paper was written, data processing frameworks like MapReduce and its “cousins” such as Hadoop, Pig, Hive, or Spark allowed data consumers to process batch data at scale. On the stream processing side, tools like MillWheel, Spark Streaming, or Storm had emerged to support users. However, these existing models did not meet the requirements of some common use cases.

Consider an example: a streaming video provider’s revenue comes from billing advertisers for the amount of advertising watched on its content. The provider wants to know how much to bill each advertiser every day and to aggregate statistics about the videos and ads. It also wants to run offline experiments over large amounts of historical data, and to know how often and for how long its videos are being watched, with which content/ads, and by which demographic groups. All of this information must be available quickly so the business can be adjusted in near real-time. The processing system must also be simple and flexible enough to adapt to the business’s complexity, and it must handle global-scale data, since the Internet allows companies to reach more customers than ever. Here are some observations from people at Google about the state of the data processing systems of that time:

- Batch systems such as MapReduce, FlumeJava (an internal Google technology), and Spark cannot meet latency SLAs because they must wait for all input data to be collected into a batch before processing it.

- Stream processing systems that provide scalability and fault tolerance fall short on expressiveness or correctness.

- Many cannot provide exactly-once semantics, which impacts correctness.

- Others lack the primitives necessary for windowing, or provide windowing semantics that are limited to tuple- or processing-time-based windows (e.g., Spark Streaming).

- Most that provide event-time-based windowing rely on ordering or have limited window triggering.

- MillWheel and Spark Streaming are sufficiently scalable, fault-tolerant, and low-latency but lack high-level programming models.
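To make the windowing observations above concrete, here is a minimal sketch in plain Python (not any real engine’s API) that groups the same records into one-minute windows, first by event time and then by processing time. The sample records, the `WINDOW_SECONDS` constant, and the helper names are all made up for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical one-minute fixed windows

# (event_time, processing_time, ad_seconds_watched): made-up sample records.
# The second record happened at t=50 but arrived late, at t=130.
events = [
    (10, 12, 5),
    (50, 130, 7),
    (70, 75, 3),
]


def window_start(timestamp, size=WINDOW_SECONDS):
    """Return the start of the fixed window that contains `timestamp`."""
    return timestamp - (timestamp % size)


def sum_by_window(records, time_index):
    """Sum watched seconds per window, keyed on the chosen timestamp column."""
    totals = defaultdict(int)
    for record in records:
        totals[window_start(record[time_index])] += record[2]
    return dict(totals)


# Keyed on when the event occurred: the late record still lands in window 0.
by_event_time = sum_by_window(events, time_index=0)

# Keyed on when the system saw it: the late record lands in window 120.
by_processing_time = sum_by_window(events, time_index=1)
```

With processing-time windows, the late record is billed to the wrong minute; with event-time windows, it is counted where it actually occurred, but only if the system is willing to revisit a window after later data has already arrived.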

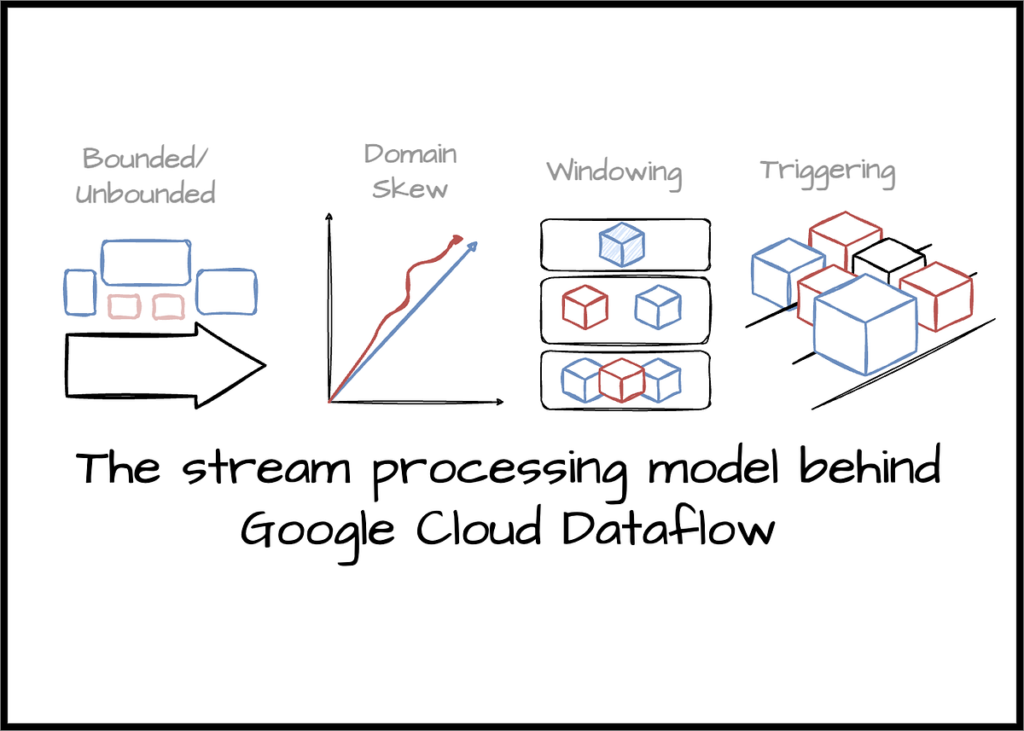

They conclude that the major weakness of all the models and systems mentioned above is the assumption that unbounded input data will eventually be complete. This assumption no longer makes sense when confronted with the realities of today’s enormous, highly disordered data. They also believe that any approach to solving diverse real-time workloads must provide simple but powerful interfaces for balancing correctness, latency, and cost based on the specific use case. From that perspective, the paper makes the following conceptual contributions to a unified stream processing model:

- Allowing the calculation of event-time-ordered results (ordered by when the event occurred) over an unbounded, unordered data source, with configurable combinations of correctness, latency, and cost.

- Separating pipeline implementation across four related dimensions:

– What results are being computed.

– Where in event time they are being computed.

– When they are materialized during processing time.

– How earlier results relate to later refinements.

- Separating the logical abstraction of data processing from the underlying physical implementation layer, which allows users to choose the processing engine.
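The four dimensions listed above can be illustrated with a toy function. This is only a sketch under stated assumptions: it is plain Python rather than the Dataflow or Apache Beam API, the name `run_pipeline` and its parameters are hypothetical, and it hard-codes one choice per dimension (a sum, fixed windows, a per-element trigger, and a flag for accumulating versus discarding panes).

```python
from collections import defaultdict


def run_pipeline(events, window_size, accumulate):
    """Toy pipeline with one hard-coded choice per dimension.

    What:  the sum of values per window.
    Where: fixed event-time windows of `window_size` seconds.
    When:  a pane is emitted every time an element arrives.
    How:   accumulate=True emits running totals that refine earlier panes;
           accumulate=False emits only the newly arrived value.
    """
    state = defaultdict(int)
    panes = []
    for event_time, value in events:  # iteration order is arrival order
        start = event_time - (event_time % window_size)
        if accumulate:
            state[start] += value
            panes.append((start, state[start]))
        else:
            panes.append((start, value))
    return panes


# (event_time, value) pairs; the third element arrives out of order.
sample = [(5, 1), (65, 4), (20, 2)]

accumulating = run_pipeline(sample, window_size=60, accumulate=True)
discarding = run_pipeline(sample, window_size=60, accumulate=False)
```

In accumulating mode the late element produces a refined total for window 0, while in discarding mode each pane stands alone and the consumer is responsible for combining them.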

In the rest of this blog, we’ll see how Google enables these contributions. One last thing before we move to the next section: Google noted that there’s “nothing magical about this model.” The model doesn’t make your computationally expensive task suddenly run faster; it provides a general framework that allows for the simple expression of parallel computation and is not tied to any specific execution engine like Spark or Flink.

The paper’s authors use the terms unbounded/bounded to describe infinite/finite data. They avoid the terms streaming/batch because those usually imply the use of a specific execution engine. Unbounded data is data that has no predefined boundary, e.g., the user interaction events of an active e-commerce application; the data stream only stops when the application is inactive. Bounded data, in contrast, has clear start and end boundaries, e.g., a daily data export from an operational database.
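As a rough illustration of the bounded/unbounded distinction, the sketch below models a bounded source as a finite list and an unbounded source as an endless generator, then applies the same transformation to both. The function names (`daily_export`, `click_stream`, `double`) and the data are hypothetical and not taken from the paper.

```python
import itertools


def daily_export():
    """Bounded source: a finite list standing in for a daily database export."""
    return [3, 1, 4]


def click_stream():
    """Unbounded source: an endless generator standing in for user events."""
    for n in itertools.count(1):
        yield n


def double(source):
    """The same logical transformation works on either kind of source."""
    for item in source:
        yield item * 2


# A bounded source can be consumed to completion.
bounded_result = list(double(daily_export()))

# An unbounded source never finishes, so only a prefix can be materialized.
unbounded_prefix = list(itertools.islice(double(click_stream()), 3))
```

The transformation itself does not care whether its input ends, which is the sense in which the logical description of a pipeline can stay independent of the engine that runs it.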