Bayesian Data Science: The What, Why, and How | by Samvardhan Vishnoi | Apr, 2024

Choosing between frequentist and Bayesian approaches is the great debate of the last century, with a recent surge in Bayesian adoption in the sciences.

What’s the difference?

The philosophical difference is actually quite subtle, and some propose that the great Bayesian critic, Fisher, was himself a Bayesian in some regard. While there are countless articles that delve into formulaic differences, what are the practical benefits? What does Bayesian analysis offer the lay data scientist that the vast plethora of widely adopted frequentist methods does not already? This article aims to give a practical introduction to the motivation, formulation, and application of Bayesian methods. Let’s dive in.

While frequentists deal with describing the exact distributions of any data, the Bayesian viewpoint is more subjective. Subjectivity and statistics?! Yes, they are actually compatible.

Let’s start with something simple, like a coin flip. Suppose you flip a coin 10 times, and get heads 7 times. What is the probability of heads?

P(heads) = 7/10 (0.7)?

Obviously, here we are plagued by a small sample size. From a Bayesian POV, however, we are allowed to encode our beliefs directly, asserting that if the coin is fair, the chance of heads or tails must be equal, i.e. 1/2. While in this example the choice seems pretty obvious, the debate is more nuanced when we get to more complex, less obvious phenomena.
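To make "encoding a belief" concrete, here is a minimal sketch. It assumes the belief is written as a Beta(a, b) prior on P(heads), which is one common choice and not something the example above prescribes. With h heads in n flips the posterior is then Beta(a + h, b + n - h). The prior strength a = b = 10 is a hypothetical value picked for illustration.

```python
# Sketch: combine a "the coin is probably fair" belief with 7 heads in 10 flips.
# Assumption: the belief is expressed as a Beta(a, b) prior; a = b = 10 is an
# illustrative choice, not a value taken from the article.

def posterior_mean(heads, flips, a, b):
    """Mean of the Beta(a + heads, b + tails) posterior for P(heads)."""
    tails = flips - heads
    return (a + heads) / ((a + heads) + (b + tails))

heads, flips = 7, 10

raw_estimate = heads / flips                        # data only
fair_prior = posterior_mean(heads, flips, 10, 10)   # belief centered on 0.5
flat_prior = posterior_mean(heads, flips, 1, 1)     # uniform belief over [0, 1]

print(f"raw frequency:        {raw_estimate:.3f}")
print(f"fair-coin prior:      {fair_prior:.3f}")
print(f"flat (uniform) prior: {flat_prior:.3f}")
```

The fair-coin prior pulls the estimate from 0.7 back toward 0.5, while the flat prior barely moves it. How hard to pull is exactly the subjective part.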

Yet, this simple example is a powerful starting point, highlighting both the greatest benefit and the greatest shortcoming of Bayesian analysis:

Benefit: Dealing with a lack of data. Suppose you are modeling the spread of an infection in a country where data collection is scarce. Will you use the small amount of data to derive all your insights? Or would you want to factor commonly seen patterns from similar countries into your model, i.e. informed prior beliefs? Although the choice is clear, it leads directly to the shortcoming.

Shortcoming: the prior belief is hard to formulate. For example, if the coin is not actually fair, it would be wrong to assume that P (heads) = 0.5, and there is almost no way to find the true P (heads) without a long-run experiment. In this case, assuming P (heads) = 0.5 would actually be detrimental to finding the truth. Yet every statistical model (frequentist or Bayesian) must make assumptions at some level, and the ‘statistical inferences’ in the human mind are actually a lot like Bayesian inference, i.e. constructing prior belief systems that factor into our decisions in every new situation. Additionally, formulating wrong prior beliefs is often not a death sentence from a modeling perspective either, if we can learn from enough data (more on this in later articles).
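A small sketch of that last point, under stated assumptions: the coin's true P(heads) is 0.7, the (mistaken) prior is a strong Beta(50, 50) belief in fairness, and each experiment is idealized to contain exactly 70% heads so the numbers are reproducible. None of these values come from the article.

```python
# Sketch: a mistaken "fair coin" prior gets overwhelmed as data accumulates.
# Assumptions: true P(heads) = 0.7, a Beta(50, 50) prior, and idealized
# experiments that contain exactly 70% heads (so the output is deterministic).

def posterior_mean(heads, flips, a=50, b=50):
    """Posterior mean of P(heads) under a Beta(a, b) prior."""
    return (a + heads) / (a + b + flips)

results = {}
for flips in (10, 100, 1_000, 100_000):
    heads = flips * 7 // 10          # exactly 70% heads
    results[flips] = posterior_mean(heads, flips)
    print(f"{flips:>7} flips -> posterior mean {results[flips]:.3f}")
```

With 10 flips the wrong prior dominates. With 100,000 flips the estimate sits very close to 0.7.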

So what does all this look like mathematically? Bayes’ rule lays the groundwork. Let’s suppose we have a parameter θ that defines some model which could describe our data (e.g. θ could represent the mean, variance, slope w.r.t. a covariate, etc.). Bayes’ rule states that

P (θ = t|data) ∝ P (data|θ = t) * P (θ = t)

In simpler terms,

- P (θ = t|data) represents the conditional probability that θ is equal to t, given our data (a.k.a. the posterior).

- Conversely, P (data|θ = t) represents the probability of observing our data, if θ = t (a.k.a. the ‘likelihood’).

- Finally, P (θ = t) is simply the probability that θ takes the value t (the infamous ‘prior’).

So what is this mysterious t? It can take many possible values, depending on what θ means. In fact, you want to try a lot of values, and compare the likelihood of your data for each. This is a key step, and you really, really hope that you checked the best possible values for θ, i.e. those which cover the region where the likelihood of seeing your data is highest (the global maximum of the likelihood, for those who care).

And that’s the crux of everything Bayesian inference does!

- Form a prior belief for possible values of θ,

- Scale it with the likelihood at each θ value, given the observed data, and

- Return the computed result, i.e. the posterior, which tells you the probability of each tested θ value.
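The three steps above can be sketched for the coin example in a few lines. This is a minimal illustration, not a definitive implementation. It assumes 7 heads in 10 flips, a binomial likelihood, and a hypothetical prior that peaks at 0.5. The names `grid`, `prior`, `likelihood`, and `posterior` are chosen for this example only.

```python
# Sketch of the three steps on a grid of candidate values t for θ = P(heads).
# Assumptions: 7 heads in 10 flips, a binomial likelihood, and a hypothetical
# prior that peaks at 0.5.
from math import comb

heads, flips = 7, 10
grid = [i / 100 for i in range(101)]        # candidate values t in [0, 1]

# 1. Prior belief for each candidate value (unnormalized, peaked at 0.5).
prior = [(t * (1 - t)) ** 4 for t in grid]

# 2. Likelihood of the observed data at each candidate value.
likelihood = [comb(flips, heads) * t**heads * (1 - t) ** (flips - heads)
              for t in grid]

# 3. Posterior: prior times likelihood, normalized so it sums to 1.
unnormalized = [p * l for p, l in zip(prior, likelihood)]
total = sum(unnormalized)
posterior = [u / total for u in unnormalized]

best = grid[posterior.index(max(posterior))]
print(f"most probable value of θ on the grid: {best:.2f}")
```

The normalization in step 3 is what turns the "∝" in Bayes’ rule into an equality: dividing by the sum makes the posterior a proper probability distribution over the tested values.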

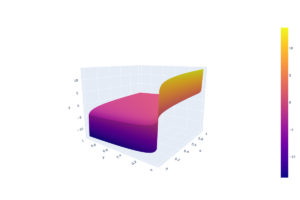

Graphically, this appears to be like one thing like:

Which highlights the following huge benefits of Bayesian stats-

- We now have an idea of the entire shape of θ’s distribution (e.g. how wide the peak is, how heavy the tails are, etc.), which can enable more robust inferences. Why? Simply because we can not only better understand but also quantify the uncertainty (as compared to a traditional point estimate with a standard deviation).

- Since the process is iterative, we can constantly update our beliefs (estimates) as more data flows into our model, making it much easier to build fully online models.
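The iterative point can be checked with a short sketch. It assumes a grid over θ = P(heads), a flat starting prior, and two hypothetical batches of coin flips. The `update` helper is made up for this example. The binomial coefficient is left out of the likelihood because it is constant in θ and cancels when renormalizing.

```python
# Sketch: updating beliefs batch by batch gives the same posterior as using
# all the data at once. Assumptions: a grid over θ = P(heads), a flat prior,
# and two hypothetical batches of coin flips.

grid = [i / 100 for i in range(101)]

def update(belief, heads, tails):
    """Scale the current belief by the likelihood of a new batch, renormalize."""
    scaled = [b * t**heads * (1 - t) ** tails for b, t in zip(belief, grid)]
    total = sum(scaled)
    return [s / total for s in scaled]

flat = [1 / len(grid)] * len(grid)

# Online: the posterior from the first batch becomes the prior for the second.
after_first = update(flat, heads=7, tails=3)
online = update(after_first, heads=12, tails=8)

# Batch: all 30 flips processed in one go.
batch = update(flat, heads=19, tails=11)

largest_gap = max(abs(o - b) for o, b in zip(online, batch))
print(f"largest difference between online and batch: {largest_gap:.2e}")
```

The two routes agree up to floating-point error, which is why yesterday's posterior can serve as today's prior.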

Easy enough! But not quite…

This process involves a lot of computation, since you have to calculate the likelihood for each possible value of θ. Okay, maybe this is easy if, say, θ lies in a small range like [0,1]. We can just use the brute-force grid method, testing values at discrete intervals (10 intervals of 0.1, or 100 intervals of 0.01, or more… you get the idea) to map the entire space at the desired resolution.

But what if the space is huge and, god forbid, more parameters are involved, like in any real-life modeling scenario?

Now we have to test not only the possible parameter values but also all their possible combinations, i.e. the solution space expands exponentially, rendering a grid search computationally infeasible. Luckily, physicists have worked on the problem of efficient sampling, and advanced algorithms exist today (e.g. Metropolis-Hastings MCMC, Variational Inference) that can quickly explore high-dimensional parameter spaces and home in on the regions of high posterior probability. You don’t have to code these complex algorithms yourself either: probabilistic programming languages like PyMC or STAN make the process highly streamlined and intuitive.
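To give a feel for what such a sampler does, here is a minimal random-walk Metropolis sketch (the simplest special case of Metropolis-Hastings) for the one-parameter coin example. It is a toy under stated assumptions and not how PyMC or STAN work internally. The assumptions are 7 heads in 10 flips, a flat prior on θ, a Gaussian proposal with a hypothetical step size of 0.1, and a fixed seed.

```python
# Minimal random-walk Metropolis sketch for the coin example.
# Assumptions: 7 heads in 10 flips, a flat prior on θ in (0, 1), a Gaussian
# proposal with step size 0.1, and a fixed seed for reproducibility.
import math
import random

heads, tails = 7, 3

def log_posterior(theta):
    """Unnormalized log posterior: log likelihood plus log of a flat prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf                      # outside the prior's support
    return heads * math.log(theta) + tails * math.log(1.0 - theta)

def metropolis(n_samples, step=0.1, start=0.5, seed=0):
    rng = random.Random(seed)
    theta, samples = start, []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        # Accept with probability min(1, posterior ratio). The proposal is
        # symmetric, so no Hastings correction term is needed.
        log_ratio = log_posterior(proposal) - log_posterior(theta)
        if rng.random() < math.exp(min(0.0, log_ratio)):
            theta = proposal
        samples.append(theta)
    return samples

samples = metropolis(20_000)[2_000:]          # drop the first 2,000 as burn-in
estimate = sum(samples) / len(samples)
print(f"posterior mean of θ is roughly {estimate:.2f}")
```

Instead of evaluating every grid point, the sampler wanders through parameter space and spends most of its time where the posterior is large. That matters because a grid with 100 values per parameter needs 100^d evaluations for d parameters.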

STAN

STAN is my favorite, as it allows interfacing with more common data science languages like Python, R, Julia, MATLAB, etc., which aids adoption. STAN relies on state-of-the-art Hamiltonian Monte Carlo sampling techniques that virtually guarantee reasonably timed convergence for well-specified models. In my next article, I will cover how to get started with STAN for simple as well as not-so-simple regression models, with a full Python code walkthrough. I will also cover the full Bayesian modeling workflow, which involves model specification, fitting, visualization, comparison, and interpretation.

Follow & stay tuned!