UC Berkeley and Microsoft Research Redefine Visual Understanding: How Scaling on Scales Outperforms Larger Models with Efficiency and Elegance

In the dynamic realm of computer vision and artificial intelligence, a new approach challenges the traditional trend of building larger models for advanced visual understanding. That trend, underpinned by the belief that larger models yield more powerful representations, has led to the development of gigantic vision models.

Central to this exploration is a critical examination of the prevailing practice of model upscaling. This scrutiny brings to light the significant resource expenditure and the diminishing returns on performance that come with continually enlarging model architectures. It raises a pertinent question about the sustainability and efficiency of this approach, especially in a domain where computational resources are invaluable.

Researchers from UC Berkeley and Microsoft Research introduced an innovative technique called Scaling on Scales (S2). This method represents a paradigm shift, proposing a strategy that diverges from traditional model scaling. By applying a pre-trained, smaller vision model across various image scales, S2 extracts multi-scale representations, offering a new lens through which visual understanding can be enhanced without necessarily increasing the model’s size.

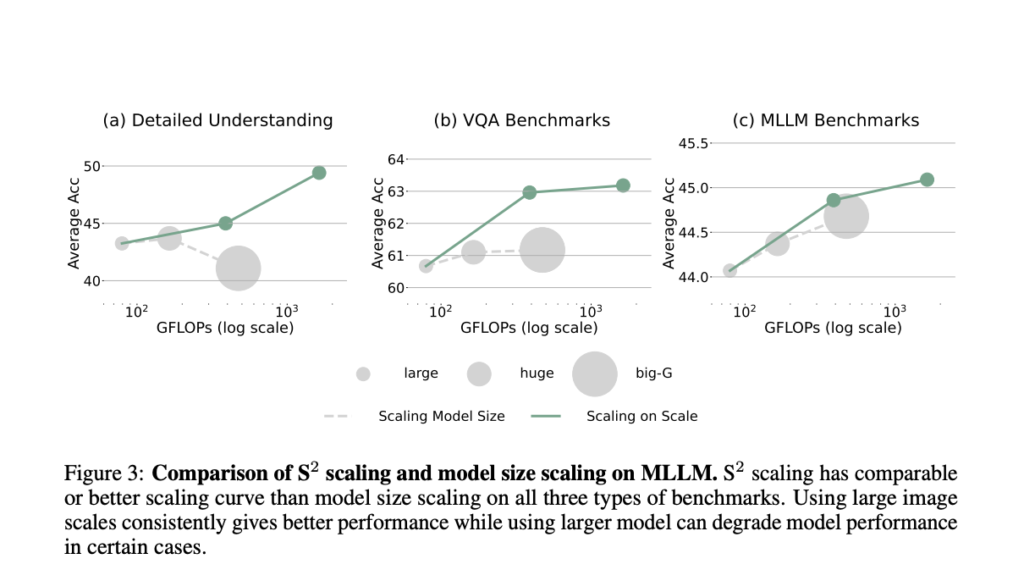
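
The multi-scale idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `toy_encoder` is a hypothetical stand-in for a frozen pre-trained vision model, and the tiling of larger scales into base-size crops, the average pooling, and the channel-wise concatenation are assumptions about how features from different scales might be merged, since the article does not spell these steps out. All function names and parameters are illustrative.

```python
import numpy as np


def resize_nearest(image, size):
    """Resize a square (H, W, C) image to (size, size, C) by nearest-neighbour sampling."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows][:, cols]


def avg_pool(fmap, factor):
    """Average-pool an (H, W, C) map by an integer factor along both spatial axes."""
    h, w, c = fmap.shape
    return fmap.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))


def toy_encoder(tile, patch=4):
    """Hypothetical stand-in for a frozen pre-trained vision model (assumption).

    Maps a (base, base, C) tile to a (base // patch, base // patch, C) feature map.
    """
    return avg_pool(tile, patch)


def multiscale_features(image, encoder, base=16, scales=(1, 2)):
    """Run the same encoder at several image scales and concatenate the features."""
    per_scale = []
    for s in scales:
        resized = resize_nearest(image, base * s)
        # Split the resized image into an s x s grid of base-size tiles so the
        # encoder always sees the input size it expects (assumed merging scheme).
        rows = []
        for i in range(s):
            row = [
                encoder(resized[i * base:(i + 1) * base, j * base:(j + 1) * base])
                for j in range(s)
            ]
            rows.append(np.concatenate(row, axis=1))
        stitched = np.concatenate(rows, axis=0)
        # Pool the stitched map back to the scale-1 grid so all scales align.
        per_scale.append(avg_pool(stitched, s))
    # Stack the per-scale maps along the channel axis.
    return np.concatenate(per_scale, axis=-1)


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
features = multiscale_features(image, toy_encoder)
print(features.shape)
```

With two scales and a 3-channel toy encoder, the result keeps the 4 x 4 spatial grid of the base scale while the channel dimension doubles to 6. The encoder itself is unchanged; only the number of forward passes and the width of the output grow.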

Leveraging multiple image scales produces a composite representation that rivals or surpasses the output of much larger models. The research showcases the S2 technique across several benchmarks, where it consistently outperforms its larger counterparts in tasks including classification, semantic segmentation, and depth estimation. It sets a new state of the art in multimodal LLM (MLLM) visual detail understanding on the V* benchmark, outstripping even commercial models like Gemini Pro and GPT-4V, with significantly fewer parameters and comparable or reduced computational demands.

For instance, in robotic manipulation tasks, S2 scaling on a base-size model improved the success rate by about 20%, demonstrating its advantage over mere model-size scaling. LLaVA-1.5 with S2 scaling achieved remarkable accuracy in detailed understanding, scoring 76.3% on V* Attention and 63.2% on V* Spatial. These figures underscore the effectiveness of S2 and highlight its efficiency and its potential for reducing computational resource expenditure.

This research sheds light on the increasingly pertinent question of whether the relentless scaling of model sizes is truly necessary for advancing visual understanding. Through the lens of the S2 technique, it becomes evident that alternative scaling methods, particularly those that exploit the multi-scale nature of visual data, can deliver equally compelling, if not superior, performance. This approach challenges the prevailing paradigm and opens up new avenues for resource-efficient and scalable model development in computer vision.

In conclusion, the introduction and validation of the Scaling on Scales (S2) method represents a significant breakthrough in computer vision and artificial intelligence. The research argues compellingly for a departure from the prevalent expansion of model size toward a more nuanced and efficient scaling strategy that leverages multi-scale image representations. In doing so, it demonstrates the potential for achieving state-of-the-art performance across visual tasks and underscores the importance of innovative scaling techniques in promoting computational efficiency and resource sustainability in AI development. The S2 method, with its ability to rival or even surpass the output of much larger models, offers a promising alternative to traditional model scaling and highlights its potential to revolutionize the field.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.
