GeFF: Revolutionizing Robot Perception and Action with Scene-Level Generalizable Neural Feature Fields

You're strolling down a bustling city street, carefully cradling your morning coffee, when a whirring sound catches your attention. Suddenly, a knee-high delivery robot zips past you on the crowded sidewalk. With remarkable dexterity, it smoothly avoids colliding with pedestrians, strollers, and obstructions, deftly plotting a clear path forward. This isn't some sci-fi scene. It's the cutting-edge technology of GeFF flexing its capabilities right before your eyes.

So what exactly is GeFF? It stands for Generalizable Neural Feature Fields, and it represents a potential paradigm shift in how robots perceive and interact with their complex environments. Until now, even the most advanced robots have struggled to reliably interpret and adapt to endlessly varied real-world scenes. This novel approach may have finally cracked the code.

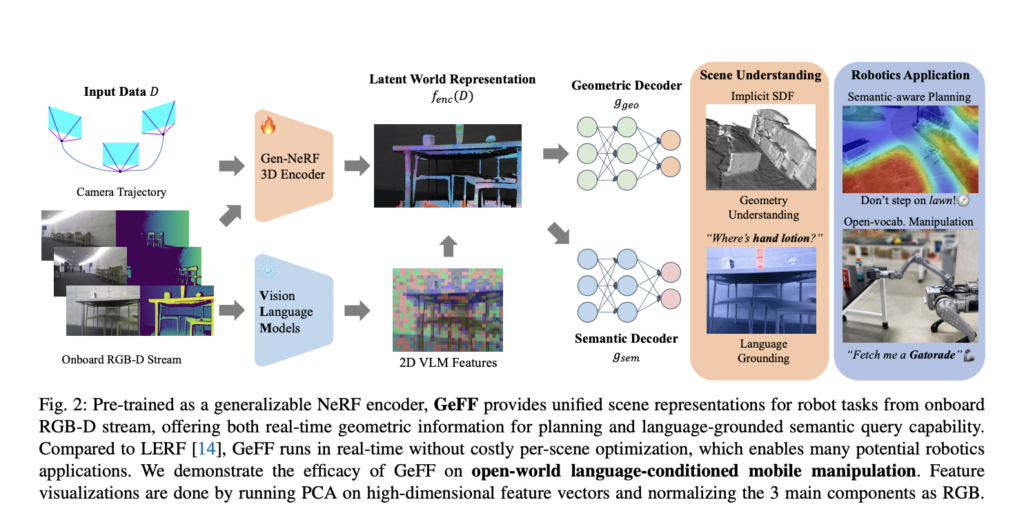

Here's a simplified rundown of how GeFF works. Traditionally, robots use sensors like cameras and lidar to capture raw data about their surroundings: shapes, objects, distances, and other granular elements. GeFF takes a radically different tack. Using neural networks, it analyzes the full, rich 3D scene captured by RGB-D cameras and coherently encodes the scene's geometric and semantic meaning in a single unified representation.
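To make the "3D scene from RGB-D" idea concrete, here is a minimal sketch of the standard pinhole back-projection that lifts a depth image into 3D points. It is not GeFF's code: the function name `backproject_depth`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the toy depth values are all assumptions chosen for illustration.

```python
def backproject_depth(depth, fx, fy, cx, cy):
    """Lift a depth image (rows of depths in metres) to camera-frame 3D points.

    Uses the standard pinhole camera model. All parameter names are
    illustrative assumptions, not part of GeFF.
    """
    points = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, z in enumerate(row):      # u: pixel column index, z: depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Toy 2x2 depth image in which every pixel is 2 m away (made-up values).
depth = [[2.0, 2.0], [2.0, 2.0]]
points = backproject_depth(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

A learned representation like the one the article describes would then attach geometry and semantic features to points such as these; that step is beyond this sketch.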

But GeFF isn't merely building a super high-res 3D map of its environment. In an ingenious twist, it aligns that unified spatial representation with the natural language and descriptions that humans use to make sense of spaces and objects. The robot thereby develops a conceptual, intuitive understanding of what it perceives, and can contextualize a scene as "a cluttered living room with a couch, TV, side table, and a potted plant in the corner," just as you or I would.
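One common way to use a language-aligned representation is to compare each scene point's feature vector with the embedding of a text query and keep the points that score highly. The sketch below illustrates that idea with cosine similarity. The feature values, the `couch_embedding` vector, the `query_points` helper, and the 0.8 threshold are hypothetical; the article does not describe how GeFF performs this step.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def query_points(point_features, text_embedding, threshold=0.8):
    """Return indices of points whose feature matches the text embedding.

    Hypothetical helper for illustration only.
    """
    return [
        i
        for i, feature in enumerate(point_features)
        if cosine_similarity(feature, text_embedding) >= threshold
    ]


# Made-up 3-dimensional features for four scene points. Real embeddings
# would have hundreds of dimensions and come from trained networks.
point_features = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 1.0, 0.0],
    [0.1, 0.0, 0.9],
]
couch_embedding = [1.0, 0.0, 0.0]  # stand-in for an encoded "couch" query

matches = query_points(point_features, couch_embedding)
```

With these toy values, only the first two points are similar enough to the query to be selected, which is how a text prompt can pick out a region of a scene.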

The potential implications of this capability are truly mind-bending. By leveraging GeFF, robots can navigate unfamiliar, unmapped environments much as humans do, using rich visual and linguistic cues to reason, comprehend their surroundings, and dynamically plan paths to quickly find their way. They can rapidly detect and avoid obstacles, identifying and deftly maneuvering around impediments like that cluster of pedestrians blocking the sidewalk up ahead. In perhaps the most remarkable application, robots powered by GeFF can even manipulate and make sense of objects they have never directly encountered before, in real time.

This sci-fi futurism is already being realized today. GeFF is actively being deployed and tested on actual robotic systems operating in real-world environments like university labs, corporate offices, and even households. Researchers use it for various cutting-edge tasks: having robots avoid dynamic obstacles, locate and retrieve specific objects based on voice commands, perform intricate multilevel planning for navigation and manipulation, and more.

Naturally, this paradigm shift is still in its relative infancy, with immense room for growth and refinement. The systems' performance must still be hardened for extreme conditions and edge cases. The underlying neural representations driving GeFF's perception need further optimization. Integrating GeFF's high-level planning with lower-level robotic control systems remains an intricate challenge.

But make no mistake: GeFF represents a bona fide breakthrough that could completely reshape the field of robotics as we know it. For the first time, we're catching glimpses of robots that can deeply perceive, comprehend, and make fluid decisions about the rich spatial worlds around them in a gazelle-like fashion, edging us tantalizingly closer to robots that can truly operate autonomously and naturally alongside humans.

In conclusion, GeFF stands at the forefront of innovation in robotics, offering a powerful framework for scene-level perception and action. With its ability to generalize across scenes, leverage semantic knowledge, and operate in real time, GeFF paves the way for a new era of autonomous robots capable of navigating and manipulating their surroundings with unprecedented sophistication and adaptability. As research in this field continues to evolve, GeFF is poised to play a pivotal role in shaping the future of robotics.

Check out the Paper. All credit for this research goes to the researchers of this project.
