What It Is, Why It Matters, and How to Implement It

LLMOps involves managing the entire lifecycle of Large Language Models (LLMs), including data and prompt management, model fine-tuning and evaluation, pipeline orchestration, and LLM deployment.

While there are many similarities with MLOps, LLMOps is unique because it requires specialized handling of natural-language data, prompt-response management, and complex ethical considerations.

Retrieval Augmented Generation (RAG) enables LLMs to extract and synthesize information like an advanced search engine. However, transforming raw LLMs into production-ready applications presents complex challenges.

LLMOps encompasses best practices and a diverse tooling landscape. Tools range from data platforms to vector databases, embedding providers, fine-tuning platforms, prompt engineering, evaluation tools, orchestration frameworks, observability platforms, and LLM API gateways.

Large Language Models (LLMs) like Meta AI’s LLaMA models, Mistral AI’s open models, and OpenAI’s GPT series have improved language-based AI. These models excel at various tasks, such as translating languages with remarkable accuracy, generating creative writing, and even coding software.

A particularly notable application is Retrieval-Augmented Generation (RAG). RAG enables LLMs to pull relevant information from vast databases to answer questions or provide context, acting as a supercharged search engine that finds, understands, and integrates information.

This article serves as your comprehensive guide to LLMOps.

What is Large Language Model Operations (LLMOps)?

LLMOps (Large Language Model Operations) focuses on operationalizing the entire lifecycle of large language models (LLMs), from data and prompt management to model training, fine-tuning, evaluation, deployment, monitoring, and maintenance.

LLMOps is key to turning LLMs into scalable, production-ready AI tools. It addresses the unique challenges teams face when deploying Large Language Models, simplifies their delivery to end users, and improves scalability.

LLMOps involves:

- Infrastructure management: Streamlining the technical backbone for LLM deployment to support robust and efficient model operations.

- Prompt-response management: Refining LLM-backed applications through continuous prompt-response optimization and quality control.

- Data and workflow orchestration: Ensuring efficient data pipeline management and scalable workflows for LLM performance.

- Model reliability and ethics: Monitoring performance regularly and maintaining ethical oversight to uphold standards and address biases.

- Security and compliance: Protecting against adversarial attacks and ensuring regulatory adherence in LLM applications.

- Adapting to technological evolution: Incorporating the latest LLM advancements for cutting-edge, customized applications.

Machine Learning Operations (MLOps) vs Large Language Model Operations (LLMOps)

LLMOps falls under MLOps (Machine Learning Operations). You can think of it as a sub-discipline focusing on Large Language Models. Many MLOps best practices apply to LLMOps, like managing infrastructure, handling data processing pipelines, and maintaining models in production.

The main difference is that operationalizing LLMs involves additional, specific tasks like prompt engineering, LLM chaining, and monitoring context relevance, toxicity, and hallucinations.

The following table provides a more detailed comparison:

| Task | MLOps | LLMOps |
|---|---|---|
| Scope | Developing and deploying machine-learning models. | Specifically focused on LLMs. |
| Model adaptation | If employed, it typically focuses on transfer learning and retraining. | Centers on fine-tuning pre-trained models like GPT-3.5 with efficient methods and enhancing model performance through prompt engineering and retrieval augmented generation (RAG). |
| Evaluation | Evaluation relies on well-defined performance metrics. | Evaluating text quality and response accuracy often requires human feedback due to the complexity of language understanding (e.g., using techniques like RLHF). |
| Model management | Teams typically manage their models, including versioning and metadata. | Models are often externally hosted and accessed via APIs. |
| Deployment | Deploy models through pipelines, typically involving feature stores and containerization. | Models are part of chains and agents, supported by specialized tools like vector databases. |
| Monitoring | Monitor model performance for data drift and model degradation, often using automated monitoring tools. | Expands traditional monitoring to include prompt-response efficacy, context relevance, hallucination detection, and security against prompt injection threats. |

The three levels of LLMOps: How teams are implementing LLMOps

Adoption of LLMs by teams across various sectors often begins with the simplest approach and advances towards more complex and customized implementations as needs evolve. This path reflects increasing levels of commitment, expertise, and resources dedicated to leveraging LLMs.

Using off-the-shelf Large Language Model APIs

Teams often start with off-the-shelf LLM APIs, such as OpenAI’s GPT-3.5, for rapid solution validation or to quickly add an LLM-powered feature to an application.

This approach is a practical entry point for smaller teams or projects under tight resource constraints. While it offers a straightforward path to integrating advanced LLM capabilities, this stage has limitations, including less flexibility in customization, reliance on external service providers, and potential cost increases with scaling.

Fine-tuning and serving pre-trained Large Language Models

As needs become more specific and off-the-shelf APIs prove insufficient, teams progress to fine-tuning pre-trained models like Llama-2-70B or Mixtral 8x7B. This middle ground balances customization and resource management, so teams can adapt these models to niche use cases or proprietary data sets.

The process is more resource-intensive than using APIs directly. However, it provides a tailored experience that leverages the inherent strengths of pre-trained models without the exorbitant cost of training from scratch. This stage introduces challenges such as the need for quality domain-specific data, the risk of overfitting, and navigating potential licensing issues.

Training and serving LLMs

For larger organizations or dedicated research teams, the journey may involve training LLMs from scratch, a path taken when existing models fail to meet an application’s unique demands or when pushing the envelope of innovation.

This approach allows for customizing the model’s training process. However, it entails substantial investments in computational resources and expertise. Training LLMs from scratch is a complex and time-consuming process, and there is no guarantee that the resulting model will outperform pre-existing models.

Understanding the LLMOps components and their role in the LLM lifecycle

Machine learning and application teams are increasingly adopting approaches that integrate LLM APIs with their existing technology stacks, fine-tune pre-trained models, or, in rarer cases, train models from scratch.

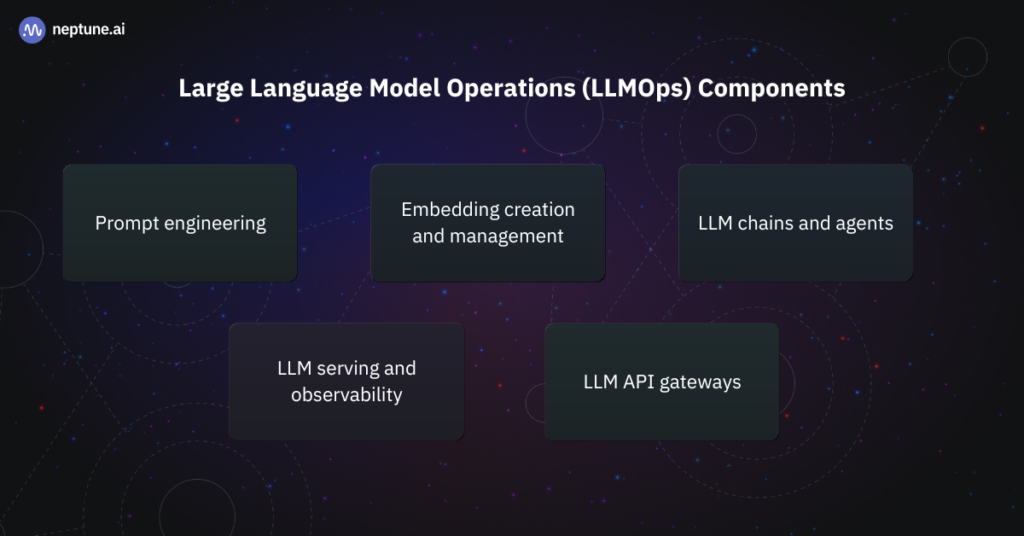

Key components, tools, and practices of LLMOps include:

- Prompt engineering: Manage and experiment with prompt-response pairs.

- Embedding creation and management: Manage embeddings with vector databases.

- LLM chains and agents: Crucial in LLMOps for using the full spectrum of capabilities different LLMs offer.

- LLM evaluations: Use intrinsic and extrinsic metrics to evaluate LLM performance holistically.

- LLM serving and observability: Deploy LLMs for inference and manage production resource usage. Continuously monitor model performance and integrate human insights for improvements.

- LLM API gateways: Consume, orchestrate, scale, monitor, and manage APIs from a single ingress point to integrate them into production applications.

Prompt engineering

Prompt engineering involves crafting queries (prompts) that guide LLMs to generate specific, desired responses. The quality and structure of prompts significantly influence LLMs’ output. In applications like customer support chatbots, content generation, and complex task performance, prompt engineering techniques ensure LLMs understand the specific task at hand and respond accurately.

Prompts drive LLM interactions, and a well-designed prompt makes the difference between a response that hits the mark and one that misses it. It’s not just about what you ask but how you ask it. Effective prompt engineering can dramatically improve the usability and value of LLM-powered applications.

The main challenges of prompt engineering

- Crafting effective prompts: Finding the right wording that consistently triggers the desired response from an LLM is more art than science.

- Contextual relevance: Ensuring prompts provide enough context for the LLM to generate appropriate and accurate responses.

- Scalability: Managing and refining an ever-growing library of prompts for different tasks, models, and applications.

- Evaluation: Measuring the effectiveness of prompts and their impact on the LLM’s responses.

Prompt engineering best practices

- Iterative testing and refinement: Continuously experiment with and refine prompts. Start with a basic prompt and evolve it based on the LLM’s responses, using techniques like A/B testing to find the most effective structures and phrasing.

- Incorporate context: Always include sufficient context within prompts to guide the LLM’s understanding and response generation. This is crucial for complex or nuanced tasks (consider techniques like few-shot and chain-of-thought prompting).

- Monitor prompt performance: Track how different prompts influence outcomes. Use key metrics like response accuracy, relevance, and timeliness to evaluate prompt effectiveness.

- Feedback loops: Use automated and human feedback to improve prompt design continuously. Analyze performance metrics and gather insights from users or experts to refine prompts.

- Automate prompt selection: Implement systems that automatically choose the best prompt for a given task using historical data on prompt performance and the specifics of the current request.
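
As a rough illustration of automated prompt selection, the sketch below picks the prompt variant with the highest average historical score for a task. The log format, the task names, and the `select_prompt` helper are all assumptions made for this example; a production system would likely also consider sample sizes and request-specific features.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical evaluation log: (task, prompt_id, score between 0 and 1).
HISTORY = [
    ("late_delivery", "terse", 0.55),
    ("late_delivery", "terse", 0.60),
    ("late_delivery", "with_context", 0.85),
    ("late_delivery", "with_context", 0.80),
    ("refund", "terse", 0.70),
]

def select_prompt(task, history, default="terse"):
    """Return the prompt id with the highest mean score for this task."""
    scores = defaultdict(list)
    for logged_task, prompt_id, score in history:
        if logged_task == task:
            scores[prompt_id].append(score)
    if not scores:
        return default  # no history for this task yet
    return max(scores, key=lambda prompt_id: mean(scores[prompt_id]))

best = select_prompt("late_delivery", HISTORY)
print(best)
```

Selecting by mean score is the simplest possible policy. An A/B testing setup would keep sending a share of traffic to the weaker variants so their scores stay current.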

Example: Prompt engineering for a chatbot

Let’s imagine we’re developing a chatbot for customer service. An initial prompt might be simple: “Customer inquiry: late delivery.”

But with context, we expect a much more fitting response. A prompt that provides the LLM with background information might look as follows:

“The customer has bought from our store $N times in the past six months and ordered the same product $M times. The latest shipment of this product is delayed by $T days. The customer is inquiring: $QUESTION.”

In this prompt template, various information from the CRM system is injected:

- $N represents the total number of purchases the customer has made in the past six months.

- $M indicates how many times the customer has ordered this specific product.

- $T details the delay in days for the most recent shipment.

- $QUESTION is the specific query or concern raised by the customer regarding the delay.

With this detailed context provided to the chatbot, it can craft responses acknowledging the customer’s frequent patronage and specific issues with the delayed product.

Through an iterative process grounded in prompt engineering best practices, we can improve this prompt to ensure that the chatbot effectively understands and addresses customer concerns with nuance.
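
One way to fill such a template is Python's standard `string.Template`, which uses the same `$NAME` placeholder syntax. This is a minimal sketch; the CRM record is assumed to be a plain dictionary, and its field names (`purchases_6m`, `product_orders`, `delay_days`) are invented for illustration.

```python
from string import Template

PROMPT = Template(
    "The customer has bought from our store $N times in the past six months "
    "and ordered the same product $M times. The latest shipment of this "
    "product is delayed by $T days. The customer is inquiring: $QUESTION."
)

def render_prompt(crm_record, question):
    """Fill the template from a CRM record (a plain dict in this sketch)."""
    # substitute() raises KeyError if a placeholder is left unfilled,
    # so an incomplete prompt never reaches the model silently.
    return PROMPT.substitute(
        N=crm_record["purchases_6m"],
        M=crm_record["product_orders"],
        T=crm_record["delay_days"],
        QUESTION=question,
    )

record = {"purchases_6m": 7, "product_orders": 3, "delay_days": 4}
prompt = render_prompt(record, "Where is my order?")
print(prompt)
```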

Embedding creation and management

Creating and managing embeddings is a key process in LLMOps. It involves transforming textual data into numerical form, known as embeddings, representing the semantic meaning of words, sentences, or documents in a high-dimensional vector space.

Embeddings are essential for LLMs to understand natural language, enabling them to perform tasks like text classification, question answering, and more.

Vector databases and Retrieval-Augmented Generation (RAG) are pivotal components in this context:

- Vector databases: Specialized databases designed to store and manage embeddings efficiently. They support high-speed similarity search, which is fundamental for tasks that require finding the most relevant information in a large dataset.

- Retrieval-Augmented Generation (RAG): RAG combines the power of retrieval from vector databases with the generative capabilities of LLMs. Relevant information from a corpus is used as context to generate responses or perform specific tasks.

The main challenges of embedding creation and management

- Quality of embeddings: Ensuring the embeddings accurately represent the semantic meanings of text is challenging but crucial for the effectiveness of retrieval and generation tasks.

- Efficiency of vector databases: Balancing retrieval speed with accuracy in large, dynamic datasets requires optimized indexing strategies and infrastructure.

Embedding creation and management best practices

- Regular updating: Continuously update the embeddings and the corpus in the vector database to reflect the latest information and language usage.

- Optimization: Use database optimizations like approximate nearest neighbor (ANN) search algorithms to balance speed and accuracy in retrieval tasks.

- Integration with LLMs: Integrate vector databases and RAG techniques with LLMs to leverage the strengths of both retrieval and generative processes.

Example: An LLM that queries a vector database for customer service interactions

Consider a company that uses an LLM to provide customer support through a chatbot. The chatbot is trained on a vast corpus of customer service interactions. When a customer asks a question, the LLM converts this query into a vector and queries the vector database to find similar past queries and their responses.

The database efficiently retrieves the most relevant interactions, allowing the chatbot to provide accurate and contextually appropriate responses. This setup improves customer satisfaction and enhances the chatbot’s learning and adaptability.
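
The retrieval step can be sketched with an exact cosine-similarity search over a tiny in-memory list. The three-dimensional vectors and stored responses below are made up for illustration. A real system would obtain embeddings from an embedding model and use a vector database with ANN indexing instead of a full scan.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Toy stand-in for a vector database: (embedding, past query, stored response).
STORE = [
    ([0.9, 0.1, 0.0], "My package is late", "Here is your tracking link."),
    ([0.1, 0.9, 0.0], "How do I reset my password?", "Use the reset link on the login page."),
    ([0.0, 0.2, 0.9], "Can I get a refund?", "Refunds are handled by the billing team."),
]

def top_k(query_vec, store, k=2):
    """Return the k stored rows most similar to the query vector."""
    ranked = sorted(store, key=lambda row: cosine(query_vec, row[0]), reverse=True)
    return ranked[:k]

# Hypothetical embedding of a new customer query about a delayed order.
hits = top_k([0.8, 0.2, 0.1], STORE, k=1)
print(hits[0][1])
```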

LLM chains and agents

LLM chains and agents orchestrate multiple LLMs or their APIs to solve complex tasks that a single LLM might not handle efficiently. Chains refer to sequential processing steps where the output of one LLM serves as the input to another. Agents are autonomous systems that use multiple LLMs to execute and manage tasks within an application.

Chains and agents allow developers to create sophisticated applications that can understand context, generate more accurate responses, and handle complex tasks.

The main challenges of LLM chains and agents

- Integration complexity: Combining multiple LLMs or APIs can be technically challenging and requires careful data flow management.

- Performance and consistency: Ensuring the integrated system maintains high performance and generates consistent outputs.

- Error propagation: In chains, errors from one model can cascade, impacting the overall system’s effectiveness.

LLM chains and agents best practices

- Modular design: Adopt a modular approach where each component can be updated, replaced, or debugged independently. This improves the system’s flexibility and maintainability.

- API gateways: Use API gateways to manage interactions between your application and the LLMs. This simplifies integration and provides a single point for monitoring and security.

- Error handling: Implement robust error detection and handling mechanisms to minimize the impact of errors in one part of the system on the overall application’s performance.

- Performance monitoring: Continuously monitor the performance of each component and the system as a whole. Use metrics specific to each LLM’s role within the application to ensure optimal operation.

- Unified data format: Standardize the data format across all LLMs in the chain to reduce transformation overhead and simplify data flow.

Example: A chain of LLMs handling customer service requests

Imagine a customer service chatbot that handles various inquiries, from technical support to general information. The chatbot uses an LLM chain, where:

- The first LLM interprets the user’s query and determines the type of request.

- Based on the request type, a specialized LLM generates a detailed response or retrieves relevant information from a knowledge base.

- A third LLM refines the response for clarity and tone, ensuring it matches the company’s brand voice.

This chain leverages the strengths of individual LLMs to provide a comprehensive and user-friendly customer service experience that a single model could not achieve alone.
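
The three-step chain can be sketched with plain functions standing in for the models. Everything here is illustrative: `run_chain` and the stub functions are hypothetical, and in practice each step would call a hosted or self-served LLM.

```python
from typing import Callable, Dict

LLM = Callable[[str], str]  # any function that maps a prompt to a completion

FALLBACK = "Sorry, something went wrong. A human agent will follow up."

def run_chain(query: str, classify: LLM, specialists: Dict[str, LLM], refine: LLM) -> str:
    """Route a query through classify -> specialist -> refine."""
    request_type = classify(query).strip().lower()
    # Unknown labels fall back to the general-purpose specialist.
    specialist = specialists.get(request_type, specialists["general"])
    try:
        draft = specialist(query)
    except Exception:
        # Stop an upstream failure from cascading into the refinement step.
        return FALLBACK
    return refine(draft)

# Stub "models" so the sketch runs without any API access.
def classify_stub(query: str) -> str:
    return "technical" if "error" in query.lower() else "general"

def technical_stub(query: str) -> str:
    return "try restarting the app"

def general_stub(query: str) -> str:
    return "our store opens at 9am"

def refine_stub(draft: str) -> str:
    return draft.capitalize() + ". Thanks for reaching out!"

specialists = {"technical": technical_stub, "general": general_stub}
reply = run_chain("I get an error on login", classify_stub, specialists, refine_stub)
print(reply)
```

Because each step is passed in as a callable, a single model can be swapped or tested in isolation, which is the modular design recommended above.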

LLM evaluation and testing

LLM evaluation assesses a model’s performance across various dimensions, including accuracy, coherence, bias, and reliability. This process employs intrinsic metrics, like word prediction accuracy and perplexity, and extrinsic methods, such as human-in-the-loop testing and user satisfaction surveys. It’s a comprehensive approach to understanding how well an LLM interprets and responds to prompts in diverse scenarios.

In LLMOps, evaluating LLMs is crucial for ensuring models deliver valuable, coherent, and unbiased outputs. Since LLMs are applied to a wide range of tasks, from customer service to content creation, their evaluation must reflect the complexities of the applications.
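
As a concrete instance of an intrinsic metric, perplexity is the exponential of the negative mean log-probability the model assigns to each token. The sketch below assumes you already have per-token natural-log probabilities, for example from a model API that exposes them. The input values are invented.

```python
import math

def perplexity(token_log_probs):
    """exp of the negative mean natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# A model that assigns probability 0.25 to each of four tokens is exactly as
# uncertain as a uniform choice among four options, so perplexity is about 4.
uniform = [math.log(0.25)] * 4
print(perplexity(uniform))
```

Lower is better: a perplexity of 1 means the model assigned probability 1 to every observed token.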

The main challenges of LLM evaluation and testing

- Comprehensive metrics: Assessing an LLM’s nuanced understanding and capability to handle diverse tasks is challenging. Traditional machine-learning metrics like accuracy or precision usually do not apply directly to free-form text output.

- Bias and fairness: Identifying and mitigating biases within LLM outputs to ensure fairness across all user interactions is a significant hurdle.

- Evaluation scenario relevance: Ensuring evaluation scenarios accurately represent the application context and capture typical interaction patterns.

- Integrating feedback: Efficiently incorporating human feedback into the model improvement process requires careful orchestration.

LLM evaluation and testing best practices

- Task-specific metrics: For objective performance evaluation, use task-relevant metrics (e.g., BLEU for translation, ROUGE for text similarity).

- Bias and fairness evaluations: Use fairness evaluation tools like LangKit and TruLens to detect and address biases. This helps recognize and rectify skewed responses.

- Real-world testing: Create testing scenarios that mimic actual user interactions to evaluate the model’s performance in realistic conditions.

- Benchmarking: Use benchmarks like MMLU or Hugging Face’s Open LLM Leaderboard to gauge how your LLM compares to established standards.

- Reference-free evaluation: Use another, stronger LLM to evaluate your LLM’s outputs. With frameworks like G-Eval, this technique can bypass the need for direct human judgment or gold-standard references. G-Eval applies LLMs with Chain-of-Thought (CoT) and a form-filling paradigm to evaluate LLM outputs.
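
To make the idea of a task-specific metric concrete, here is a simplified ROUGE-1-style score based on clipped unigram overlap. It is a sketch, not the official ROUGE implementation: it tokenizes on whitespace and skips stemming, so scores will differ from those of established packages. The example sentences are invented.

```python
from collections import Counter

def rouge1(candidate: str, reference: str) -> dict:
    """Unigram-overlap precision, recall, and F1 (simplified ROUGE-1)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # matches clipped to the smaller count
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

scores = rouge1("the order ships tomorrow", "your order ships tomorrow morning")
print(scores)
```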

Example scenario: Evaluating a customer service chatbot with intrinsic and extrinsic metrics

Imagine deploying an LLM to handle customer service inquiries. The evaluation process would involve:

- Designing test cases that cover scripted queries, historical interactions, and hypothetical new scenarios.

- Employing a mix of metrics to assess response accuracy, relevance, response time, and coherence.

- Gathering feedback from human evaluators to judge the quality of responses.

- Identifying biases or inaccuracies, fine-tuning the model to address them, and then reevaluating.

LLM deployment: Serving, monitoring, and observability

LLM deployment encompasses the processes and technologies that bring LLMs into production environments. This includes orchestrating model updates, choosing between online and batch inference modes for serving predictions, and establishing the infrastructure to support these operations efficiently. Proper deployment and production management ensure that LLMs can operate seamlessly to provide timely and relevant outputs.

Monitoring and observability are about tracking LLMs’ performance, health, and operational metrics in production to ensure they perform optimally and reliably. The deployment strategy affects response times, resource efficiency, scalability, and overall system performance, directly impacting the user experience and operational costs.

The main challenges of LLM deployment, monitoring, and observability

- Efficient inference: Balancing the computational demands of LLMs with the need for timely and resource-efficient response generation.

- Model updates and management: Ensuring smooth updates and management of models in production with minimal downtime.

- Performance monitoring: Tracking an LLM’s performance over time, especially in detecting and addressing issues like model drift or hallucinations.

- User feedback integration: Incorporating user feedback into the model improvement cycle.

LLM deployment and observability best practices

- CI/CD for LLMs: Use continuous integration and deployment (CI/CD) pipelines to automate model updates and deployments.

- Optimize inference strategies:

  - For batch processing, use static batching to improve throughput.

  - For online inference, apply operator fusion and weight quantization techniques for faster responses and better resource use.

- Production validation: Regularly test the LLM with synthetic or real examples to ensure its performance remains consistent with expectations.

- Vector databases: Integrate vector databases for content retrieval applications to effectively handle scalability and real-time response needs.

- Observability tools: Use platforms that offer comprehensive observability into LLM performance, including functional logs (prompt-completion pairs) and operational metrics (system health, usage statistics).

- Human-in-the-Loop (HITL) feedback: Incorporate direct user feedback into the deployment cycle to continually refine and improve LLM outputs.
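
The weight quantization mentioned in the list above can be illustrated with a toy symmetric int8 scheme: each float is mapped to an integer in [-127, 127] using one shared scale factor. This is a pure-Python sketch on a made-up weight list. Real inference stacks quantize whole tensors, often per channel or per group, with optimized kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid division by zero
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the integers back to approximate float weights."""
    return [v * scale for v in quantized]

weights = [0.02, -1.27, 0.64, 0.0]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four (for float32), at the cost of a rounding error of at most half the scale factor per weight.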

Example scenario: Deploying a customer service chatbot

Imagine that you are responsible for implementing an LLM-powered chatbot for customer support. The deployment process would involve:

- CI/CD pipeline: Use GitLab CI/CD (or a GitHub Actions workflow) to automate the deployment process. As you improve your chatbot, these tools can handle automated testing and rolling updates so your LLM is always running the latest code without downtime.

- Online inference with Kubernetes using OpenLLM: To handle real-time interactions, deploy your LLM in a Kubernetes cluster with BentoML’s OpenLLM, using it to manage containerized applications for high availability. Combine this with the serverless BentoCloud or an auto-scaling group on a cloud platform like AWS to ensure your resources match the demand.

- Vector database with Milvus: Integrate Milvus, a purpose-built vector database, to manage and retrieve information quickly. This is where your LLM will pull contextual data to inform its responses and ensure each interaction is as relevant and personalized as possible.

- Monitoring with LangKit and WhyLabs: Implement LangKit to collect operational metrics and visualize the telemetry in WhyLabs. Together, they provide a real-time overview of your system’s health and performance, allowing you to react promptly to any functional issues (drift, toxicity, data leakage, etc.) or operational issues (system downtime, latency, etc.).

- Human-in-the-Loop (HITL) with Label Studio: Establish a HITL process using Label Studio, an annotation tool, for real-time feedback. This allows human supervisors to oversee the bot’s responses, intervene when necessary, and continually annotate data that will be used to improve the model through active learning.

Large Language Model API gateways

LLM APIs enable you to integrate pre-trained large language models into your applications to perform tasks like translation, question-answering, and content generation while delegating the deployment and operation to a third-party platform.

An LLM API gateway is essential for efficiently managing access to multiple LLM APIs. It addresses operational challenges such as authentication, load distribution, API call transformations, and systematic prompt handling.

The main challenges addressed by LLM API gateways

- API integration complexity: Managing connections and interactions with multiple LLM APIs can be technically challenging due to varying API specifications and requirements.

- Cost control: Monitoring and controlling the costs associated with high-volume API calls to LLM services.

- Performance monitoring: Ensuring optimal performance, including managing latency and effectively handling request failures or timeouts.

- Security: Safeguarding sensitive API keys and data transmitted between your application and LLM API services.

LLM API gateway best practices

- API selection: Choose LLM APIs that best fit your application’s needs, using benchmarks to guide your choice for specific tasks.

- Performance monitoring: Continuously monitor API performance metrics, adjusting usage patterns to maintain optimal operation.

- Request caching: Implement caching strategies to avoid redundant requests, thus reducing costs.

- LLM trace logging: Implement logging for API interactions to make debugging easier with insights into API behavior and potential issues.

- Version management: Use API versioning to manage different application lifecycle stages, from development to production.

Example scenario: Using an LLM API gateway for a multilingual customer support chatbot

Imagine developing a multilingual customer support chatbot that leverages various LLM APIs for real-time translation and content generation. The chatbot must handle thousands of user inquiries daily, requiring quick and accurate responses in multiple languages.

- The role of the API gateway: The LLM API gateway manages all interactions with the LLM APIs, efficiently distributing requests and load-balancing them among available APIs to maintain fast response times.

- Operational benefits: By centralizing API key management, the gateway improves security. It also implements caching for repeated queries to optimize costs and uses performance monitoring to adjust as APIs update or improve.

- Cost and performance optimization: Through its cost management features, the gateway provides a breakdown of expenses to identify areas for optimization, such as adjusting prompt strategies or caching more aggressively.
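
A minimal sketch of the gateway idea, combining request caching, failover between providers, and trace logging. The `Gateway` class and the provider functions are hypothetical stand-ins written for this example; they do not reflect the API of any particular gateway product.

```python
import hashlib

class Gateway:
    """Single entry point that caches answers and fails over between providers."""

    def __init__(self, providers):
        self.providers = providers  # ordered list of (name, callable)
        self.cache = {}
        self.log = []               # trace entries: (source, detail)

    def complete(self, prompt):
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self.cache:
            self.log.append(("cache", prompt))
            return self.cache[key]
        for name, call in self.providers:
            try:
                answer = call(prompt)
            except Exception as exc:
                self.log.append((name, f"failed: {exc}"))
                continue  # try the next provider
            self.cache[key] = answer
            self.log.append((name, prompt))
            return answer
        raise RuntimeError("all providers failed")

# Stub providers so the sketch runs without network access.
def flaky_provider(prompt):
    raise TimeoutError("timed out")

def backup_provider(prompt):
    return f"echo: {prompt}"

gateway = Gateway([("primary", flaky_provider), ("backup", backup_provider)])
first = gateway.complete("Translate 'hello' to French")
second = gateway.complete("Translate 'hello' to French")  # served from cache
print(gateway.log)
```

Exact-match caching only helps when prompts repeat verbatim. Keeping API keys inside the provider callables, rather than in application code, mirrors the centralized key management described above.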

Bringing it all together: An LLMOps use case

In this section, you’ll learn how to introduce LLMOps best practices and components to your projects using the example of a RAG system providing information about health and wellness topics.

Define the problem

The first step is to clearly articulate the problem the RAG app aims to address. In our case, the app aims to help users understand complex health conditions, provide suggestions for healthy living, and offer insights into treatments and remedies.

Develop the text preprocessing pipeline

- Data ingestion: Use Unstructured.io to ingest data from health forums, medical journals, and wellness blogs. Next, preprocess this data by cleaning, normalizing text, and splitting it into manageable chunks.

- Text-to-embedding conversion: Convert the processed textual data into embeddings using Cohere, which provides rich semantic understanding for various health-related topics.

- Use a vector database: Store these embeddings in Qdrant, which is well-suited for similarity search and retrieval in high-dimensional spaces.
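
The "splitting into manageable chunks" step can be sketched as a word-based splitter with overlapping windows, so that a sentence cut at a chunk boundary still appears intact in a neighboring chunk. The chunk size and overlap are arbitrary assumptions. Ingestion libraries typically offer their own, more sophisticated chunking strategies.

```python
def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into word-based chunks that share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()  # also normalizes runs of whitespace
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(12))  # placeholder document
chunks = chunk_text(sample, chunk_size=5, overlap=2)
for chunk in chunks:
    print(chunk)
```

Each chunk would then be embedded and stored together with a reference to its source document.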

Implement the inference component

- API gateway: Implement an API gateway using Portkey’s AI Gateway. This gateway will parse user queries and convert them into prompts for the LLM.

- Vector database for context retrieval: Use Qdrant’s vector search feature to retrieve the top-k relevant contexts based on the query embeddings.

- Retrieval Augmented Generation (RAG): Create a retrieval Q&A system to feed the user’s query and the retrieved context into the LLM. To generate the response, you can use a pre-trained Hugging Face model (e.g., meta-llama/Llama-2-7b, google/gemma-7b) or one from OpenAI (e.g., gpt-3.5-turbo or gpt-4) that is fine-tuned for health and wellness topics.
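
The final step, feeding the query and retrieved context into the LLM, comes down to assembling a prompt. The sketch below uses stub functions in place of the vector search and the model. The prompt wording and the `build_rag_prompt` and `answer` helpers are assumptions for illustration, not part of any of the tools named above.

```python
def build_rag_prompt(question, contexts, max_contexts=3):
    """Combine retrieved passages and the user question into one prompt."""
    numbered = [
        f"[{i}] {passage.strip()}"
        for i, passage in enumerate(contexts[:max_contexts], start=1)
    ]
    context_block = "\n".join(numbered) if numbered else "(no context retrieved)"
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )

def answer(question, retrieve, llm, k=3):
    """Retrieve top-k passages, build the prompt, and call the model."""
    contexts = retrieve(question, k)
    return llm(build_rag_prompt(question, contexts, max_contexts=k))

# Stubs standing in for the vector search and the LLM call.
def retrieve_stub(question, k):
    return ["Passage about sleep.", "Passage about exercise."][:k]

def llm_stub(prompt):
    return f"(model saw {prompt.count('[')} context passages)"

print(answer("How can I sleep better?", retrieve_stub, llm_stub))
```

Numbering the passages makes it possible to ask the model to cite which passage supports each statement, which helps when reviewing answers on sensitive topics such as health.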

Test and refine the application

- Learn from users: Implement user feedback mechanisms to collect insights on app performance.

- Monitor the application: Use TruLens to monitor responses and employ test-time filtering to dynamically improve the database, language model, and retrieval system.

- Upgrade and update: Regularly update the app based on the latest health and wellness information and user feedback to ensure it remains a valuable resource.

The present and the future of LLMOps

The LLMOps landscape continuously evolves with diverse solutions for deploying and managing LLMs.

In this article, we’ve looked at key components, practices, and tools like:

- Embeddings and vector databases: Central repositories that store and manage the vast embeddings required for training and querying LLMs, optimized for quick retrieval and efficient scaling.

- LLM prompts: Designing and crafting effective prompts that guide the LLM to generate the desired output is essential to effectively leveraging language models.

- LLM chains and agents: Crucial in LLMOps for using the full spectrum of capabilities different LLMs offer.

- LLM evaluations (evals) and testing: Systematic evaluation methods (intrinsic and extrinsic metrics) to measure the LLM’s performance, accuracy, and reliability, ensuring it meets the required standards before and after deployment.

- LLM serving and observability: The infrastructure and processes that make the trained LLM available, often involving deployment to cloud or edge computing environments. Tools and practices for monitoring LLM performance in real time include tracking errors, biases, and drift and using human or AI-generated feedback to refine and improve the model continually.

- LLM API gateways: Interfaces that allow users and applications to interact with LLMs easily, often providing additional layers of control, security, and scalability.

In the future, the landscape will focus more on:

- Explainability and interpretability: As LLMOps technology improves, so will explainability features that help you understand how LLMs arrive at their outputs. These capabilities will provide users and developers with insights into the model’s operations, regardless of the application.

- Growth in monitoring and observability: While current monitoring solutions provide insights into model performance and health, there’s a growing need for more nuanced, real-time observability tools tailored to LLMs.

- Advancements in fine-tuning in a low-resource setting: Innovative techniques are emerging to address the high resource demand of LLMs. Techniques like model pruning, quantization, and knowledge distillation lead the way, allowing models to retain performance while reducing computational needs. Additionally, research into more efficient transformer architectures and on-device training methods holds promise for making LLM training and deployment more accessible in low-resource environments.