Why LLMs Used Alone Can’t Address Your Company’s Predictive Needs

Sponsored Content

ChatGPT and similar tools based on large language models (LLMs) are amazing. But they aren’t all-purpose tools.

It’s just like choosing any other tool for building and creating. You need to pick the right one for the job. You wouldn’t try to tighten a bolt with a hammer or flip a hamburger patty with a whisk. The process would be awkward and would end in a messy failure.

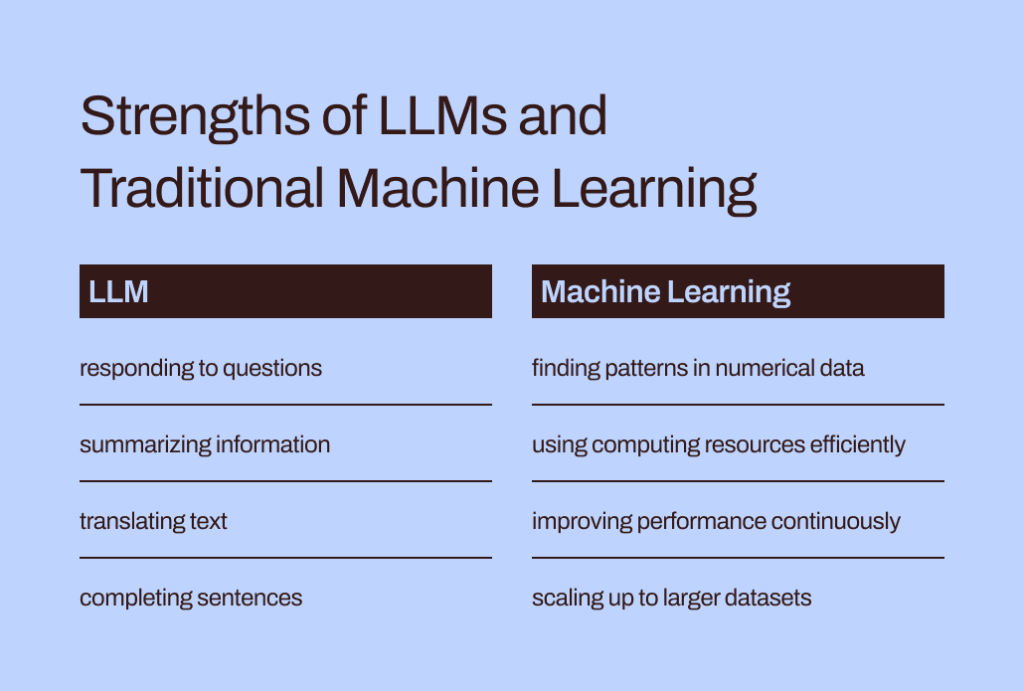

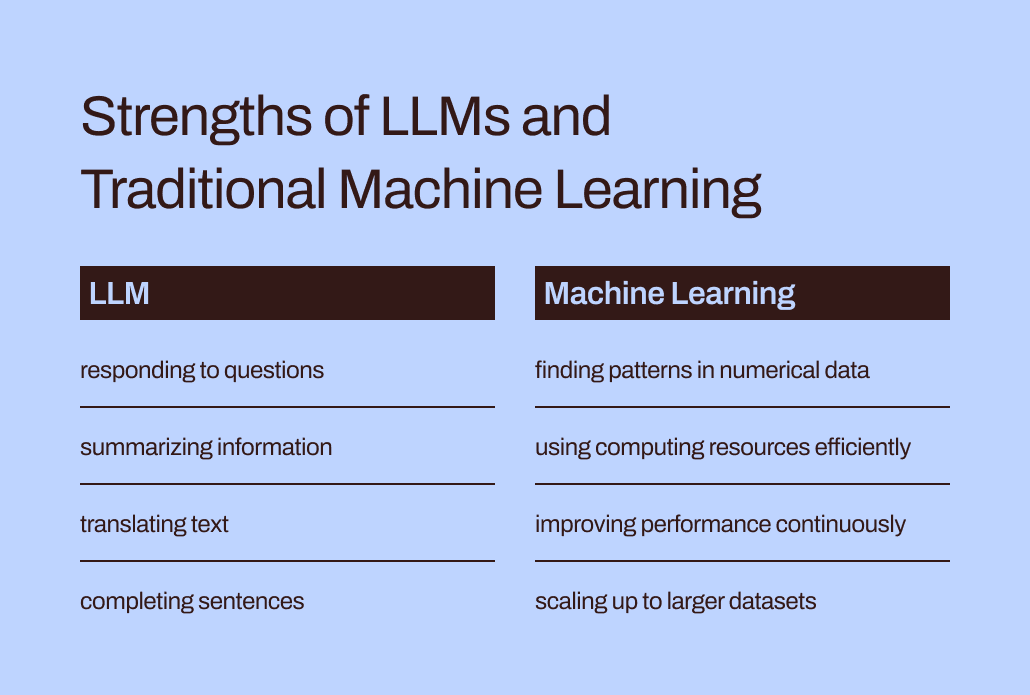

LLMs are only one part of the broader machine learning toolkit, which encompasses both generative AI and predictive AI. Selecting the right type of machine learning model is crucial to meeting the requirements of your task.

Let’s dig deeper into why LLMs are a better fit for helping you draft text or brainstorm gift ideas than for tackling your business’s most critical predictive modeling tasks. There’s still a vital role for the “traditional” machine learning models that preceded LLMs and have repeatedly proven their worth in businesses. We’ll also explore a pioneering approach for using these tools together, an exciting development we at Pecan call Predictive GenAI.

LLMs are designed for words, not numbers

In machine learning, different mathematical methods are used to analyze what is known as “training data”: an initial dataset representing the problem that a data analyst or data scientist hopes to solve.

The significance of training data cannot be overstated. It holds the patterns and relationships that a machine learning model will “learn” in order to predict outcomes when it’s later given new, unseen data.

So, what specifically is an LLM? Large language models, or LLMs, fall under the umbrella of machine learning. They originate from deep learning, and their structure is specifically developed for natural language processing.

You might say they’re built on a foundation of words. Their goal is simply to predict which word will come next in a sequence of words. For example, the iPhone’s autocorrect feature in iOS 17 now uses a transformer-based language model to better predict which word you most likely intend to type next.
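To make “predict the next word” concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally (those use large neural networks trained on vast amounts of text); it simply counts which word most often follows another in a made-up corpus. The function names and the corpus are our own illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))          # most frequent word after "the"
print(predict_next_word(model, "spreadsheet"))  # a word the model never saw
```

Even in this toy version, the model can only echo patterns of words it has seen. Ask it about something outside its training text and it has nothing to offer.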

Now, imagine you’re a machine learning model. (Bear with us, we know it’s a stretch.) You’ve been trained to predict words. You’ve read and studied millions of words from a vast range of sources on all kinds of topics. Your mentors (aka developers) have helped you learn the best ways to predict words and create new text that fits a user’s request.

But here’s a twist. A user now gives you a massive spreadsheet of customer and transaction data, with millions of rows of numbers, and asks you to predict numbers related to this existing data.

How do you think your predictions would turn out? First, you’d probably be annoyed that this task doesn’t match what you worked so hard to learn. (Fortunately, as far as we know, LLMs don’t yet have feelings.) More importantly, you’re being asked to do something you were never trained to do. And you probably won’t perform very well.

The gap between training and task helps explain why LLMs aren’t well suited for predictive tasks involving numerical, tabular data, the primary data format most businesses collect. Instead, a machine learning model specifically crafted and fine-tuned for handling this type of data is more effective. It’s literally been trained for this.
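As a rough illustration of a model built for tabular data, the sketch below fits a one-rule “decision stump”: it searches every column and threshold for the single rule of the form “predict churn when the value is at or below the threshold” that makes the fewest mistakes. The customer table, column names, and churn labels are invented for this example, and real predictive models are far more sophisticated.

```python
def fit_stump(rows, labels, feature_names):
    """Find the feature/threshold rule that misclassifies the fewest rows."""
    best = None
    for col, name in enumerate(feature_names):
        for threshold in sorted({row[col] for row in rows}):
            # Simplified rule: predict 1 (churn) when the value is <= threshold.
            predictions = [1 if row[col] <= threshold else 0 for row in rows]
            errors = sum(p != y for p, y in zip(predictions, labels))
            if best is None or errors < best["errors"]:
                best = {"feature": name, "col": col,
                        "threshold": threshold, "errors": errors}
    return best

def predict(stump, row):
    """Apply the learned rule to one new row."""
    return 1 if row[stump["col"]] <= stump["threshold"] else 0

# Hypothetical customer table: [orders_last_90_days, support_tickets]
feature_names = ["orders_last_90_days", "support_tickets"]
rows = [[0, 3], [1, 2], [2, 4], [6, 1], [8, 0], [9, 2]]
churned = [1, 1, 1, 0, 0, 0]

stump = fit_stump(rows, churned, feature_names)
print(f"Predict churn when {stump['feature']} <= {stump['threshold']}")
print(predict(stump, [1, 5]), predict(stump, [7, 1]))
```

The model learns directly from the numbers in each column, and the rule it produces can be read and checked by a person.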

LLMs’ efficiency and optimization challenges

In addition to being a better match for numerical data, traditional machine learning methods are far more efficient and easier to optimize for better performance than LLMs.

Let’s go back to your experience impersonating an LLM. Reading all those words and studying their style and sequence sounds like a ton of work, right? It would take a lot of effort to internalize all that information.

Similarly, LLMs’ complex training can result in models with billions of parameters. That complexity allows these models to understand and respond to the tricky nuances of human language. However, that size brings heavy computational demands, both during training and every time an LLM generates a response. Numerically oriented “traditional” machine learning algorithms, like decision trees or small neural networks, will likely need far fewer computing resources. And this isn’t a case of “bigger is better.” Even if LLMs could handle numerical data, this difference would mean that traditional machine learning methods would still be faster, more efficient, more environmentally sustainable, and more cost-effective.

Additionally, have you ever asked ChatGPT how it knew to provide a particular response? Its answer will likely be a bit vague:

I generate responses based on a mixture of licensed data, data created by human trainers, and publicly available data. My training also involved large-scale datasets obtained from a variety of sources, including books, websites, and other texts, to develop a wide-ranging understanding of human language. The training process involves running computations on thousands of GPUs over weeks or months, but exact details and timescales are proprietary to OpenAI.

How much of the “knowledge” reflected in that response came from the human trainers vs. the public data vs. books? Even ChatGPT itself isn’t sure: “The relative proportions of these sources are unknown, and I don’t have detailed visibility into which specific documents were part of my training set.”

It’s a bit unnerving to have ChatGPT provide such confident answers to your questions without being able to trace its responses to specific sources. LLMs’ limited interpretability and explainability also make them hard to optimize for particular business needs, because it can be difficult to understand the rationale behind their information or predictions. To further complicate things, certain businesses face regulatory demands that require them to explain the factors influencing a model’s predictions. All in all, these challenges show that traditional machine learning models, which are generally more interpretable and explainable, are likely better suited to business use cases.

The right place for LLMs in businesses’ predictive toolkit

So, should we just leave LLMs to their word-related tasks and forget about them for predictive use cases? It might now seem like they can’t help with predicting customer churn or customer lifetime value after all.

Here’s the thing: While saying “traditional machine learning models” makes these techniques sound widely understood and easy to use, we know from our experience at Pecan that businesses are still largely struggling to adopt even these more familiar forms of AI.

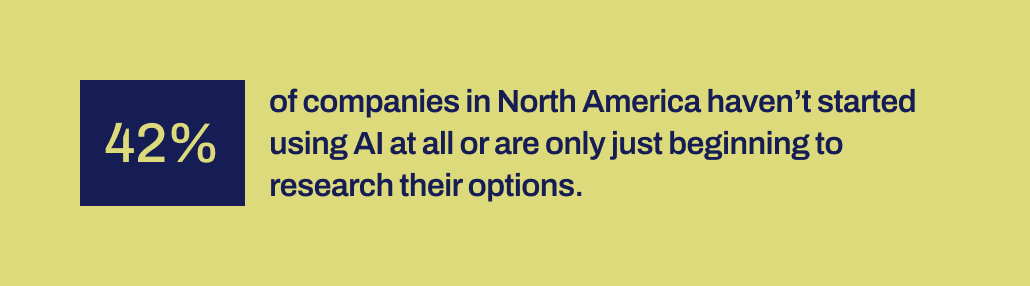

Recent research by Workday reveals that 42% of companies in North America either haven’t initiated the use of AI or are just in the early stages of exploring their options. And it’s been over a decade since machine learning tools became more accessible to companies. They’ve had the time, and plenty of tools are available.

For some reason, successful AI implementations have been surprisingly rare despite the massive buzz around data science and AI and their acknowledged potential for significant business impact. Some important mechanism is missing to help bridge the gap between the promises made by AI and the ability to implement it productively.

And that’s precisely where we believe LLMs can now play a vital bridging role. LLMs can help business users cross the chasm between identifying a business problem to solve and developing a predictive model.

With LLMs now in the picture, business and data teams that don’t have the capability or capacity to hand-code machine learning models can better translate their needs into models. They can “use their words,” as parents like to say, to kickstart the modeling process.

Fusing LLMs with machine learning techniques built to excel on business data

That capability has now arrived in Pecan’s Predictive GenAI, which fuses the strengths of LLMs with our already highly refined and automated machine learning platform. Our LLM-powered Predictive Chat gathers input from a business user to guide the definition and development of a predictive question: the specific problem the user wants to solve with a model.

Then, using GenAI, our platform generates a Predictive Notebook to make the next step toward modeling even easier. Again drawing on LLM capabilities, the notebook contains pre-filled SQL queries to select the training data for the predictive model. Pecan’s automated data preparation, feature engineering, model building, and deployment capabilities can carry out the rest of the process in record time, faster than any other predictive modeling solution.
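For readers who haven’t seen what a training-data query looks like, here is a generic sketch. It is not the SQL that Pecan’s notebook generates; the tables, columns, sample rows, and cutoff date are all hypothetical, and it uses Python’s built-in `sqlite3` module so it can run anywhere. The query builds one row per customer, with features drawn from activity before a cutoff date and a label describing what happened afterward.

```python
import sqlite3

# In-memory database with a hypothetical schema and a few made-up rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, signup_date TEXT);
    CREATE TABLE transactions (customer_id INTEGER, amount REAL, tx_date TEXT);
    INSERT INTO customers VALUES
        (1, '2023-01-10'), (2, '2023-02-05'), (3, '2023-03-20');
    INSERT INTO transactions VALUES
        (1, 40.0, '2023-04-01'), (1, 25.5, '2023-05-15'),
        (2, 10.0, '2023-04-20'), (1, 30.0, '2023-07-02');
""")

# One row per customer: features from before the cutoff, label from after it.
TRAINING_QUERY = """
    SELECT c.customer_id,
           COUNT(CASE WHEN t.tx_date < :cutoff THEN 1 END) AS tx_count_before,
           COALESCE(SUM(CASE WHEN t.tx_date < :cutoff THEN t.amount END), 0)
               AS spend_before,
           MAX(CASE WHEN t.tx_date >= :cutoff THEN 1 ELSE 0 END) AS purchased_after
    FROM customers AS c
    LEFT JOIN transactions AS t ON t.customer_id = c.customer_id
    GROUP BY c.customer_id
    ORDER BY c.customer_id
"""

training_rows = conn.execute(TRAINING_QUERY, {"cutoff": "2023-06-01"}).fetchall()
for row in training_rows:
    print(row)
```

Separating “before” features from the “after” label keeps the model from peeking at the outcome it is supposed to predict, which is one reason writing these queries by hand can be tedious and error-prone.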

In short, Pecan’s Predictive GenAI uses the unparalleled language skills of LLMs to make our best-in-class predictive modeling platform far more accessible and friendly for business users. We’re excited to see how this approach will help many more companies succeed with AI.

So, while LLMs alone aren’t well suited to handle all of your predictive needs, they can play a powerful role in moving your AI projects forward. By interpreting your use case and giving you a head start with automatically generated SQL code, Pecan’s Predictive GenAI is leading the way in uniting these technologies. You can check it out now with a free trial.