Evaluating Outlier Detection Strategies | by John Andrews | Dec, 2023

Outlier detection is an unsupervised machine learning task to identify anomalies (unusual observations) within a given data set. This task is helpful in many real-world cases where our available dataset is already "contaminated" by anomalies. Scikit-learn implements several outlier detection algorithms, and in cases where we have an uncontaminated baseline, we can also use these algorithms for novelty detection, a semi-supervised task that predicts whether new observations are outliers.

The four outlier detection algorithms we'll compare are:

- Elliptic Envelope is suitable for normally distributed data with low dimensionality. As its name implies, it uses the multivariate normal distribution to create a distance measure to separate outliers from inliers.

- Local Outlier Factor is a comparison of the local density of an observation with that of its neighbors. Observations with much lower density than their neighbors are considered outliers.

- One-Class Support Vector Machine (SVM) with Stochastic Gradient Descent (SGD) is an O(n) approximate solution of the One-Class SVM. Note that the O(n²) One-Class SVM works well on our small example dataset but may be impractical for your actual use case.

- Isolation Forest is a tree-based approach where outliers are isolated more quickly by random splits than inliers.

Since our task is unsupervised, we don't have ground truth to compare the accuracies of these algorithms. Instead, we want to see how their results (player rankings in particular) differ from one another and gain some intuition into their behavior and limitations, so that we'd know when to prefer one over another.

Let's compare these techniques using two metrics of batter performance from the 2023 Major League Baseball (MLB) season:

- On-base percentage (OBP), the rate at which a batter reaches base (by hitting, walking, or getting hit by a pitch) per plate appearance

- Slugging (SLG), the average number of total bases per at bat

There are many more sophisticated metrics of batter performance, including OBP plus SLG (OPS), weighted on-base average (wOBA), and adjusted weighted runs created (wRC+). However, we'll see that in addition to being commonly used and easy to understand, OBP and SLG are moderately correlated and approximately normally distributed, making them well suited for this comparison.
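For reference, here is a minimal sketch of how these rate stats follow from counting stats, using the standard formulas OBP = (H + BB + HBP) / (AB + BB + HBP + SF) and SLG = total bases / AB. Strictly speaking, OBP's denominator is not every plate appearance: it leaves out sacrifice bunts and catcher's interference. The function names are illustrative, and because the tables in this article don't include sacrifice flies (SF), sf defaults to 0 here, which slightly overstates OBP for most hitters.

```python
def on_base_percentage(h, bb, hbp, ab, sf=0):
    """OBP = (H + BB + HBP) / (AB + BB + HBP + SF). sf defaults to 0 (assumption)."""
    return (h + bb + hbp) / (ab + bb + hbp + sf)


def slugging(h, doubles, triples, hr, ab):
    """SLG = total bases / at bats, where singles = H - 2B - 3B - HR."""
    singles = h - doubles - triples - hr
    total_bases = singles + 2 * doubles + 3 * triples + 4 * hr
    return total_bases / ab


def ops(obp, slg):
    """OPS is simply the sum of OBP and SLG."""
    return obp + slg


# Shohei Ohtani's 2023 counting stats, taken from the results table later in this article
ohtani_slg = slugging(h=151, doubles=26, triples=8, hr=44, ab=497)
print(f"{ohtani_slg:.3f}")  # 0.654, matching the value in that table
```

Plugging in Austin Hedges' line from the same table (34 H, 5 2B, 0 3B, 1 HR in 185 AB) likewise reproduces his .227 SLG.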

We use the pybaseball package to obtain hitting data. This Python package is under the MIT license and returns data from Fangraphs.com, Baseball-Reference.com, and other sources that have in turn obtained official records from Major League Baseball.

We use pybaseball's 2023 batting statistics, which can be obtained either by batting_stats (FanGraphs) or batting_stats_bref (Baseball Reference). It turns out that player names are formatted more correctly by FanGraphs, but player teams and leagues from Baseball Reference are better formatted in the case of traded players. For a dataset with improved readability, we actually need to merge three tables: FanGraphs, Baseball Reference, and a key lookup.

from pybaseball import (cache, batting_stats_bref, batting_stats,
                        playerid_reverse_lookup)
import pandas as pd

cache.enable()  # avoid unnecessary requests when re-running

MIN_PLATE_APPEARANCES = 200

# For readability and reasonable default sort order
df_bref = batting_stats_bref(2023).query(f"PA >= {MIN_PLATE_APPEARANCES}"
    ).rename(columns={"Lev": "League",
                      "Tm": "Team"}
    )
df_bref["League"] = (
    df_bref["League"].str.replace("Maj-", "").replace("AL,NL", "NL/AL"
    ).astype('category')
)

df_fg = batting_stats(2023, qual=MIN_PLATE_APPEARANCES)

# Map Baseball Reference (MLBAM) IDs to FanGraphs IDs
key_mapping = playerid_reverse_lookup(df_bref["mlbID"].to_list(), key_type='mlbam'
    )[["key_mlbam", "key_fangraphs"]
    ].rename(columns={"key_mlbam": "mlbID",
                      "key_fangraphs": "IDfg"}
    )

# Names and stats from FanGraphs; league and team from Baseball Reference
df = df_fg.drop(columns="Team"
    ).merge(key_mapping, how="inner", on="IDfg"
    ).merge(df_bref[["mlbID", "League", "Team"]],
            how="inner", on="mlbID"
    ).sort_values(["League", "Team", "Name"])

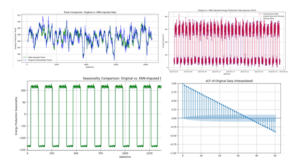
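The three-way merge above needs network access through pybaseball, so here is an offline sketch of the same join logic on hypothetical toy tables (all IDs, names, and team labels are made up). It shows how the key lookup bridges the FanGraphs ID (IDfg) and the MLBAM ID (mlbID), and that an inner join silently drops any player missing from one of the tables.

```python
import pandas as pd

# Hypothetical toy tables; IDs, names, and team labels are invented for illustration.
toy_fg = pd.DataFrame({
    "IDfg": [101, 102, 103, 104],
    "Name": ["Player A", "Player B", "Player C", "Player D"],
    "Team": ["multi", "LAD", "ATL", "TBR"],  # dropped below in favor of the bref Team
    "OBP": [0.300, 0.410, 0.350, 0.320],
})
toy_bref = pd.DataFrame({
    "mlbID": [9001, 9002, 9003],
    "League": ["NL/AL", "NL", "NL"],
    "Team": ["Pittsburgh,Texas", "Los Angeles", "Atlanta"],
})
# Key lookup: player 104 has no mapping, and 9004/999 matches no FanGraphs row
toy_key = pd.DataFrame({"mlbID": [9001, 9002, 9003, 9004],
                        "IDfg": [101, 102, 103, 999]})

toy_merged = (toy_fg.drop(columns="Team")
              .merge(toy_key, how="inner", on="IDfg")          # FanGraphs ID -> MLBAM ID
              .merge(toy_bref[["mlbID", "League", "Team"]],    # MLBAM ID -> league/team
                     how="inner", on="mlbID")
              .sort_values(["League", "Team", "Name"]))
print(toy_merged[["Name", "League", "Team"]].to_string(index=False))
```

Comparing row counts before and after each merge (here, four FanGraphs rows become three) is a cheap way to catch players lost to missing ID mappings.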

First, we note that these metrics differ in mean and variance and are moderately correlated. We also note that each metric is fairly symmetric, with its median close to its mean.

print(df[["OBP", "SLG"]].describe().round(3))
print(f"\nCorrelation: {df[['OBP', 'SLG']].corr()['SLG']['OBP']:.3f}")

           OBP      SLG
count  362.000  362.000
mean     0.320    0.415
std      0.034    0.068
min      0.234    0.227
25%      0.300    0.367
50%      0.318    0.414
75%      0.340    0.460
max      0.416    0.654

Correlation: 0.630

Let's visualize this joint distribution, using:

- Scatterplot of the players, colored by National League (NL) vs. American League (AL)

- Bivariate kernel density estimate (KDE) plot of the players, which smooths the scatterplot with a Gaussian kernel to estimate density

- Marginal KDE plots of each metric

import matplotlib.pyplot as plt
import seaborn as sns

g = sns.JointGrid(data=df, x="OBP", y="SLG", height=5)
g = g.plot_joint(func=sns.scatterplot, data=df, hue="League",
                 palette={"AL": "blue", "NL": "maroon", "NL/AL": "green"},
                 alpha=0.6
                 )
g.fig.suptitle("On-base percentage vs. Slugging\n2023 season, min "
               f"{MIN_PLATE_APPEARANCES} plate appearances"
               )
g.figure.subplots_adjust(top=0.9)

# Marginal and joint KDEs layered over the scatterplot
sns.kdeplot(x=df["OBP"], color="orange", ax=g.ax_marg_x, alpha=0.5)
sns.kdeplot(y=df["SLG"], color="orange", ax=g.ax_marg_y, alpha=0.5)
sns.kdeplot(data=df, x="OBP", y="SLG",
            ax=g.ax_joint, color="orange", alpha=0.5
            )

# Label players with the min/max OBP or OPS
df_extremes = df[df["OBP"].isin([df["OBP"].min(), df["OBP"].max()])
                 | df["OPS"].isin([df["OPS"].min(), df["OPS"].max()])
                 ]
for _, row in df_extremes.iterrows():
    g.ax_joint.annotate(row["Name"], (row["OBP"], row["SLG"]), size=6,
                        xycoords='data', xytext=(-3, 0),
                        textcoords='offset points', ha="right",
                        alpha=0.7)

plt.show()
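To make the KDE layer concrete, here is a minimal sketch using SciPy's gaussian_kde, which places a Gaussian kernel on every observation (bandwidth chosen by Scott's rule by default) and averages them into a density estimate comparable to the orange contours. Because the real player data requires pybaseball, the sample below is an assumption: synthetic bivariate normal draws matching the summary statistics printed earlier, not actual players.

```python
import numpy as np
from scipy.stats import gaussian_kde

# Synthetic stand-in for (OBP, SLG), built from the summary statistics printed earlier
# (means 0.320/0.415, std 0.034/0.068, correlation 0.630). NOT the real player data.
mean = np.array([0.320, 0.415])
sd = np.array([0.034, 0.068])
rho = 0.630
cov = np.array([[sd[0] ** 2, rho * sd[0] * sd[1]],
                [rho * sd[0] * sd[1], sd[1] ** 2]])
rng = np.random.default_rng(17)
sample = rng.multivariate_normal(mean, cov, size=362)

# gaussian_kde expects shape (n_dims, n_points)
kde = gaussian_kde(sample.T)

# Density near the center vs. at the top-right corner (max OBP, max SLG from the table)
center_density = kde(mean.reshape(2, 1))[0]
corner_density = kde(np.array([[0.416], [0.654]]))[0]
print(f"density at center: {center_density:.1f}, at top-right corner: {corner_density:.3f}")
```

The corner density comes out far below the center density, which is the sense in which the top-right "cluster of excellence" sits in a low-density region of the joint distribution.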

The top-right corner of the scatterplot shows a cluster of excellence in hitting, corresponding to the heavy upper tails of the SLG and OBP distributions. This small group excels at getting on base and hitting for extra bases. How much we consider them to be outliers (because of their distance from the majority of the player population) versus inliers (because of their proximity to one another) depends on the definition used by our chosen algorithm.

Scikit-learn's outlier detection algorithms typically have fit() and predict() methods, but there are exceptions, and the algorithms also differ in their arguments. We'll consider each algorithm individually, but we'll fit each to a matrix of attributes (n=2) per player (m=362). We'll then score not only each player but also a grid of values spanning the range of each attribute, to help us visualize the prediction function.
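The following sketch, on synthetic data with one planted outlier (hypothetical, not the player data), illustrates both the shared convention and the LOF exception: scores from decision_function or score_samples are oriented so that lower means more anomalous, while LocalOutlierFactor in its default novelty=False mode offers fit_predict() but no predict(), and exposes training scores through negative_outlier_factor_. Variable names end in _demo so nothing here overwrites the article's variables.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

# Synthetic 2-feature data with one planted outlier appended as the last row (hypothetical)
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(size=(200, 2)), [[8.0, 8.0]]])

# Most detectors: fit(), then decision_function()/score_samples(); lower = more abnormal
ell_demo = EllipticEnvelope(random_state=17).fit(X_demo)
iso_demo = IsolationForest(random_state=17).fit(X_demo)
scores_demo = {
    "ell": ell_demo.decision_function(X_demo),
    "iso": iso_demo.score_samples(X_demo),
}

# The exception: in outlier-detection mode (novelty=False), LOF has fit_predict()
# but no predict(); scores for the training data live in an attribute instead.
lof_demo = LocalOutlierFactor(n_neighbors=20)
labels_demo = lof_demo.fit_predict(X_demo)  # +1 inlier, -1 outlier
scores_demo["lof"] = lof_demo.negative_outlier_factor_

for name, s in scores_demo.items():
    print(name, "lowest-scoring row:", int(np.argmin(s)))
print("LOF has predict() with novelty=False:", hasattr(lof_demo, "predict"))
```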

To visualize decision boundaries, we need to take the following steps:

- Create a 2D meshgrid of input feature values.

- Apply the decision_function to each point on the meshgrid, which requires unstacking the grid.

- Reshape the predictions back into a grid.

- Plot the predictions.

We'll use a 200×200 grid to cover the existing observations plus some padding, but you may adjust the grid for your desired speed and resolution.

import numpy as np

X = df[["OBP", "SLG"]].to_numpy()

GRID_RESOLUTION = 200

# Display range per feature: pad below the minimum and above the maximum
disp_x_range, disp_y_range = ((.6 * X[:, i].min(), 1.2 * X[:, i].max())
                              for i in [0, 1])
xx, yy = np.meshgrid(np.linspace(*disp_x_range, GRID_RESOLUTION),
                     np.linspace(*disp_y_range, GRID_RESOLUTION))
grid_shape = xx.shape

# "Unstack" the grid into one (OBP, SLG) row per grid point
grid_unstacked = np.c_[xx.ravel(), yy.ravel()]
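The unstack-score-restack round trip is easy to get wrong, so here is a tiny self-contained sketch on a 3×4 grid. toy_decision_function is a hypothetical stand-in for any fitted model's decision_function; the point is that ravel() followed by reshape(xx.shape) puts every score back in the cell it came from.

```python
import numpy as np


def toy_decision_function(points):
    """Hypothetical scorer: takes an (n_points, 2) array, returns one score per row."""
    return -np.hypot(points[:, 0] - 0.32, points[:, 1] - 0.415)


xs = np.linspace(0.2, 0.5, 4)
ys = np.linspace(0.2, 0.7, 3)
xx_demo, yy_demo = np.meshgrid(xs, ys)          # both shaped (len(ys), len(xs)) = (3, 4)
flat = np.c_[xx_demo.ravel(), yy_demo.ravel()]  # unstack: one (x, y) row per grid cell
Z_demo = toy_decision_function(flat).reshape(xx_demo.shape)  # restack into the grid

# Cell [i, j] of Z_demo corresponds to x = xs[j], y = ys[i]
print(Z_demo.shape)  # (3, 4)
```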

Elliptic Envelope

The shape of the elliptic envelope is determined by the data's covariance matrix, which gives the variance of feature i on the main diagonal [i,i] and the covariance of features i and j in the [i,j] positions. Because the covariance matrix is sensitive to outliers, this algorithm uses the Minimum Covariance Determinant (MCD) estimator, which is recommended for unimodal and symmetric distributions, with shuffling determined by the random_state input for reproducibility. This robust covariance matrix will come in handy again later.

Because we want to compare the outlier scores by rank rather than as a binary outlier/inlier classification, we use decision_function to score players.

from sklearn.covariance import EllipticEnvelope

ell = EllipticEnvelope(random_state=17).fit(X)
# Lower (more negative) scores indicate stronger outliers
df["outlier_score_ell"] = ell.decision_function(X)
Z_ell = ell.decision_function(grid_unstacked).reshape(grid_shape)
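As a check on what decision_function actually returns, this sketch recomputes it by hand on synthetic, hypothetical data. In scikit-learn, EllipticEnvelope's score_samples is the negated output of its mahalanobis method (a squared distance to the robust location), and decision_function = score_samples - offset_, where offset_ is set from the contamination parameter; predict then labels points with a non-negative decision value as inliers (+1).

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope

# Synthetic correlated 2D data (hypothetical), roughly on the OBP/SLG scale
rng = np.random.default_rng(17)
X_demo = rng.multivariate_normal([0.32, 0.415],
                                 [[0.0012, 0.0015], [0.0015, 0.0046]], size=300)

ell_demo = EllipticEnvelope(random_state=17).fit(X_demo)

# Squared Mahalanobis distance to the robust (MCD) location, using the robust covariance
diff = X_demo - ell_demo.location_
d2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(ell_demo.covariance_), diff)

# Negate and shift by offset_ so the inlier/outlier boundary sits at 0
manual_scores = -d2 - ell_demo.offset_
print(np.allclose(manual_scores, ell_demo.decision_function(X_demo)))
```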

Local Outlier Factor

This approach to measuring isolation is based on k-nearest neighbors (KNN). We use the distances from each observation to its k nearest neighbors to define its local density, and then we compare each observation's local density with that of its neighbors. Observations with much lower local density than their neighbors are considered outliers.
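To make the density comparison concrete, here is a from-scratch sketch of the standard LOF computation starting from a square distance matrix: the k-distance of each point, the reachability distance max(k-distance(b), d(a, b)), the local reachability density (the inverse of the mean reachability distance), and finally LOF as the ratio of the neighbors' average density to the point's own. It is checked against scikit-learn's negative_outlier_factor_ on synthetic data; lof_from_distances is a hypothetical helper, and ties between neighbor distances are ignored.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor


def lof_from_distances(D, k):
    """Negative LOF scores from a square (n, n) distance matrix (hypothetical helper)."""
    n = D.shape[0]
    D_no_self = D + np.diag(np.full(n, np.inf))   # a point is not its own neighbor
    nbrs = np.argsort(D_no_self, axis=1)[:, :k]   # indices of the k nearest neighbors
    d_nbrs = np.take_along_axis(D_no_self, nbrs, axis=1)
    k_distance = d_nbrs[:, -1]                    # distance to the k-th nearest neighbor

    # reach-dist(a, b) = max(k-distance(b), d(a, b)) smooths out very close neighbors
    reach = np.maximum(d_nbrs, k_distance[nbrs])
    lrd = 1.0 / reach.mean(axis=1)                # local reachability density

    # LOF(a) = mean lrd of a's neighbors / lrd(a); well above 1 means sparser than neighbors
    lof = lrd[nbrs].mean(axis=1) / lrd
    return -lof                                   # scikit-learn's sign convention


# Compare against scikit-learn on synthetic (hypothetical) data
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(60, 2))
D_demo = np.sqrt(((X_demo[:, None, :] - X_demo[None, :, :]) ** 2).sum(axis=-1))

manual = lof_from_distances(D_demo, k=7)
reference = LocalOutlierFactor(n_neighbors=7, metric="precomputed").fit(D_demo).negative_outlier_factor_
print(np.allclose(manual, reference))
```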

Choosing the number of neighbors to include: In KNN, a rule of thumb is to let K = sqrt(N), where N is your observation count. From this rule, we obtain a K close to 20 (which happens to be the default K for LOF). You can increase or decrease K to reduce overfitting or underfitting, respectively.

K = int(np.sqrt(X.shape[0]))
print(f"Using K={K} nearest neighbors.")

Using K=19 nearest neighbors.

Choosing a distance measure: Note that our features are correlated and have different variances, so Euclidean distance is not very meaningful. We will use Mahalanobis distance, which accounts for feature scale and correlation.

In calculating the Mahalanobis distance, we'll use the robust covariance matrix. If we had not already calculated it via Elliptic Envelope, we could calculate it directly.

from scipy.spatial.distance import pdist, squareform

# If we didn't have the elliptic envelope already,
# we could calculate the robust covariance:
# from sklearn.covariance import MinCovDet
# robust_cov = MinCovDet().fit(X).covariance_
# But we can just re-use it from the elliptic envelope:
robust_cov = ell.covariance_
print(f"Robust covariance matrix:\n{np.round(robust_cov, 5)}\n")

inv_robust_cov = np.linalg.inv(robust_cov)
D_mahal = squareform(pdist(X, 'mahalanobis', VI=inv_robust_cov))
print(f"Mahalanobis distance matrix of size {D_mahal.shape}, "
      f"e.g.:\n{np.round(D_mahal[:5, :5], 3)}...\n...\n")

Robust covariance matrix:
[[0.00077 0.00095]
 [0.00095 0.00366]]

Mahalanobis distance matrix of size (362, 362), e.g.:
[[0.    2.86  1.278 0.964 0.331]
 [2.86  0.    2.63  2.245 2.813]
 [1.278 2.63  0.    0.561 0.956]
 [0.964 2.245 0.561 0.    0.723]
 [0.331 2.813 0.956 0.723 0.   ]]...
...
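A quick way to see that Mahalanobis distance accounts for feature scale is to rescale one feature and compare. In this sketch (synthetic, hypothetical data; mahalanobis_pdist is an illustrative helper), the first feature is multiplied by 1,000 and the covariance is re-estimated in the new units: every Euclidean distance changes, while the Mahalanobis distances stay the same up to floating-point error.

```python
import numpy as np
from scipy.spatial.distance import pdist

# Synthetic correlated data (hypothetical) on roughly the OBP/SLG scale
rng = np.random.default_rng(1)
A = rng.multivariate_normal([0.32, 0.415], [[0.0012, 0.0015], [0.0015, 0.0046]], size=50)

# Re-express the first feature in different units (e.g., ".320" written as 320)
B = A * np.array([1000.0, 1.0])


def mahalanobis_pdist(Z):
    """Pairwise Mahalanobis distances using the inverse covariance estimated from Z."""
    VI = np.linalg.inv(np.cov(Z, rowvar=False))
    return pdist(Z, "mahalanobis", VI=VI)


euclid_change = np.abs(pdist(B) - pdist(A)).max()
mahal_change = np.abs(mahalanobis_pdist(B) - mahalanobis_pdist(A)).max()
print(f"max change, Euclidean: {euclid_change:.3f}; Mahalanobis: {mahal_change:.2e}")
```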

Fitting the Local Outlier Factor: Note that using a custom distance matrix requires us to pass metric="precomputed" to the constructor and then the distance matrix itself to the fit method. (See the documentation for more details.)

Also note that, unlike the other algorithms, LOF's documentation instructs us not to use the score_samples method to score existing (training) observations; with novelty=True, that method should only be used for novelty detection on new data. Instead, we read the training scores from the negative_outlier_factor_ attribute.

from sklearn.neighbors import LocalOutlierFactor

lof = LocalOutlierFactor(n_neighbors=K, metric="precomputed", novelty=True
                         ).fit(D_mahal)
# Scores for the training observations come from this attribute, not score_samples()
df["outlier_score_lof"] = lof.negative_outlier_factor_

Create the decision boundary: Because we used a custom distance metric, we must also compute that custom distance from each point in the grid to the original observations. Before, we used the spatial measure pdist for pairwise distances between each member of a single set, but now we use cdist to return the distances from each member of the first set of inputs to each member of the second set.

from scipy.spatial.distance import cdist

# Rectangular (n_grid_points, n_players) matrix of grid-to-player distances
D_mahal_grid = cdist(XA=grid_unstacked, XB=X,
                     metric='mahalanobis', VI=inv_robust_cov)
Z_lof = lof.decision_function(D_mahal_grid).reshape(grid_shape)
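This sketch checks, on hypothetical synthetic data, that the precomputed route is equivalent to letting LocalOutlierFactor compute Euclidean distances itself. The shapes are the key detail: fit() receives the square training matrix, while decision_function() receives a rectangular (new points × training points) matrix from cdist.

```python
import numpy as np
from scipy.spatial.distance import cdist, pdist, squareform
from sklearn.neighbors import LocalOutlierFactor

# Hypothetical training data and a few new query points
rng = np.random.default_rng(2)
X_train = rng.normal(size=(100, 2))
X_new = np.array([[0.0, 0.0], [3.0, -3.0], [6.0, 6.0]])

# Route 1: LOF computes Euclidean distances internally
lof_direct = LocalOutlierFactor(n_neighbors=10, novelty=True).fit(X_train)

# Route 2: pass distances explicitly; square matrix for fit(), rectangular for scoring
lof_pre = LocalOutlierFactor(n_neighbors=10, metric="precomputed", novelty=True).fit(
    squareform(pdist(X_train)))
D_new = cdist(X_new, X_train)

print(D_new.shape)  # (3, 100)
print(np.round(lof_direct.decision_function(X_new), 3))
print(np.round(lof_pre.decision_function(D_new), 3))
```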

Support Vector Machine (SGD-One-Class SVM)

SVMs use the kernel trick to transform features into a higher dimensionality where a separating hyperplane can be identified. The radial basis function (RBF) kernel is sensitive to feature scale, so the inputs should be standardized; but as the documentation for StandardScaler notes, that scaler is sensitive to outliers, so we'll use RobustScaler. We'll pipe the scaled inputs into the Nyström kernel approximation, as suggested by the documentation for SGDOneClassSVM.

from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import RobustScaler
from sklearn.kernel_approximation import Nystroem
from sklearn.linear_model import SGDOneClassSVM

suv = make_pipeline(
    RobustScaler(),                  # median/IQR scaling, less sensitive to outliers
    Nystroem(random_state=17),       # approximate RBF kernel feature map
    SGDOneClassSVM(random_state=17)  # linear one-class SVM trained with SGD
).fit(X)
df["outlier_score_svm"] = suv.decision_function(X)
Z_svm = suv.decision_function(grid_unstacked).reshape(grid_shape)
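To see why the StandardScaler documentation warns about outliers, this sketch fits both scalers to one hypothetical feature, with and without a single extreme value. RobustScaler centers on the median and scales by the interquartile range (25th to 75th percentile, by default), so its scale barely moves; StandardScaler uses the mean and standard deviation, which the one outlier inflates by roughly two orders of magnitude.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# One hypothetical feature: values 1..10, then the same values plus one extreme outlier
clean = np.arange(1.0, 11.0).reshape(-1, 1)
dirty = np.vstack([clean, [[1000.0]]])

for scaler_cls in (StandardScaler, RobustScaler):
    s_clean = scaler_cls().fit(clean)
    s_dirty = scaler_cls().fit(dirty)
    # StandardScaler.scale_ is the std; RobustScaler.scale_ is the IQR
    print(f"{scaler_cls.__name__:>14}: scale {s_clean.scale_[0]:.2f} -> {s_dirty.scale_[0]:.2f}")
```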

Isolation Forest

This tree-based approach to measuring isolation performs random recursive partitioning. If the average number of splits required to isolate a given observation is low, that observation is considered a stronger candidate outlier. Like Random Forests and other tree-based models, Isolation Forest does not assume that the features are normally distributed or require them to be scaled. By default, it builds 100 trees. Our example only uses two features, so we don't enable feature sampling.

from sklearn.ensemble import IsolationForest

iso = IsolationForest(random_state=17).fit(X)
# score_samples and decision_function differ only by a constant offset, so rankings match
df["outlier_score_iso"] = iso.score_samples(X)
Z_iso = iso.decision_function(grid_unstacked).reshape(grid_shape)
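The isolation idea can be sketched from scratch in a few lines. This simplified version is an illustration under stated assumptions, not scikit-learn's implementation: it grows random splits on the full dataset rather than on subsamples of 256 points, skips the path-length correction for unresolved leaves, and converts the average depth into the usual anomaly score s = 2^(-E[h(x)] / c(n)), where c(n) is the average path length of an unsuccessful search in a binary search tree. The data, with one planted outlier, is hypothetical.

```python
import numpy as np


def isolation_depth(points, x, rng, depth=0, max_depth=50):
    """Random splits needed to isolate x (a row of points) in one random tree (simplified)."""
    if len(points) <= 1 or depth >= max_depth:
        return depth
    f = rng.integers(points.shape[1])               # pick a feature at random
    lo, hi = points[:, f].min(), points[:, f].max()
    if lo == hi:                                    # degenerate node: cannot split further
        return depth
    split = rng.uniform(lo, hi)                     # threshold uniform over the range
    same_side = (points[:, f] < split) == (x[f] < split)
    return isolation_depth(points[same_side], x, rng, depth + 1, max_depth)


def avg_path_length(n):
    """c(n) = 2 H(n - 1) - 2 (n - 1) / n, with H(i) approximated by ln(i) + Euler's gamma."""
    return 2 * (np.log(n - 1) + np.euler_gamma) - 2 * (n - 1) / n


rng = np.random.default_rng(17)
data = np.vstack([rng.normal(size=(255, 2)), [[6.0, 6.0]]])  # hypothetical data + outlier
central = data[np.argmin(np.linalg.norm(data, axis=1))]


def anomaly_score(x, n_trees=200):
    mean_depth = np.mean([isolation_depth(data, x, rng) for _ in range(n_trees)])
    return 2 ** (-mean_depth / avg_path_length(len(data)))  # closer to 1 = more anomalous


print(f"planted outlier: {anomaly_score(data[-1]):.2f}, central point: {anomaly_score(central):.2f}")
```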

Note that the predictions from these models have different distributions. We apply QuantileTransformer to make them more visually comparable on a given grid. From the documentation, please note:

Note that this transform is non-linear. It may distort linear correlations between variables measured at the same scale but renders variables measured at different scales more directly comparable.
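A small sketch of what the quantile transform does to scores on very different scales (both arrays are hypothetical): after fitting, each set of scores lands in [0, 1], with its minimum mapped to 0 and its maximum to 1, and the ordering of the raw scores is preserved, which is what makes the color bands comparable across panels.

```python
import numpy as np
from sklearn.preprocessing import QuantileTransformer

# Two hypothetical score arrays with very different scales and shapes
rng = np.random.default_rng(3)
scores_a = -rng.exponential(scale=0.05, size=500)   # small, skewed scores
scores_b = rng.normal(loc=-40, scale=15, size=500)  # large, symmetric scores

qt_demo = QuantileTransformer(n_quantiles=8)
for s in (scores_a, scores_b):
    u = qt_demo.fit_transform(s.reshape(-1, 1)).ravel()
    # Monotone map: sorting by the raw scores leaves the transformed values non-decreasing
    monotone = bool(np.all(np.diff(u[np.argsort(s)]) >= -1e-12))
    print(f"range [{u.min():.2f}, {u.max():.2f}], order preserved: {monotone}")
```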

from adjustText import adjust_text
from sklearn.preprocessing import QuantileTransformer

N_QUANTILES = 8  # This many color breaks per chart
N_CALLOUTS = 15  # Label this many top outliers per chart

fig, axs = plt.subplots(2, 2, figsize=(12, 12), sharex=True, sharey=True)
fig.suptitle("Comparison of Outlier Identification Algorithms", size=20)
fig.supxlabel("On-Base Percentage (OBP)")
fig.supylabel("Slugging (SLG)")

ax_ell = axs[0, 0]
ax_lof = axs[0, 1]
ax_svm = axs[1, 0]
ax_iso = axs[1, 1]

model_abbrs = ["ell", "iso", "lof", "svm"]
qt = QuantileTransformer(n_quantiles=N_QUANTILES)

for ax, nm, abbr, zz in zip([ax_ell, ax_iso, ax_lof, ax_svm],
                            ["Elliptic Envelope", "Isolation Forest",
                             "Local Outlier Factor", "One-class SVM"],
                            model_abbrs,
                            [Z_ell, Z_iso, Z_lof, Z_svm]):
    ax.title.set_text(nm)
    outlier_score_var_nm = f"outlier_score_{abbr}"

    # Map this model's grid scores onto [0, 1] quantiles so colors are comparable
    zz_qtl = qt.fit_transform(zz.reshape(-1, 1)).reshape(zz.shape)

    ax.contourf(xx, yy, zz_qtl, cmap=plt.cm.OrRd.reversed(),
                levels=np.linspace(0, 1, N_QUANTILES))
    ax.scatter(X[:, 0], X[:, 1], s=20, c="b", edgecolor="k", alpha=0.5)

    # Label the N_CALLOUTS lowest-scoring (most anomalous) players
    df_callouts = df.sort_values(outlier_score_var_nm).head(N_CALLOUTS)
    texts = [ax.text(row["OBP"], row["SLG"], row["Name"], c="b",
                     size=9, alpha=1.0)
             for _, row in df_callouts.iterrows()]
    adjust_text(texts,
                df_callouts["OBP"].values, df_callouts["SLG"].values,
                arrowprops=dict(arrowstyle='->', color="b", alpha=0.6),
                ax=ax)

plt.tight_layout(pad=2)
plt.show()

for var in ["OBP", "SLG"]:
    df[f"Pctl_{var}"] = 100 * (df[var].rank() / df[var].size).round(3)

model_score_vars = [f"outlier_score_{nm}" for nm in model_abbrs]
model_rank_vars = [f"Rank_{nm.upper()}" for nm in model_abbrs]

# Ascending rank: the lowest (most anomalous) score gets rank 1
df[model_rank_vars] = df[model_score_vars].rank(axis=0).astype(int)

# Averaging the ranks is arbitrary; we just need a countdown order
df["Rank_avg"] = df[model_rank_vars].mean(axis=1)

print("Counting down to the greatest outlier...\n")
print(
    df.sort_values("Rank_avg", ascending=False
    ).tail(N_CALLOUTS)[["Name", "AB", "PA", "H", "2B", "3B",
                        "HR", "BB", "HBP", "SO", "OBP",
                        "Pctl_OBP", "SLG", "Pctl_SLG"
                        ] + model_rank_vars
    ].to_string(index=False)
)

Counting down to the greatest outlier...

            Name  AB  PA   H 2B 3B HR  BB HBP  SO   OBP  Pctl_OBP   SLG  Pctl_SLG  Rank_ELL  Rank_ISO  Rank_LOF  Rank_SVM
   Austin Barnes 178 200  32  5  0  2  17   2  43 0.256       2.6 0.242       0.6        19         7        25        12
   J.D. Martinez 432 479 117 27  2 33  34   2 149 0.321      52.8 0.572      98.1        15        18         5        15
      Yandy Diaz 525 600 173 35  0 22  65   8  94 0.410      99.2 0.522      95.4        13        15        13        10
       Jose Siri 338 364  75 13  2 25  20   2 130 0.267       5.5 0.494      88.4         8        14        15        13
       Juan Soto 568 708 156 32  1 35 132   2 129 0.410      99.2 0.519      95.0        12        13        11        11
    Mookie Betts 584 693 179 40  1 39  96   8 107 0.408      98.6 0.579      98.3         7        10        20         7
   Rob Refsnyder 202 243  50  9  1  1  33   5  47 0.365      90.5 0.317       6.6         5        19         2        14
  Yordan Alvarez 410 496 120 24  1 31  69  13  92 0.407      98.3 0.583      98.6         6         9        18         6
 Freddie Freeman 637 730 211 59  2 29  72  16 121 0.410      99.2 0.567      97.8         9        11         9         8
      Matt Olson 608 720 172 27  3 54 104   4 167 0.389      96.5 0.604      99.2        11         6         7         9
   Austin Hedges 185 212  34  5  0  1  11   2  47 0.234       0.3 0.227       0.3        10         1         4         3
     Aaron Judge 367 458  98 16  0 37  88   0 130 0.406      98.1 0.613      99.4         3         5         6         4
Ronald Acuna Jr. 643 735 217 35  4 41  80   9  84 0.416     100.0 0.596      98.9         2         3        10         2
    Corey Seager 477 536 156 42  0 33  49   4  88 0.390      97.0 0.623      99.7         4         4         3         5
   Shohei Ohtani 497 599 151 26  8 44  91   3 143 0.412      99.7 0.654     100.0         1         2         1         1
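The ranking step can be checked on a hypothetical toy table. rank() sorts ascending by default, so the lowest (most anomalous) score receives rank 1 for each model, and the smallest average rank marks the strongest consensus outlier. One caveat about the code above: tied scores get fractional average ranks (such as 2.5), which .astype(int) truncates; rank(method="min") is one way to avoid fractional ranks before casting.

```python
import pandas as pd

# Hypothetical scores for four made-up players; lower score = more anomalous for each model
toy = pd.DataFrame({
    "Name": ["A", "B", "C", "D"],
    "outlier_score_ell": [-5.0, -0.1, -2.0, 0.3],
    "outlier_score_iso": [-0.6, -0.65, -0.7, -0.3],
})

abbrs = ["ell", "iso"]
for abbr in abbrs:
    # Ascending rank: the most anomalous (lowest) score gets rank 1
    toy[f"Rank_{abbr.upper()}"] = toy[f"outlier_score_{abbr}"].rank().astype(int)

toy["Rank_avg"] = toy[[f"Rank_{a.upper()}" for a in abbrs]].mean(axis=1)
# Order by consensus: C (1.5), A (2.0), B (2.5), D (4.0)
print(toy.sort_values("Rank_avg")[["Name", "Rank_ELL", "Rank_ISO", "Rank_avg"]].to_string(index=False))
```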

It looks like the four implementations mostly agree on how to define outliers, but with some noticeable differences in scores and also in ease of use.

Elliptic Envelope has narrower contours around the ellipse's minor axis, so it tends to highlight those interesting players who run contrary to the overall correlation between features. For example, Rays outfielder José Siri ranks as more of an outlier under this algorithm because of his high SLG (88th percentile) versus low OBP (5th percentile), which is consistent with an aggressive hitter who swings hard at borderline pitches and either crushes them or gets weak-to-no contact.

Elliptic Envelope is also easy to use without configuration, and it provides the robust covariance matrix. If you have low-dimensional data and a reasonable expectation for it to be normally distributed (which is often not the case), you might want to try this simple approach first.

One-class SVM has more uniformly spaced contours, so it tends to emphasize observations along the overall direction of correlation more than the Elliptic Envelope does. All-Star first basemen Freddie Freeman (Dodgers) and Yandy Diaz (Rays) rank more strongly under this algorithm than under the others, since their OBP and SLG are both excellent (99th and 97th percentile, respectively, for Freeman; 99th and 95th for Diaz).

The RBF kernel required an extra step for standardization, but it also seemed to work well on this simple example without fine-tuning.

Local Outlier Factor picked up on the "cluster of excellence" mentioned earlier with a small bimodal contour (barely visible in the chart). Since Dodgers outfielder/second baseman Mookie Betts is surrounded by other excellent hitters, including Freeman, Yordan Alvarez, and Ronald Acuña Jr., he ranks as only the 20th-strongest outlier under LOF, versus 10th or stronger under the other algorithms. Conversely, Braves outfielder Marcell Ozuna had slightly lower SLG and considerably lower OBP than Betts, but he's more of an outlier under LOF because his neighborhood is less dense.

LOF was the most time-consuming to implement, since we computed Mahalanobis distance matrices (based on the robust covariance) for both fitting and scoring. We could have spent some time tuning K as well.

Isolation Forest tends to emphasize observations at the corners of the feature space, because splits are distributed across features. Backup catcher Austin Hedges, who played for the Pirates and Rangers in 2023 and signed with the Guardians for 2024, is strong defensively but the worst batter (with at least 200 plate appearances) in both SLG and OBP. Hedges can be isolated in a single split on either OBP or SLG, making him the strongest outlier. Isolation Forest is the only algorithm that didn't rank Shohei Ohtani as the strongest outlier: since Ohtani was edged out in OBP by Ronald Acuña Jr., both Ohtani and Acuña can be isolated in a single split on only one feature.
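The "single split" argument can be made quantitative under a simplified model of one isolation-tree split (feature chosen uniformly at random, threshold uniform over that feature's range): a point is isolated in one split only if it is the unique minimum or maximum on the chosen feature and the threshold falls in the gap between it and its nearest neighbor on that feature. single_split_isolation_prob and the four-row table are hypothetical; row 0 mimics a player who is worst on both features.

```python
import numpy as np


def single_split_isolation_prob(X, i):
    """Probability that one random split isolates row i (hypothetical, simplified model)."""
    n_features = X.shape[1]
    prob = 0.0
    for f in range(n_features):
        col = X[:, f]
        others = np.delete(col, i)
        span = col.max() - col.min()
        if span == 0:
            continue
        if col[i] < others.min():      # unique minimum: threshold must land below the next value
            prob += (others.min() - col[i]) / span / n_features
        elif col[i] > others.max():    # unique maximum: threshold must land above the next value
            prob += (col[i] - others.max()) / span / n_features
    return prob


# Hypothetical (OBP, SLG) rows: row 0 is worst on both, row 3 is best on both
X_toy = np.array([[0.20, 0.20], [0.30, 0.40], [0.32, 0.42], [0.40, 0.60]])
for i in range(len(X_toy)):
    print(i, round(single_split_isolation_prob(X_toy, i), 3))
```

A player who leads only one of the two features (as Ohtani did in SLG but not OBP) can collect probability from that feature alone, which is consistent with the ranking behavior described above.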

As with common supervised tree-based learners, Isolation Forest does not extrapolate, making it better suited for fitting to a contaminated dataset for outlier detection than for fitting to an anomaly-free dataset for novelty detection (where it wouldn't score new outliers more strongly than the existing observations).
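This sketch (hypothetical standard-normal training data) illustrates the lack of extrapolation: because every split threshold lies inside the training range, two query points beyond every training value, such as (10, 10) and (100, 100), follow identical paths and receive identical Isolation Forest scores, whereas Elliptic Envelope keeps scoring the farther point as more anomalous.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(5)
X_demo = rng.normal(size=(300, 2))                # hypothetical training data
far = np.array([[10.0, 10.0], [100.0, 100.0]])    # both beyond every training value

iso_demo = IsolationForest(random_state=17).fit(X_demo)
ell_demo = EllipticEnvelope(random_state=17).fit(X_demo)

# Tree thresholds stay inside the training range, so both far points share every path
iso_far = iso_demo.score_samples(far)
# A distance-based model keeps penalizing points the farther out they go
ell_far = ell_demo.decision_function(far)
print("Isolation Forest:", iso_far)
print("Elliptic Envelope:", ell_far)
```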

Although Isolation Forest worked well out of the box, its failure to rank Shohei Ohtani as the greatest outlier in baseball (and probably all professional sports) illustrates the primary limitation of any outlier detector: the data you use to fit it.

Not only did we omit defensive stats (sorry, Austin Hedges), we also didn't bother to include pitching stats, because pitchers don't even try to hit anymore… except for Ohtani, whose season included the second-best batting average against (BAA) and 11th-best earned run average (ERA) in baseball (minimum 100 innings), a complete-game shutout, and a game in which he struck out ten batters and hit two home runs.

It has been suggested that Shohei Ohtani is a sophisticated extraterrestrial impersonating a human, but it seems more likely that there are two sophisticated extraterrestrials impersonating the same human. Unfortunately, one of them just had elbow surgery and won't pitch in 2024… but the other just signed a record 10-year, $700 million contract. And thanks to outlier detection, now we can see why!