6 Problems of LLMs That LangChain Is Trying to Address

Image by Author

In the ever-evolving landscape of technology, the surge of large language models (LLMs) has been nothing short of a revolution. Tools like ChatGPT and Google Bard are at the forefront, showcasing the art of the possible in digital interaction and application development.

The success of models such as ChatGPT has spurred a surge in interest from companies eager to harness the capabilities of these advanced language models.

Yet the true power of LLMs doesn't lie only in their standalone abilities.

Their potential is amplified when they are integrated with additional computational resources and knowledge bases, creating applications that are not only smart and linguistically skilled but also richly informed by data and processing power.

And this integration is exactly what LangChain tries to address.

LangChain is an innovative framework crafted to unleash the full capabilities of LLMs, enabling a smooth symbiosis with other systems and resources. It's a tool that gives data professionals the keys to build applications that are as intelligent as they are contextually aware, leveraging the vast sea of information and computational variety available today.

It isn't just a tool; it's a transformational force that is reshaping the tech landscape.

This prompts the following question:

How will LangChain redefine the boundaries of what LLMs can achieve?

Stick with me and let's try to discover it all together.

LangChain is an open-source framework built around LLMs. It provides developers with an arsenal of tools, components, and interfaces that streamline the architecture of LLM-driven applications.

However, it is not just another tool.

Working with LLMs can sometimes feel like trying to fit a square peg into a round hole.

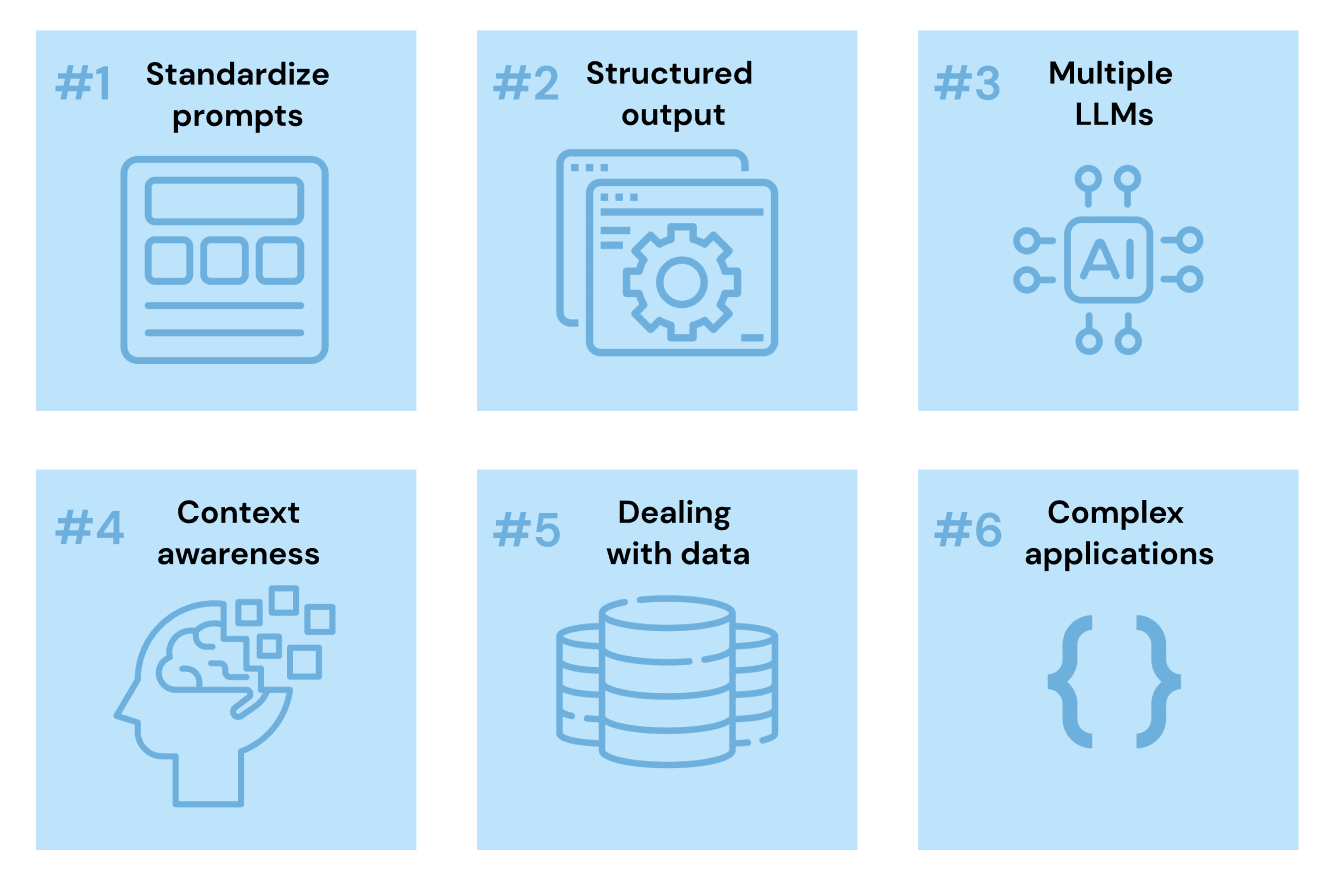

There are some common problems that I bet most of you have already experienced yourselves:

- How to standardize prompt structures.

- How to make sure the LLM's output can be used by other modules or libraries.

- How to easily switch from one LLM to another.

- How to keep some record of memory when needed.

- How to deal with data.

All these problems bring us to the following question:

How can we develop a whole complex application while being sure that the LLM will behave as expected?

The prompts are riddled with repetitive structures and text, the responses are as unstructured as a toddler's playroom, and the memory of these models? Let's just say it's not exactly elephantine.

So… how can we work with them?

Trying to develop complex applications with AI and LLMs can be a complete headache.

And this is where LangChain steps in as the problem-solver.

At its core, LangChain is made up of several ingenious components that allow you to easily integrate LLMs into any development.

LangChain is generating enthusiasm for its ability to amplify the capabilities of powerful large language models by endowing them with memory and context. This addition enables the simulation of "reasoning" processes, allowing more intricate tasks to be tackled with greater precision.

For developers, the appeal of LangChain lies in its innovative approach to creating user interfaces. Rather than relying on traditional methods like drag-and-drop or coding, users can articulate their needs directly, and the interface is constructed to accommodate those requests.

It is a framework designed to supercharge software developers and data engineers with the ability to seamlessly integrate LLMs into their applications and data workflows.

So this brings us to the next query…

Knowing that current LLMs present 6 main problems, we can now see how LangChain is trying to address them.


1. Prompts are way too complex now

Let's try to recall how the concept of a prompt has rapidly evolved over the last few months.

It started with a simple string describing an easy task to perform:

Hey ChatGPT, can you please explain to me how to plot a scatter chart in Python?

However, over time people realized this was way too simple. We were not giving LLMs enough context to understand their main task.

Today we need to tell any LLM much more than a description of the main task to fulfill. We have to describe the AI's high-level behavior and the writing style, include instructions to make sure the answer is accurate, and add any other detail that gives our model a more contextualized instruction.

So today, rather than using the very first prompt, we would submit something more similar to:

Hey ChatGPT, imagine you are a data scientist. You are good at analyzing data and visualizing it using Python.

Can you please explain to me how to generate a scatter chart using the Seaborn library in Python?

Proper?

However, as most of you have already realized, I can ask for a different task but still keep the same high-level behavior of the LLM. This means that most parts of the prompt can remain the same.

This is why we should be able to write this part just once and then add it to any prompt we need.

LangChain fixes this repeated-text issue by offering templates for prompts.

These templates mix the specific details you need for your task (asking exactly for the scatter chart) with the usual text (like describing the high-level behavior of the model).

So our final prompt template would be:

Hey ChatGPT, imagine you are a data scientist. You are good at analyzing data and visualizing it using Python.

Can you please explain to me how to generate a {type of chart} using the {python library} library in Python?

With two main input variables:

- type of chart

- Python library
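To make the idea concrete, here is a minimal sketch of such a template in plain Python. It uses only `str.format` and is not LangChain's own API (LangChain ships a `PromptTemplate` class for this purpose); the names `PROMPT_TEMPLATE` and `build_prompt` are made up for illustration.

```python
# Plain-Python sketch of a reusable prompt template (not LangChain's API).
# The fixed "high-level behavior" text is written once; only the two
# input variables change between calls.
PROMPT_TEMPLATE = (
    "Hey ChatGPT, imagine you are a data scientist. "
    "You are good at analyzing data and visualizing it using Python.\n\n"
    "Can you please explain to me how to generate a {type_of_chart} "
    "using the {python_library} library in Python?"
)


def build_prompt(type_of_chart: str, python_library: str) -> str:
    """Fill the two input variables into the shared template."""
    return PROMPT_TEMPLATE.format(
        type_of_chart=type_of_chart,
        python_library=python_library,
    )


if __name__ == "__main__":
    print(build_prompt("scatter chart", "Seaborn"))
    print(build_prompt("bar chart", "Matplotlib"))
```

The same template now serves any chart and library combination, so the role description is never retyped.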

2. Responses Are Unstructured by Nature

We humans interpret text easily. This is why, when chatting with any AI-powered chatbot like ChatGPT, we can easily deal with plain text.

However, when using these very same AI algorithms for apps or programs, the answers should be provided in a set format, like CSV or JSON files.

Again, we can try to craft sophisticated prompts that ask for specific structured outputs. But we cannot be 100% sure that this output will be generated in a structure that is useful for us.

This is where LangChain's output parsers kick in.

This class allows us to parse any LLM response and generate a structured variable that can be easily used. Forget about asking ChatGPT to answer you in JSON; LangChain now allows you to parse your output and generate your own JSON.
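As a rough illustration of what an output parser does, the sketch below pulls a JSON object out of a free-text reply using only the standard library. It is not LangChain's parser implementation; `parse_json_reply` and the hard-coded `raw_reply` are hypothetical stand-ins for a real model response.

```python
import json
import re


def parse_json_reply(reply: str) -> dict:
    """Return the JSON object spanning the first '{' to the last '}' in a reply."""
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    # json.loads raises a ValueError subclass if the span is not valid JSON.
    return json.loads(match.group(0))


# Hypothetical model reply: useful data wrapped in conversational text.
raw_reply = (
    "Sure! Here is the result:\n"
    '{"chart": "scatter", "library": "seaborn"}\n'
    "Hope it helps."
)

parsed = parse_json_reply(raw_reply)
print(parsed["library"])
```

Downstream code can then work with `parsed` as a regular dictionary instead of scanning the raw text.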

3. LLMs Have No Memory, but Some Applications Might Need Them To

Now just imagine you are talking with a company's Q&A chatbot. You send a detailed description of what you need, the chatbot answers correctly, and after a second iteration… it is all gone!

This is pretty much what happens when calling any LLM via API. When using GPT or any other user-interface chatbot, the AI model forgets every part of the conversation the very moment we move on to the next turn.

They have little or no memory.

And this can lead to confusing or wrong answers.

As most of you have already guessed, LangChain is again ready to help us.

LangChain offers a class called memory. It allows us to keep the model context-aware, whether by keeping the whole chat history or just a summary, so that it does not give wrong replies.
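The "keep the chat history" variant can be sketched in a few lines of plain Python. This is an illustration of the idea only, not LangChain's memory class; `ConversationBuffer` and its methods are hypothetical names, and the sketch assumes `max_turns` is at least 1.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationBuffer:
    """Keeps the last `max_turns` exchanges and replays them in each prompt."""

    max_turns: int = 3  # assumed to be >= 1
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add_turn(self, user: str, assistant: str) -> None:
        """Store one exchange and drop the oldest ones beyond the limit."""
        self.turns.append((user, assistant))
        self.turns = self.turns[-self.max_turns:]

    def build_prompt(self, new_message: str) -> str:
        """Prefix the new message with the stored history."""
        history = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)
        prefix = history + "\n" if history else ""
        return f"{prefix}User: {new_message}\nAssistant:"


if __name__ == "__main__":
    memory = ConversationBuffer(max_turns=2)
    memory.add_turn("I need a scatter chart.", "Which library do you use?")
    print(memory.build_prompt("Seaborn, please."))
```

Because every call resends the stored turns, the stateless model sees the earlier context again. A summary-based memory would replace `turns` with a running summary string.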

4. Why choose a single LLM when you can have them all?

We all know OpenAI's GPT models are still prominent in the realm of LLMs. However, there are plenty of other options out there, like Meta's Llama, Claude, or the open-source models on the Hugging Face Hub.

If you only design your program for one company's language model, you're stuck with their tools and rules.

Directly using the native API of a single model makes you depend entirely on it.

Imagine you built your app's AI features with GPT, but later found out you need to incorporate a feature that is better handled by Meta's Llama.

You would be forced to start all over from scratch… which is not good at all.

LangChain offers something called an LLM class. Think of it as a special tool that makes it easy to change from one language model to another, or even use multiple models at once in your app.

This is why developing directly with LangChain allows you to consider multiple models at once.
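The benefit of a shared model interface can be shown with a small sketch. The classes below are fakes that return canned strings, not real clients for any provider, and `TextModel`, `FakeGPT`, `FakeLlama`, and `answer` are hypothetical names rather than LangChain's LLM class.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a `complete` method can be plugged into the app."""

    def complete(self, prompt: str) -> str: ...


class FakeGPT:
    """Stand-in for one provider's client (no network call is made)."""

    def complete(self, prompt: str) -> str:
        return f"[fake-gpt] {prompt}"


class FakeLlama:
    """Stand-in for a second provider's client."""

    def complete(self, prompt: str) -> str:
        return f"[fake-llama] {prompt}"


def answer(model: TextModel, question: str) -> str:
    """Application code depends only on the shared interface."""
    return model.complete(f"Answer briefly: {question}")


if __name__ == "__main__":
    for model in (FakeGPT(), FakeLlama()):
        print(answer(model, "What is a scatter chart?"))
```

Swapping providers means passing a different object to `answer`; the application code itself does not change.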

5. Passing Data to the LLM is Tricky

Language models like GPT-4 are trained on huge volumes of text. This is why they work with text by nature. However, they usually struggle when it comes to working with data.

Why? You might ask.

Two main issues can be differentiated:

- When working with data, we first need to know how to store this data, and how to effectively select the data we want to show to the model. LangChain helps with this issue by using something called indexes. These let you bring in data from different places like databases or spreadsheets and set it up so it is ready to be sent to the AI piece by piece.

- On the other hand, we need to decide how to put that data into the prompt we give the model. The easiest way is to just put all the data directly into the prompt, but there are smarter ways to do it, too.

In this second case, LangChain has some special tools that use different methods to give data to the AI. Whether through direct prompt stuffing, which puts the whole data set right into the prompt, or through more advanced options like Map-reduce, Refine, or Map-rerank, LangChain eases the way we send data to any LLM.
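The difference between prompt stuffing and a map-reduce style approach can be sketched as follows. This is a simplified illustration under stated assumptions, not LangChain's implementation: the model is any callable that maps a prompt string to a reply string, and `stuff`, `map_reduce`, and `fake_llm` are hypothetical names.

```python
from typing import Callable

LLM = Callable[[str], str]  # assumed shape of a model call: prompt in, text out


def stuff(llm: LLM, question: str, documents: list[str]) -> str:
    """Prompt stuffing: put every document into a single prompt (one call)."""
    context = "\n\n".join(documents)
    return llm(f"{context}\n\nQuestion: {question}")


def map_reduce(llm: LLM, question: str, documents: list[str]) -> str:
    """Map-reduce: ask per document, then ask once more over the partial answers."""
    partial_answers = [llm(f"{doc}\n\nQuestion: {question}") for doc in documents]
    combined = "\n".join(partial_answers)
    return llm(f"Partial answers:\n{combined}\n\nQuestion: {question}")


def fake_llm(prompt: str) -> str:
    """Stand-in model that only reports how long the prompt was."""
    return f"reply to a {len(prompt)}-character prompt"


if __name__ == "__main__":
    docs = ["Sales rose in Q1.", "Sales fell in Q2.", "Sales were flat in Q3."]
    print(stuff(fake_llm, "How did sales evolve?", docs))
    print(map_reduce(fake_llm, "How did sales evolve?", docs))
```

Stuffing costs one call but the prompt grows with the data. The map-reduce variant keeps each prompt small at the price of one call per document plus a final combining call.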

6. Standardizing Development Interfaces

It's always tricky to fit LLMs into bigger systems or workflows. For instance, you might need to get some information from a database, give it to the AI, and then use the AI's answer in another part of your system.

LangChain has special features for these kinds of setups.

- Chains are like strings that tie different steps together in a simple, straight line.

- Agents are smarter and can make choices about what to do next, based on what the AI says.

LangChain also simplifies this by providing standardized interfaces that streamline the development process, making it easier to integrate and chain calls to LLMs and other utilities.
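The two ideas can be sketched in plain Python, following the database example above. This is a toy illustration and not LangChain's chain or agent API: `make_chain`, `fetch_record`, `fake_llm`, `TOOLS`, and `agent` are all hypothetical, and the "model" is a hard-coded rule.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps so each one receives the previous step's output."""
    return lambda text: reduce(lambda value, step: step(value), steps, text)


def fetch_record(customer_id: str) -> str:
    """Stand-in for a database lookup."""
    return f"customer {customer_id}: 3 late orders"


def fake_llm(prompt: str) -> str:
    """Stand-in model that returns a decision keyword."""
    return "ESCALATE" if "late" in prompt else "IGNORE"


# Actions the agent may take, keyed by the model's decision.
TOOLS: dict[str, Callable[[], str]] = {
    "ESCALATE": lambda: "ticket opened",
    "IGNORE": lambda: "nothing to do",
}


def agent(customer_id: str) -> str:
    """Run the fixed chain, then let the model's output pick the next action."""
    decision = make_chain(fetch_record, fake_llm)(customer_id)
    return TOOLS[decision]()


if __name__ == "__main__":
    print(agent("42"))
```

The chain always runs the same steps in order, while the agent uses the model's answer to decide which tool to call next.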

In essence, LangChain offers a suite of tools and features that make it easier to develop applications with LLMs by addressing the intricacies of prompt crafting, response structuring, and model integration.

LangChain is more than just a framework; it's a game-changer in the world of data engineering and LLMs.

It's the bridge between the complex, often chaotic world of AI and the structured, systematic approach needed in data applications.

As we wrap up this exploration, one thing is clear:

LangChain is not just shaping the future of LLMs; it's shaping the future of technology itself.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the data science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.