10 Important Pandas Capabilities Each Knowledge Scientist Ought to Know

Picture by Creator

In right now’s data-driven world, knowledge evaluation and insights make it easier to get essentially the most out of it and make it easier to make higher selections. From an organization’s perspective, it provides a Aggressive Benefit and personaliz?s the entire course of.

This tutorial will discover essentially the most potent Python library pandas, and we are going to talk about a very powerful capabilities of this library which can be necessary for knowledge evaluation. Learners can even observe this tutorial attributable to its simplicity and effectivity. If you happen to don’t have python put in in your system, you need to use Google Colaboratory.

You’ll be able to obtain the dataset from that link.

import pandas as pd

df = pd.read_csv("kaggle_sales_data.csv", encoding="Latin-1") # Load the info

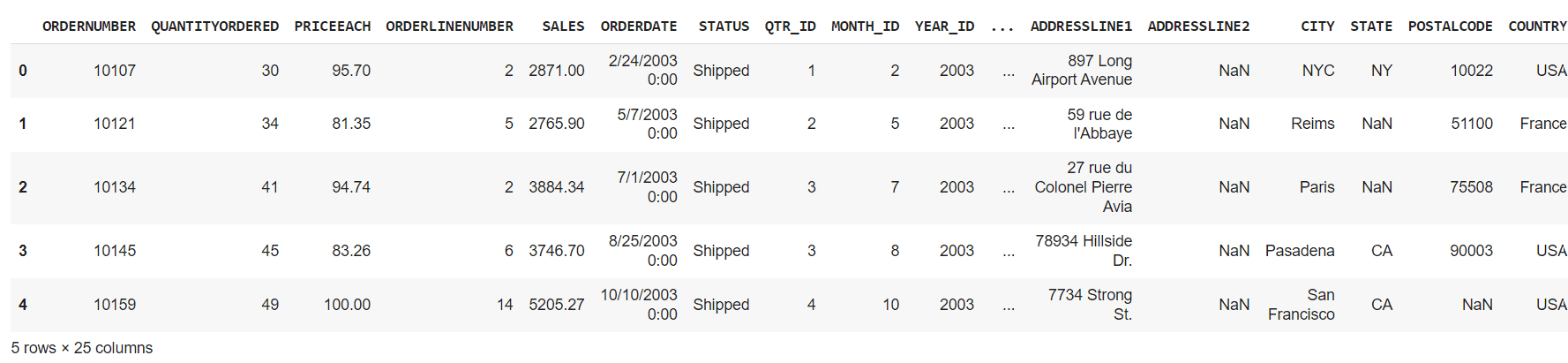

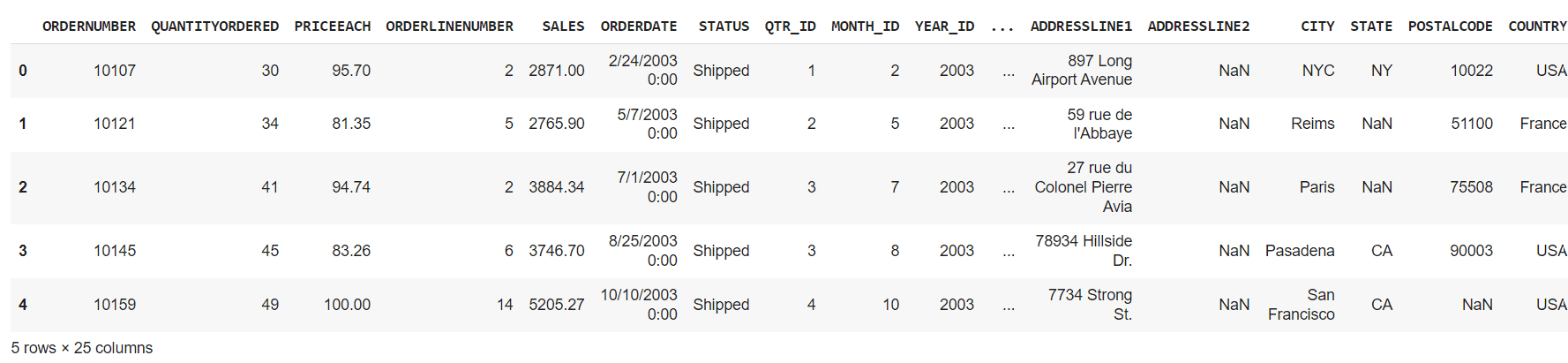

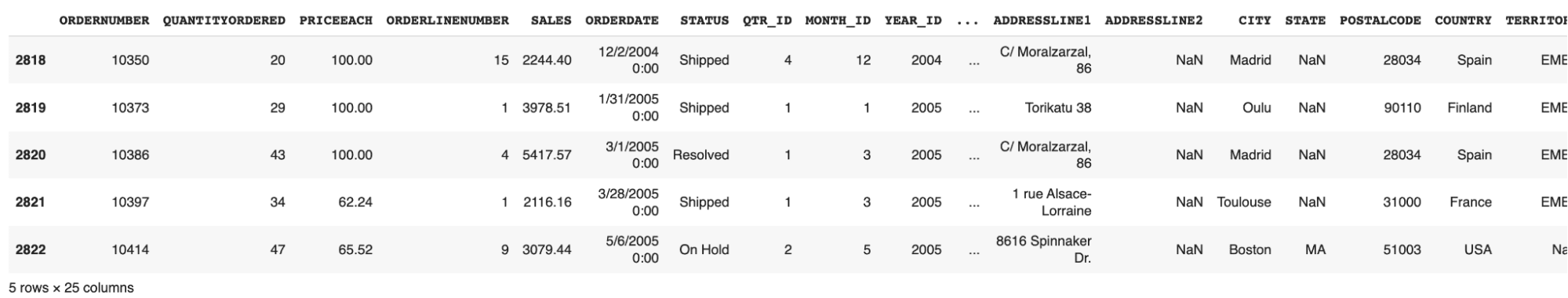

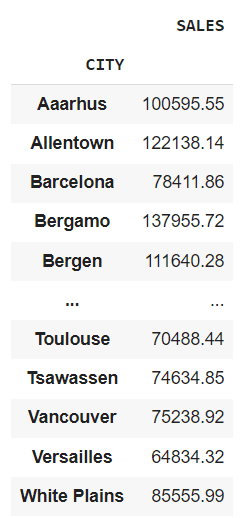

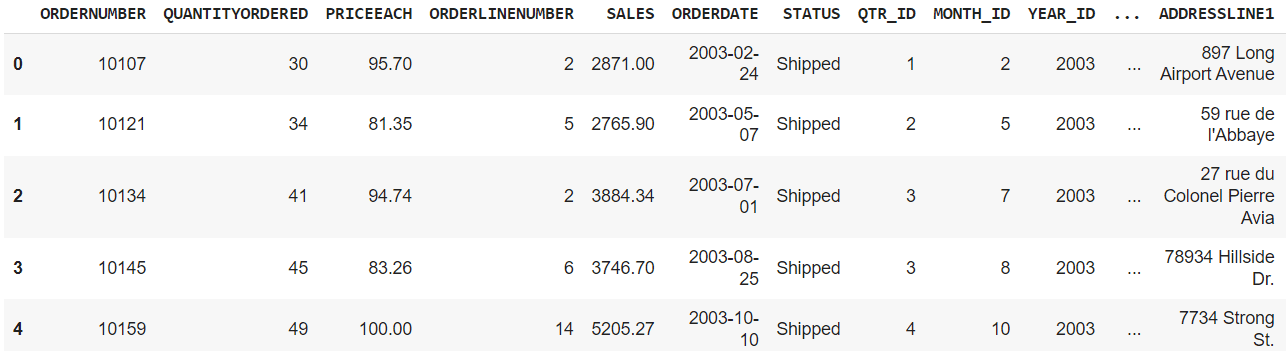

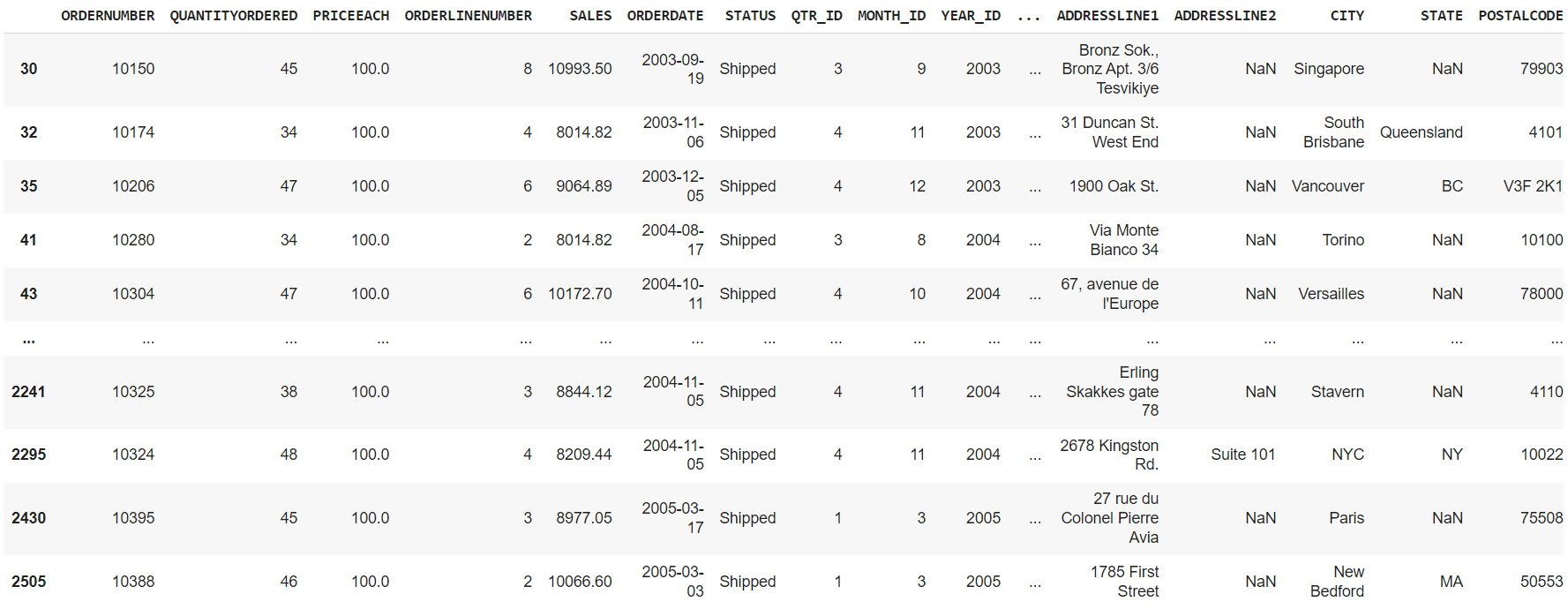

df.head() # Present first 5 rows

Output:

On this part, we are going to talk about numerous capabilities that make it easier to to get extra about your knowledge. Like viewing it or getting the imply, common, min/max, or getting details about the dataframe.

1. Knowledge Viewing

-

df.head(): It shows the primary 5 rows of the pattern knowledge

-

df.tail(): It shows the final 5 rows of the pattern knowledge

-

df.pattern(n): It shows the random n variety of rows within the pattern knowledge

-

df.form: It shows the pattern knowledge’s rows and columns (dimensions).

It signifies that our dataset has 2823 rows, every containing 25 columns.

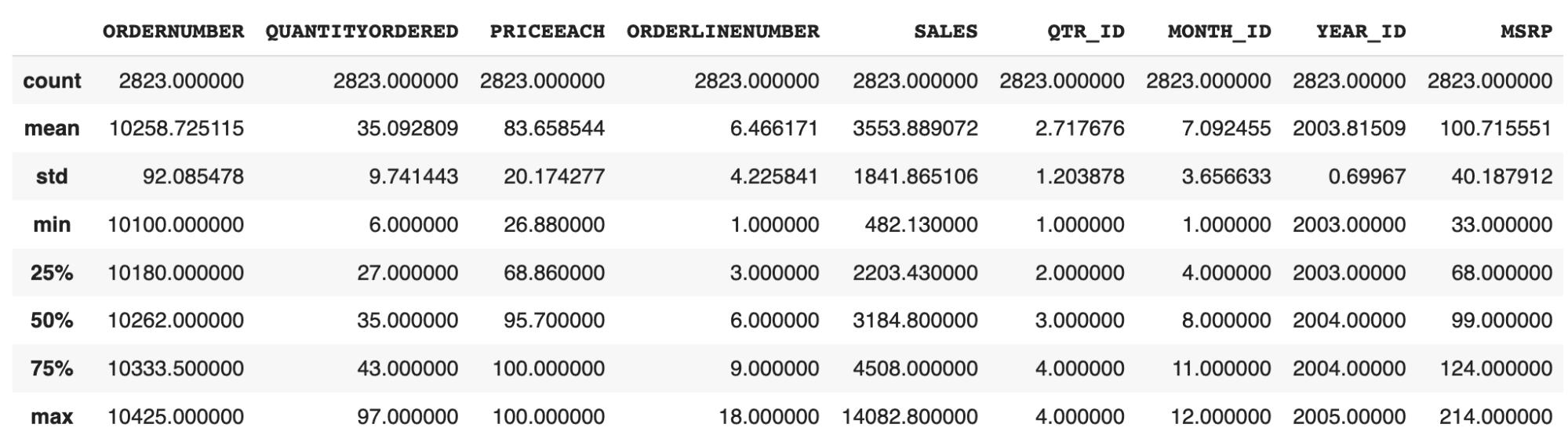

2. Statistics

This part comprises the capabilities that make it easier to carry out statistics like common, min/max, and quartiles in your knowledge.

-

df.describe(): Get the essential statistics of every column of the pattern knowledge

-

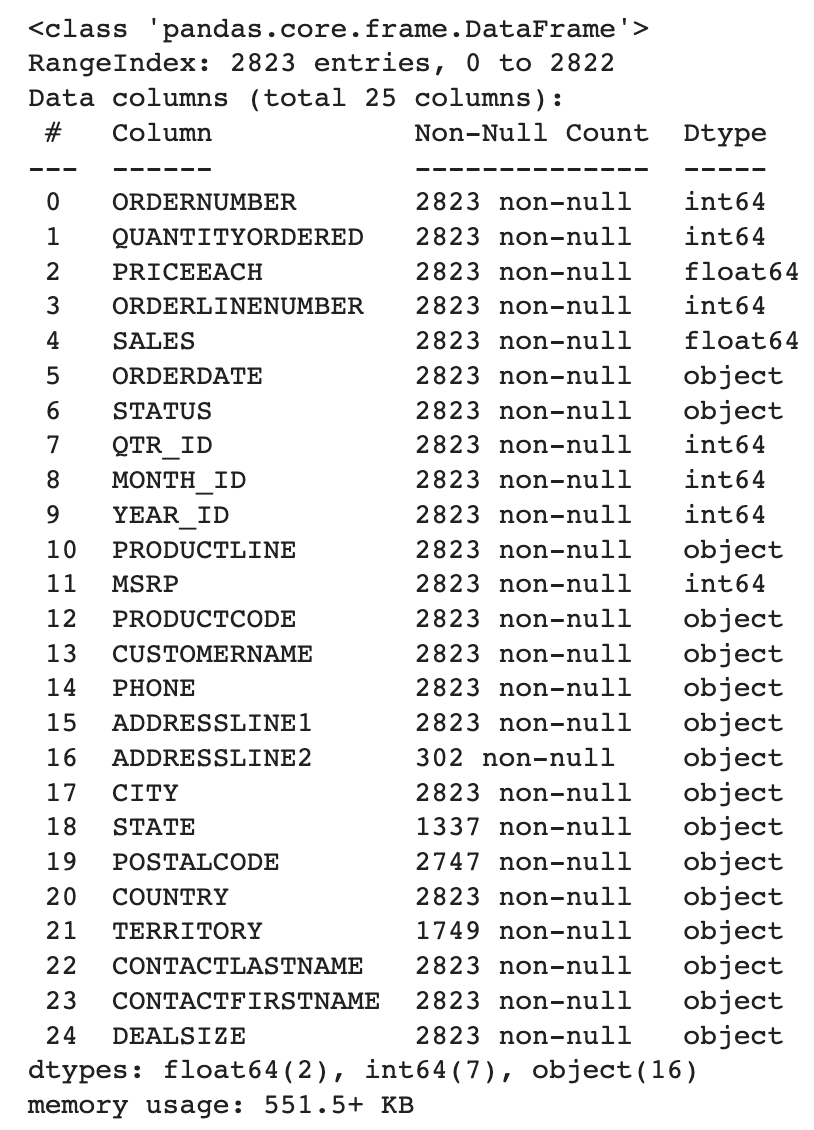

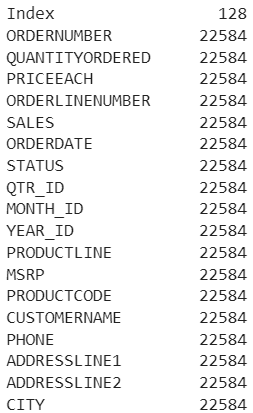

df.data(): Get the details about the varied knowledge sorts used and the non-null depend of every column.

-

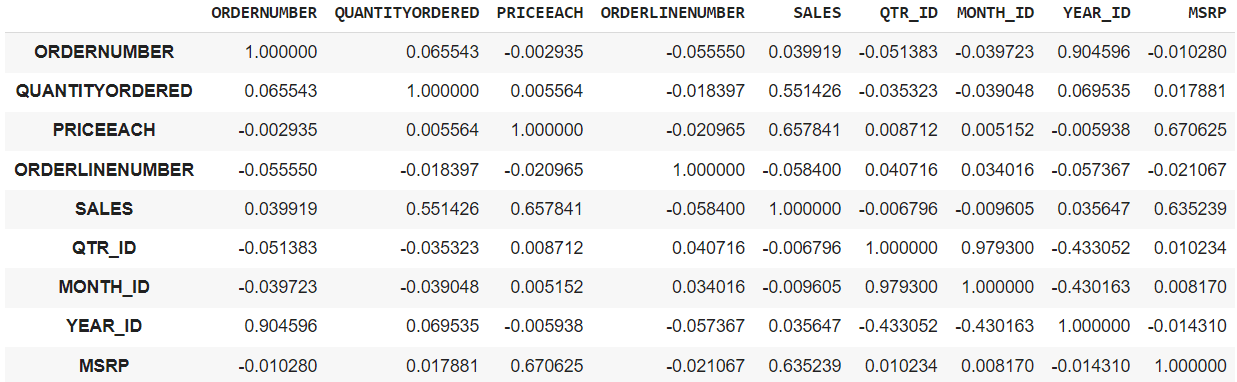

df.corr(): This will provide the correlation matrix between all of the integer columns within the knowledge body.

-

df.memory_usage(): It should let you know how a lot reminiscence is being consumed by every column.

3. Knowledge Choice

You can even choose the info of any particular row, column, and even a number of columns.

-

df.iloc[row_num]: It should choose a specific row primarily based on its index

For ex-,

-

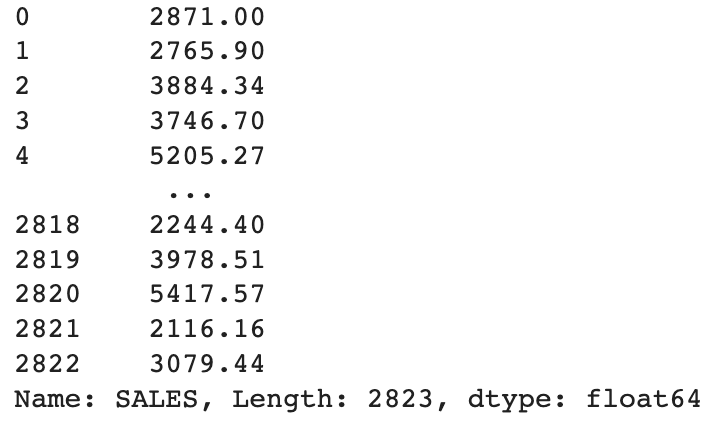

df[col_name]: It should choose the actual column

For ex-,

Output:

-

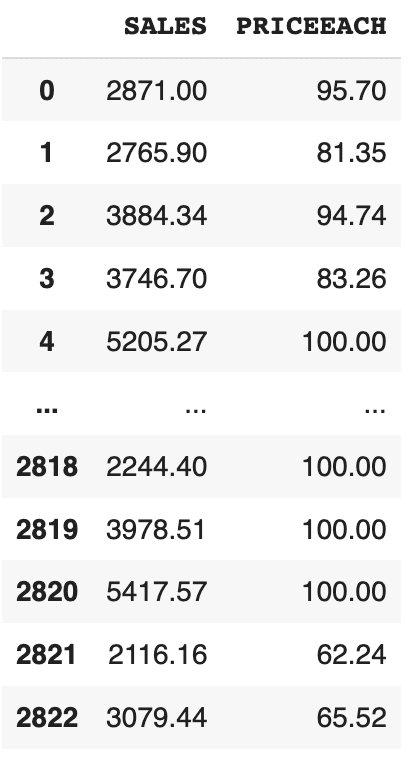

df[[‘col1’, ‘col2’]]: It should choose a number of columns given

For ex-,

df[["SALES", "PRICEEACH"]]

Output:

These capabilities are used to deal with the lacking knowledge. Some rows within the knowledge include some null and rubbish values, which may hamper the efficiency of our skilled mannequin. So, it’s at all times higher to appropriate or take away these lacking values.

-

df.isnull(): This can establish the lacking values in your dataframe. -

df.dropna(): This can take away the rows containing lacking values in any column. -

df.fillna(val): This can fill the lacking values withvalgiven within the argument. -

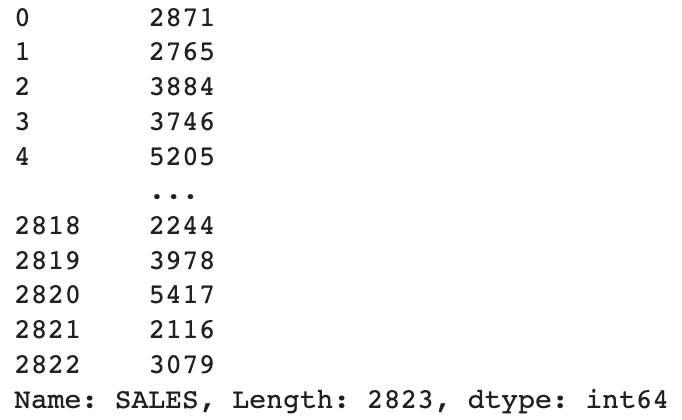

df[‘col’].astype(new_data_type): It could actually convert the info sort of the chosen columns to a special knowledge sort.

For ex-,

We’re changing the info sort of the SALES column from float to int.

Right here, we are going to use some useful capabilities in knowledge evaluation, like grouping, sorting, and filtering.

- Aggregation Capabilities:

You’ll be able to group a column by its identify after which apply some aggregation capabilities like sum, min/max, imply, and so on.

df.groupby("col_name_1").agg({"col_name_2": "sum"})

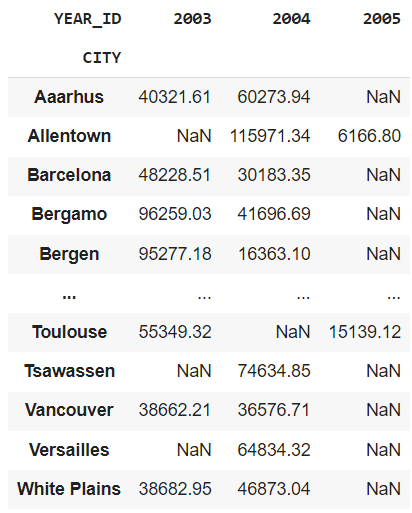

For ex-,

df.groupby("CITY").agg({"SALES": "sum"})

It offers you the entire gross sales of every metropolis.

If you wish to apply a number of aggregations at a single time, you possibly can write them like that.

For ex-,

aggregation = df.agg({"SALES": "sum", "QUANTITYORDERED": "imply"})

Output:

SALES 1.003263e+07

QUANTITYORDERED 3.509281e+01

dtype: float64

- Filtering Knowledge:

We will filter the info in rows primarily based on a selected worth or a situation.

For ex-,

Shows the rows the place the worth of gross sales is larger than 5000

You can even filter the dataframe utilizing the question() operate. It should additionally generate an identical output as above.

For ex,

- Sorting Knowledge:

You’ll be able to kind the info primarily based on a selected column, both within the ascending order or within the descending order.

For ex-,

df.sort_values("SALES", ascending=False) # Kinds the info in descending order

- Pivot Tables:

We will create pivot tables that summarize the info utilizing particular columns. That is very helpful in analyzing the info whenever you solely wish to think about the impact of specific columns.

For ex-,

pd.pivot_table(df, values="SALES", index="CITY", columns="YEAR_ID", aggfunc="sum")

Let me break this for you.

-

values: It comprises the column for which you wish to populate the desk’s cells. -

index: The column utilized in it should grow to be the row index of the pivot desk, and every distinctive class of this column will grow to be a row within the pivot desk. -

columns: It comprises the headers of the pivot desk, and every distinctive factor will grow to be the column within the pivot desk. -

aggfunc: This is identical aggregator operate we mentioned earlier.

Output:

This output reveals a chart which depicts the entire gross sales in a specific metropolis for a selected 12 months.

6. Combining Knowledge Frames

We will mix and merge a number of knowledge frames both horizontally or vertically. It should concatenate two knowledge frames and return a single merged knowledge body.

For ex-,

combined_df = pd.concat([df1, df2])

You’ll be able to merge two knowledge frames primarily based on a standard column. It’s helpful whenever you wish to mix two knowledge frames that share a standard identifier.

For ex,

merged_df = pd.merge(df1, df2, on="common_col")

7. Making use of Customized Capabilities

You’ll be able to apply customized capabilities in accordance with your wants in both a row or a column.

For ex-,

def cus_fun(x):

return x * 3

df["Sales_Tripled"] = df["SALES"].apply(cus_fun, axis=0)

We’ve written a customized operate that may triple the gross sales worth for every row. axis=0 signifies that we wish to apply the customized operate on a column, and axis=1 implies that we wish to apply the operate on a row.

Within the earlier methodology you must write a separate operate after which to name it from the apply() methodology. Lambda operate lets you use the customized operate contained in the apply() methodology itself. Let’s see how we are able to try this.

df["Sales_Tripled"] = df["SALES"].apply(lambda x: x * 3)

Applymap:

We will additionally apply a customized operate to each factor of the dataframe in a single line of code. However a degree to recollect is that it’s relevant to all the weather within the dataframe.

For ex-,

df = df.applymap(lambda x: str(x))

It should convert the info sort to a string of all the weather within the dataframe.

8. Time Sequence Evaluation

In arithmetic, time sequence evaluation means analyzing the info collected over a selected time interval, and pandas have capabilities to carry out one of these evaluation.

Conversion to DateTime Object Mannequin:

We will convert the date column right into a datetime format for simpler knowledge manipulation.

For ex-,

df["ORDERDATE"] = pd.to_datetime(df["ORDERDATE"])

Output:

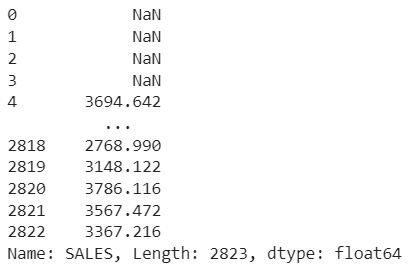

Calculate Rolling Common:

Utilizing this methodology, we are able to create a rolling window to view knowledge. We will specify a rolling window of any measurement. If the window measurement is 5, then it means a 5-day knowledge window at the moment. It could actually make it easier to take away fluctuations in your knowledge and assist establish patterns over time.

For ex-

rolling_avg = df["SALES"].rolling(window=5).imply()

Output:

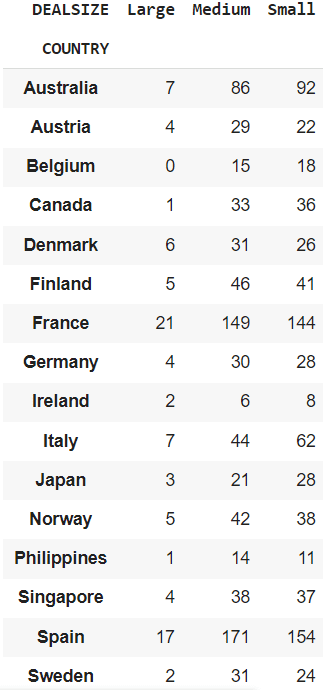

9. Cross Tabulation

We will carry out cross-tabulation between two columns of a desk. It’s usually a frequency desk that reveals the frequency of occurrences of assorted classes. It could actually make it easier to to know the distribution of classes throughout completely different areas.

For ex-,

Getting a cross-tabulation between the COUNTRY and DEALSIZE.

cross_tab = pd.crosstab(df["COUNTRY"], df["DEALSIZE"])

It could actually present you the order measurement (‘DEALSIZE’) ordered by completely different nations.

10. Dealing with Outliers

Outliers in knowledge signifies that a specific level goes far past the common vary. Let’s perceive it via an instance. Suppose you will have 5 factors, say 3, 5, 6, 46, 8. Then we are able to clearly say that the quantity 46 is an outlier as a result of it’s far past the common of the remainder of the factors. These outliers can result in improper statistics and ought to be faraway from the dataset.

Right here pandas come to the rescue to seek out these potential outliers. We will use a technique known as Interquartile Vary(IQR), which is a standard methodology for locating and dealing with these outliers. You can even examine this methodology if you would like data on it. You’ll be able to learn extra about them here.

Let’s see how we are able to try this utilizing pandas.

Q1 = df["SALES"].quantile(0.25)

Q3 = df["SALES"].quantile(0.75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

outliers = df[(df["SALES"] < lower_bound) | (df["SALES"] > upper_bound)]

Q1 is the primary quartile representing the twenty fifth percentile of the info and Q3 is the third quartile representing the seventy fifth percentile of the info.

lower_bound variable shops the decrease certain that’s used for locating potential outliers. Its worth is ready to 1.5 instances the IQR beneath Q1. Equally, upper_bound calculates the higher certain, 1.5 instances the IQR above Q3.

After which, you filter out the outliers which can be lower than the decrease or better than the higher certain.

Python pandas library allows us to carry out superior knowledge evaluation and manipulations. These are just a few of them. You could find some extra instruments in this pandas documentation. One necessary factor to recollect is that the number of methods may be particular which caters to your wants and the dataset you might be utilizing.

Aryan Garg is a B.Tech. Electrical Engineering scholar, at present within the closing 12 months of his undergrad. His curiosity lies within the area of Net Improvement and Machine Studying. He have pursued this curiosity and am desirous to work extra in these instructions.