Customizing coding companions for organizations

Generative AI models for coding companions are mostly trained on publicly available source code and natural language text. While the large size of the training corpus enables the models to generate code for commonly used functionality, these models are unaware of code in private repositories and the associated coding styles that are enforced when developing with them. Consequently, the generated suggestions may require rewriting before they are appropriate for incorporation into an internal repository.

We can address this gap and minimize additional manual editing by embedding code knowledge from private repositories on top of a language model trained on public code. This is why we developed a customization capability for Amazon CodeWhisperer. In this post, we show you two possible ways of customizing coding companions: retrieval augmented generation and fine-tuning.

Our goal with the CodeWhisperer customization capability is to enable organizations to tailor the CodeWhisperer model using their private repositories and libraries to generate organization-specific code recommendations that save time, follow organizational style and conventions, and avoid bugs or security vulnerabilities. This benefits enterprise software development and helps overcome the following challenges:

- Sparse documentation or information for internal libraries and APIs, which forces developers to spend time examining previously written code to replicate usage.

- Lack of awareness and consistency in implementing enterprise-specific coding practices, styles, and patterns.

- Inadvertent use of deprecated code and APIs by developers.

By using internal code repositories that have already undergone code reviews for additional training, the language model can surface the use of internal APIs and code blocks that overcome the preceding list of problems. Because the reference code is already reviewed and meets the customer’s high bar, the likelihood of introducing bugs or security vulnerabilities is also minimized. And, by carefully selecting the source files used for customization, organizations can reduce the use of deprecated code.

Design challenges

Customizing code suggestions based on an organization’s private repositories has many interesting design challenges. Deploying large language models (LLMs) to surface code suggestions has fixed costs for availability and variable costs for inference, based on the number of tokens generated. Therefore, having separate customizations for each customer and hosting them individually, thereby incurring additional fixed costs, can be prohibitively expensive. On the other hand, hosting multiple customizations simultaneously on the same system necessitates multi-tenant infrastructure to isolate proprietary code for each customer. Furthermore, the customization capability should surface knobs that enable selecting the appropriate training subset from the internal repository using different metrics (for example, files with a history of fewer bugs or code that was recently committed to the repository). By selecting code based on these metrics, the customization can be trained on higher-quality code, which can improve the quality of code suggestions. Finally, even with continuously evolving code repositories, the cost associated with customization should be minimal to help enterprises realize cost savings from increased developer productivity.
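As a rough illustration of such a selection knob, the following minimal sketch filters candidate files by bug-fix history and commit recency. The `SourceFile` record, its fields, and the thresholds are hypothetical; a real system might derive them from version control and an issue tracker, and this is not how CodeWhisperer itself selects files.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class SourceFile:
    # Hypothetical per-file metadata, e.g. mined from git history and an issue tracker.
    path: str
    bug_fix_commits: int
    last_commit: datetime


def select_training_subset(files, max_bug_fixes, max_age_days, now=None):
    """Keep files with few historical bug fixes that were committed recently."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        f for f in files
        if f.bug_fix_commits <= max_bug_fixes and f.last_commit >= cutoff
    ]


# Example: one file is stale, one has a long bug-fix history.
now = datetime(2023, 10, 1, tzinfo=timezone.utc)
files = [
    SourceFile("billing/api.py", 1, datetime(2023, 9, 20, tzinfo=timezone.utc)),
    SourceFile("legacy/old_client.py", 0, datetime(2019, 1, 5, tzinfo=timezone.utc)),
    SourceFile("auth/session.py", 12, datetime(2023, 9, 28, tzinfo=timezone.utc)),
]
selected = select_training_subset(files, max_bug_fixes=3, max_age_days=365, now=now)
print([f.path for f in selected])  # ['billing/api.py']
```

Each threshold acts as an independent knob, so an administrator could tighten one metric without touching the other.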

A baseline approach to building customization could be to pretrain the model on a single training corpus composed of the existing (public) pretraining dataset along with the (private) enterprise code. While this approach works in practice, it requires (redundant) individual pretraining on the public dataset for each enterprise. It also incurs redundant deployment costs for hosting a customized model for each customer that only serves client requests originating from that customer. By decoupling the training on public and private code and deploying the customization on a multi-tenant system, these redundant costs can be avoided.

How to customize

At a high level, there are two types of possible customization techniques: retrieval-augmented generation (RAG) and fine-tuning (FT).

- Retrieval-augmented generation: RAG finds pieces of code within a repository that are similar to a given code fragment (for example, the code that immediately precedes the cursor in the IDE) and augments the prompt used to query the LLM with these matched code snippets. This enriches the prompt to help nudge the model into generating more relevant code. There are a few techniques explored in the literature along these lines. See Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks, REALM, kNN-LM and RETRO.

- Fine-tuning: FT takes a pre-trained LLM and trains it further on a specific, smaller codebase (compared to the pretraining dataset) to adapt it to the target repository. Fine-tuning adjusts the LLM’s weights based on this training, making it more tailored to the organization’s unique needs.

Both RAG and fine-tuning are powerful tools for enhancing the performance of LLM-based customization. RAG can quickly adapt to private libraries or APIs with lower training complexity and cost. However, searching for code snippets and adding them to the prompt increases latency at runtime. In contrast, fine-tuning does not require any augmentation of the context because the model is already trained on the private libraries and APIs. However, it leads to higher training costs and more complexity in serving the model when multiple custom models have to be supported across multiple enterprise customers. As we discuss later, these concerns can be remedied by optimizing the approach further.

Retrieval augmented generation

There are a few steps involved in RAG:

Indexing

Given a private repository supplied as input by the admin, an index is created by splitting the source code files into chunks. Put simply, chunking turns the code into digestible pieces that are likely to be most informative for the model and are easy to retrieve given the context. The size of a chunk and how it is extracted from a file are design choices that affect the final result. For example, chunks can be split based on lines of code, based on syntactic blocks, and so on.
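To make the two chunking strategies concrete, here is a minimal sketch under stated assumptions: a fixed-size line window with overlap (the window and overlap sizes are arbitrary), and a syntactic split that emits one chunk per top-level function or class. The syntactic variant only handles Python sources because it relies on Python’s `ast` module; it is an illustration, not the indexing code used by CodeWhisperer.

```python
import ast


def chunk_by_lines(source: str, chunk_size: int = 20, overlap: int = 5):
    """Split code into windows of `chunk_size` lines that overlap by `overlap` lines."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    lines = source.splitlines()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(lines), step):
        chunks.append("\n".join(lines[start:start + chunk_size]))
        if start + chunk_size >= len(lines):  # last window already reaches the end
            break
    return chunks


def chunk_by_syntax(source: str):
    """One chunk per top-level function or class (Python only; decorators are not included)."""
    tree = ast.parse(source)
    return [
        ast.get_source_segment(source, node)
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    ]


code = "import os\n\ndef a():\n    return 1\n\nclass B:\n    pass\n"
print(chunk_by_syntax(code))  # ['def a():\n    return 1', 'class B:\n    pass']
```

Line windows are language-agnostic but can cut a function in half; syntactic chunks keep logical units intact at the cost of needing a parser per language.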

Administrator Workflow

Contextual search

Given a few lines of code above the cursor, search the set of indexed code snippets and retrieve the relevant ones. This retrieval can use different algorithms. These choices might include:

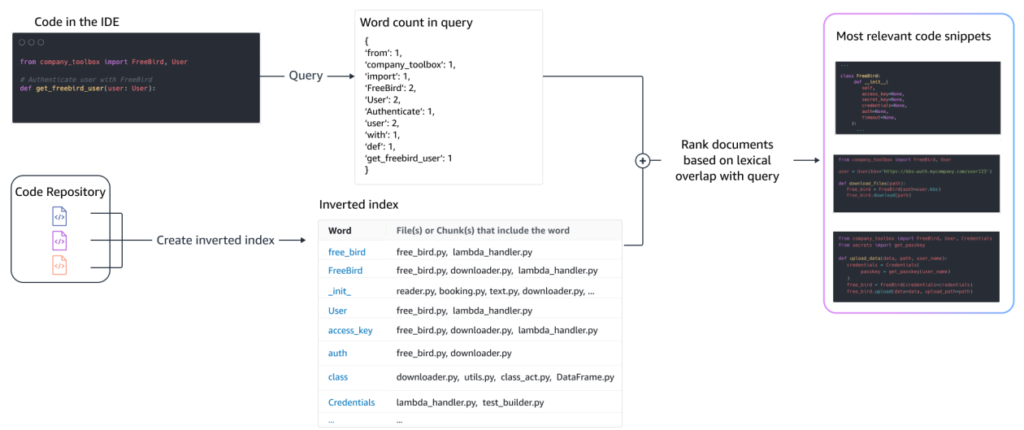

- Bag of words (BM25) – A bag-of-words retrieval function that ranks a set of code snippets based on the query term frequencies and code snippet lengths.

BM25-based retrieval

The following figure illustrates how BM25 works. To use BM25, an inverted index is built first. This is a data structure that maps different terms to the code snippets that those terms occur in. At search time, we look up code snippets based on the terms present in the query and score them based on term frequency.
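The following sketch shows the standard Okapi BM25 scoring on top of an inverted index. The tokenizer (lowercase, split on non-alphanumerics so `add_user` becomes `add`, `user`), the common defaults `k1=1.5` and `b=0.75`, and the smoothed IDF variant are assumptions for illustration; the post does not specify CodeWhisperer’s tokenization or parameters.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    def __init__(self, snippets, k1=1.5, b=0.75):
        self.snippets = snippets
        self.k1, self.b = k1, b
        self.doc_lens = []
        # Inverted index: term -> {snippet_id: term frequency in that snippet}
        self.postings = defaultdict(dict)
        for doc_id, snippet in enumerate(snippets):
            counts = Counter(tokenize(snippet))
            self.doc_lens.append(sum(counts.values()))
            for term, tf in counts.items():
                self.postings[term][doc_id] = tf
        self.avg_len = sum(self.doc_lens) / max(len(snippets), 1)

    def idf(self, term):
        n = len(self.postings.get(term, {}))  # number of snippets containing the term
        N = len(self.snippets)
        return math.log((N - n + 0.5) / (n + 0.5) + 1.0)

    def search(self, query, top_k=3):
        scores = defaultdict(float)
        for term in set(tokenize(query)):
            # The inverted index means only snippets containing the term are scored.
            for doc_id, tf in self.postings.get(term, {}).items():
                length_norm = self.k1 * (1 - self.b + self.b * self.doc_lens[doc_id] / self.avg_len)
                scores[doc_id] += self.idf(term) * tf * (self.k1 + 1) / (tf + length_norm)
        ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        return ranked[:top_k]  # list of (snippet_id, score)


snippets = [
    "def add_user(db, user): db.insert(user)",
    "def delete_user(db, user_id): db.remove(user_id)",
    "def render_page(template): return template.render()",
]
index = BM25Index(snippets)
print(index.search("add user to db", top_k=2))  # snippet 0 ranks first
```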

Semantic retrieval

BM25 focuses on lexical matching. Therefore, replacing “add” with “delete” may barely change the BM25 score, because the other terms in the query still match, yet the retrieved functionality may be the opposite of what is required. In contrast, semantic retrieval focuses on the functionality of the code snippet, even when variable and API names differ. Typically, a combination of BM25 and semantic retrieval works well to deliver better results.
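One common way to combine lexical and semantic results is reciprocal rank fusion (RRF), sketched below. RRF is our illustrative choice here; the post does not say how CodeWhisperer merges the two signals. The embedding vectors are hypothetical stand-ins for the output of some code embedding model, and `k=60` is the constant often used with RRF.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def semantic_ranking(query_vec, snippet_vecs):
    """Rank snippet ids by cosine similarity of (hypothetical) embedding vectors."""
    sims = {i: cosine(query_vec, v) for i, v in enumerate(snippet_vecs)}
    return sorted(sims, key=sims.get, reverse=True)


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked id lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    fused = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=fused.get, reverse=True)


lexical = [0, 1, 2]  # e.g. snippet ids ordered by BM25 score
semantic = semantic_ranking([1.0, 0.0], [[0.2, 0.9], [0.9, 0.1], [0.7, 0.7]])
print(semantic)                                     # [1, 2, 0]
print(reciprocal_rank_fusion([lexical, semantic]))  # [1, 0, 2]
```

RRF only uses ranks, so it avoids having to calibrate BM25 scores against cosine similarities, which live on different scales.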

Augmented inference

When developers write code, their existing program is used to formulate a query that is sent to the retrieval index. After retrieving multiple code snippets using one of the techniques discussed above, we prepend them to the original prompt. There are many design choices here, including the number of snippets to retrieve, the relative placement of the snippets in the prompt, and the size of each snippet. These choices are primarily driven by empirical observation from exploring various approaches with the underlying language model, and they play a key role in determining the accuracy of the approach. The contents of the returned chunks and the original code are combined and sent to the model to get customized code suggestions.
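The following is a minimal sketch of prompt assembly, assuming a hypothetical `retrieve(query, top_k)` callable that returns snippet strings (for example, backed by a BM25 or embedding index). The number of query lines, `top_k`, the character budget, and formatting retrieved snippets as comments are all illustrative choices standing in for the empirically tuned design decisions described above.

```python
def build_augmented_prompt(code_before_cursor, retrieve, num_query_lines=10,
                           top_k=3, max_context_chars=2000, comment_prefix="# "):
    """Prepend retrieved snippets, rendered as comments, to the code before the cursor."""
    # Use only the last few lines above the cursor as the retrieval query.
    query = "\n".join(code_before_cursor.splitlines()[-num_query_lines:])
    context_blocks, used = [], 0
    for snippet in retrieve(query, top_k):
        block = "\n".join(comment_prefix + line for line in snippet.splitlines())
        # Stop once the character budget for retrieved context is exhausted.
        if used + len(block) > max_context_chars:
            break
        context_blocks.append(block)
        used += len(block)
    if not context_blocks:
        return code_before_cursor  # nothing retrieved: fall back to the plain prompt
    header = comment_prefix + "Relevant code from this repository:"
    return "\n\n".join([header, *context_blocks, code_before_cursor])


def fake_retrieve(query, top_k):
    # Hypothetical retriever used only for this example.
    return ["def get_user(db, uid):\n    return db.fetch('users', uid)"][:top_k]


prompt = build_augmented_prompt("import db\n\ndef load_profile(uid):\n", fake_retrieve)
print(prompt)
```

Rendering snippets as comments is one way to signal that they are reference context rather than code the model should continue; placing them elsewhere in the prompt is an equally valid experiment.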

Developer workflow

Fine-tuning

Fine-tuning a language model is a form of transfer learning in which the weights of a pre-trained model are trained on new data. The goal is to retain the relevant knowledge from a model already trained on a large corpus and to refine, replace, or add knowledge from the new corpus (in our case, a new codebase). Simply training on a new codebase leads to catastrophic forgetting. For example, the language model may “forget” its knowledge of safety or of APIs that have so far been used only sparsely in the enterprise codebase. A variety of techniques, such as experience replay, GEM, and PP-TF, can be employed to address this challenge.
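Experience replay, in its simplest form, mixes examples from the original training distribution back into each fine-tuning batch. The sketch below shows only that batch-mixing idea, not GEM or PP-TF. The `replay_ratio` value and the example lists are hypothetical, and the post does not state which of these techniques CodeWhisperer uses.

```python
import random


def replay_batches(enterprise_examples, public_examples, batch_size=8,
                   replay_ratio=0.25, seed=0):
    """Yield fine-tuning batches mixing enterprise code with replayed public examples.

    A fraction `replay_ratio` of each batch is sampled from the public
    (pretraining-like) data to reduce catastrophic forgetting.
    """
    if not 0 <= replay_ratio < 1:
        raise ValueError("replay_ratio must be in [0, 1)")
    rng = random.Random(seed)
    # Keep at least one enterprise example per batch.
    n_replay = min(int(batch_size * replay_ratio), batch_size - 1)
    n_new = batch_size - n_replay
    shuffled = list(enterprise_examples)
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), n_new):
        batch = shuffled[start:start + n_new]
        batch += rng.sample(public_examples, k=min(n_replay, len(public_examples)))
        rng.shuffle(batch)
        yield batch


enterprise = [f"internal_{i}" for i in range(12)]
public = [f"public_{i}" for i in range(100)]
batches = list(replay_batches(enterprise, public))
print(len(batches), [len(b) for b in batches])  # 2 [8, 8]
```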

Fine-tuning

There are two ways of fine-tuning. One approach is to fine-tune the model on the additional data without augmenting the prompt. Another approach is to augment the prompt during fine-tuning by retrieving relevant code snippets. This helps improve the model’s ability to provide better suggestions in the presence of retrieved code snippets. After training, the model is evaluated on a held-out set of examples. Subsequently, the customized model is deployed and used for generating code suggestions.
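Both variants can share one data pipeline, as in this minimal sketch. It builds (prompt, completion) pairs by cutting each file at a random line, optionally prepends snippets from a hypothetical `retrieve(prompt)` callable for the retrieval-augmented variant, and holds out a fraction for evaluation. The one-line completion target and the 10% held-out fraction are assumptions, not details from the post.

```python
import random


def make_examples(files, retrieve=None, seed=0):
    """Turn source files into (prompt, completion) pairs, one per file.

    Each file is cut at a random line boundary: the code above is the prompt and
    the next line is the target. If `retrieve` (hypothetical) is given, its
    snippets are prepended to the prompt (retrieval-augmented fine-tuning).
    """
    rng = random.Random(seed)
    examples = []
    for source in files:
        lines = source.splitlines(keepends=True)
        if len(lines) < 2:
            continue  # nothing to predict
        cut = rng.randrange(1, len(lines))
        prompt, completion = "".join(lines[:cut]), lines[cut]
        if retrieve is not None:
            prompt = "\n".join(retrieve(prompt)) + "\n" + prompt
        examples.append({"prompt": prompt, "completion": completion})
    return examples


def train_heldout_split(examples, heldout_fraction=0.1, seed=0):
    """Shuffle and split into training and held-out evaluation sets."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_heldout = max(1, int(len(shuffled) * heldout_fraction)) if shuffled else 0
    return shuffled[n_heldout:], shuffled[:n_heldout]


files = [f"def f{i}(x):\n    y = x + {i}\n    return y\n" for i in range(20)]
plain = make_examples(files)
augmented = make_examples(files, retrieve=lambda p: ["# def helper(): ..."])
train, heldout = train_heldout_split(plain)
print(len(train), len(heldout))  # 18 2
```

Because each file yields one example here, splitting examples also splits files, which keeps held-out code out of the training set.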

Despite the advantages of using dedicated LLMs for generating code on private repositories, the costs can be prohibitive for small and medium-sized organizations. This is because dedicated compute resources are necessary even though they may be underutilized given the size of the teams. One way to achieve cost efficiency is to serve multiple models on the same compute (for example, SageMaker multi-tenancy). However, language models require one or more dedicated GPUs across multiple zones to meet latency and throughput constraints. Hence, multi-tenant hosting of full models on each GPU is infeasible.

We can overcome this problem by serving multiple customers on the same compute using small adapters to the LLM. Parameter-efficient fine-tuning (PEFT) techniques like prompt tuning, prefix tuning, and Low-Rank Adaptation (LoRA) are used to lower training costs without any loss of accuracy. LoRA, in particular, has seen great success at achieving similar (or better) accuracy than full-model fine-tuning. The basic idea is to learn a low-rank update matrix that is added to the original weight matrices of targeted layers of the model, while the original weights stay frozen. Typically, these adapters are then merged with the original model weights for serving, which yields a network with the same size and architecture as the original. By keeping the adapters separate instead, we can serve the same base model with many model adapters. This brings economies of scale to our small and medium-sized customers.
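In the standard LoRA formulation (the post does not give CodeWhisperer’s exact configuration), a frozen weight matrix $W_0$ receives a trainable low-rank update, and the dimensions below are purely illustrative:

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x, \qquad
W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\underbrace{r(d + k)}_{\text{adapter parameters}} \;\text{vs.}\; \underbrace{dk}_{\text{full matrix}}:\qquad
d = k = 4096,\; r = 8 \;\Rightarrow\; 65{,}536 \;\text{vs.}\; 16{,}777{,}216 \;(\approx 0.39\%)
$$

Merging for serving replaces $W_0$ with $W_0 + \frac{\alpha}{r} B A$, which has the same shape as $W_0$; keeping $(A_i, B_i)$ separate per customer $i$ lets one copy of $W_0$ be shared.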

Low-Rank Adaptation (LoRA)
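The sketch below illustrates multi-adapter serving for a single linear layer: one frozen base weight shared by all tenants, plus a small per-tenant `(A, B)` pair selected at request time. It uses plain Python lists so it runs without dependencies; a production system would use batched GPU kernels. The `MultiAdapterLinear` class and tenant names are hypothetical, not a CodeWhisperer API.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(a, s):
    return [[s * x for x in row] for row in a]


class MultiAdapterLinear:
    """Shared frozen W0 (d x k) plus per-tenant LoRA factors A (r x k) and B (d x r).

    For row vectors x (n x k): y = x W0^T + (alpha / r) * x A^T B^T.
    """

    def __init__(self, w0, alpha=1.0):
        self.w0 = w0
        self.alpha = alpha
        self.adapters = {}  # tenant id -> (A, B)

    def register(self, tenant, a, b):
        self.adapters[tenant] = (a, b)

    def forward(self, x, tenant=None):
        y = matmul(x, transpose(self.w0))  # base model output, shared by all tenants
        if tenant in self.adapters:
            a, b = self.adapters[tenant]
            low_rank = matmul(matmul(x, transpose(a)), transpose(b))
            y = add(y, scale(low_rank, self.alpha / len(a)))  # len(a) is the rank r
        return y

    def merged_weight(self, tenant):
        """W0 + (alpha / r) B A: what a dedicated per-tenant deployment would serve."""
        a, b = self.adapters[tenant]
        return add(self.w0, scale(matmul(b, a), self.alpha / len(a)))


w0 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # d=3, k=2
layer = MultiAdapterLinear(w0, alpha=1.0)
layer.register("customer-a", a=[[1.0, 0.0]], b=[[0.0], [2.0], [0.0]])  # rank r=1
x = [[3.0, 4.0]]
print(layer.forward(x))                # [[3.0, 4.0, 7.0]]
print(layer.forward(x, "customer-a"))  # [[3.0, 10.0, 7.0]]
```

Serving with a separate adapter gives the same output as the merged weights, but only the small factors are stored per customer.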

Measuring effectiveness of customization

We need evaluation metrics to assess the efficacy of the customized solution. Offline evaluation metrics act as guardrails against shipping customizations that are subpar compared to the default model. By building a held-out dataset from within the provided repository and applying the customization approach to it, we can measure effectiveness. Comparing the existing source code with the customized code suggestion quantifies the usefulness of the customization. Common measures used for this quantification include edit similarity, exact match, and CodeBLEU.
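Here is a minimal sketch of two of these metrics at the level of a single suggestion. Edit similarity is computed as one minus the character-level Levenshtein distance normalized by the longer string, and exact match ignores only leading and trailing whitespace; both normalizations are common conventions we assume here. CodeBLEU is not shown.

```python
def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def edit_similarity(prediction: str, reference: str) -> float:
    """1 - normalized edit distance; 1.0 means identical strings."""
    if not prediction and not reference:
        return 1.0
    return 1.0 - levenshtein(prediction, reference) / max(len(prediction), len(reference))


def exact_match(prediction: str, reference: str) -> bool:
    """Ignores leading/trailing whitespace; interior differences still count."""
    return prediction.strip() == reference.strip()


reference = "client.get_invoice(invoice_id)"
print(exact_match("client.get_invoice(invoice_id)\n", reference))  # True
print(edit_similarity("client.get_invoice(id)", reference))        # 1 - 8/30
```

In an offline evaluation, these per-example scores would be averaged over the held-out set for both the customized and the default model.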

It is also possible to measure usefulness by quantifying how often the customization invokes internal APIs and comparing this with the invocations in the pre-existing source. Of course, getting both aspects right is important for a successful completion. For our customization approach, we have designed a tailor-made metric known as the Customization Quality Index (CQI), a single user-friendly measure ranging between 1 and 10. The CQI metric shows the usefulness of the suggestions from the customized model compared to code suggestions from a generic public model.
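As a rough illustration of the internal-API comparison (not of CQI, whose formula the post does not describe), the following sketch extracts dotted call names with Python’s `ast` module and reports precision and recall of internal calls against the reference. The `acme.` prefix for internal packages is hypothetical, and completions that do not parse are treated as having no calls.

```python
import ast


def dotted_name(node):
    """Turn a Name/Attribute chain like a.b.c into 'a.b.c'; None for other call targets."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def internal_calls(source: str, prefix: str) -> set:
    """Dotted names of calls starting with `prefix` (e.g. a hypothetical 'acme.')."""
    try:
        tree = ast.parse(source)
    except SyntaxError:
        return set()  # partial completions may not parse
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name and name.startswith(prefix):
                names.add(name)
    return names


def internal_api_overlap(generated, reference, prefix):
    """(precision, recall) of internal API calls in generated code vs. the reference."""
    gen, ref = internal_calls(generated, prefix), internal_calls(reference, prefix)
    hits = gen & ref
    precision = len(hits) / len(gen) if gen else 0.0
    recall = len(hits) / len(ref) if ref else 0.0
    return precision, recall


reference = "acme.auth.login(user)\nacme.billing.charge(user, amount)\n"
generated = "acme.auth.login(user)\nrequests.post(url)\n"
print(internal_api_overlap(generated, reference, prefix="acme."))  # (1.0, 0.5)
```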

Summary

We built the Amazon CodeWhisperer customization capability based on a combination of the leading technical approaches discussed in this blog post and evaluated it with user studies on developer productivity, conducted by Persistent Systems. In these two studies, commissioned by AWS, developers were asked to create a medical software application in Java that required use of their internal libraries. In the first study, developers without access to CodeWhisperer took ~8.2 hours on average to complete the task, while those who used CodeWhisperer (without customization) completed the task 62 percent faster, in ~3.1 hours on average.

In the second study, with a different set of developer cohorts, developers using CodeWhisperer customized with their private codebase completed the task in 2.5 hours on average, 28 percent faster than those using CodeWhisperer without customization, who completed the task in ~3.5 hours on average. We strongly believe tools like CodeWhisperer that are customized to your codebase have a key role to play in further boosting developer productivity, and we recommend giving it a try. For more information and to get started, visit the Amazon CodeWhisperer page.

About the authors

Qing Sun is a Senior Applied Scientist in AWS AI Labs and works on AWS CodeWhisperer, a generative AI-powered coding assistant. Her research interests lie in Natural Language Processing, AI4Code and generative AI. In the past, she worked on several NLP-based services such as Comprehend Medical, a medical diagnosis system at Amazon Health AI, and the Machine Translation system at Meta AI. She received her PhD from Virginia Tech in 2017.

Arash Farahani is an Applied Scientist with Amazon CodeWhisperer. His current interests are in generative AI, search, and personalization. Arash is passionate about building solutions that resolve developer pain points. He has worked on multiple features within CodeWhisperer, and introduced NLP solutions into various internal workstreams that touch all Amazon developers. He received his PhD from the University of Illinois at Urbana-Champaign in 2017.

Xiaofei Ma is an Applied Science Manager in AWS AI Labs. He joined Amazon in 2016 as an Applied Scientist within the SCOT organization and later joined AWS AI Labs in 2018, working on Amazon Kendra. Xiaofei has served as the science manager for several services, including Kendra, Contact Lens, and most recently CodeWhisperer and CodeGuru Security. His research interests lie in the area of AI4Code and Natural Language Processing. He received his PhD from the University of Maryland, College Park in 2010.

Murali Krishna Ramanathan is a Principal Applied Scientist in AWS AI Labs and co-leads AWS CodeWhisperer, a generative AI-powered coding companion. He is passionate about building software tools and workflows that help improve developer productivity. In the past, he built Piranha, an automated refactoring tool to delete code due to stale feature flags, and led code quality initiatives at Uber engineering. He is a recipient of the Google faculty award (2015), the ACM SIGSOFT Distinguished Paper award (ISSTA 2016) and the Maurice Halstead award (Purdue 2006). He received his PhD in Computer Science from Purdue University in 2008.

Ramesh Nallapati is a Senior Principal Applied Scientist in AWS AI Labs and co-leads CodeWhisperer, a generative AI-powered coding companion, and Titan Large Language Models at AWS. His interests are mainly in the areas of Natural Language Processing and Generative AI. In the past, Ramesh has provided science leadership in delivering many NLP-based AWS products such as Kendra, Quicksight Q and Contact Lens. He held research positions at Stanford, CMU and IBM Research, and received his Ph.D. in Computer Science from the University of Massachusetts Amherst in 2006.