Mistral 7B foundation models from Mistral AI are now available in Amazon SageMaker JumpStart

Today, we’re excited to announce that the Mistral 7B foundation models, developed by Mistral AI, are available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. With 7 billion parameters, Mistral 7B can be easily customized and quickly deployed. You can try out this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms and models so you can quickly get started with ML. In this post, we walk through how to discover and deploy the Mistral 7B model.

What is Mistral 7B

Mistral 7B is a foundation model developed by Mistral AI, supporting English text and code generation abilities. It supports a variety of use cases, such as text summarization, classification, text completion, and code completion. To demonstrate the easy customizability of the model, Mistral AI has also released a Mistral 7B Instruct model for chat use cases, fine-tuned using a variety of publicly available conversation datasets.

Mistral 7B is a transformer model and uses grouped-query attention and sliding-window attention to achieve faster inference (low latency) and handle longer sequences. Grouped-query attention is an architecture that combines multi-query and multi-head attention to achieve output quality close to multi-head attention and speed comparable to multi-query attention. Sliding-window attention uses the stacked layers of a transformer to attend in the past beyond the window size, which increases the effective context length. Mistral 7B has an 8,000-token context length, demonstrates low latency and high throughput, and performs strongly when compared to larger model alternatives, while keeping memory requirements low at a 7B model size. The model is made available under the permissive Apache 2.0 license, for use without restrictions.
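To make the two attention mechanisms concrete, the following is a minimal pure-Python sketch. The function names are hypothetical, and the head counts, window size, and layer count are illustrative parameters for this example, not values taken from this post.

```python
from typing import List


def kv_head_for_query_head(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: return the key/value head shared by a query head's group."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """Causal mask in which position i attends only to positions i-window+1 .. i."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def max_lookback(window: int, n_layers: int) -> int:
    """Farthest distance information can travel back through stacked layers.

    With the mask above, each layer reaches at most (window - 1) positions
    further into the past, so the reach grows linearly with depth.
    """
    return n_layers * (window - 1)


if __name__ == "__main__":
    # Illustrative values only: 8 query heads sharing 2 key/value heads.
    print([kv_head_for_query_head(h, 8, 2) for h in range(8)])
    for row in sliding_window_mask(seq_len=4, window=2):
        print(row)
    print(max_lookback(window=2, n_layers=3))
```

With `n_kv_heads == n_query_heads` the mapping reduces to multi-head attention, and with `n_kv_heads == 1` it reduces to multi-query attention, which is the sense in which grouped-query attention sits between the two.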

What is SageMaker JumpStart

With SageMaker JumpStart, ML practitioners can choose from a growing list of best-performing foundation models. ML practitioners can deploy foundation models to dedicated Amazon SageMaker instances within a network isolated environment, and customize models using SageMaker for model training and deployment.

You can now discover and deploy Mistral 7B with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and MLOps controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your VPC controls, helping ensure data security.

Discover models

You can access Mistral 7B foundation models through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio.

In SageMaker Studio, you can access SageMaker JumpStart, which contains pre-trained models, notebooks, and prebuilt solutions, under Prebuilt and automated solutions.

From the SageMaker JumpStart landing page, you can browse for solutions, models, notebooks, and other resources. You can find Mistral 7B in the Foundation Models: Text Generation carousel.

You can also find other model variants by choosing Explore all Text Models or searching for “Mistral.”

You can choose the model card to view details about the model such as license, data used to train, and how to use. You will also find two buttons, Deploy and Open notebook, which will help you use the model (the following screenshot shows the Deploy option).

Deploy models

Deployment starts when you choose Deploy. Alternatively, you can deploy through the example notebook that shows up when you choose Open notebook. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

To deploy using a notebook, we start by selecting the Mistral 7B model, specified by the model_id. You can deploy any of the selected models on SageMaker with the following code:
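A minimal sketch of that step, assuming the SageMaker Python SDK is installed and AWS credentials with SageMaker permissions are configured. The model ID below is an assumption about the JumpStart catalog name for the Instruct variant, and `deploy_mistral` is a hypothetical helper; confirm the ID on the model card before using it.

```python
# Assumed JumpStart model ID; verify it on the model card in SageMaker Studio.
DEFAULT_MODEL_ID = "huggingface-llm-mistral-7b-instruct"


def deploy_mistral(model_id: str = DEFAULT_MODEL_ID):
    """Deploy a JumpStart model with default settings; return (model, predictor)."""
    # Imported lazily so this module can be loaded without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # deploy() creates a real-time endpoint, which incurs charges until deleted.
    predictor = model.deploy()
    return model, predictor


# Usage (creates billable resources):
# model, predictor = deploy_mistral()
```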

This deploys the model on SageMaker with default configurations, including the default instance type (ml.g5.2xlarge) and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. After it’s deployed, you can run inference against the deployed endpoint through the SageMaker predictor:
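A minimal sketch of querying the endpoint, assuming the TGI-style request body with `inputs` and `parameters` keys. The helper names and the default generation parameters are illustrative choices, and `predictor` stands for the object returned by the deployment step.

```python
from typing import Any, Dict


def build_payload(prompt: str, max_new_tokens: int = 256, temperature: float = 0.6) -> Dict[str, Any]:
    """Build a text-generation request body for the endpoint."""
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "do_sample": True,
            "temperature": temperature,
        },
    }


def generate(predictor: Any, prompt: str, **generation_kwargs: Any) -> Any:
    """Send the prompt to the endpoint and return the raw response."""
    return predictor.predict(build_payload(prompt, **generation_kwargs))


# Usage, given a deployed predictor:
# response = generate(predictor, "<s>[INST] Hello! [/INST]", max_new_tokens=64)
# print(response)
```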

Optimizing the deployment configuration

Mistral models use Text Generation Inference (TGI version 1.1) model serving. When deploying models with the TGI deep learning container (DLC), you can configure a variety of launcher arguments via environment variables when deploying your endpoint. To support the 8,000-token context length of Mistral 7B models, SageMaker JumpStart has configured some of these parameters by default: we set MAX_INPUT_LENGTH and MAX_TOTAL_TOKENS to 8191 and 8192, respectively. You can view the full list by inspecting your model object:

By default, SageMaker JumpStart doesn’t clamp concurrent users via the environment variable MAX_CONCURRENT_REQUESTS to a value smaller than the TGI default of 128. The reason is that some users may have typical workloads with small payload context lengths and want high concurrency. Note that the SageMaker TGI DLC supports multiple concurrent users through rolling batch. When deploying your endpoint for your application, you might consider whether you should clamp MAX_TOTAL_TOKENS or MAX_CONCURRENT_REQUESTS prior to deployment to provide the best performance for your workload:

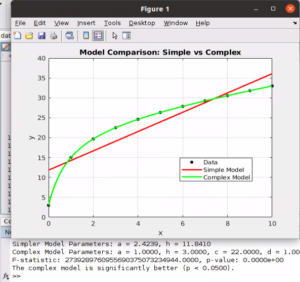
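As a sketch of how such overrides might be assembled, the hypothetical helper below builds the `env` dictionary that `JumpStartModel` accepts. Setting MAX_INPUT_LENGTH to one less than MAX_TOTAL_TOKENS mirrors the 8191/8192 defaults described above and is an assumption of this example, as is the model ID in the usage comment.

```python
from typing import Dict, Optional


def tgi_env_overrides(
    max_concurrent_requests: Optional[int] = None,
    max_total_tokens: Optional[int] = None,
) -> Dict[str, str]:
    """Return TGI environment overrides as strings, including only the values that were set."""
    env: Dict[str, str] = {}
    if max_concurrent_requests is not None:
        if max_concurrent_requests < 1:
            raise ValueError("max_concurrent_requests must be at least 1")
        env["MAX_CONCURRENT_REQUESTS"] = str(max_concurrent_requests)
    if max_total_tokens is not None:
        if max_total_tokens < 2:
            raise ValueError("max_total_tokens must be at least 2")
        # Assumption: keep the input limit one token below the total limit,
        # mirroring the 8191/8192 pairing that JumpStart sets by default.
        env["MAX_INPUT_LENGTH"] = str(max_total_tokens - 1)
        env["MAX_TOTAL_TOKENS"] = str(max_total_tokens)
    return env


# Usage (model ID is an assumption; inspect model.env to confirm the final values):
# from sagemaker.jumpstart.model import JumpStartModel
# model = JumpStartModel(
#     model_id="huggingface-llm-mistral-7b-instruct",
#     env=tgi_env_overrides(max_concurrent_requests=4),
# )
# print(model.env)
```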

Here, we show how model performance might differ for your typical endpoint workload. In the following tables, you can observe that small-sized queries (128 input words and 128 output tokens) are quite performant under a large number of concurrent users, reaching token throughput on the order of 1,000 tokens per second. However, as the number of input words increases to 512, the endpoint saturates its batching capacity (the number of concurrent requests allowed to be processed simultaneously), resulting in a throughput plateau and significant latency degradations starting around 16 concurrent users. Finally, when multiple concurrent users query the endpoint with large input contexts (for example, 6,400 words), this throughput plateau occurs relatively quickly, to the point where your SageMaker account will start encountering 60-second response timeout limits for your overloaded requests.

Throughput (tokens/s) by number of concurrent users:

| model | instance type | input words | output tokens | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| mistral-7b-instruct | ml.g5.2xlarge | 128 | 128 | 30 | 54 | 89 | 166 | 287 | 499 | 793 | 1030 |
| mistral-7b-instruct | ml.g5.2xlarge | 512 | 128 | 29 | 50 | 80 | 140 | 210 | 315 | 383 | 458 |
| mistral-7b-instruct | ml.g5.2xlarge | 6400 | 128 | 17 | 25 | 30 | 35 | n/a | n/a | n/a | n/a |

p50 latency (ms/token) by number of concurrent users:

| model | instance type | input words | output tokens | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| mistral-7b-instruct | ml.g5.2xlarge | 128 | 128 | 32 | 33 | 34 | 36 | 41 | 46 | 59 | 88 |
| mistral-7b-instruct | ml.g5.2xlarge | 512 | 128 | 34 | 36 | 39 | 43 | 54 | 71 | 112 | 213 |
| mistral-7b-instruct | ml.g5.2xlarge | 6400 | 128 | 57 | 71 | 98 | 154 | n/a | n/a | n/a | n/a |
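To relate the per-token latencies to the 60-second timeout, the rough sketch below converts a p50 latency into an estimated median response time. It assumes that ms/token is the end-to-end request time divided by the number of output tokens, which this post does not state explicitly, so treat the numbers as order-of-magnitude estimates; the function names are hypothetical.

```python
TIMEOUT_SECONDS = 60.0  # response timeout cited in this post


def estimated_response_seconds(p50_ms_per_token: float, output_tokens: int) -> float:
    """Estimate the median end-to-end response time for one request, in seconds."""
    return p50_ms_per_token * output_tokens / 1000.0


def timeout_headroom_seconds(p50_ms_per_token: float, output_tokens: int) -> float:
    """Seconds remaining before the timeout at the estimated median response time."""
    return TIMEOUT_SECONDS - estimated_response_seconds(p50_ms_per_token, output_tokens)


if __name__ == "__main__":
    # Values taken from the latency table: (input words, concurrent users, ms/token).
    for words, users, latency in [(128, 128, 88), (512, 128, 213), (6400, 8, 154)]:
        seconds = estimated_response_seconds(latency, output_tokens=128)
        print(f"{words} input words, {users} users: about {seconds:.1f} s per response")
```

Because these are medians for 128 output tokens, requests that generate more tokens or fall in the latency tail will get closer to the timeout than the estimates suggest.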

Inference and example prompts

Mistral 7B

You can interact with a base Mistral 7B model like any standard text generation model, where the model processes an input sequence and outputs predicted next words in the sequence. The following is a simple example with multi-shot learning, where the model is provided with several examples and the final example response is generated with contextual knowledge of these previous examples:
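A minimal sketch of assembling such a multi-shot prompt. The tweet and sentiment labels, the `###` separator, and the sample texts are hypothetical choices for illustration, not the example from the original post.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    examples: List[Tuple[str, str]],
    query: str,
    input_label: str = "Tweet",
    output_label: str = "Sentiment",
    separator: str = "###",
) -> str:
    """Join labeled examples and leave the final answer blank for the model to complete."""
    blocks = [f'{input_label}: "{text}"\n{output_label}: {label}' for text, label in examples]
    blocks.append(f'{input_label}: "{query}"\n{output_label}:')
    return f"\n{separator}\n".join(blocks)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        examples=[
            ("The battery died after an hour.", "Negative"),
            ("Setup took two minutes and it just works.", "Positive"),
        ],
        query="The new release is much faster.",
    )
    print(prompt)
```

The resulting string can be sent as the `inputs` field of the request; a small `max_new_tokens` value keeps the base model from continuing with further invented examples.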

Mistral 7B Instruct

The instruction-tuned version of Mistral accepts formatted instructions where conversation roles must start with a user prompt and alternate between user and assistant. A simple user prompt may look like the following:

A multi-turn prompt would look like the following:

This pattern repeats for however many turns are in the conversation.
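The alternating pattern can be sketched as a small formatter. This assumes the publicly documented Mistral Instruct layout, in which each user turn is wrapped in `[INST] ... [/INST]` and each completed exchange is enclosed in `<s> ... </s>`; the function name and the message dictionary shape are hypothetical, and the exact template should be checked against the model card.

```python
from typing import Dict, List


def format_instructions(messages: List[Dict[str, str]]) -> str:
    """Format alternating user/assistant messages, ending with a user turn, as one prompt."""
    if len(messages) % 2 == 0:
        raise ValueError("expected an odd number of messages ending with a user turn")
    for index, message in enumerate(messages):
        expected_role = "user" if index % 2 == 0 else "assistant"
        if message["role"] != expected_role:
            raise ValueError(f"message {index} must have role {expected_role!r}")

    parts: List[str] = []
    # Completed exchanges: a user prompt followed by the assistant response.
    for user, assistant in zip(messages[::2], messages[1::2]):
        parts.append(
            f"<s>[INST] {user['content'].strip()} [/INST] {assistant['content'].strip()}</s>"
        )
    # Final user prompt, left open for the model to answer.
    parts.append(f"<s>[INST] {messages[-1]['content'].strip()} [/INST]")
    return "".join(parts)


if __name__ == "__main__":
    print(format_instructions([{"role": "user", "content": "What is SageMaker JumpStart?"}]))
    print(
        format_instructions(
            [
                {"role": "user", "content": "Name a fruit."},
                {"role": "assistant", "content": "An apple."},
                {"role": "user", "content": "Name another one."},
            ]
        )
    )
```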

In the following sections, we explore some examples using the Mistral 7B Instruct model.

Knowledge retrieval

The following is an example of knowledge retrieval:

Large context question answering

To demonstrate how to use this model to support large input context lengths, the following example embeds a passage, titled “Rats” by Robert Sullivan (reference), from the MCAS Grade 10 English Language Arts Reading Comprehension test into the input prompt instruction and asks the model a directed question about the text:

Mathematics and reasoning

The Mistral models also report strengths in mathematics accuracy. Mistral can provide comprehension such as the following math logic:

Coding

The following is an example of a coding prompt:

Clean up

After you’re done running the notebook, make sure to delete all the resources that you created in the process so your billing is stopped. Use the following code:
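A minimal sketch of the cleanup step, assuming `predictor` is the SageMaker predictor returned at deployment; `clean_up` is a hypothetical wrapper around the predictor's `delete_model` and `delete_endpoint` methods.

```python
from typing import Any


def clean_up(predictor: Any) -> None:
    """Delete the model and the endpoint behind a SageMaker predictor."""
    # Delete the model first: the predictor looks up the model through the
    # endpoint configuration, which is removed along with the endpoint.
    predictor.delete_model()
    predictor.delete_endpoint()


# Usage, given a deployed predictor:
# clean_up(predictor)
```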

Conclusion

In this post, we showed you how to get started with Mistral 7B in SageMaker Studio and deploy the model for inference. Because foundation models are pre-trained, they can help lower training and infrastructure costs and enable customization for your use case. Visit Amazon SageMaker JumpStart now to get started.

Resources

About the Authors

Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker JumpStart team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. His PhD is from Duke University and he has published papers in NeurIPS, Cell, and Neuron.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker JumpStart and helps develop machine learning algorithms. He received his PhD from University of Illinois Urbana-Champaign. He’s an active researcher in machine learning and statistical inference, and has published many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Vivek Singh is a product manager with Amazon SageMaker JumpStart. He focuses on enabling customers to onboard SageMaker JumpStart to simplify and accelerate their ML journey to build generative AI applications.

Roy Allela is a Senior AI/ML Specialist Solutions Architect at AWS based in Munich, Germany. Roy helps AWS customers, from small startups to large enterprises, train and deploy large language models efficiently on AWS. Roy is passionate about computational optimization problems and improving the performance of AI workloads.
