AI Researchers from ByteDance and the King Abdullah University of Science and Technology Present a Novel Framework for Animating Hair Blowing in Still Portrait Images

Hair is one of the most remarkable features of the human body, impressing with its dynamic qualities that bring scenes to life. Studies have consistently demonstrated that dynamic elements have a stronger appeal and fascination than static images. Social media platforms like TikTok and Instagram see vast numbers of portrait photos shared every day as people aspire to make their pictures both appealing and artistically captivating. This drive fuels researchers’ exploration into animating human hair within still images, aiming to offer a vivid and aesthetically pleasing viewing experience.

Recent advances in the field have introduced methods to infuse still images with dynamic elements, animating fluid substances such as water, smoke, and fire within the frame. Yet these approaches have largely overlooked the intricate nature of human hair in real-life photographs. This article focuses on the artistic transformation of human hair within portrait photography, which involves translating the picture into a cinemagraph.

A cinemagraph is an innovative short video format that enjoys favor among professional photographers, advertisers, and artists. It finds use in various digital media, including digital advertisements, social media posts, and landing pages. The fascination with cinemagraphs lies in their ability to merge the strengths of still images and videos. Certain areas within a cinemagraph feature subtle, repetitive motions in a short loop, while the remainder stays static. This contrast between stationary and moving elements effectively captivates the viewer’s attention.
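The "moving region plus static remainder" idea can be sketched as a per-pixel mask composite. This is only an illustration, not the paper's pipeline: the function names `composite_cinemagraph` and `loop_frame`, the grayscale list-of-lists image format, and the boolean mask are all assumptions made for the example.

```python
from typing import List

Frame = List[List[int]]   # hypothetical format: grayscale image as rows of pixel values
Mask = List[List[bool]]   # True where motion is allowed, False where the still is kept


def composite_cinemagraph(still: Frame, animated: List[Frame], mask: Mask) -> List[Frame]:
    """Build cinemagraph frames: animated pixels inside the mask, still pixels elsewhere."""
    height, width = len(still), len(still[0])
    result = []
    for frame in animated:
        result.append([
            [frame[y][x] if mask[y][x] else still[y][x] for x in range(width)]
            for y in range(height)
        ])
    return result


def loop_frame(frames: List[Frame], t: int) -> Frame:
    """Return the frame shown at time step t when the clip repeats in a short loop."""
    return frames[t % len(frames)]
```

With a mask covering only the hair region, every output frame matches the original photo outside that region, which is what keeps the rest of a cinemagraph static.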

By transforming a portrait photo into a cinemagraph, complete with subtle hair motions, the idea is to enhance the photo’s allure without detracting from the static content, creating a more compelling and engaging visual experience.

Existing techniques and commercial software have been developed to generate high-fidelity cinemagraphs from input videos by selectively freezing certain video regions. Unfortunately, these tools are not suitable for processing still images. In contrast, there has been growing interest in still-image animation. Most of these approaches have focused on animating fluid elements such as clouds, water, and smoke. However, the dynamic behavior of hair, which is composed of fibrous materials, presents a distinct challenge compared to fluid elements. Unlike fluid element animation, which has received extensive attention, the animation of human hair in real portrait photos has been relatively unexplored.

Animating hair in a static portrait photo is challenging due to the intricate complexity of hair structures and dynamics. Unlike the smooth surfaces of the human body or face, hair comprises hundreds of thousands of individual components, resulting in complex and non-uniform structures. This complexity leads to intricate motion patterns within the hair, including interactions with the head. While there are specialized techniques for modeling hair, such as using dense camera arrays and high-speed cameras, they are often costly and time-consuming, limiting their practicality for real-world hair animation.

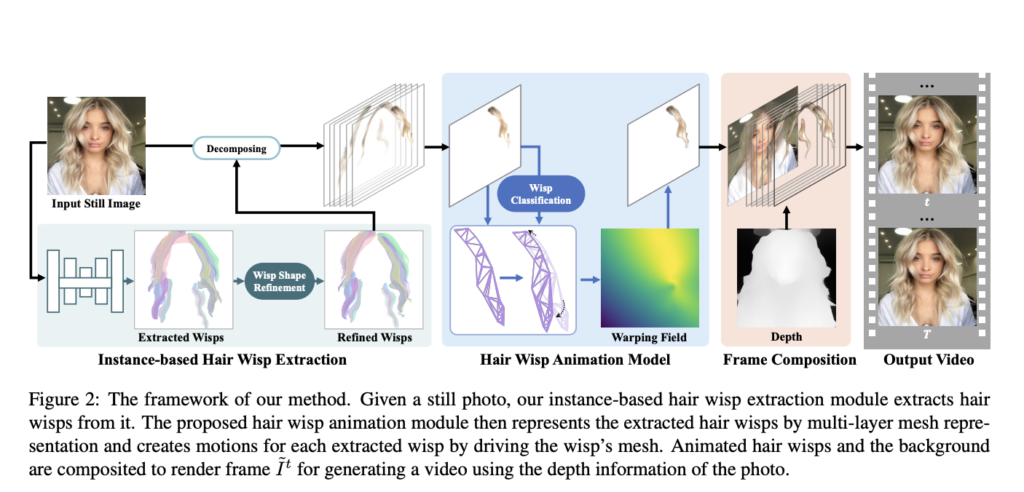

The paper presented in this article introduces a novel AI method for automatically animating hair within a static portrait photo, eliminating the need for user intervention or complex hardware setups. The insight behind this approach lies in the human visual system’s reduced sensitivity to individual hair strands and their motions in real portrait videos, compared to synthetic strands within a digitalized human in a virtual environment. The proposed solution is to animate “hair wisps” instead of individual strands, creating a visually pleasing viewing experience. To achieve this, the paper introduces a hair wisp animation module, enabling an efficient and automated solution. An overview of this framework is illustrated below.

The key challenge in this context is how to extract these hair wisps. While related work, such as hair modeling, has focused on hair segmentation, these approaches primarily target the extraction of the entire hair region, which differs from the objective here. To extract meaningful hair wisps, the researchers frame hair wisp extraction as an instance segmentation problem, where an individual segment within a still image corresponds to a hair wisp. By adopting this problem definition, the researchers leverage instance segmentation networks to facilitate the extraction of hair wisps. This not only simplifies the hair wisp extraction problem but also enables the use of advanced networks for effective extraction. Additionally, the paper presents the creation of a hair wisp dataset containing real portrait photos to train the networks, along with a semi-annotation scheme to produce ground-truth annotations for the identified hair wisps. Some sample results from the paper are reported in the figure below, compared with state-of-the-art techniques.
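To make the instance segmentation framing concrete, the sketch below shows one plausible form of the output: a label map in which each positive integer marks one hair wisp, split into one binary mask per wisp. It does not reproduce the paper's network or dataset. The `split_wisps` helper and the convention that label 0 means "not hair" are assumptions for illustration.

```python
from typing import Dict, List

LabelMap = List[List[int]]  # hypothetical format: 0 = background, k > 0 = wisp instance k


def split_wisps(label_map: LabelMap) -> Dict[int, List[List[bool]]]:
    """Turn an instance label map into one binary mask per hair wisp."""
    # Collect the distinct wisp ids, ignoring the assumed background label 0.
    wisp_ids = sorted({value for row in label_map for value in row if value != 0})
    # Each mask is True exactly where the label map carries that wisp's id.
    return {
        wisp_id: [[value == wisp_id for value in row] for row in label_map]
        for wisp_id in wisp_ids
    }
```

Whole-region hair segmentation would yield a single mask for all hair pixels, whereas this representation keeps each wisp separate so that it can be animated on its own.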

This was the summary of a novel AI framework designed to transform still portraits into cinemagraphs by animating hair wisps with pleasing motions and without noticeable artifacts. If you are interested and want to learn more about it, please feel free to refer to the links cited below.

Check out the Paper and Project Page. All credit for this research goes to the researchers on this project.


Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He is currently working in the Christian Doppler Laboratory ATHENA, and his research interests include adaptive video streaming, immersive media, machine learning, and QoS/QoE evaluation.