Unify Batch and ML Programs with Function/Coaching/Inference Pipelines

Sponsored Content material

By Jim Dowling, Co-Founder & CEO, Hopsworks

This text introduces a unified architectural sample for constructing each Batch and Actual-Time machine studying (ML) Programs. We name it the FTI (Function, Coaching, Inference) pipeline structure. FTI pipelines break up the monolithic ML pipeline into 3 impartial pipelines, every with clearly outlined inputs and outputs, the place every pipeline might be developed, examined, and operated independently. For a historic perspective on the evolution of the FTI Pipeline structure, you possibly can learn the complete in-depth mental map for MLOps article.

In recent times, Machine Studying Operations (MLOps) has gained mindshare as a growth course of, impressed by DevOps rules, that introduces automated testing, versioning of ML property, and operational monitoring to allow ML systems to be incrementally developed and deployed. Nevertheless, present MLOps approaches typically current a fancy and overwhelming panorama, leaving many groups struggling to navigate the trail from mannequin growth to manufacturing. On this article, we introduce a contemporary perspective on constructing ML techniques by way of the idea of FTI pipelines. The FTI structure has empowered numerous builders to create strong ML techniques with ease, lowering cognitive load, and fostering higher collaboration throughout groups. We delve into the core rules of FTI pipelines and discover their purposes in each batch and real-time ML techniques.

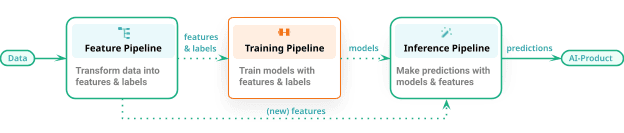

The FTI method for this architectural sample has been used to construct a whole bunch of ML techniques. The sample is as follows – a ML system consists of three independently developed and operated ML pipelines:

- a function pipeline that takes as enter uncooked information that it transforms into options (and labels)

- a coaching pipeline that takes as enter options (and labels) and outputs a skilled mannequin, and

- an inference pipeline that takes new function information and a skilled mannequin and makes predictions.

On this FTI, there isn’t any single ML pipeline. The confusion about what the ML pipeline does (does it function engineer and practice fashions or additionally do inference or simply a kind of?) disappears. The FTI structure applies to each batch ML techniques and real-time ML techniques.

Determine 1: The Function/Coaching/Inference (FTI) pipelines for constructing ML Programs

The function pipeline could be a batch program or a streaming program. The coaching pipeline can output something from a easy XGBoost mannequin to a parameter-efficient fine-tuned (PEFT) large-language mannequin (LLM), skilled on many GPUs. Lastly, the inference pipeline could be a batch program that produces a batch of predictions to an internet service that takes requests from shoppers and returns predictions in real-time.

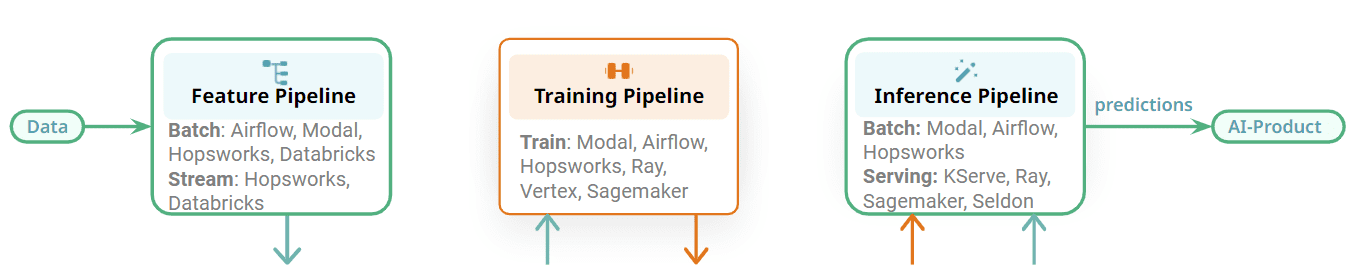

One main benefit of FTI pipelines is that it’s an open structure. You should utilize Python, Java or SQL. If it is advisable do function engineering on massive volumes of information, you need to use Spark or DBT or Beam. Coaching will sometimes be in Python utilizing some ML framework, and batch inference could possibly be in Python or Spark, relying in your information volumes. On-line inference pipelines are, nevertheless, practically at all times in Python as fashions are sometimes coaching with Python.

Determine 2: Select the perfect orchestrator on your ML pipeline/service.

The FTI pipelines are additionally modular and there’s a clear interface between the totally different levels. Every FTI pipeline might be operated independently. In comparison with the monolithic ML pipeline, totally different groups can now be liable for growing and working every pipeline. The affect of that is that for orchestration, for instance, one staff may use one orchestrator for a function pipeline and a distinct staff may use a distinct orchestrator for the batch inference pipeline. Alternatively, you would use the identical orchestrator for the three totally different FTI pipelines for a batch ML system. Some examples of orchestrators that can be utilized in ML techniques embody general-purpose, feature-rich orchestrators, equivalent to Airflow, or light-weight orchestrators, equivalent to Modal, or managed orchestrators provided by function platforms.

A few of the FTI pipelines, nevertheless, won’t want orchestration. Coaching pipelines might be run on-demand, when a brand new mannequin is required. Streaming function pipelines and on-line inference pipelines run constantly as providers, and don’t require orchestration. Flink, Spark Streaming, and Beam are run as providers on platforms equivalent to Kubernetes, Databricks, or Hopsworks. On-line inference pipelines are deployed with their mannequin on mannequin serving platforms, equivalent to KServe (Hopsworks), Seldon, Sagemaker, and Ray. The principle takeaway right here is that the ML pipelines are modular with clear interfaces, enabling you to decide on the perfect expertise for working your FTI pipelines.

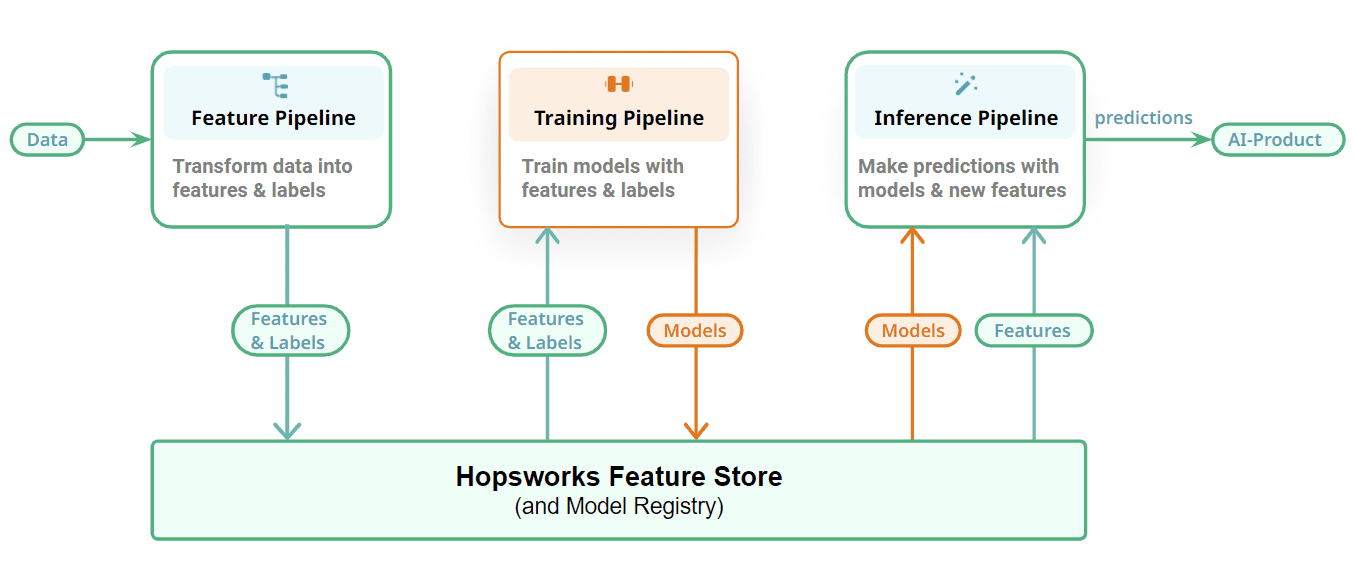

Determine 3: Join your ML pipelines with a Function Retailer and Mannequin Registry

Lastly, we present how we join our FTI pipelines along with a stateful layer to retailer the ML artifacts – options, coaching/check information, and fashions. Function pipelines retailer their output, options, as DataFrames within the function retailer. Incremental tables retailer every new replace/append/delete as separate commits utilizing a desk format (we use Apache Hudi in Hopsworks). Coaching pipelines learn point-in-time constant snapshots of coaching information from Hopsworks to coach fashions with and output the skilled mannequin to a mannequin registry. You may embody your favourite mannequin registry right here, however we’re biased in the direction of Hopsworks’ mannequin registry. Batch inference pipelines additionally learn point-in-time constant snapshots of inference information from the function retailer, and produce predictions by making use of the mannequin to the inference information. On-line inference pipelines compute on-demand options and skim precomputed options from the function retailer to construct a feature vector that’s used to make predictions in response to requests by on-line purposes/providers.

Function Pipelines

Function pipelines learn information from information sources, compute options and ingest them to the function retailer. A few of the questions that have to be answered for any given function pipeline embody:

- Is the function pipeline batch or streaming?

- Are function ingestions incremental or full-load operations?

- What framework/language is used to implement the function pipeline?

- Is there information validation carried out on the function information earlier than ingestion?

- What orchestrator is used to schedule the function pipeline?

- If some options have already been computed by an upstream system (e.g., an information warehouse), how do you stop duplicating that information, and solely learn these options when creating coaching or batch inference information?

Coaching Pipelines

In coaching pipelines a few of the particulars that may be found on double-clicking are:

- What framework/language is used to implement the coaching pipeline?

- What experiment monitoring platform is used?

- Is the coaching pipeline run on a schedule (in that case, what orchestrator is used), or is it run on-demand (e.g., in response to efficiency degradation of a mannequin)?

- Are GPUs wanted for coaching? If sure, how are they allotted to coaching pipelines?

- What function encoding/scaling is finished on which options? (We sometimes retailer function information unencoded within the function retailer, in order that it may be used for EDA (exploratory information evaluation). Encoding/scaling is carried out in a constant method coaching and inference pipelines). Examples of function encoding strategies embody scikit-learn pipelines or declarative transformations in feature views (Hopsworks).

- What mannequin analysis and validation course of is used?

- What mannequin registry is used to retailer the skilled fashions?

Inference Pipelines

Inference pipelines are as various because the purposes they AI-enable. In inference pipelines, a few of the particulars that may be found on double-clicking are:

- What’s the prediction client – is it a dashboard, on-line utility – and the way does it devour predictions?

- Is it a batch or on-line inference pipeline?

- What sort of function encoding/scaling is finished on which options?

- For a batch inference pipeline, what framework/language is used? What orchestrator is used to run it on a schedule? What sink is used to devour the predictions produced?

- For an internet inference pipeline, what mannequin serving server is used to host the deployed mannequin? How is the net inference pipeline carried out – as a predictor class or with a separate transformer step? Are GPUs wanted for inference? Is there a SLA (service-level agreements) for a way lengthy it takes to answer prediction requests?

The prevailing mantra is that MLOps is about automating steady integration (CI), steady supply (CD), and steady coaching (CT) for ML techniques. However that’s too summary for a lot of builders. MLOps is admittedly about continuous growth of ML-enabled merchandise that evolve over time. The out there enter information (options) adjustments over time, the goal you are attempting to foretell adjustments over time. That you must make adjustments to the supply code, and also you wish to be certain that any adjustments you make don’t break your ML system or degrade its efficiency. And also you wish to speed up the time required to make these adjustments and check earlier than these adjustments are routinely deployed to manufacturing.

So, from our perspective, a extra pithy definition of MLOps that permits ML Programs to be safely developed over time is that it requires, at a minimal, automated testing, versioning, and monitoring of ML artifacts. MLOps is about automated testing, versioning, and monitoring of ML artifacts.

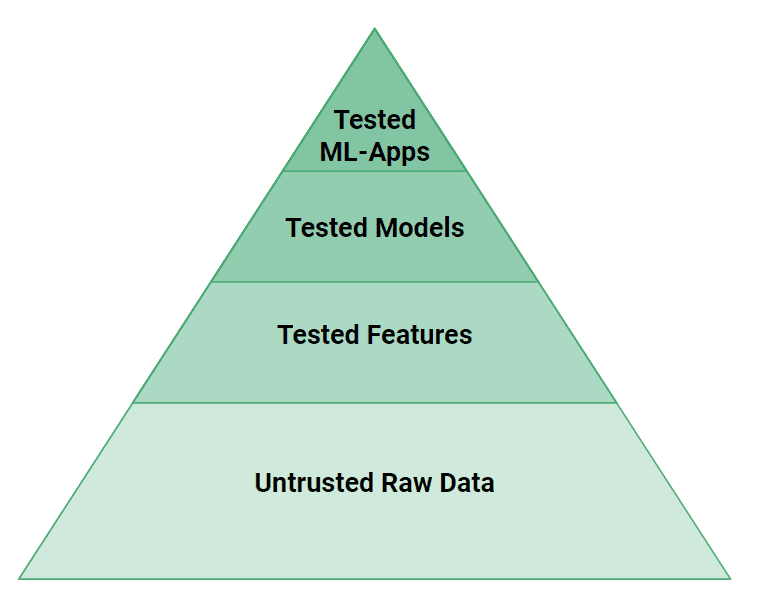

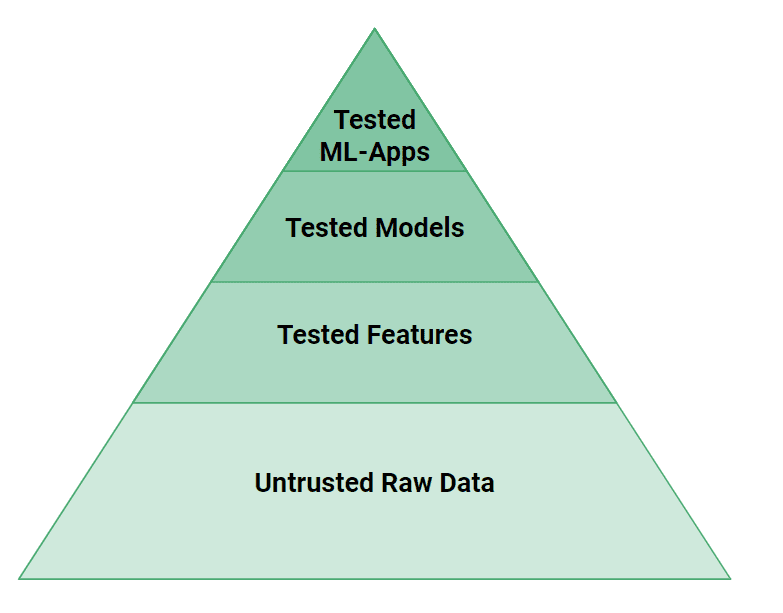

Determine 4: The testing pyramid for ML Artifacts

In determine 4, we will see that extra ranges of testing are wanted in ML techniques than in conventional software program techniques. Small bugs in information or code can simply trigger a ML mannequin to make incorrect predictions. From a testing perspective, if net purposes are propeller-driven airplanes, ML techniques are jet-engines. It takes vital engineering effort to check and validate ML Programs to make them secure!

At a excessive degree, we have to check each the source-code and information for ML Programs. The options created by function pipelines can have their logic examined with unit assessments and their enter information checked with information validation assessments (e.g., Great Expectations). The fashions have to be examined for efficiency, but additionally for a scarcity of bias in opposition to recognized teams of weak customers. Lastly, on the prime of the pyramid, ML-Programs want to check their efficiency with A/B assessments earlier than they will change to make use of a brand new mannequin.

Lastly, we have to model ML artifacts in order that the operators of ML techniques can safely replace and rollback variations of deployed fashions. System help for the push-button improve/downgrade of fashions is among the holy grails of MLOps. However fashions want options to make predictions, so mannequin variations are related to function variations and fashions and options have to be upgraded/downgraded synchronously. Fortunately, you don’t want a yr in rotation as a Google SRE to simply improve/downgrade fashions – platform help for versioned ML artifacts ought to make this a simple ML system upkeep operation.

Here’s a pattern of a few of the open-source ML techniques out there constructed on the FTI structure. They’ve been constructed largely by practitioners and college students.

Batch ML Programs

Actual-Time ML System

This text introduces the FTI pipeline structure for MLOps, which has empowered quite a few builders to effectively create and preserve ML techniques. Based mostly on our expertise, this structure considerably reduces the cognitive load related to designing and explaining ML techniques, particularly when in comparison with conventional MLOps approaches. In company environments, it fosters enhanced inter-team communication by establishing clear interfaces, thereby selling collaboration and expediting the event of high-quality ML techniques. Whereas it simplifies the overarching complexity, it additionally permits for in-depth exploration of the person pipelines. Our aim for the FTI pipeline structure is to facilitate improved teamwork and faster mannequin deployment, in the end expediting the societal transformation pushed by AI.

Learn extra in regards to the basic rules and parts that represent the FTI Pipelines structure in our full in-depth mental map for MLOps.