Reinforcement Studying: an Straightforward Introduction to Worth Iteration | by Carl Bettosi | Sep, 2023

Fixing the instance utilizing Worth Iteration

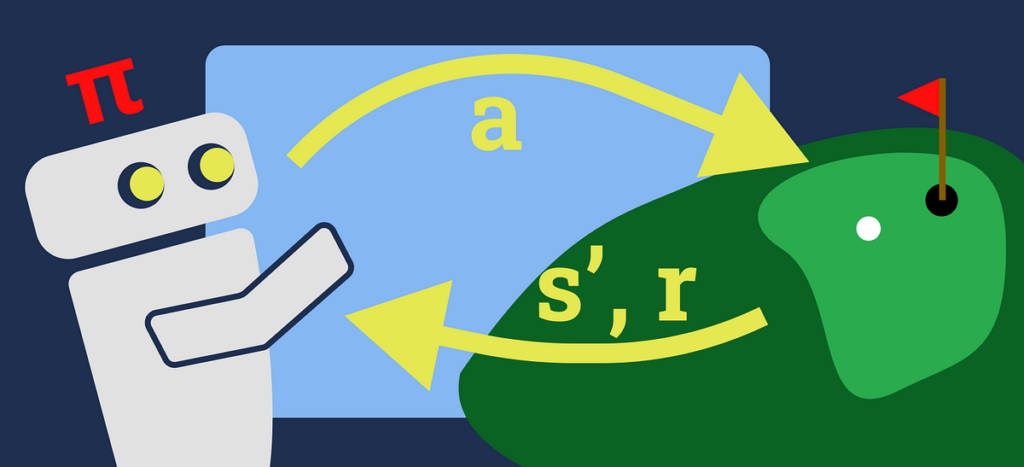

VI ought to make much more sense as soon as we full an instance downside, so let’s get again to our golf MDP. We have now formalised this as an MDP however at the moment, the agent doesn’t know the very best technique when enjoying golf, so let’s remedy the golf MDP utilizing VI.

We’ll begin by defining our mannequin parameters utilizing pretty customary values:

γ = 0.9 // low cost issue

θ = 0.01 // convergence threshold

We are going to then initialise our price desk to 0 for states in S:

// worth deskV(s0) = 0

V(s1) = 0

V(s2) = 0

We are able to now begin within the outer loop:

Δ = 0

And three passes of the interior loop for every state in S:

// Bellman replace rule

// V(s) ← maxₐ Σₛ′, ᵣ p(s′, r|s, a) * [r + γV(s′)]//******************* state s0 *******************//

v = 0

// we've got solely checked out one motion right here as just one is on the market from s0

// we all know that the others will not be doable and would due to this fact sum to 0

V(s0) = max[T(s0 | s0, hit to green) * (R(s0, hit to green, s0) + γ * V(s0)) +

T(s1 | s0, hit to green) * (R(s0, hit to green, s1) + γ * V(s1))]

V(s0) = max[0.1 * (0 + 0.9 * 0) +

0.9 * (0 + 0.9 * 0)]

V(s0) = max[0] = 0

// Delta replace rule

// Δ ← max(Δ,| v - V(s)|)

Δ = max[Δ, |v - v(s0)|] = max[0, |0 - 0|] = 0

//******************* state s1 *******************//

v = 0

// there are 2 obtainable actions right here

V(s1) = max[T(s0 | s1, hit to fairway) * (R(s1, hit to fairway, s0) + γ * V(s0)) +

T(s1 | s1, hit to fairway) * (R(s1, hit to fairway, s1) + γ * V(s1)),

T(s1 | s1, hit in hole) * (R(s1, hit in hole, s1) + γ * V(s1)) +

T(s2 | s1, hit in hole) * (R(s1, hit in hole, s2) + γ * V(s2))]

V(s1) = max[0.9 * (0 + 0.9 * 0) +

0.1 * (0 + 0.9 * 0),

0.1 * (0 + 0.9 * 0) +

0.9 * (10 + 0.9 * 0)]

V(s1) = max[0, 9] = 9

Δ = max[Δ, |v - v(s1)|] = max[0, |0 - 9|] = 9

//******************* state s2 *******************//

// terminal state with no actions

This offers us the next replace to our price desk:

V(s0) = 0

V(s1) = 9

V(s2) = 0

We don’t want to fret about s2 as this can be a terminal state, which means no actions are doable right here.

We now get away the interior loop and proceed the outer loop, performing a convergence verify on:

Δ < θ = 9 < 0.01 = False

Since we’ve got not converged, we do a second iteration of the outer loop:

Δ = 0

And one other 3 passes of the interior loop, utilizing the up to date worth desk:

//******************* state s0 *******************//v = 0

V(s0) = max[T(s0 | s0, hit to green) * (R(s0, hit to green, s0) + γ * V(s0)) +

T(s1 | s0, hit to green) * (R(s0, hit to green, s1) + γ * V(s1))]

V(s0) = max[0.1 * (0 + 0.9 * 0) +

0.9 * (0 + 0.9 * 9)]

V(s0) = max[7.29] = 7.29

Δ = max[Δ, |v - v(s0)|] = max[0, |0 - 7.29|] = 7.29

//******************* state s1 *******************//

v = 9

V(s1) = max[T(s0 | s1, hit to fairway) * (R(s1, hit to fairway, s0) + γ * V(s0)) +

T(s1 | s1, hit to fairway) * (R(s1, hit to fairway, s1) + γ * V(s1)),

T(s1 | s1, hit in hole) * (R(s1, hit in hole, s1) + γ * V(s1)) +

T(s2 | s1, hit in hole) * (R(s1, hit in hole, s2) + γ * V(s2))]

V(s1) = max[0.9 * (0 + 0.9 * 7.29) +

0.1 * (0 + 0.9 * 9),

0.1 * (0 + 0.9 * 9) +

0.9 * (10 + 0.9 * 0)]

V(s1) = max(6.7149, 9.81) = 9.81

Δ = max[Δ, |v - v(s1)|] = max[7.29, |9 - 9.81|] = 7.29

//******************* state s2 *******************//

// terminal state with no actions

On the finish of the second iteration, our values are:

V(s0) = 7.29

V(s1) = 9.81

V(s2) = 0

Verify convergence as soon as once more:

Δ < θ = 7.29 < 0.01 = False

Nonetheless no convergence, so we proceed the identical course of as above till Δ < θ. I gained’t present all of the calculations, the above two are sufficient to know the method.

After 6 iterations, our coverage converges. That is our values and convergence fee as they alter over every iteration:

Iteration V(s0) V(s1) V(s2) Δ Converged

1 0 9 0 9 False

2 7.29 9.81 0 7.29 False

3 8.6022 9.8829 0 1.3122 False

4 8.779447 9.889461 0 0.177247 False

5 8.80061364 9.89005149 0 0.02116664 False

6 8.8029969345 9.8901046341 0 0.0023832945 True

Now we will extract our coverage:

// Coverage extraction rule

// π(s) = argmaxₐ Σₛ′, ᵣ p(s′, r|s, a) * [r + γV(s′)]//******************* state s0 *******************//

// we all know there is just one doable motion from s0, however let's simply do it anyway

π(s0) = argmax[T(s0 | s0, hit to green) * (R(s0, hit to green, s0) + γ * V(s0)) +

T(s1 | s0, hit to green) * (R(s0, hit to green, s1) + γ * V(s1))

π(s0) = argmax[0.1 * (0 + 0.9 * 8.8029969345) +

0.9 * (0 + 0.9 * 9.8901046341)]

π(s0) = argmax[8.80325447773]

π(s0) = hit to inexperienced

//******************* state s1 *******************//

π(s1) = argmax[T(s0 | s1, hit to fairway) * (R(s1, hit to fairway, s0) + γ * V(s0)) +

T(s1 | s1, hit to fairway) * (R(s1, hit to fairway, s1) + γ * V(s1)),

T(s1 | s1, hit in hole) * (R(s1, hit in hole, s1) + γ * V(s1)) +

T(s2 | s1, hit in hole) * (R(s1, hit in hole, s2) + γ * V(s2))]

V(s1) = max[0.9 * (0 + 0.9 * 8.8029969345) +

0.1 * (0 + 0.9 * 9.8901046341),

0.1 * (0 + 0.9 * 9.8901046341) +

0.9 * (10 + 0.9 * 0)]

π(s1) = argmax[8.02053693401, 9.89010941707]

π(s1) = hit in gap

Our ultimate coverage is:

π(s0) = hit to inexperienced

π(s1) = hit to gap

π(s2) = terminal state (no motion)

So, when our agent is within the Ball on fairway state (s0), the very best motion is to hit to inexperienced. This appears fairly apparent since that’s the solely obtainable motion. Nonetheless, in s1, the place there are two doable actions, our coverage has realized to hit in gap. We are able to now give this realized coverage to different brokers who need to play golf!

And there you may have it! We have now simply solved a quite simple RL downside utilizing Worth Iteration.