Perception Fairness – Google Research Blog

Google’s Responsible AI research is built on a foundation of collaboration: between teams with diverse backgrounds and expertise, between researchers and product developers, and ultimately with the community at large. The Perception Fairness team drives progress by combining deep subject-matter expertise in both computer vision and machine learning (ML) fairness with direct connections to the researchers building the perception systems that power products across Google and beyond. Together, we are working to intentionally design our systems to be inclusive from the ground up, guided by Google’s AI Principles.

Perception Fairness research spans the design, development, and deployment of advanced multimodal models, including the latest foundation and generative models powering Google’s products.

Our team’s mission is to advance the frontiers of fairness and inclusion in multimodal ML systems, especially those related to foundation models and generative AI. This encompasses core technology components including classification, localization, captioning, retrieval, visual question answering, text-to-image or text-to-video generation, and generative image and video editing. We believe that fairness and inclusion can and should be top-line performance goals for these applications. Our research is focused on unlocking novel analyses and mitigations that enable us to proactively design for these objectives throughout the development cycle. We answer core questions, such as: How can we use ML to responsibly and faithfully model human perception of demographic, cultural, and social identities in order to promote fairness and inclusion? What kinds of system biases (e.g., underperforming on images of people with certain skin tones) can we measure, and how can we use these metrics to design better algorithms? How can we build more inclusive algorithms and systems, and react quickly when failures occur?

Measuring representation of people in media

ML systems that can edit, curate, or create images or videos can affect anyone exposed to their outputs, shaping or reinforcing the beliefs of viewers around the world. Research to reduce representational harms, such as reinforcing stereotypes or denigrating or erasing groups of people, requires a deep understanding of both the content and the societal context. It hinges on how different observers perceive themselves and their communities, and how they perceive the way others are represented. There is considerable debate in the field regarding which social categories should be studied with computational tools and how to do so responsibly. Our research focuses on working toward scalable solutions that are informed by sociology and social psychology, are aligned with human perception, embrace the subjective nature of the problem, and enable nuanced measurement and mitigation. One example is our research on differences in human perception and annotation of skin tone in images using the Monk Skin Tone scale.
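To make the annotation question concrete, here is a minimal Python sketch (not the team's actual method) of one way to surface disagreement when several annotators rate the same image on the 10-point Monk Skin Tone (MST) scale. The function name `summarize_mst_annotations`, the input layout, and the disagreement threshold are hypothetical choices for illustration.

```python
from statistics import median

# The Monk Skin Tone (MST) scale has 10 points, labeled here 1 through 10.
MST_MIN, MST_MAX = 1, 10


def summarize_mst_annotations(ratings_by_image, spread_threshold=2):
    """Summarize per-image MST ratings collected from several annotators.

    ratings_by_image: dict mapping an image id to a list of integer MST ratings.
    spread_threshold: hypothetical cutoff; images whose max-min spread exceeds
    it are flagged for review.

    Returns a dict: image id -> (median rating, spread, flagged).
    """
    summary = {}
    for image_id, ratings in ratings_by_image.items():
        if not ratings:
            raise ValueError(f"no ratings for {image_id!r}")
        if any(not MST_MIN <= r <= MST_MAX for r in ratings):
            raise ValueError(f"rating outside the MST range for {image_id!r}")
        spread = max(ratings) - min(ratings)
        summary[image_id] = (median(ratings), spread, spread > spread_threshold)
    return summary


ratings = {"img_a": [3, 3, 4], "img_b": [2, 5, 6]}
print(summarize_mst_annotations(ratings))
# {'img_a': (3, 1, False), 'img_b': (5, 4, True)}
```

A flagged image is not necessarily an annotation error: a wide spread can reflect genuinely different perceptions, which is exactly the subjectivity that scalable measurement needs to account for.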

Our tools are also used to study representation in large-scale content collections. Through our Media Understanding for Social Exploration (MUSE) project, we have partnered with academic researchers, nonprofit organizations, and major consumer brands to understand patterns in mainstream media and advertising content. We first published this work in 2017, with a co-authored study analyzing gender equity in Hollywood movies. Since then, we have increased the scale and depth of our analyses. In 2019, we released findings based on over 2.7 million YouTube advertisements. In the latest study, we examine representation across intersections of perceived gender presentation, perceived age, and skin tone in over twelve years of popular U.S. television shows. These studies provide insights for content creators and advertisers and further inform our own research.
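Studies like these ultimately aggregate per-face signals into representation shares. The sketch below assumes a hypothetical upstream pipeline that emits one tuple of perceived attributes (gender presentation, age bucket, MST bucket) per face detected in a sampled frame, and computes each intersectional group's share of all detections. It illustrates only the aggregation step; the function name and label values are made up, and this is not the MUSE pipeline.

```python
from collections import Counter


def representation_shares(detections):
    """Share of face detections per intersectional group.

    detections: iterable of (perceived_gender_presentation, perceived_age_bucket,
    mst_bucket) tuples, one per face detected in a sampled frame. Labels come
    from a hypothetical upstream pipeline.

    Returns a dict: group tuple -> fraction of all detections.
    """
    counts = Counter(tuple(d) for d in detections)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items()}


# Made-up labels purely for illustration.
dets = [("feminine", "18-30", "MST 1-3")] * 3 + [("masculine", "31-45", "MST 4-6")]
print(representation_shares(dets))
```

Assuming frames are sampled at a fixed interval, each detection stands for the same slice of time, so a group's share of detections approximates its share of on-screen face time.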

|

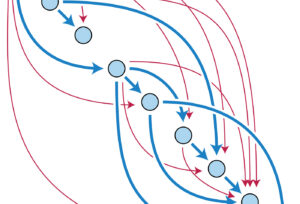

| An illustration (not precise information) of computational alerts that may be analyzed at scale to disclose representational patterns in media collections. [Video Collection / Getty Images] |

Moving forward, we are expanding the ML fairness concepts on which we focus and the domains in which they are responsibly applied. Looking beyond photorealistic images of people, we are working to develop tools that model the representation of communities and cultures in illustrations, abstract depictions of humanoid characters, and even images with no people in them at all. Finally, we need to reason about not just who is depicted, but how they are portrayed: what narrative is communicated through the surrounding image content, the accompanying text, and the broader cultural context.

Analyzing bias properties of perceptual systems

Building advanced ML systems is complex, with multiple stakeholders informing the various criteria that decide product behavior. Overall quality has historically been defined and measured using summary statistics (like overall accuracy) over a test dataset as a proxy for user experience. But not all users experience products in the same way.

Perception Fairness enables practical measurement of nuanced system behavior beyond summary statistics, and makes these metrics core to the system quality that directly informs product behaviors and launch decisions. This is often much harder than it seems. Distilling complex bias issues (e.g., disparities in performance across intersectional subgroups or instances of stereotype reinforcement) into a small number of metrics without losing important nuance is extremely challenging. Another challenge is balancing the interplay between fairness metrics and other product metrics (e.g., user satisfaction, accuracy, latency), which are often framed as being in conflict even though they are compatible. It is common for researchers to describe their work as optimizing an “accuracy-fairness” tradeoff when, in reality, widespread user satisfaction is aligned with meeting fairness and inclusion objectives.
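As an illustration of distilling subgroup behavior into a few numbers, the following sketch computes overall accuracy alongside per-slice accuracy, the worst slice, and the largest gap between slices. Slice keys can be tuples of attributes to capture intersections. The function name and metric choices are assumptions for illustration, not a description of Google's internal tooling.

```python
from collections import defaultdict


def sliced_accuracy(examples):
    """Overall and per-slice accuracy for (slice_key, label, prediction) triples.

    slice_key can be a tuple of attributes to represent an intersectional subgroup.
    Returns (overall, per_slice, worst_slice_accuracy, max_gap).
    """
    correct = defaultdict(int)
    count = defaultdict(int)
    for slice_key, label, prediction in examples:
        count[slice_key] += 1
        correct[slice_key] += int(label == prediction)
    if not count:
        raise ValueError("no examples")
    per_slice = {k: correct[k] / count[k] for k in count}
    overall = sum(correct.values()) / sum(count.values())
    worst = min(per_slice.values())
    max_gap = max(per_slice.values()) - worst
    return overall, per_slice, worst, max_gap


# Hypothetical toy data: 9/10 correct in one slice, 1/2 in another.
examples = ([(("group_a", "age_1"), 1, 1)] * 9 + [(("group_a", "age_1"), 1, 0)]
            + [(("group_b", "age_2"), 1, 1), (("group_b", "age_2"), 1, 0)])
overall, per_slice, worst, max_gap = sliced_accuracy(examples)
print(f"overall={overall:.3f} worst={worst:.3f} gap={max_gap:.3f}")
# overall=0.833 worst=0.500 gap=0.400
```

In this toy case, roughly 83% overall accuracy hides a slice that is right only half the time, which is the kind of nuance a single summary statistic loses.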

To these ends, our team focuses on two broad research directions. The first is democratizing access to well-understood and widely applicable fairness analysis tooling, engaging partner organizations in adopting it into product workflows, and informing leadership across the company in interpreting results. This work includes developing broad benchmarks and curating widely useful, high-quality test datasets and tooling centered around techniques such as sliced analysis and counterfactual testing, often building on the core representation signals work described earlier. The second is advancing novel approaches to fairness analytics, including partnering with product efforts that may result in breakthrough findings or inform launch strategy.
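Counterfactual testing compares a model's output on an input with its output on a minimally edited copy that differs only in a sensitive attribute. Below is a minimal sketch of the scoring step, assuming such pairs already exist (producing faithful counterfactual images is the hard part and is not shown). The helper `counterfactual_flip_rate` and the toy model are hypothetical.

```python
from typing import Callable, Hashable, Iterable, Tuple, TypeVar

X = TypeVar("X")


def counterfactual_flip_rate(model: Callable[[X], Hashable],
                             pairs: Iterable[Tuple[X, X]]) -> float:
    """Fraction of (original, counterfactual) pairs whose predictions differ.

    Each pair is assumed to differ only in a sensitive attribute; creating such
    pairs (e.g., via controlled image edits) is outside this sketch.
    """
    total = flips = 0
    for original, counterfactual in pairs:
        total += 1
        flips += int(model(original) != model(counterfactual))
    if total == 0:
        raise ValueError("no pairs to evaluate")
    return flips / total


# Toy "model" over (feature, attribute) tuples that leaks the attribute.
def toy_model(x):
    feature, attribute = x
    return int(feature + 0.2 * attribute > 0.5)


pairs = [((0.4, 1), (0.4, 0)), ((0.9, 1), (0.9, 0))]
print(counterfactual_flip_rate(toy_model, pairs))  # 0.5
```

A flip rate near zero is the goal; any nonzero rate points to specific examples worth inspecting by hand.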

Advancing AI responsibly

Our work does not stop with analyzing model behavior. Rather, we use this as a jumping-off point for identifying algorithmic improvements in collaboration with other researchers and engineers on product teams. Over the past year we have launched upgraded components that power Search and Memories features in Google Photos, leading to more consistent performance and drastically improving robustness through added layers that keep errors from cascading through the system. We are working on improving ranking algorithms in Google Photos to diversify representation. We updated algorithms that may reinforce historical stereotypes, using additional signals responsibly, so that everyone is more likely to see themselves reflected in Search results and find what they are looking for.
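The ranking work is described only at a high level, so the following is a generic technique rather than Google's algorithm: a greedy re-ranker, a simplified relative of maximal marginal relevance, that subtracts a penalty for each item already picked from the same group. The `group` field stands in for a hypothetical representation signal, and `penalty` is an illustrative knob.

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def diversify(candidates: Sequence[Tuple[str, float, Hashable]], k: int,
              penalty: float = 0.1) -> List[str]:
    """Greedy re-ranking that trades some relevance for group coverage.

    candidates: (item_id, relevance, group) triples, where group is a
    hypothetical representation signal attached to each result.
    At each step, pick the candidate maximizing
        relevance - penalty * (items already picked from the same group).
    Ties go to the earlier candidate in the input order.
    """
    remaining = list(candidates)
    picked: List[str] = []
    group_counts: Counter = Counter()
    while remaining and len(picked) < k:
        best = max(remaining,
                   key=lambda c: c[1] - penalty * group_counts[c[2]])
        remaining.remove(best)
        picked.append(best[0])
        group_counts[best[2]] += 1
    return picked


results = [("a", 0.90, "g1"), ("b", 0.85, "g1"), ("c", 0.80, "g2")]
print(diversify(results, k=2))               # ['a', 'c']
print(diversify(results, k=2, penalty=0.0))  # ['a', 'b']
```

With `penalty=0.0` this reduces to plain relevance ordering; larger penalties trade a little relevance for broader group coverage in the top `k`.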

This work naturally carries over to the world of generative AI, where models can create collections of images or videos seeded from image and text prompts and can answer questions about images and videos. We are excited about the potential of these technologies to deliver new experiences to users and to serve as tools for furthering our own research. To enable this, we are collaborating across the research and responsible AI communities to develop guardrails that mitigate failure modes. We are leveraging our tools for understanding representation to power scalable benchmarks that can be combined with human feedback, and investing in research from pre-training through deployment to steer the models to generate higher quality, more inclusive, and more controllable output. We want these models to inspire people by producing diverse outputs, translating concepts without relying on tropes or stereotypes, and providing consistent behaviors and responses across counterfactual variations of prompts.
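One simple way to quantify whether a batch of generated outputs is diverse is the normalized Shannon entropy of a perceived attribute across the batch. The sketch assumes each output has already been labeled by a hypothetical attribute classifier; it is one possible diversity metric, not the one used for Google's models.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def normalized_entropy(labels: Iterable[Hashable], num_categories: int) -> float:
    """Normalized Shannon entropy of attribute labels over a batch of outputs.

    labels: one label per generated output, from a hypothetical attribute
    classifier. num_categories: size of the label space.
    Returns 0.0 if every output shares one label, and about 1.0 if labels are
    spread evenly across all num_categories categories.
    """
    counts = Counter(labels)
    n = sum(counts.values())
    if n == 0 or num_categories < 2 or len(counts) > num_categories:
        raise ValueError("need >= 1 label, >= 2 categories, and no extra labels")
    # H = sum_i p_i * log(1 / p_i), divided by its maximum value log(num_categories).
    h = sum((c / n) * math.log(n / c) for c in counts.values())
    return h / math.log(num_categories)


print(normalized_entropy(["a", "a", "a", "a"], 4))  # 0.0
print(normalized_entropy(["a", "b", "c", "d"], 4))
```

Comparing this score across counterfactual variants of the same prompt is one way to check that a model's behavior stays consistent, alongside the human feedback the paragraph above mentions.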

Opportunities and ongoing work

Despite over a decade of focused work, the field of perception fairness technologies still seems like a nascent and fast-growing space, rife with opportunities for breakthrough techniques. We continue to see opportunities to contribute technical advances backed by interdisciplinary scholarship. The gap between what we can measure in images and the underlying aspects of human identity and expression is large; closing it will require increasingly complex media analytics solutions. Developing data metrics that indicate true representation, situated in the appropriate context and heeding a diversity of viewpoints, remains an open challenge for us. Can we reach a point where we can reliably identify depictions of nuanced stereotypes, continually update them to reflect an ever-changing society, and discern situations in which they could be offensive? Algorithmic advances driven by human feedback point to a promising path forward.

Recent focus on AI safety and ethics in the context of modern large model development has spurred new ways of thinking about measuring systemic biases. We are exploring multiple avenues to use these models, along with recent developments in concept-based explainability methods, causal inference methods, and cutting-edge UX research, to quantify and minimize undesired biased behaviors. We look forward to tackling the challenges ahead and developing technology that is built for everybody.

Acknowledgements

We would like to thank every member of the Perception Fairness team, and all of our collaborators.