The Enigma for ChatGPT: PUMA is an AI Approach That Proposes a Fast and Secure Method for LLM Inference

Large Language Models (LLMs) have started a revolution in the artificial intelligence domain. The release of ChatGPT ignited the era of LLMs, and since then, we have seen them improve continuously. These models are made possible by massive amounts of data and have impressed us with their capabilities, from mastering language understanding to simplifying complex tasks.

Numerous alternatives to ChatGPT have been proposed, and they keep getting better, even managing to surpass ChatGPT in certain tasks. LLaMA, Claude, Falcon, and more: the new LLM models are coming for ChatGPT's throne.

However, there is no doubt that ChatGPT is still by far the most popular LLM out there. There is a good chance that your favorite AI-powered app is just a ChatGPT wrapper that handles the connection for you. But if we step back and think about it from a security perspective, is it really private and secure? OpenAI states that protecting API data privacy is something it deeply cares about, yet it is facing numerous lawsuits at the same time. Even if OpenAI works hard to protect the privacy and security of model usage, these models may be too powerful to control.

So how do we harness the power of LLMs without raising privacy and security concerns? How do we use these models' prowess without compromising sensitive data? Let us meet PUMA.

PUMA is a framework designed to enable secure and efficient evaluation of Transformer models, all while maintaining the sanctity of your data. It combines secure multi-party computation (MPC) with efficient Transformer inference.
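To make the MPC idea concrete, here is a minimal Python sketch of additive secret sharing, one of the basic building blocks of MPC. It assumes three computing parties, a 64-bit ring, and a 16-bit fixed-point encoding; these parameters and the helper names (`share`, `reconstruct`, `add_shares`) are illustrative choices, not PUMA's actual configuration or API.

```python
import secrets

# Illustrative parameters (assumptions, not PUMA's configuration):
# real numbers are encoded as fixed-point integers in the ring Z_{2^64}.
RING_BITS = 64
MOD = 1 << RING_BITS
FRAC_BITS = 16  # number of fractional bits in the fixed-point encoding


def encode(x: float) -> int:
    """Map a real number to a ring element using fixed-point encoding."""
    return round(x * (1 << FRAC_BITS)) % MOD


def decode(v: int) -> float:
    """Map a ring element back to a real number (upper half = negative)."""
    if v >= MOD // 2:
        v -= MOD
    return v / (1 << FRAC_BITS)


def share(x: float, n_parties: int = 3) -> list[int]:
    """Split x into random additive shares that sum to encode(x) mod 2^64."""
    shares = [secrets.randbelow(MOD) for _ in range(n_parties - 1)]
    last = (encode(x) - sum(shares)) % MOD
    return shares + [last]


def reconstruct(shares: list[int]) -> float:
    """Recombine all shares; any strict subset looks uniformly random."""
    return decode(sum(shares) % MOD)


def add_shares(a: list[int], b: list[int]) -> list[int]:
    """Each party adds its own two shares locally; no communication needed."""
    return [(x + y) % MOD for x, y in zip(a, b)]


total = reconstruct(add_shares(share(1.5), share(-2.25)))
```

Each party holds one share, which on its own reveals nothing about the secret. Addition is free in this scheme, whereas multiplications and non-linear functions require interaction between the parties, which is where most of the cost of secure inference comes from.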

At its core, PUMA introduces a novel technique for approximating the complex non-linear functions within Transformer models, such as GeLU and Softmax. These approximations are tailored to retain accuracy while significantly boosting efficiency. Unlike previous methods that might sacrifice performance or lead to convoluted deployment strategies, PUMA's approach offers the best of both worlds: accurate results and the efficiency that real-world applications require.

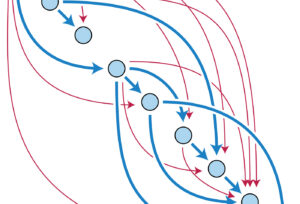

PUMA involves three key entities: the model owner, the client, and the computing parties. Each plays a crucial role in the secure inference process.

The model owner supplies the trained Transformer models, while the client contributes the input data and receives the inference results. The computing parties jointly execute secure computation protocols, ensuring that the data and model weights remain protected throughout the process. The underlying principle of PUMA's inference process is to keep both the input data and the weights confidential, preserving the privacy of every entity involved.
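The division of roles can be sketched in a few lines. In this hypothetical Python example, the model owner secret-shares a toy integer weight, the client secret-shares its input, the computing parties multiply the two shared values using a Beaver triple, and only the client recombines the result. The trusted dealer that supplies the triple, the helper names, and the plain additive sharing are simplifying assumptions for illustration; this is not PUMA's actual protocol.

```python
import secrets

MOD = 1 << 64  # illustrative ring Z_{2^64}
N_PARTIES = 3  # number of computing parties (assumption)


def share(v: int) -> list[int]:
    """Additively secret-share an integer among the computing parties."""
    parts = [secrets.randbelow(MOD) for _ in range(N_PARTIES - 1)]
    return parts + [(v - sum(parts)) % MOD]


def reveal(shares: list[int]) -> int:
    """Recombine shares into the underlying ring element."""
    return sum(shares) % MOD


def beaver_triple() -> tuple[list[int], list[int], list[int]]:
    """Hypothetical trusted dealer: shares of random a, b and c = a * b."""
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a), share(b), share(a * b % MOD)


def mul(x_sh: list[int], y_sh: list[int]) -> list[int]:
    """Multiply two shared values: x * y = c + d*b + e*a + d*e."""
    a_sh, b_sh, c_sh = beaver_triple()
    # The parties open the masked differences d = x - a and e = y - b.
    # They reveal nothing about x or y because a and b are uniformly random.
    d = reveal([(x - a) % MOD for x, a in zip(x_sh, a_sh)])
    e = reveal([(y - b) % MOD for y, b in zip(y_sh, b_sh)])
    z_sh = [(c + d * b + e * a) % MOD for a, b, c in zip(a_sh, b_sh, c_sh)]
    z_sh[0] = (z_sh[0] + d * e) % MOD  # exactly one party adds the public d*e term
    return z_sh


weight_shares = share(7)  # sent by the model owner to the computing parties
input_shares = share(6)   # sent by the client to the computing parties
output_shares = mul(weight_shares, input_shares)  # computed jointly by the parties
result = reveal(output_shares)  # only the client recombines the output
```

No single computing party ever sees the weight, the input, or the product in the clear; each works only with random-looking shares and the masked values `d` and `e`.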

Secure embedding, a fundamental part of the secure inference process, traditionally requires generating a one-hot vector from the token identifiers. PUMA instead proposes a secure embedding design that closely follows the standard workflow of Transformer models. This streamlined approach ensures that the security measures do not interfere with the model's inherent architecture, simplifying the deployment of secure models in practical applications.
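The one-hot formulation works because an embedding lookup is equivalent to multiplying a one-hot matrix by the embedding table, and matrix products are something MPC protocols handle well. The following plaintext NumPy sketch checks that equivalence on toy sizes; in a secure setting, the equality tests and the matrix product would run on secret shares, which this sketch does not model. The sizes and names are illustrative.

```python
import numpy as np

# Toy vocabulary and hidden sizes; purely illustrative.
VOCAB_SIZE, HIDDEN = 5, 3
rng = np.random.default_rng(0)
embedding_table = rng.standard_normal((VOCAB_SIZE, HIDDEN))


def one_hot(token_ids: np.ndarray, vocab_size: int) -> np.ndarray:
    """o[t, i] = 1 if token_ids[t] == i else 0.

    Under MPC, each comparison would be a secure equality test on shares.
    """
    return (token_ids[:, None] == np.arange(vocab_size)[None, :]).astype(np.float64)


def embed_via_matmul(token_ids: np.ndarray) -> np.ndarray:
    """Embedding lookup rewritten as a one-hot matrix times the table."""
    return one_hot(token_ids, VOCAB_SIZE) @ embedding_table


token_ids = np.array([3, 0, 3, 1])
embeddings = embed_via_matmul(token_ids)  # same rows as embedding_table[token_ids]
```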

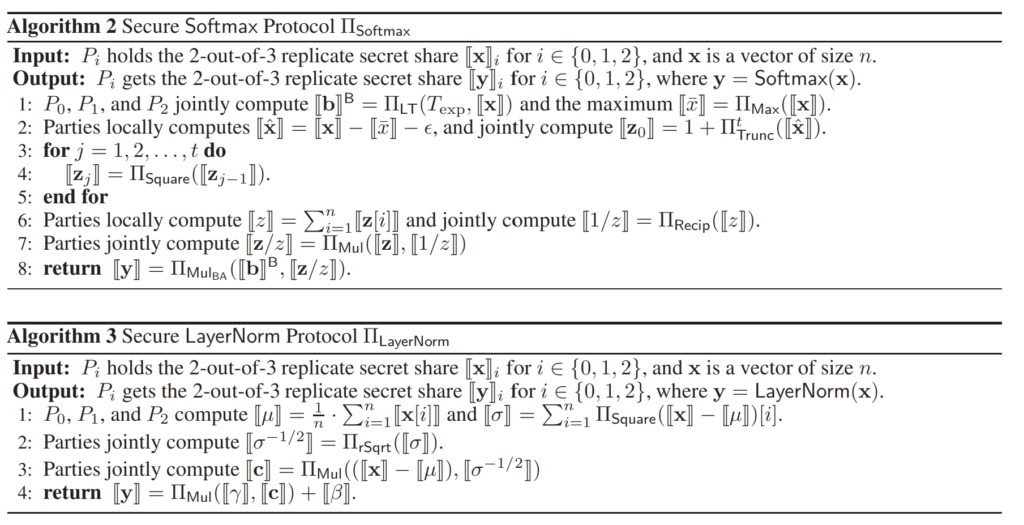

Moreover, a major challenge in secure inference lies in approximating complex functions such as GeLU and Softmax in a way that balances computational efficiency with accuracy. PUMA tackles this by devising more accurate approximations tailored to the properties of these functions. By exploiting the specific characteristics of each function, PUMA significantly improves the precision of the approximation while reducing runtime and communication costs.
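As a rough illustration of the idea, the sketch below approximates GeLU with a piecewise polynomial and Softmax with an exponential computed from repeated squaring, since MPC evaluates comparisons, additions, and multiplications far more cheaply than `erf` or `exp`. The breakpoints (-4, -1.95, 3), the polynomial degrees, the squaring count `t`, and the cutoff of -14 are assumptions chosen for illustration, and the polynomial coefficients are fitted here by least squares rather than taken from the paper. Everything runs in plaintext.

```python
import math

import numpy as np

# Exact GeLU reference: x * Phi(x), where Phi is the standard normal CDF.
_erf = np.vectorize(math.erf)


def gelu_exact(x):
    x = np.asarray(x, dtype=float)
    return 0.5 * x * (1.0 + _erf(x / math.sqrt(2.0)))


# Illustrative breakpoints and degrees (assumptions). Coefficients are re-fitted
# here by least squares; they are NOT the published PUMA coefficients.
LOW, MID, HIGH = -4.0, -1.95, 3.0
_x_left = np.linspace(LOW, MID, 200)
_x_mid = np.linspace(MID, HIGH, 400)
P_LEFT = np.polyfit(_x_left, gelu_exact(_x_left), deg=3)
P_MID = np.polyfit(_x_mid, gelu_exact(_x_mid), deg=6)


def gelu_piecewise(x):
    """GeLU built from comparisons plus low-degree polynomials (MPC-friendly ops)."""
    x = np.asarray(x, dtype=float)
    return np.select(
        [x < LOW, x < MID, x <= HIGH],
        [np.zeros_like(x), np.polyval(P_LEFT, x), np.polyval(P_MID, x)],
        default=x,  # for x > HIGH, GeLU(x) is essentially x
    )


def exp_limit(z, t=8):
    """exp(z) ~= (1 + z / 2**t) ** (2**t), computed with t squarings."""
    y = 1.0 + np.asarray(z, dtype=float) / (1 << t)
    for _ in range(t):
        y = y * y
    return y


def softmax_approx(logits, t=8, cutoff=-14.0):
    """Softmax with max-subtraction, a cheap exp, and a cutoff for tiny terms."""
    z = logits - np.max(logits, axis=-1, keepdims=True)  # now every z <= 0
    e = np.where(z < cutoff, 0.0, exp_limit(z, t))
    return e / np.sum(e, axis=-1, keepdims=True)
```

Subtracting the row maximum keeps every input to `exp_limit` at or below zero, so the cutoff only discards terms whose true value is already negligibly small.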

Finally, LayerNorm, a crucial operation within the Transformer model, poses unique challenges in secure inference because its formula requires both a square root and a division. PUMA addresses this by cleverly reformulating the operation with secure protocols, ensuring that LayerNorm remains both secure and efficient to compute.
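One way to avoid the separate square root and division is to compute the reciprocal square root directly and multiply by it. The sketch below does this with Newton's iteration, which needs only additions and multiplications once the variance is known. The Newton method, the constant starting guess `y0 = 0.01` (which assumes the variance plus epsilon stays below 3 / y0**2 = 30000 so the iteration converges), and the iteration count are illustrative assumptions, not PUMA's specific LayerNorm protocol.

```python
import numpy as np


def rsqrt_newton(a, iters=40, y0=0.01):
    """Approximate 1 / sqrt(a) for 0 < a < 3 / y0**2 using Newton's method.

    Each step y <- y * (1.5 - 0.5 * a * y^2) uses only additions and
    multiplications, the operations MPC protocols support most cheaply.
    """
    y = np.full_like(np.asarray(a, dtype=float), y0)
    for _ in range(iters):
        y = y * (1.5 - 0.5 * a * y * y)
    return y


def layernorm_rsqrt(x, gamma, beta, eps=1e-5):
    """LayerNorm as gamma * (x - mean) * rsqrt(var + eps) + beta."""
    mu = x.mean(axis=-1, keepdims=True)  # division by a public length is cheap
    centered = x - mu
    var = (centered * centered).mean(axis=-1, keepdims=True)
    # A single reciprocal square root replaces the separate sqrt and division.
    return gamma * centered * rsqrt_newton(var + eps) + beta


x = np.array([[1.0, 2.0, 3.0, 4.0], [0.5, -0.5, 2.0, 0.0]])
out = layernorm_rsqrt(x, gamma=np.ones(4), beta=np.zeros(4))
```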

One of the standout features of PUMA is its seamless integration. The framework enables end-to-end secure inference for Transformer models without requiring major changes to the model architecture. This means you can use pre-trained Transformer models with minimal effort. Whether it is a language model downloaded from Hugging Face or from another source, PUMA keeps things simple: it aligns with the original workflow and does not demand complex retraining or modifications.

Check out the Paper and GitHub link. All credit for this research goes to the researchers on this project.


Ekrem Çetinkaya received his B.Sc. in 2018 and M.Sc. in 2019 from Ozyegin University, Istanbul, Türkiye. He wrote his M.Sc. thesis on image denoising using deep convolutional networks. He received his Ph.D. in 2023 from the University of Klagenfurt, Austria, with his dissertation titled "Video Coding Enhancements for HTTP Adaptive Streaming Using Machine Learning." His research interests include deep learning, computer vision, video encoding, and multimedia networking.