Construct ML options at scale with Amazon SageMaker Function Retailer utilizing information from Amazon Redshift

Amazon Redshift is the preferred cloud information warehouse that’s utilized by tens of hundreds of shoppers to investigate exabytes of information daily. Many practitioners are extending these Redshift datasets at scale for machine studying (ML) utilizing Amazon SageMaker, a completely managed ML service, with necessities to develop options offline in a code manner or low-code/no-code manner, retailer featured information from Amazon Redshift, and make this occur at scale in a manufacturing setting.

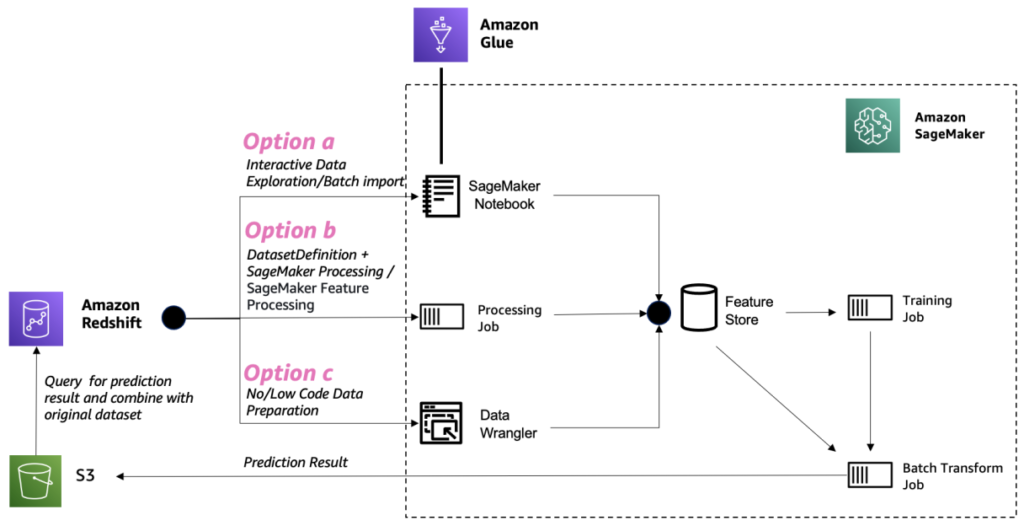

On this submit, we present you three choices to organize Redshift supply information at scale in SageMaker, together with loading information from Amazon Redshift, performing characteristic engineering, and ingesting options into Amazon SageMaker Feature Store:

In case you’re an AWS Glue person and wish to do the method interactively, contemplate choice A. In case you’re acquainted with SageMaker and writing Spark code, choice B may very well be your selection. If you wish to do the method in a low-code/no-code manner, you’ll be able to observe choice C.

Amazon Redshift makes use of SQL to investigate structured and semi-structured information throughout information warehouses, operational databases, and information lakes, utilizing AWS-designed {hardware} and ML to ship the very best price-performance at any scale.

SageMaker Studio is the primary totally built-in growth setting (IDE) for ML. It offers a single web-based visible interface the place you’ll be able to carry out all ML growth steps, together with getting ready information and constructing, coaching, and deploying fashions.

AWS Glue is a serverless information integration service that makes it simple to find, put together, and mix information for analytics, ML, and utility growth. AWS Glue allows you to seamlessly gather, remodel, cleanse, and put together information for storage in your information lakes and information pipelines utilizing quite a lot of capabilities, together with built-in transforms.

Answer overview

The next diagram illustrates the answer structure for every choice.

Conditions

To proceed with the examples on this submit, that you must create the required AWS sources. To do that, we offer an AWS CloudFormation template to create a stack that comprises the sources. Whenever you create the stack, AWS creates various sources in your account:

- A SageMaker area, which incorporates an related Amazon Elastic File System (Amazon EFS) quantity

- An inventory of licensed customers and quite a lot of safety, utility, coverage, and Amazon Virtual Private Cloud (Amazon VPC) configurations

- A Redshift cluster

- A Redshift secret

- An AWS Glue connection for Amazon Redshift

- An AWS Lambda operate to arrange required sources, execution roles and insurance policies

Just remember to don’t have already two SageMaker Studio domains within the Area the place you’re operating the CloudFormation template. That is the utmost allowed variety of domains in every supported Area.

Deploy the CloudFormation template

Full the next steps to deploy the CloudFormation template:

- Save the CloudFormation template sm-redshift-demo-vpc-cfn-v1.yaml regionally.

- On the AWS CloudFormation console, select Create stack.

- For Put together template, choose Template is prepared.

- For Template supply, choose Add a template file.

- Select Select File and navigate to the situation in your laptop the place the CloudFormation template was downloaded and select the file.

- Enter a stack title, similar to

Demo-Redshift. - On the Configure stack choices web page, go away every thing as default and select Subsequent.

- On the Assessment web page, choose I acknowledge that AWS CloudFormation may create IAM sources with customized names and select Create stack.

It’s best to see a brand new CloudFormation stack with the title Demo-Redshift being created. Await the standing of the stack to be CREATE_COMPLETE (roughly 7 minutes) earlier than shifting on. You may navigate to the stack’s Assets tab to test what AWS sources have been created.

Launch SageMaker Studio

Full the next steps to launch your SageMaker Studio area:

- On the SageMaker console, select Domains within the navigation pane.

- Select the area you created as a part of the CloudFormation stack (

SageMakerDemoDomain). - Select Launch and Studio.

This web page can take 1–2 minutes to load whenever you entry SageMaker Studio for the primary time, after which you’ll be redirected to a House tab.

Obtain the GitHub repository

Full the next steps to obtain the GitHub repo:

- Within the SageMaker pocket book, on the File menu, select New and Terminal.

- Within the terminal, enter the next command:

Now you can see the amazon-sagemaker-featurestore-redshift-integration folder in navigation pane of SageMaker Studio.

Arrange batch ingestion with the Spark connector

Full the next steps to arrange batch ingestion:

- In SageMaker Studio, open the pocket book 1-uploadJar.ipynb beneath

amazon-sagemaker-featurestore-redshift-integration. - In case you are prompted to decide on a kernel, select Information Science because the picture and Python 3 because the kernel, then select Choose.

- For the next notebooks, select the identical picture and kernel besides the AWS Glue Interactive Periods pocket book (4a).

- Run the cells by urgent Shift+Enter in every of the cells.

Whereas the code runs, an asterisk (*) seems between the sq. brackets. When the code is completed operating, the * can be changed with numbers. This motion can be workable for all different notebooks.

Arrange the schema and cargo information to Amazon Redshift

The subsequent step is to arrange the schema and cargo information from Amazon Simple Storage Service (Amazon S3) to Amazon Redshift. To take action, run the pocket book 2-loadredshiftdata.ipynb.

Create characteristic shops in SageMaker Function Retailer

To create your characteristic shops, run the pocket book 3-createFeatureStore.ipynb.

Carry out characteristic engineering and ingest options into SageMaker Function Retailer

On this part, we current the steps for all three choices to carry out characteristic engineering and ingest processed options into SageMaker Function Retailer.

Possibility A: Use SageMaker Studio with a serverless AWS Glue interactive session

Full the next steps for choice A:

- In SageMaker Studio, open the pocket book 4a-glue-int-session.ipynb.

- In case you are prompted to decide on a kernel, select SparkAnalytics 2.0 because the picture and Glue Python [PySpark and Ray] because the kernel, then select Choose.

The setting preparation course of could take a while to finish.

Possibility B: Use a SageMaker Processing job with Spark

On this choice, we use a SageMaker Processing job with a Spark script to load the unique dataset from Amazon Redshift, carry out characteristic engineering, and ingest the info into SageMaker Function Retailer. To take action, open the pocket book 4b-processing-rs-to-fs.ipynb in your SageMaker Studio setting.

Right here we use RedshiftDatasetDefinition to retrieve the dataset from the Redshift cluster. RedshiftDatasetDefinition is one sort of enter of the processing job, which offers a easy interface for practitioners to configure Redshift connection-related parameters similar to identifier, database, desk, question string, and extra. You may simply set up your Redshift connection utilizing RedshiftDatasetDefinition with out sustaining a connection full time. We additionally use the SageMaker Feature Store Spark connector library within the processing job to connect with SageMaker Function Retailer in a distributed setting. With this Spark connector, you’ll be able to simply ingest information to the characteristic group’s on-line and offline retailer from a Spark DataFrame. Additionally, this connector comprises the performance to routinely load characteristic definitions to assist with creating characteristic teams. Above all, this resolution provides you a local Spark technique to implement an end-to-end information pipeline from Amazon Redshift to SageMaker. You may carry out any characteristic engineering in a Spark context and ingest ultimate options into SageMaker Function Retailer in only one Spark mission.

To make use of the SageMaker Function Retailer Spark connector, we lengthen a pre-built SageMaker Spark container with sagemaker-feature-store-pyspark put in. Within the Spark script, use the system executable command to run pip set up, set up this library in your native setting, and get the native path of the JAR file dependency. Within the processing job API, present this path to the parameter of submit_jars to the node of the Spark cluster that the processing job creates.

Within the Spark script for the processing job, we first learn the unique dataset information from Amazon S3, which quickly shops the unloaded dataset from Amazon Redshift as a medium. Then we carry out characteristic engineering in a Spark manner and use feature_store_pyspark to ingest information into the offline characteristic retailer.

For the processing job, we offer a ProcessingInput with a redshift_dataset_definition. Right here we construct a construction in accordance with the interface, offering Redshift connection-related configurations. You should utilize query_string to filter your dataset by SQL and unload it to Amazon S3. See the next code:

You might want to wait 6–7 minutes for every processing job together with USER, PLACE, and RATING datasets.

For extra particulars about SageMaker Processing jobs, consult with Process data.

For SageMaker native options for characteristic processing from Amazon Redshift, you too can use Feature Processing in SageMaker Function Retailer, which is for underlying infrastructure together with provisioning the compute environments and creating and sustaining SageMaker pipelines to load and ingest information. You may solely focus in your characteristic processor definitions that embody transformation features, the supply of Amazon Redshift, and the sink of SageMaker Function Retailer. The scheduling, job administration, and different workloads in manufacturing are managed by SageMaker. Function Processor pipelines are SageMaker pipelines, so the standard monitoring mechanisms and integrations can be found.

Possibility C: Use SageMaker Information Wrangler

SageMaker Information Wrangler permits you to import information from numerous information sources together with Amazon Redshift for a low-code/no-code technique to put together, remodel, and featurize your information. After you end information preparation, you need to use SageMaker Information Wrangler to export options to SageMaker Function Retailer.

There are some AWS Identity and Access Management (IAM) settings that permit SageMaker Information Wrangler to connect with Amazon Redshift. First, create an IAM position (for instance, redshift-s3-dw-connect) that features an Amazon S3 entry coverage. For this submit, we connected the AmazonS3FullAccess coverage to the IAM position. If in case you have restrictions of accessing a specified S3 bucket, you’ll be able to outline it within the Amazon S3 entry coverage. We connected the IAM position to the Redshift cluster that we created earlier. Subsequent, create a coverage for SageMaker to entry Amazon Redshift by getting its cluster credentials, and fix the coverage to the SageMaker IAM position. The coverage appears to be like like the next code:

After this setup, SageMaker Information Wrangler permits you to question Amazon Redshift and output the outcomes into an S3 bucket. For directions to connect with a Redshift cluster and question and import information from Amazon Redshift to SageMaker Information Wrangler, consult with Import data from Amazon Redshift.

SageMaker Information Wrangler provides a choice of over 300 pre-built information transformations for widespread use circumstances similar to deleting duplicate rows, imputing lacking information, one-hot encoding, and dealing with time collection information. You can even add customized transformations in pandas or PySpark. In our instance, we utilized some transformations similar to drop column, information sort enforcement, and ordinal encoding to the info.

When your information movement is full, you’ll be able to export it to SageMaker Function Retailer. At this level, that you must create a characteristic group: give the characteristic group a reputation, choose each on-line and offline storage, present the title of a S3 bucket to make use of for the offline retailer, and supply a job that has SageMaker Function Retailer entry. Lastly, you’ll be able to create a job, which creates a SageMaker Processing job that runs the SageMaker Information Wrangler movement to ingest options from the Redshift information supply to your characteristic group.

Right here is one end-to-end information movement within the state of affairs of PLACE characteristic engineering.

Use SageMaker Function Retailer for mannequin coaching and prediction

To make use of SageMaker Function retailer for mannequin coaching and prediction, open the pocket book 5-classification-using-feature-groups.ipynb.

After the Redshift information is reworked into options and ingested into SageMaker Function Retailer, the options can be found for search and discovery throughout groups of information scientists chargeable for many impartial ML fashions and use circumstances. These groups can use the options for modeling with out having to rebuild or rerun characteristic engineering pipelines. Function teams are managed and scaled independently, and may be reused and joined collectively whatever the upstream information supply.

The subsequent step is to construct ML fashions utilizing options chosen from one or a number of characteristic teams. You resolve which characteristic teams to make use of to your fashions. There are two choices to create an ML dataset from characteristic teams, each using the SageMaker Python SDK:

- Use the SageMaker Function Retailer DatasetBuilder API – The SageMaker Function Retailer

DatasetBuilderAPI permits information scientists create ML datasets from a number of characteristic teams within the offline retailer. You should utilize the API to create a dataset from a single or a number of characteristic teams, and output it as a CSV file or a pandas DataFrame. See the next instance code:

- Run SQL queries utilizing the athena_query operate within the FeatureGroup API – Another choice is to make use of the auto-built AWS Glue Information Catalog for the FeatureGroup API. The FeatureGroup API contains an

Athena_queryoperate that creates an AthenaQuery occasion to run user-defined SQL question strings. Then you definately run the Athena question and arrange the question consequence right into a pandas DataFrame. This selection permits you to specify extra sophisticated SQL queries to extract info from a characteristic group. See the next instance code:

Subsequent, we will merge the queried information from totally different characteristic teams into our ultimate dataset for mannequin coaching and testing. For this submit, we use batch transform for mannequin inference. Batch remodel permits you to get mannequin inferene on a bulk of information in Amazon S3, and its inference result’s saved in Amazon S3 as nicely. For particulars on mannequin coaching and inference, consult with the pocket book 5-classification-using-feature-groups.ipynb.

Run a be part of question on prediction ends in Amazon Redshift

Lastly, we question the inference consequence and be part of it with unique person profiles in Amazon Redshift. To do that, we use Amazon Redshift Spectrum to affix batch prediction ends in Amazon S3 with the unique Redshift information. For particulars, consult with the pocket book run 6-read-results-in-redshift.ipynb.

Clear up

On this part, we offer the steps to scrub up the sources created as a part of this submit to keep away from ongoing expenses.

Shut down SageMaker Apps

Full the next steps to close down your sources:

- In SageMaker Studio, on the File menu, select Shut Down.

- Within the Shutdown affirmation dialog, select Shutdown All to proceed.

- After you get the “Server stopped” message, you’ll be able to shut this tab.

Delete the apps

Full the next steps to delete your apps:

- On the SageMaker console, within the navigation pane, select Domains.

- On the Domains web page, select

SageMakerDemoDomain. - On the area particulars web page, beneath Person profiles, select the person

sagemakerdemouser. - Within the Apps part, within the Motion column, select Delete app for any lively apps.

- Make sure that the Standing column says Deleted for all of the apps.

Delete the EFS storage quantity related together with your SageMaker area

Find your EFS quantity on the SageMaker console and delete it. For directions, consult with Manage Your Amazon EFS Storage Volume in SageMaker Studio.

Delete default S3 buckets for SageMaker

Delete the default S3 buckets (sagemaker-<region-code>-<acct-id>) for SageMaker In case you are not utilizing SageMaker in that Area.

Delete the CloudFormation stack

Delete the CloudFormation stack in your AWS account in order to scrub up all associated sources.

Conclusion

On this submit, we demonstrated an end-to-end information and ML movement from a Redshift information warehouse to SageMaker. You may simply use AWS native integration of purpose-built engines to undergo the info journey seamlessly. Try the AWS Blog for extra practices about constructing ML options from a contemporary information warehouse.

In regards to the Authors

Akhilesh Dube, a Senior Analytics Options Architect at AWS, possesses greater than 20 years of experience in working with databases and analytics merchandise. His main position entails collaborating with enterprise purchasers to design sturdy information analytics options whereas providing complete technical steerage on a variety of AWS Analytics and AI/ML companies.

Akhilesh Dube, a Senior Analytics Options Architect at AWS, possesses greater than 20 years of experience in working with databases and analytics merchandise. His main position entails collaborating with enterprise purchasers to design sturdy information analytics options whereas providing complete technical steerage on a variety of AWS Analytics and AI/ML companies.

Ren Guo is a Senior Information Specialist Options Architect within the domains of generative AI, analytics, and conventional AI/ML at AWS, Better China Area.

Ren Guo is a Senior Information Specialist Options Architect within the domains of generative AI, analytics, and conventional AI/ML at AWS, Better China Area.

Sherry Ding is a Senior AI/ML Specialist Options Architect. She has in depth expertise in machine studying with a PhD diploma in Laptop Science. She primarily works with Public Sector prospects on numerous AI/ML-related enterprise challenges, serving to them speed up their machine studying journey on the AWS Cloud. When not serving to prospects, she enjoys outside actions.

Sherry Ding is a Senior AI/ML Specialist Options Architect. She has in depth expertise in machine studying with a PhD diploma in Laptop Science. She primarily works with Public Sector prospects on numerous AI/ML-related enterprise challenges, serving to them speed up their machine studying journey on the AWS Cloud. When not serving to prospects, she enjoys outside actions.

Mark Roy is a Principal Machine Studying Architect for AWS, serving to prospects design and construct AI/ML options. Mark’s work covers a variety of ML use circumstances, with a main curiosity in laptop imaginative and prescient, deep studying, and scaling ML throughout the enterprise. He has helped firms in lots of industries, together with insurance coverage, monetary companies, media and leisure, healthcare, utilities, and manufacturing. Mark holds six AWS Certifications, together with the ML Specialty Certification. Previous to becoming a member of AWS, Mark was an architect, developer, and expertise chief for over 25 years, together with 19 years in monetary companies.

Mark Roy is a Principal Machine Studying Architect for AWS, serving to prospects design and construct AI/ML options. Mark’s work covers a variety of ML use circumstances, with a main curiosity in laptop imaginative and prescient, deep studying, and scaling ML throughout the enterprise. He has helped firms in lots of industries, together with insurance coverage, monetary companies, media and leisure, healthcare, utilities, and manufacturing. Mark holds six AWS Certifications, together with the ML Specialty Certification. Previous to becoming a member of AWS, Mark was an architect, developer, and expertise chief for over 25 years, together with 19 years in monetary companies.