Train self-supervised vision transformers on overhead imagery with Amazon SageMaker

This is a guest blog post co-written with Ben Veasey, Jeremy Anderson, Jordan Knight, and June Li from Travelers.

Satellite and aerial images provide insight into a wide range of problems, including precision agriculture, insurance risk assessment, urban development, and disaster response. Training machine learning (ML) models to interpret this data, however, is bottlenecked by costly and time-consuming human annotation efforts. One way to overcome this challenge is through self-supervised learning (SSL). By training on large amounts of unlabeled image data, self-supervised models learn image representations that can be transferred to downstream tasks, such as image classification or segmentation. This approach produces image representations that generalize well to unseen data and reduces the amount of labeled data required to build performant downstream models.

In this post, we demonstrate how to train self-supervised vision transformers on overhead imagery using Amazon SageMaker. Travelers collaborated with the Amazon Machine Learning Solutions Lab (now known as the Generative AI Innovation Center) to develop this framework to support and enhance aerial imagery model use cases. Our solution is based on the DINO algorithm and uses the SageMaker distributed data parallel library (SMDDP) to split the data over multiple GPU instances. When pre-training is complete, the DINO image representations can be transferred to a variety of downstream tasks. This initiative led to improved model performance across the Travelers Data & Analytics space.

Overview of solution

The two-step process for pre-training vision transformers and transferring them to supervised downstream tasks is shown in the following diagram.

In the following sections, we provide a walkthrough of the solution using satellite images from the BigEarthNet-S2 dataset. We build on the code provided in the DINO repository.

Prerequisites

Before getting started, you need access to a SageMaker notebook instance and an Amazon Simple Storage Service (Amazon S3) bucket.

Prepare the BigEarthNet-S2 dataset

BigEarthNet-S2 is a benchmark archive that contains 590,325 multispectral images collected by the Sentinel-2 satellite. The images document the land cover, or physical surface features, of ten European countries between June 2017 and May 2018. The types of land cover in each image, such as pastures or forests, are annotated according to 19 labels. The following are a few example RGB images and their labels.

The first step in our workflow is to prepare the BigEarthNet-S2 dataset for DINO training and evaluation. We start by downloading the dataset from the terminal of our SageMaker notebook instance:

The dataset has a size of about 109 GB. Each image is stored in its own folder and contains 12 spectral channels. Three bands with 60m spatial resolution (60-meter pixel height/width) are designed to identify aerosols (B01), water vapor (B09), and clouds (B10). Six bands with 20m spatial resolution are used to identify vegetation (B05, B06, B07, B8A) and distinguish between snow, ice, and clouds (B11, B12). Three bands with 10m spatial resolution help capture visible and near-infrared light (B02, B03, B04, B8/B8A). Additionally, each folder contains a JSON file with the image metadata. A detailed description of the data is provided in the BigEarthNet Guide.

To perform statistical analyses of the data and load images during DINO training, we process the individual metadata files into a common geopandas Parquet file. This can be done using the BigEarthNet Common and the BigEarthNet GDF Builder helper packages:
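To illustrate the idea of consolidating per-image metadata into one table, here is a minimal standard-library sketch. It does not use the helper packages named above, and it is not the authors' code. The file naming pattern (`<folder>_labels_metadata.json`) and the JSON keys (`labels`, `acquisition_date`) are assumptions about the dataset layout, and `collect_patch_metadata` and `write_parquet` are hypothetical helper names.

```python
import json
from pathlib import Path


def collect_patch_metadata(dataset_root):
    """Gather one metadata record per image folder under dataset_root."""
    records = []
    for patch_dir in sorted(Path(dataset_root).iterdir()):
        if not patch_dir.is_dir():
            continue
        # Assumed naming convention: <folder_name>_labels_metadata.json
        meta_path = patch_dir / f"{patch_dir.name}_labels_metadata.json"
        if not meta_path.exists():
            continue
        with open(meta_path, encoding="utf-8") as handle:
            meta = json.load(handle)
        records.append(
            {
                "name": patch_dir.name,
                "labels": meta.get("labels", []),  # assumed key
                "acquisition_date": meta.get("acquisition_date"),  # assumed key
            }
        )
    return records


def write_parquet(records, output_path):
    """Write the records to Parquet; pandas (with pyarrow) is imported lazily."""
    import pandas as pd

    pd.DataFrame.from_records(records).to_parquet(output_path, index=False)
```

A plain pandas table like this carries no geometry column, so it is only a stand-in for the geopandas file described in the text.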

The resulting metadata file contains the recommended image set, which excludes 71,042 images that are fully covered by seasonal snow, clouds, and cloud shadows. It also contains information on the acquisition date, location, land cover, and train, validation, and test split for each image.

We store the BigEarthNet-S2 images and metadata file in an S3 bucket. Because we use true color images during DINO training, we only upload the red (B04), green (B03), and blue (B02) bands:
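A minimal sketch of selecting and uploading only the RGB bands might look like the following. The per-band file naming (`<folder>_<band>.tif`) is an assumption about the dataset layout, the helper names are hypothetical, and the bucket and prefix are placeholders supplied by the caller. The upload uses the boto3 S3 client's `upload_file` call.

```python
from pathlib import Path

RGB_BANDS = ("B04", "B03", "B02")  # red, green, blue


def rgb_band_files(patch_dir):
    """Return the red, green, and blue band files of one image folder."""
    patch_dir = Path(patch_dir)
    # Assumed naming convention: <folder_name>_<band>.tif
    candidates = [patch_dir / f"{patch_dir.name}_{band}.tif" for band in RGB_BANDS]
    return [path for path in candidates if path.exists()]


def s3_key(prefix, band_file):
    """Build an object key that keeps the per-image folder layout."""
    band_file = Path(band_file)
    return f"{prefix.strip('/')}/{band_file.parent.name}/{band_file.name}"


def upload_rgb_bands(dataset_root, bucket, prefix):
    """Upload only the RGB bands of every image folder; boto3 is imported lazily."""
    import boto3

    s3 = boto3.client("s3")
    for patch_dir in sorted(Path(dataset_root).iterdir()):
        if patch_dir.is_dir():
            for band_file in rgb_band_files(patch_dir):
                s3.upload_file(str(band_file), bucket, s3_key(prefix, band_file))
```

Skipping the nine non-RGB bands at upload time is what reduces the stored data volume relative to the full download.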

The dataset is approximately 48 GB in size and has the following structure:

Train DINO models with SageMaker

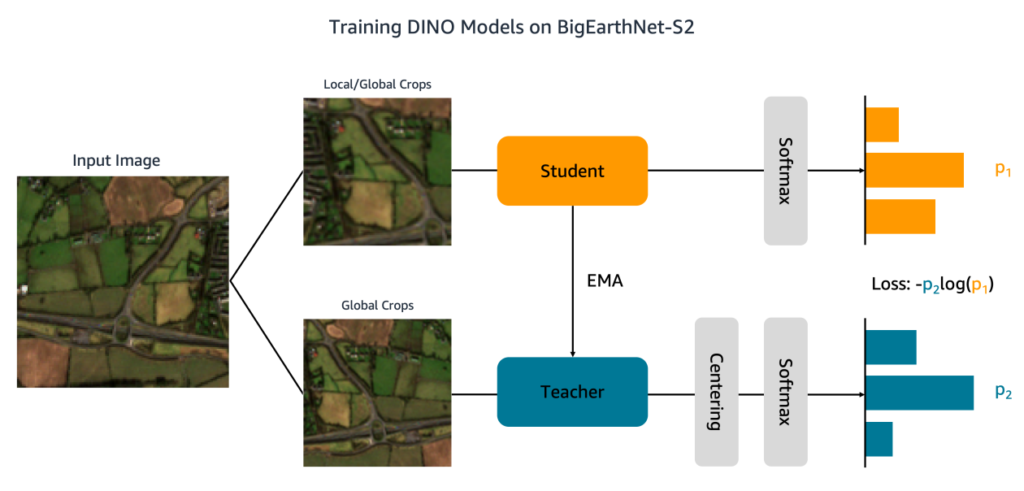

Now that our dataset has been uploaded to Amazon S3, we move to train DINO models on BigEarthNet-S2. As shown in the following figure, the DINO algorithm passes different global and local crops of an input image to student and teacher networks. The student network is taught to match the output of the teacher network by minimizing the cross-entropy loss. The student and teacher weights are connected by an exponential moving average (EMA).
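The two mechanisms described above, the cross-entropy between teacher and student outputs and the EMA weight update, can be sketched in plain Python. This is a simplified illustration on lists of floats for a single crop pair, not the DINO repository's implementation. The temperatures and momentum are illustrative defaults, and the function names are hypothetical.

```python
import math


def log_softmax(logits, temperature):
    """Numerically stable log of a temperature-scaled softmax."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_total for x in scaled]


def dino_cross_entropy(student_logits, teacher_logits, center,
                       student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between teacher and student distributions for one crop pair."""
    # The teacher output is centered, then sharpened with a low temperature.
    centered = [t - c for t, c in zip(teacher_logits, center)]
    teacher_probs = [math.exp(v) for v in log_softmax(centered, teacher_temp)]
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))


def ema_update(teacher_weights, student_weights, momentum=0.996):
    """Move each teacher weight a small step toward the student weight."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_weights, student_weights)]
```

Only the student receives gradients from this loss; the teacher changes solely through `ema_update`, which is why a momentum close to 1 makes the teacher a slowly moving average of past student weights.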

We make two modifications to the original DINO code. First, we create a custom PyTorch dataset class to load the BigEarthNet-S2 images. The code was initially written to process ImageNet data and expects images to be stored by class. BigEarthNet-S2, however, is a multi-label dataset where each image resides in its own subfolder. Our dataset class loads each image using the file path stored in the metadata:

This dataset class is called in main_dino.py during training. Although the code includes a function to one-hot encode the land cover labels, these labels are not used by the DINO algorithm.
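A dataset class of this kind could be sketched as follows. To keep the example dependency-free, it implements the map-style protocol (`__len__` and `__getitem__`) without subclassing `torch.utils.data.Dataset`, which a real training script would normally do. The class name, the record fields `path` and `labels`, and the injected `loader` callable are all hypothetical.

```python
class OverheadImageDataset:
    """Map-style dataset that loads images from paths listed in a metadata table."""

    def __init__(self, records, classes, loader, transform=None):
        self.records = list(records)   # one dict per image (assumed fields: path, labels)
        self.classes = list(classes)   # ordered label vocabulary
        self.loader = loader           # callable: path -> image
        self.transform = transform     # optional augmentation callable

    def __len__(self):
        return len(self.records)

    def encode_labels(self, labels):
        """Binary vector with a 1.0 for every land cover label present."""
        present = set(labels)
        return [1.0 if name in present else 0.0 for name in self.classes]

    def __getitem__(self, index):
        record = self.records[index]
        image = self.loader(record["path"])
        if self.transform is not None:
            image = self.transform(image)
        return image, self.encode_labels(record["labels"])
```

Because each record carries its own file path, the class does not depend on the one-folder-per-class layout that ImageNet-style loaders expect.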

The second change we make to the DINO code is to add support for SMDDP. We add the following code to the init_distributed_mode function in the util.py file:
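As an illustration only, and not the authors' exact change, initializing PyTorch distributed training with the SMDDP backend might look like the sketch below. It assumes a SageMaker training container in which `torch` and `smdistributed` are installed and in which the launcher sets the `LOCAL_RANK` environment variable. The function name is hypothetical.

```python
import os


def init_smddp_process_group():
    """Initialize torch.distributed with the SMDDP backend (sketch)."""
    # Heavy imports are kept inside the function so that the module can be
    # imported on machines without PyTorch or the SMDDP library.
    import torch
    import torch.distributed as dist
    # Importing this module registers the "smddp" backend with PyTorch.
    import smdistributed.dataparallel.torch.torch_smddp  # noqa: F401

    dist.init_process_group(backend="smddp")
    rank = dist.get_rank()
    world_size = dist.get_world_size()
    # Assumption: the launcher exports LOCAL_RANK for each process.
    local_rank = int(os.environ["LOCAL_RANK"])
    torch.cuda.set_device(local_rank)
    return rank, world_size, local_rank
```

After the process group exists, the rest of a data-parallel script can typically stay unchanged, because model wrapping and samplers work against `torch.distributed` regardless of the backend.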

With these adjustments, we are ready to train DINO models on BigEarthNet-S2 using SageMaker. To train on multiple GPUs or instances, we create a SageMaker PyTorch Estimator that ingests the DINO training script, the image and metadata file paths, and the training hyperparameters:

This code specifies that we will train a small vision transformer model (21 million parameters) with a patch size of 16 for 100 epochs. It is best practice to create a new checkpoint_s3_uri for each training job in order to reduce the initial data download time. Because we are using SMDDP, we must train on an ml.p3.16xlarge, ml.p3dn.24xlarge, or ml.p4d.24xlarge instance. This is because SMDDP is only enabled for the largest multi-GPU instances. To train on smaller instance types without SMDDP, you will need to remove the distribution and debugger_hook_config arguments from the estimator.
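The estimator settings described above can be sketched as a dictionary of keyword arguments for `sagemaker.pytorch.PyTorch`. This is a hedged illustration rather than the authors' configuration: the `source_dir`, framework version, Python version, and instance count are assumptions, the helper names are hypothetical, and only the architecture, patch size, epoch count, instance types, and the two SMDDP-related arguments come from the text.

```python
def build_dino_estimator_kwargs(role, checkpoint_s3_uri, instance_type="ml.p3.16xlarge"):
    """Keyword arguments for sagemaker.pytorch.PyTorch (illustrative values)."""
    return {
        "entry_point": "main_dino.py",
        "source_dir": "dino",            # hypothetical local folder with the code
        "role": role,
        "framework_version": "1.12",     # assumed; pick a version SMDDP supports
        "py_version": "py38",            # assumed
        "instance_count": 1,             # assumed
        "instance_type": instance_type,
        "checkpoint_s3_uri": checkpoint_s3_uri,
        # Enables the SageMaker distributed data parallel library.
        "distribution": {"smdistributed": {"dataparallel": {"enabled": True}}},
        "debugger_hook_config": False,
        "hyperparameters": {"arch": "vit_small", "patch_size": 16, "epochs": 100},
    }


def without_smddp(kwargs, instance_type):
    """Variant for smaller instances: drop the two SMDDP-related arguments."""
    trimmed = {key: value for key, value in kwargs.items()
               if key not in ("distribution", "debugger_hook_config")}
    trimmed["instance_type"] = instance_type
    return trimmed


def create_estimator(kwargs):
    """Instantiate the estimator; the SageMaker SDK is imported lazily."""
    from sagemaker.pytorch import PyTorch

    return PyTorch(**kwargs)
```

Keeping the arguments in a dictionary makes it easy to derive a non-SMDDP variant for smaller instance types, and passing a fresh `checkpoint_s3_uri` per job follows the best practice noted above.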

After we have created the SageMaker PyTorch Estimator, we launch the training job by calling the fit method. We specify the input training data using the Amazon S3 URIs for the BigEarthNet-S2 metadata and images:

SageMaker spins up the instance, copies the training script and dependencies, and begins DINO training. We can monitor the progress of the training job from our Jupyter notebook using the following commands:
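One way to check on a job from a notebook is to query the SageMaker `DescribeTrainingJob` API through boto3. The sketch below is one possible approach and not necessarily the commands the authors used. The helper names are hypothetical, and the job name is supplied by the caller.

```python
def summarize_training_job(description):
    """Pick the progress fields out of a DescribeTrainingJob response."""
    return {
        "name": description["TrainingJobName"],
        "status": description["TrainingJobStatus"],
        "secondary_status": description.get("SecondaryStatus"),
        "billable_seconds": description.get("BillableTimeInSeconds"),
    }


def fetch_training_job_summary(job_name):
    """Query SageMaker for the job; boto3 is imported lazily."""
    import boto3

    client = boto3.client("sagemaker")
    return summarize_training_job(client.describe_training_job(TrainingJobName=job_name))
```

Separating the parsing step from the API call keeps the summary logic easy to exercise with a canned response.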

We can also monitor instance metrics and view log files on the SageMaker console under Training jobs. In the following figures, we plot the GPU utilization and loss function for a DINO model trained on an ml.p3.16xlarge instance with a batch size of 128.

During training, the GPU utilization is 83% of the ml.p3.16xlarge capacity (8 NVIDIA Tesla V100 GPUs) and the VRAM usage is 85%. The loss function steadily decreases with each epoch, indicating that the outputs of the student and teacher networks are becoming more similar. In total, training takes about 11 hours.

Transfer learning to downstream tasks

Our trained DINO model can be transferred to downstream tasks like image classification or segmentation. In this section, we use the pre-trained DINO features to predict the land cover classes for images in the BigEarthNet-S2 dataset. As depicted in the following diagram, we train a multi-label linear classifier on top of frozen DINO features. In this example, the input image is associated with arable land and pasture land covers.

Most of the code for the linear classifier is already in place in the original DINO repository. We make a few adjustments for our specific task. As before, we use the custom BigEarthNet dataset to load images during training and evaluation. The labels for the images are one-hot encoded as 19-dimensional binary vectors. We use the binary cross-entropy for the loss function and compute the average precision to evaluate the performance of the model.
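For readers who want to see the loss and metric spelled out, here is a small pure-Python sketch of binary cross-entropy and ranking-based average precision. It is an illustration, not the code used in the post. In particular, averaging the per-class scores (macro averaging) is an assumption, because the text does not state which averaging scheme was used.

```python
import math


def binary_cross_entropy(probabilities, targets, eps=1e-12):
    """Mean binary cross-entropy over the entries of one label vector."""
    total = 0.0
    for p, y in zip(probabilities, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(targets)


def average_precision(scores, targets):
    """Average of the precision values at the rank of each positive example."""
    ranked = sorted(zip(scores, targets), key=lambda pair: pair[0], reverse=True)
    hits, precision_sum = 0, 0.0
    for rank, (_, target) in enumerate(ranked, start=1):
        if target == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def macro_average_precision(score_rows, target_rows):
    """Mean of the per-class average precision across all label columns."""
    n_classes = len(target_rows[0])
    per_class = [
        average_precision([row[c] for row in score_rows],
                          [row[c] for row in target_rows])
        for c in range(n_classes)
    ]
    return sum(per_class) / n_classes
```

Binary cross-entropy treats each of the 19 labels as an independent yes/no decision, which suits images that carry several land cover types at once.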

To train the classifier, we create a SageMaker PyTorch Estimator that runs the training script, eval_linear.py. The training hyperparameters include the details of the DINO model architecture and the file path for the model checkpoint:

We start the training job using the fit method, supplying the Amazon S3 locations of the BigEarthNet-S2 metadata and training images and the DINO model checkpoint:

When training is complete, we can perform inference on the BigEarthNet-S2 test set using SageMaker batch transform or SageMaker Processing. In the following table, we compare the average precision of the linear model on test set images using two different DINO image representations. The first model, ViT-S/16 (ImageNet), is the small vision transformer checkpoint included in the DINO repository that was pre-trained using front-facing images in the ImageNet dataset. The second model, ViT-S/16 (BigEarthNet-S2), is the model we produced by pre-training on overhead imagery.

| Model | Average precision |
|---|---|
| ViT-S/16 (ImageNet) | 0.685 |
| ViT-S/16 (BigEarthNet-S2) | 0.732 |

We find that the DINO model pre-trained on BigEarthNet-S2 transfers better to the land cover classification task than the DINO model pre-trained on ImageNet, resulting in a 6.7% increase in the average precision.

Clean up

After completing DINO training and transfer learning, we can clean up our resources to avoid incurring charges. We stop or delete our notebook instance and remove any unwanted data or model artifacts from Amazon S3.

Conclusion

This post demonstrated how to train DINO models on overhead imagery using SageMaker. We used SageMaker PyTorch Estimators and SMDDP to generate representations of BigEarthNet-S2 images without the need for explicit labels. We then transferred the DINO features to a downstream image classification task, which involved predicting the land cover class of BigEarthNet-S2 images. For this task, pre-training on satellite imagery yielded a 6.7% increase in average precision relative to pre-training on ImageNet.

You can use this solution as a template for training DINO models on large-scale, unlabeled aerial and satellite imagery datasets. To learn more about DINO and building models on SageMaker, check out the following resources:

About the Authors

Ben Veasey is a Senior Associate Data Scientist at Travelers, working within the AI & Automation Accelerator team. With a deep understanding of innovative AI technologies, including computer vision, natural language processing, and generative AI, Ben is dedicated to accelerating the adoption of these technologies to optimize business processes and drive efficiency at Travelers.

Jeremy Anderson is a Director & Data Scientist at Travelers on the AI & Automation Accelerator team. He is interested in solving business problems with the latest AI and deep learning techniques, including large language models, foundational imagery models, and generative AI. Prior to Travelers, Jeremy earned a PhD in Molecular Biophysics from the Johns Hopkins University and also studied evolutionary biochemistry. Outside of work you can find him running, woodworking, or rewilding his yard.

Jordan Knight is a Senior Data Scientist working for Travelers in the Business Insurance Analytics & Research Department. His passion is for solving challenging real-world computer vision problems and exploring new state-of-the-art methods to do so. He has a particular interest in the social impact of ML models and how we can continue to improve modeling processes to develop ML solutions that are equitable for all. Jordan graduated from MIT with a Master's in Business Analytics. In his free time you can find him either rock climbing, hiking, or continuing to develop his somewhat rudimentary cooking skills.

June Li is a data scientist on the Business Insurance Artificial Intelligence team at Travelers, where she leads and coordinates work in the AI imagery portfolio. She is passionate about implementing innovative AI solutions that bring substantial value to business partners and stakeholders. Her work has been integral in transforming complex business challenges into opportunities by leveraging cutting-edge AI technologies.

Sourav Bhabesh is a Senior Applied Scientist at the AWS Titan Labs, where he builds Foundational Model (FM) capabilities and features. His specialty is Natural Language Processing (NLP), and he is passionate about deep learning. Outside of work he enjoys reading books and traveling.

Laura Kulowski is an Applied Scientist at Amazon's Generative AI Innovation Center, where she works closely with customers to build generative AI solutions. In her free time, Laura enjoys exploring new places by bike.

Andrew Ang is a Sr. Machine Learning Engineer at AWS. In addition to helping customers build AI/ML solutions, he enjoys water sports, squash, and watching travel & food vlogs.

Mehdi Noori is an Applied Science Manager at the Generative AI Innovation Center. With a passion for bridging technology and innovation, he assists AWS customers in unlocking the potential of generative AI, turning potential challenges into opportunities for rapid experimentation and innovation by focusing on scalable, measurable, and impactful uses of advanced AI technologies, and streamlining the path to production.