Scale training and inference of thousands of ML models with Amazon SageMaker

As machine learning (ML) becomes increasingly prevalent in a wide range of industries, organizations are finding the need to train and serve large numbers of ML models to meet the diverse needs of their customers. For software as a service (SaaS) providers in particular, the ability to train and serve thousands of models efficiently and cost-effectively is crucial for staying competitive in a rapidly evolving market.

Training and serving thousands of models requires a robust and scalable infrastructure, which is where Amazon SageMaker can help. SageMaker is a fully managed platform that enables developers and data scientists to build, train, and deploy ML models quickly, while also offering the cost-saving benefits of using the AWS Cloud infrastructure.

In this post, we explore how you can use SageMaker features, including Amazon SageMaker Processing, SageMaker training jobs, and SageMaker multi-model endpoints (MMEs), to train and serve thousands of models in a cost-effective way. To get started with the described solution, you can refer to the accompanying notebook on GitHub.

Use case: Energy forecasting

For this post, we assume the role of an ISV company that helps its customers become more sustainable by tracking their energy consumption and providing forecasts. Our company has 1,000 customers who want to better understand their energy usage and make informed decisions about how to reduce their environmental impact. To do this, we use a synthetic dataset and train an ML model based on Prophet for each customer to make energy consumption forecasts. With SageMaker, we can efficiently train and serve these 1,000 models, providing our customers with accurate and actionable insights into their energy usage.

There are three features in the generated dataset:

- customer_id – This is an integer identifier for each customer, ranging from 0–999.

- timestamp – This is a date/time value that indicates the time at which the energy consumption was measured. The timestamps are randomly generated between the start and end dates specified in the code.

- consumption – This is a float value that indicates the energy consumption, measured in some arbitrary unit. The consumption values are randomly generated between 0–1,000 with sinusoidal seasonality.
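
To make these three features concrete, the following is a minimal sketch of how such a synthetic dataset could be generated. It is not the generator from the accompanying notebook: the 2022 date range, the yearly sine wave centered on 500 with an amplitude of 400, the uniform noise of up to 100 in either direction, and the function name generate_dataset are all assumptions, chosen so that the values stay within 0–1,000.

```python
import math
import random
from datetime import datetime, timedelta


def generate_dataset(num_customers=1000, rows_per_customer=100,
                     start=datetime(2022, 1, 1), end=datetime(2022, 12, 31),
                     seed=42):
    """Return a list of (customer_id, timestamp, consumption) tuples.

    Hypothetical generator: consumption follows a yearly sine wave around
    500 plus uniform noise, clipped to the 0-1,000 range.
    """
    rng = random.Random(seed)
    span_seconds = int((end - start).total_seconds())
    rows = []
    for customer_id in range(num_customers):
        for _ in range(rows_per_customer):
            # Random timestamp between the start and end dates (inclusive)
            ts = start + timedelta(seconds=rng.randint(0, span_seconds))
            # Sinusoidal yearly seasonality based on the day of the year
            day_of_year = ts.timetuple().tm_yday
            seasonal = 400 * math.sin(2 * math.pi * day_of_year / 365.25)
            noise = rng.uniform(-100, 100)
            consumption = min(max(500 + seasonal + noise, 0.0), 1000.0)
            rows.append((customer_id, ts, consumption))
    return rows
```

For example, generate_dataset(num_customers=3, rows_per_customer=5) returns 15 rows covering customer IDs 0, 1, and 2.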

Solution overview

To efficiently train and serve thousands of ML models, we can use the following SageMaker features:

- SageMaker Processing – SageMaker Processing is a fully managed data preparation service that allows you to perform data processing and model evaluation tasks on your input data. You can use SageMaker Processing to transform raw data into the format needed for training and inference, as well as to run batch and online evaluations of your models.

- SageMaker training jobs – You can use SageMaker training jobs to train models on a variety of algorithms and input data types, and specify the compute resources needed for training.

- SageMaker MMEs – Multi-model endpoints enable you to host multiple models on a single endpoint, which makes it easy to serve predictions from multiple models using a single API. SageMaker MMEs can save time and resources by reducing the number of endpoints needed to serve predictions from multiple models. MMEs support hosting of both CPU- and GPU-backed models. Note that in our scenario we use 1,000 models, but this isn't a limitation of the service itself.

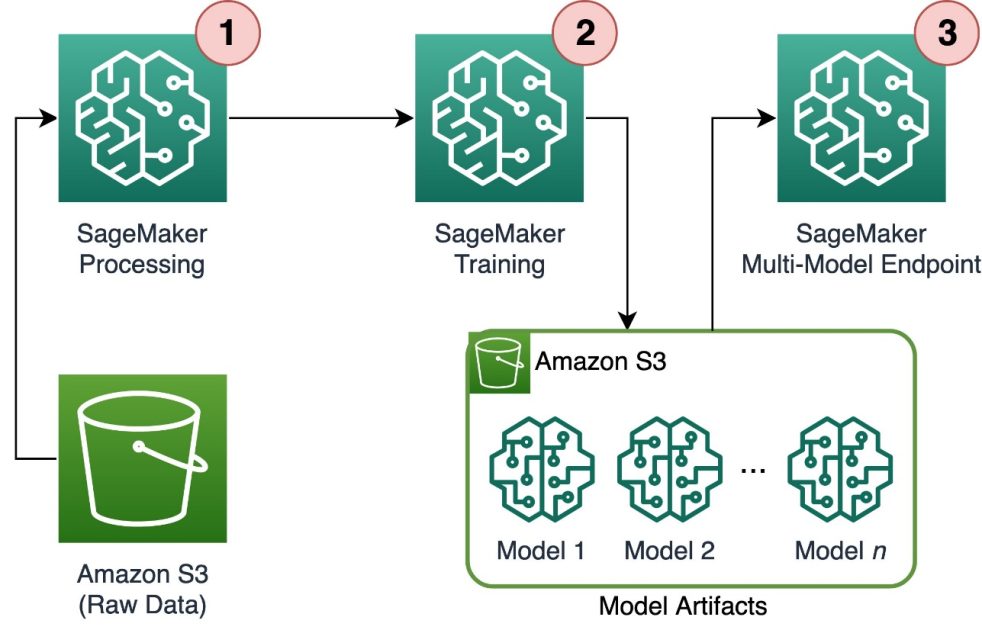

The next diagram illustrates the answer structure.

The workflow includes the following steps:

- We use SageMaker Processing to preprocess the data, create a separate CSV file for each customer, and store the files in Amazon Simple Storage Service (Amazon S3).

- The SageMaker training job is configured to read the output of the SageMaker Processing job and distribute it in a round-robin fashion to the training instances. Note that this can also be achieved with Amazon SageMaker Pipelines.

- The model artifacts are stored in Amazon S3 by the training job, and are served directly from the SageMaker MME.
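
The first step of this workflow, one CSV file per customer, can be sketched with the Python standard library. This is a hedged illustration rather than the notebook's processing script: the function name split_by_customer and the <customer_id>.csv output layout are assumptions. Inside a SageMaker Processing job, output_dir would be a local container path that you map to Amazon S3 with a ProcessingOutput; paths under /opt/ml/processing/ are the usual convention.

```python
import csv
import os
from collections import defaultdict


def split_by_customer(input_csv, output_dir):
    """Write one CSV file per customer_id found in input_csv.

    Hypothetical helper: the customer ID is encoded in the file name,
    so each output file keeps only the timestamp and consumption columns.
    """
    groups = defaultdict(list)
    with open(input_csv, newline="") as f:
        for row in csv.DictReader(f):
            groups[row["customer_id"]].append(row)

    os.makedirs(output_dir, exist_ok=True)
    written = []
    for customer_id, rows in sorted(groups.items()):
        path = os.path.join(output_dir, f"{customer_id}.csv")
        with open(path, "w", newline="") as out:
            writer = csv.DictWriter(out, fieldnames=["timestamp", "consumption"])
            writer.writeheader()
            for row in rows:
                writer.writerow({"timestamp": row["timestamp"],
                                 "consumption": row["consumption"]})
        written.append(path)
    return written
```

Writing one object per customer is what later lets the training step shard the data by S3 key, so that each customer's file lands on exactly one training instance.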

Scale training to thousands of models

Scaling the training of thousands of models is possible via the distribution parameter of the TrainingInput class in the SageMaker Python SDK, which allows you to specify how data is distributed across multiple training instances for a training job. There are three options for the distribution parameter: FullyReplicated, ShardedByS3Key, and ShardedByRecord. The ShardedByS3Key option means that the training data is sharded by S3 object key, with each training instance receiving a unique subset of the data, avoiding duplication. After SageMaker copies the data to the training containers, we can read the folder and file structure to train a unique model per customer file. The following is an example code snippet:
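
To see what ShardedByS3Key means for our 1,000 per-customer files, the following sketch simulates locally how the keys could be spread across training instances. SageMaker performs the real assignment itself; the round-robin order over sorted keys (mirroring the workflow description above) and the helper name shard_by_s3_key are assumptions. In the SageMaker Python SDK, the option itself is selected by passing distribution="ShardedByS3Key" to sagemaker.inputs.TrainingInput.

```python
def shard_by_s3_key(keys, instance_count):
    """Simulate ShardedByS3Key: every S3 key goes to exactly one instance.

    Assumption: keys are assigned round-robin in sorted order; the real
    assignment is done by SageMaker when it copies the data.
    """
    shards = [[] for _ in range(instance_count)]
    for i, key in enumerate(sorted(keys)):
        shards[i % instance_count].append(key)
    return shards


keys = [f"train/{customer_id}.csv" for customer_id in range(1000)]
shards = shard_by_s3_key(keys, instance_count=4)
print([len(shard) for shard in shards])  # [250, 250, 250, 250]
```

With four instances, each one receives 250 customer files and no file is copied to more than one instance, which is why the per-instance training loop can simply train one model per file it finds.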

Each SageMaker training job stores the model saved in the /opt/ml/model folder of the training container in a model.tar.gz archive, and then uploads it to Amazon S3 when the training job completes. Power users can also automate this process with SageMaker Pipelines. When multiple models are stored by the same training job, SageMaker creates a single model.tar.gz file containing all the trained models. This would mean that, in order to serve a model, we would first need to unpack the archive. To avoid this, we use checkpoints to save the state of individual models. SageMaker provides the functionality to copy checkpoints created during the training job to Amazon S3. The checkpoints need to be saved in a pre-specified location, with the default being /opt/ml/checkpoints. These checkpoints can be used to resume training at a later moment or as a model to deploy on an endpoint. For a high-level summary of how the SageMaker training platform manages storage paths for training datasets, model artifacts, checkpoints, and outputs between AWS Cloud storage and training jobs in SageMaker, refer to Amazon SageMaker Training Storage Folders for Training Datasets, Checkpoints, Model Artifacts, and Outputs.

The following code uses a fictitious model.save() function inside the train.py script containing the training logic:
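
As a runnable stand-in for that script, the sketch below assumes each customer's data arrives as <customer_id>.csv in the train channel, and replaces both Prophet and the fictitious model.save() with a hypothetical fit_mean_model that only stores the mean consumption. Each model is written to the checkpoint directory as its own <customer_id>.tar.gz, so the per-customer archives that SageMaker copies to Amazon S3 can be loaded by an MME without unpacking a combined archive. SM_CHANNEL_TRAIN and /opt/ml/checkpoints are standard SageMaker locations; the archive contents (a single model.json) are an assumption and must match what your inference container expects.

```python
import csv
import io
import json
import os
import tarfile

# SageMaker exposes each input channel's local path as SM_CHANNEL_<NAME>;
# the fallback is the default container path for a channel named "train".
TRAIN_DIR = os.environ.get("SM_CHANNEL_TRAIN", "/opt/ml/input/data/train")
CHECKPOINT_DIR = "/opt/ml/checkpoints"


def fit_mean_model(rows):
    """Hypothetical stand-in for Prophet: predicts the mean consumption."""
    values = [float(r["consumption"]) for r in rows]
    return {"mean": sum(values) / len(values)}


def train_all(train_dir, checkpoint_dir):
    """Train one model per customer file and archive each one separately."""
    os.makedirs(checkpoint_dir, exist_ok=True)
    archives = []
    for name in sorted(os.listdir(train_dir)):
        if not name.endswith(".csv"):
            continue
        customer_id = name[: -len(".csv")]
        with open(os.path.join(train_dir, name), newline="") as f:
            model = fit_mean_model(list(csv.DictReader(f)))
        # One <customer_id>.tar.gz per model, directly loadable by an MME
        payload = json.dumps(model).encode("utf-8")
        archive = os.path.join(checkpoint_dir, f"{customer_id}.tar.gz")
        with tarfile.open(archive, "w:gz") as tar:
            info = tarfile.TarInfo(name="model.json")
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
        archives.append(archive)
    return archives
```

In train.py, you would call train_all(TRAIN_DIR, CHECKPOINT_DIR) at the end of the script. On the estimator side, the checkpoint_s3_uri parameter tells SageMaker which S3 location to copy the checkpoint directory to.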

Scale inference to thousands of models with SageMaker MMEs

SageMaker MMEs allow you to serve multiple models at the same time from a single endpoint: you create a model whose container runs in multi-model mode and points to an S3 prefix containing the model artifacts, and then create an endpoint configuration and an endpoint for that model. There is no need to redeploy the endpoint every time you add a new model, because the endpoint automatically serves all models stored in the specified S3 path. This is achieved with Multi Model Server (MMS), an open-source framework for serving ML models that can be installed in containers to provide the front end that fulfills the requirements for the new MME container APIs. In addition, you can use other model servers, including TorchServe and Triton. MMS can be installed in your custom container via the SageMaker Inference Toolkit. To learn more about how to configure your Dockerfile to include MMS and use it to serve your models, refer to Build Your Own Container for SageMaker Multi-Model Endpoints.

The following code snippet shows how to create an MME using the SageMaker Python SDK:
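
The SageMaker Python SDK wraps MME creation in sagemaker.multidatamodel.MultiDataModel. To make the underlying request visible without calling AWS, the following sketch instead builds the keyword arguments for the lower-level CreateModel API as exposed by boto3. The key detail is Mode="MultiModel", which makes ModelDataUrl an S3 prefix holding many archives rather than a single artifact. The function name and all example values (model name, image URI, role ARN, bucket) are placeholders.

```python
def build_mme_create_model_request(model_name, image_uri, role_arn,
                                   model_data_prefix):
    """Build kwargs for boto3.client("sagemaker").create_model(...).

    Hypothetical helper: with Mode="MultiModel", ModelDataUrl is the S3
    prefix under which every <customer_id>.tar.gz archive lives.
    """
    if not model_data_prefix.startswith("s3://"):
        raise ValueError("model_data_prefix must be an s3:// URI")
    # Normalize to a trailing slash so the value reads as a prefix
    if not model_data_prefix.endswith("/"):
        model_data_prefix += "/"
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {
            "Image": image_uri,
            "ModelDataUrl": model_data_prefix,
            "Mode": "MultiModel",
        },
    }
```

You would pass the result to boto3.client("sagemaker").create_model(**request), followed by create_endpoint_config and create_endpoint to bring the endpoint up. Adding a customer later only means uploading another archive under the same prefix.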

When the MME is live, we can invoke it to generate predictions. Invocations can be done with any AWS SDK as well as with the SageMaker Python SDK, as shown in the following code snippet:
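
The following sketch shows the shape of an invocation through the low-level runtime API. TargetModel selects which archive under the endpoint's S3 prefix serves the request, assuming each customer's model was uploaded as <customer_id>.tar.gz. The JSON payload with a periods field is a hypothetical contract with the inference container, and build_invoke_request is an illustrative name.

```python
import json


def build_invoke_request(endpoint_name, customer_id, periods):
    """Build kwargs for boto3.client("sagemaker-runtime").invoke_endpoint(...).

    TargetModel is the archive path relative to the MME's S3 prefix;
    the body format is an assumed contract with the serving container.
    """
    return {
        "EndpointName": endpoint_name,
        "TargetModel": f"{customer_id}.tar.gz",
        "ContentType": "application/json",
        "Body": json.dumps({"periods": periods}),
    }
```

Pass the result to boto3.client("sagemaker-runtime").invoke_endpoint(**request). With the SageMaker Python SDK, the equivalent call is predictor.predict(data, target_model="42.tar.gz").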

The first time a model is called, it is loaded from Amazon S3 onto the instance, which can result in a cold start. Frequently used models are cached in memory and on disk to provide low-latency inference.
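
The cold-start behavior can be illustrated with a toy cache. SageMaker manages loading and unloading of models on MME instances itself; this sketch assumes a least-recently-used eviction policy and a fixed capacity counted in models, both simplifications, and ModelCache is a hypothetical class.

```python
from collections import OrderedDict


class ModelCache:
    """Toy simulation of MME model caching (assumption: LRU eviction)."""

    def __init__(self, capacity, loader):
        self.capacity = capacity
        self.loader = loader            # callable that "downloads" a model
        self.models = OrderedDict()     # oldest entry first
        self.cold_starts = 0

    def get(self, target_model):
        if target_model in self.models:
            self.models.move_to_end(target_model)  # mark as recently used
            return self.models[target_model]
        self.cold_starts += 1                       # not cached: load it
        model = self.loader(target_model)
        self.models[target_model] = model
        if len(self.models) > self.capacity:
            self.models.popitem(last=False)         # evict least recently used
        return model
```

Requesting models 0, 1, 0, 2, 1 with room for two models gives four cold starts: only the repeated call to 0 is served from the cache, and loading 2 evicts 1, which then has to be loaded again.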

Conclusion

SageMaker is a powerful and cost-effective platform for training and serving thousands of ML models. Its features, including SageMaker Processing, training jobs, and MMEs, enable organizations to efficiently train and serve thousands of models at scale, while also benefiting from the cost-saving advantages of using the AWS Cloud infrastructure. To learn more about how to use SageMaker for training and serving thousands of models, refer to Process data, Train a Model with Amazon SageMaker, and Host multiple models in one container behind one endpoint.

About the Authors

Davide Gallitelli is a Specialist Solutions Architect for AI/ML in the EMEA region. He is based in Brussels and works closely with customers throughout Benelux. He has been a developer since he was very young, starting to code at the age of seven. He started learning AI/ML at university, and has been in love with it ever since.

Maurits de Groot is a Solutions Architect at Amazon Web Services, based out of Amsterdam. He likes to work on machine learning-related topics and has a predilection for startups. In his spare time, he enjoys snowboarding and playing squash.
