From Unstructured to Structured Knowledge with LLMs

Sponsored Post

Authors: Michael Ortega and Geoffrey Angus

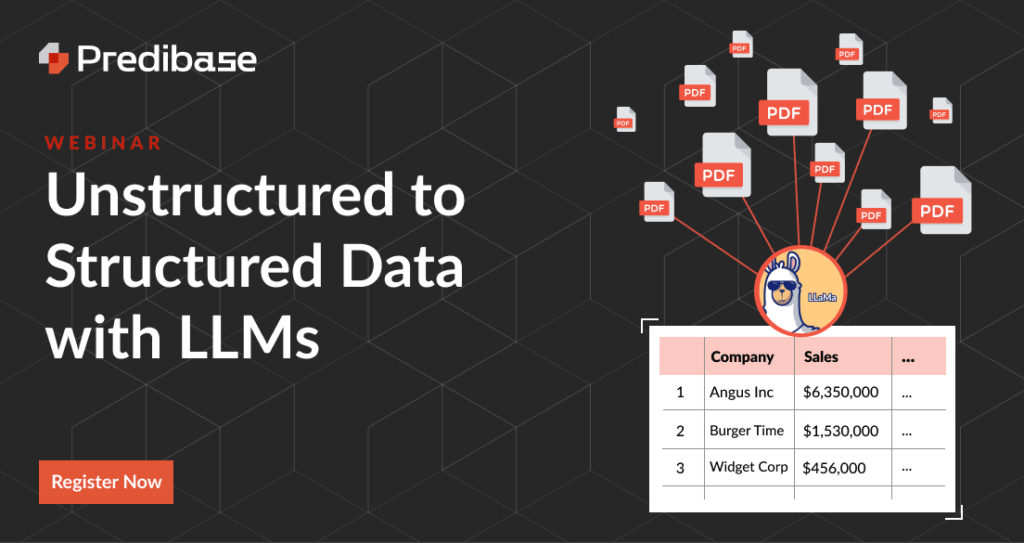

Be sure to register for our upcoming webinar to learn how to use large language models to extract insights from unstructured documents.

Thanks to ChatGPT, chat interfaces are how most users have interacted with LLMs. While this is fast, intuitive, and fun for a wide range of generative use cases (e.g. "ChatGPT, write me a joke about how many engineers it takes to write a blog post"), there are fundamental limitations to this interface that keep chat-based applications from going into production.

- Slow – chat interfaces are optimized to provide a low-latency experience. Such optimizations often come at the expense of throughput, making them unviable for large-scale analytics use cases.

- Imprecise – even after days of dedicated prompt iteration, LLMs are often prone to giving verbose responses to simple questions. While such responses are sometimes easier for humans to follow in chat-like interactions, they are often harder to parse and consume in broader software ecosystems.

- Limited support for analytics – even when connected to your private data (via an embedding index or otherwise), most LLMs deployed for chat simply can't ingest all of the context required for many classes of questions that data analysts typically ask.

The reality is that many of these LLM-powered search and Q&A systems aren't optimized for large-scale, production-grade analytics use cases.

The right approach: Generate structured insights from unstructured data with LLMs

Imagine you're a portfolio manager with a large collection of financial documents. You want to ask the following question: "Of these 10 potential investments, what was the highest revenue achieved by each company between 2000 and 2023?" An out-of-the-box LLM, even with an index retrieval system connected to your private data, would struggle to answer this question because of the volume of context required.

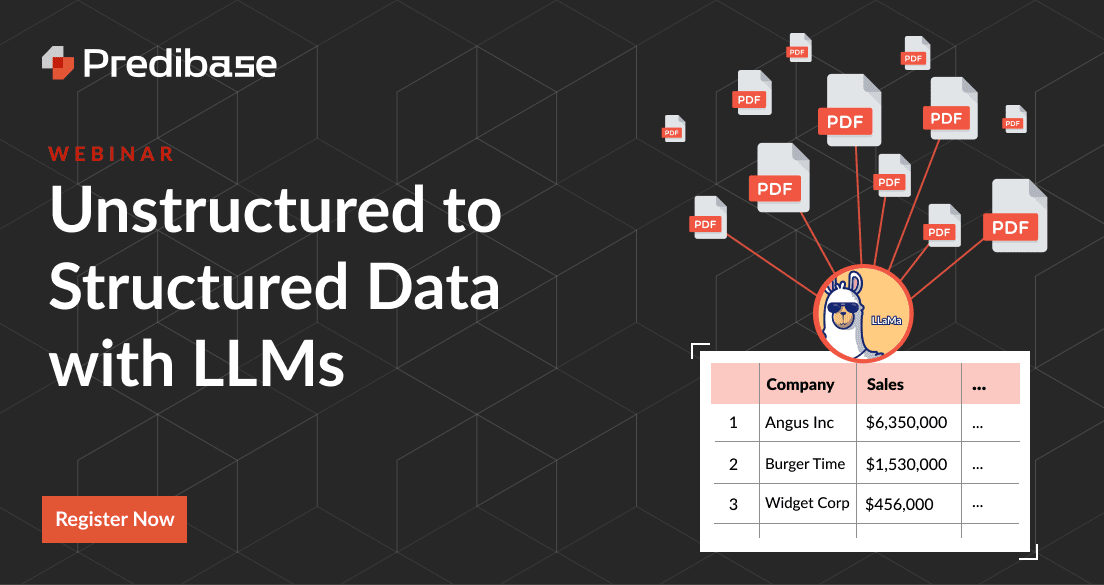

Fortunately, there's a better way. You can answer questions over your entire corpus faster by first using an LLM to convert your unstructured documents into structured tables in a single large batch job. Using this approach, the financial institution from our hypothetical above could extract structured data from a large set of financial PDFs into a table with a defined schema, and then quickly produce key statistics on its portfolio in ways that a chat-based LLM would struggle to.
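
The batch workflow described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' pipeline: `RevenueRecord`, `call_llm`, and the canned JSON in `FAKE_RESPONSES` are hypothetical stand-ins for a real schema and model call, and the companies and revenue figures are invented. What it shows is the shape of the approach: a fixed schema, one extraction call per document, rows loaded into a table (here an in-memory SQLite database via Python's standard `sqlite3` module), and an ordinary SQL aggregate that answers the portfolio question.

```python
import json
import sqlite3
from dataclasses import dataclass, fields


@dataclass
class RevenueRecord:
    """Hypothetical target schema: one row per company-year revenue figure."""
    company: str
    year: int
    revenue_usd: float
    source_doc: str   # citation: which PDF the value came from
    source_page: int  # citation: page within that PDF


# Hypothetical canned model output so the sketch runs offline; figures are invented.
FAKE_RESPONSES = {
    "acme_10k.pdf": '[{"company": "Acme", "year": 2019, "revenue_usd": 1.2e9, "source_page": 41},'
                    ' {"company": "Acme", "year": 2021, "revenue_usd": 1.5e9, "source_page": 44}]',
    "globex_annual.pdf": '[{"company": "Globex", "year": 2005, "revenue_usd": 8.0e8, "source_page": 12}]',
}


def build_prompt(doc_text: str) -> str:
    """Ask for a JSON array whose keys match the schema (source_doc is filled in by us)."""
    keys = [f.name for f in fields(RevenueRecord) if f.name != "source_doc"]
    return f"Extract a JSON array of objects with keys {keys} from:\n\n{doc_text}"


def call_llm(doc_id: str, prompt: str) -> str:
    """Hypothetical model call; replace with a real LLM client."""
    return FAKE_RESPONSES[doc_id]


def extract(doc_id: str, doc_text: str) -> list[RevenueRecord]:
    rows = json.loads(call_llm(doc_id, build_prompt(doc_text)))
    # Coerce to the schema's types so missing keys or non-numeric values raise
    # instead of silently polluting the table.
    return [
        RevenueRecord(str(r["company"]), int(r["year"]), float(r["revenue_usd"]),
                      doc_id, int(r["source_page"]))
        for r in rows
    ]


def run_batch(docs: dict[str, str]) -> sqlite3.Connection:
    """One pass over the corpus: extract every document and load the rows into a table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE revenue (company TEXT, year INTEGER, revenue_usd REAL,"
                 " source_doc TEXT, source_page INTEGER)")
    for doc_id, text in docs.items():
        conn.executemany(
            "INSERT INTO revenue VALUES (?, ?, ?, ?, ?)",
            [(r.company, r.year, r.revenue_usd, r.source_doc, r.source_page)
             for r in extract(doc_id, text)],
        )
    return conn


def highest_revenue(conn: sqlite3.Connection, start: int = 2000, end: int = 2023):
    """The portfolio question as a plain SQL aggregate over the extracted table."""
    return conn.execute(
        "SELECT company, MAX(revenue_usd) FROM revenue"
        " WHERE year BETWEEN ? AND ? GROUP BY company ORDER BY company",
        (start, end),
    ).fetchall()


conn = run_batch({"acme_10k.pdf": "<text of Acme 10-K>",
                  "globex_annual.pdf": "<text of Globex report>"})
print(highest_revenue(conn))  # → [('Acme', 1500000000.0), ('Globex', 800000000.0)]
```

Because every row keeps `source_doc` and `source_page`, each aggregated number can be traced back to the document it came from. Once the table exists, new questions cost a query rather than another pass of the whole corpus through the model.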

Going further, you could build net-new tabular ML models on top of the derived structured data for downstream data science tasks (e.g. based on these 10 risk factors, which company is most likely to default?). A smaller, task-specific ML model trained on the derived structured data can perform better and cost less to run than a chat-based LLM.
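
As a rough illustration of that downstream step, here is a small logistic-regression classifier in plain Python. Everything in it is an assumption for illustration: the two hypothetical features (`leverage_score`, `liquidity_risk_score`) stand in for the ten risk factors mentioned above, and the training rows, labels, and candidate companies are invented. Logistic regression is simply one reasonable choice of small tabular model, not necessarily what the authors use.

```python
import math

# Hypothetical features derived by the extraction job, one row per company:
# [leverage_score, liquidity_risk_score], scaled to [0, 1], higher = riskier.
# Label 1 means the company later defaulted. All values are invented.
X_train = [
    [0.1, 0.2], [0.2, 0.1], [0.2, 0.2],   # did not default
    [0.8, 0.9], [0.9, 0.8], [0.9, 0.9],   # defaulted
]
y_train = [0, 0, 0, 1, 1, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Probability of default under a logistic-regression model."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression with full-batch gradient descent on log-loss."""
    m, n = len(X), len(X[0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # d(log-loss)/dz for this row
            for j in range(n):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


w, b = train_logistic(X_train, y_train)

# Score hypothetical candidates and pick the one rated most likely to default.
candidates = {"Company A": [0.15, 0.25], "Company B": [0.85, 0.7], "Company C": [0.5, 0.45]}
scores = {name: predict_proba(w, b, x) for name, x in candidates.items()}
print(max(scores, key=scores.get))  # → Company B
```

In practice a library such as scikit-learn would replace the hand-written training loop; it is spelled out here only to keep the example dependency-free. A model this size trains and scores in milliseconds, which is the cost argument made above.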

Learn how to extract structured insights from your documents with LLMs

Want to learn how to put this approach into practice using state-of-the-art AI tools designed for developers? Join our upcoming webinar and live demo to learn how to:

- Define a schema of data to extract from a large corpus of PDFs

- Customize and use open-source LLMs to construct new tables with source citations

- Visualize and run predictive analytics on your extracted data

You'll have a chance to ask your questions live during our Q&A.