More accurate responses, export to Google Sheets

Let’s dig deeper into this new capability and how it’s helping Bard improve its responses.

Improved logic and reasoning abilities

Large language models (LLMs) are like prediction engines: when given a prompt, they generate a response by predicting what words are likely to come next. As a result, they’ve been extremely capable on language and creative tasks, but weaker in areas like reasoning and math. To solve more complex problems that call for advanced reasoning and logic, relying solely on LLM output isn’t enough.

Our new method allows Bard to generate and execute code to boost its reasoning and math abilities. This approach takes inspiration from a well-studied dichotomy in human intelligence, the separation of “System 1” and “System 2” thinking, notably covered in Daniel Kahneman’s book “Thinking, Fast and Slow.”

- System 1 thinking is fast, intuitive and effortless. When a jazz musician improvises on the spot or a touch-typist thinks about a word and watches it appear on the screen, they’re using System 1 thinking.

- System 2 thinking, by contrast, is slow, deliberate and effortful. When you’re carrying out long division or learning how to play an instrument, you’re using System 2.

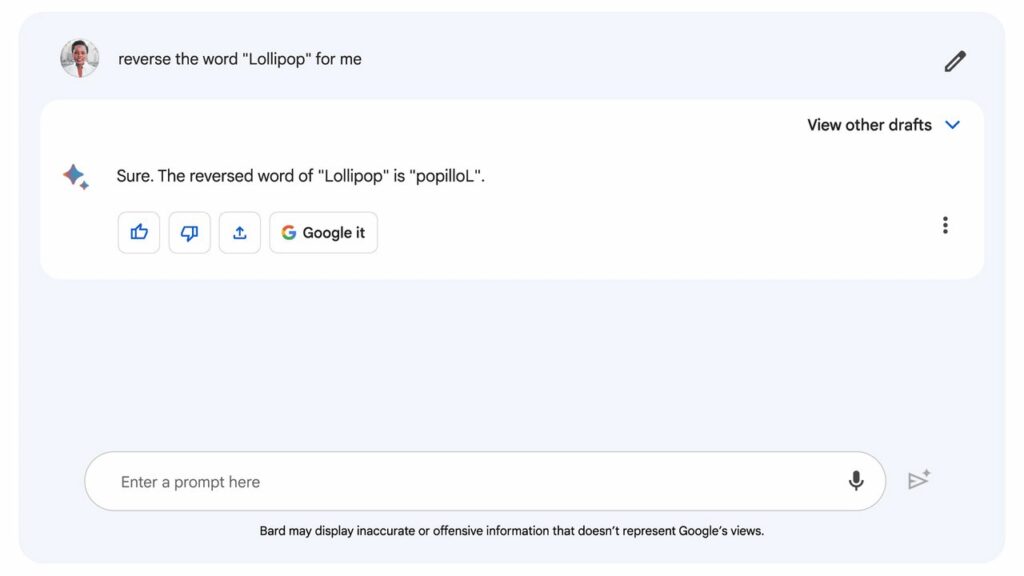

In this analogy, LLMs can be thought of as operating purely under System 1: producing text quickly but without deep thought. This leads to some incredible capabilities, but it can fall short in some surprising ways. (Imagine trying to solve a math problem using System 1 alone: You can’t stop and do the arithmetic, you just have to spit out the first answer that comes to mind.) Traditional computation closely aligns with System 2 thinking: It’s formulaic and inflexible, but the right sequence of steps can produce impressive results, such as solutions to long division.
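To make the “System 2” side of the analogy concrete, here is a minimal Python sketch of schoolbook long division. It is only an illustration of a fixed, step-by-step procedure; the function name `long_division` is our own and is not taken from Bard.

```python
from typing import Tuple


def long_division(dividend: int, divisor: int) -> Tuple[int, int]:
    """Schoolbook long division on non-negative integers.

    Works through the dividend one digit at a time, exactly as on paper,
    and returns (quotient, remainder).
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")

    quotient_digits = []
    remainder = 0
    for digit in str(dividend):
        # "Bring down" the next digit of the dividend.
        remainder = remainder * 10 + int(digit)
        # Record how many times the divisor fits, then keep what is left.
        quotient_digits.append(str(remainder // divisor))
        remainder %= divisor

    return int("".join(quotient_digits)), remainder


print(long_division(7425, 6))  # (1237, 3)
```

Each step is rigid and mechanical, but following the steps in order always yields the correct quotient and remainder, which is the kind of reliability a pure next-word predictor lacks.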

With this latest update, we’ve combined the capabilities of both LLMs (System 1) and traditional code (System 2) to help improve accuracy in Bard’s responses. Through implicit code execution, Bard identifies prompts that might benefit from logical code, writes it “under the hood,” executes it and uses the result to generate a more accurate response. So far, we’ve seen this method improve the accuracy of Bard’s responses to computation-based word and math problems in our internal challenge datasets by approximately 30%.
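The flow described above (identify a prompt, write code, execute it, use the result) can be sketched in a few lines of Python. This is a toy illustration under heavy assumptions, not Bard’s actual implementation: the helper names (`looks_computational`, `generate_code`, `execute`, `respond`) are hypothetical, and a simple regular expression stands in for the model’s judgment and code generation.

```python
import re

# Hypothetical pattern: one or more arithmetic operations between integers.
ARITHMETIC = re.compile(r"\d+(?:\s*[-+*/]\s*\d+)+")


def looks_computational(prompt: str) -> bool:
    """Stand-in for the model deciding that code would help (assumption)."""
    return ARITHMETIC.search(prompt) is not None


def generate_code(prompt: str) -> str:
    """Stand-in for the model writing code 'under the hood' (assumption)."""
    expression = ARITHMETIC.search(prompt).group(0)
    return f"result = {expression}"


def execute(code: str):
    """Run the generated code and return its result.

    Emptying __builtins__ is NOT a real sandbox; a production system
    would need proper isolation.
    """
    namespace = {}
    exec(code, {"__builtins__": {}}, namespace)
    return namespace["result"]


def respond(prompt: str) -> str:
    """Route the prompt: plain text generation or code-backed answer."""
    if not looks_computational(prompt):
        return "(free-text answer from the language model)"
    value = execute(generate_code(prompt))
    return f"The answer is {value}."


print(respond("What is 123 * 457?"))       # The answer is 56211.
print(respond("Write a poem about jazz"))  # (free-text answer from the language model)
```

The point of the sketch is the division of labor: the language side decides when computation is needed and phrases the final answer, while the arithmetic itself is delegated to executed code.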

Even with these improvements, Bard won’t always get it right. For example, Bard might not generate code to help the prompt response, the code it generates might be wrong, or Bard may not include the executed code in its response. With all that said, this improved ability to respond with structured, logic-driven capabilities is an important step toward making Bard even more helpful. Stay tuned for more.