Falcon LLM: The New King of Open-Source LLMs

Image by Editor

We’ve been seeing large language models (LLMs) released every week, with more and more chatbots for us to use. However, it can be hard to figure out which is the best, how each one is progressing, and which one is most useful.

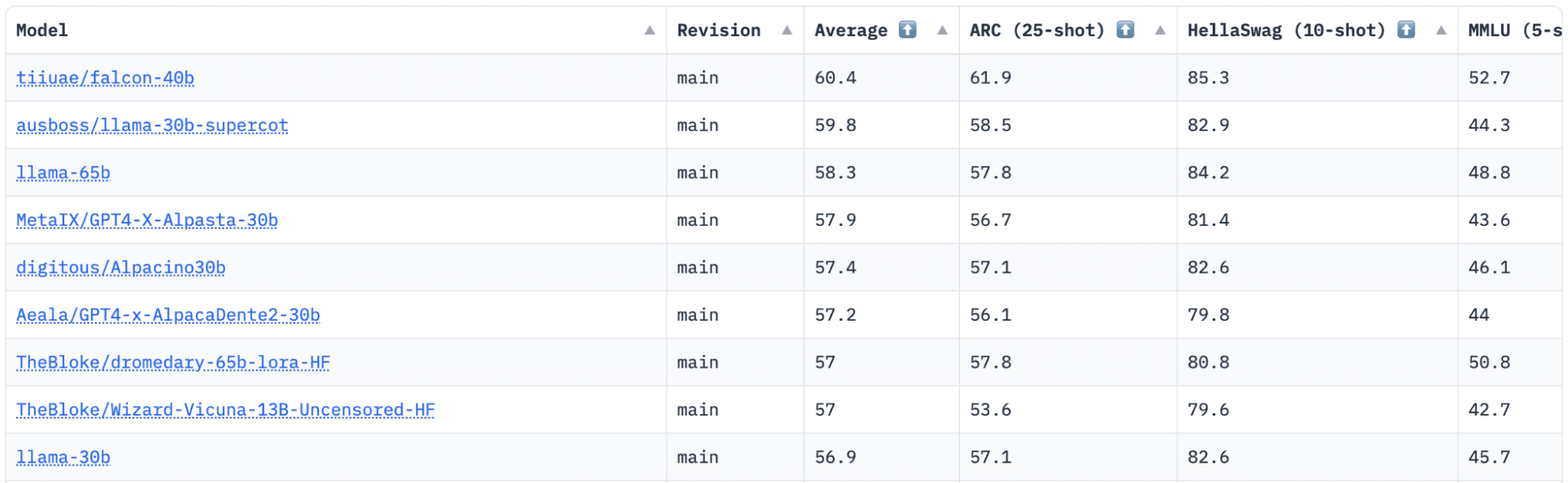

Hugging Face has an Open LLM Leaderboard which tracks, evaluates and ranks LLMs as they are released. It uses EleutherAI’s Language Model Evaluation Harness, a unified framework for testing generative language models on a range of different evaluation tasks.

Until recently, LLaMA (Large Language Model Meta AI) sat at the top of the leaderboard, but it has now been dethroned by a new pre-trained LLM: Falcon 40B.

Image by Hugging Face Open LLM Leaderboard

Falcon LLM was developed and built by the Technology Innovation Institute (TII), an organization that is part of the Abu Dhabi Government’s Advanced Technology Research Council. The government oversees technology research across the whole of the United Arab Emirates, where the team of scientists, researchers and engineers focuses on delivering transformative technologies and discoveries in science.

Falcon-40B is a foundational LLM with 40B parameters, trained on one trillion tokens. It is an autoregressive decoder-only model, meaning it is trained to predict the next token in a sequence given the previous tokens. The GPT models are a good example of this architecture.
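To make the autoregressive idea concrete, here is a minimal sketch of the generation loop: predict a token from the prefix, append it, and repeat until an end token appears. The “model” is a hypothetical stand-in (bigram counts from a three-sentence toy corpus), not Falcon. A real decoder-only transformer conditions on the entire prefix through attention, while this toy only looks at the last token. The loop structure is the part that carries over.

```python
from collections import Counter, defaultdict

# Hypothetical toy "language model": bigram counts from a tiny corpus.
# A real decoder-only model attends over the whole prefix; this toy only
# uses the last token, but the autoregressive loop below is the same idea.
corpus = "the falcon flies high . the falcon hunts . the giraffe eats leaves .".split()
bigrams = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    bigrams[prev][nxt] += 1

def next_token(prefix):
    """Return the most likely next token given the tokens so far (ties: first seen)."""
    counts = bigrams[prefix[-1]]
    return counts.most_common(1)[0][0] if counts else "."

def generate(prompt, max_new_tokens=10, eos="."):
    """Greedy autoregressive decoding: each prediction is fed back as input."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # predict from everything generated so far
        tokens.append(tok)        # append it and condition on it next step
        if tok == eos:
            break
    return " ".join(tokens)

print(generate("the"))  # the falcon flies high .
```

Swapping the greedy `most_common` choice for sampling is exactly what options like `do_sample` control in real generation APIs.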

Falcon’s architecture has been shown to significantly outperform GPT-3 while using only 75% of the training compute budget, and it requires only a fifth of the compute at inference time.
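These ratios can be roughly sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes two common rules of thumb (training cost ≈ 6 × parameters × tokens FLOPs, inference ≈ 2 × parameters FLOPs per generated token) and GPT-3’s published 175B parameters and 300B training tokens. It is an approximation, not TII’s own accounting.

```python
# Rules of thumb (assumptions, not TII's method):
#   training FLOPs  ~ 6 * N * D   (N = parameters, D = training tokens)
#   inference FLOPs ~ 2 * N per generated token
def training_flops(params, tokens):
    return 6 * params * tokens

def inference_flops_per_token(params):
    return 2 * params

falcon_params, falcon_tokens = 40e9, 1e12  # Falcon-40B figures from the text
gpt3_params, gpt3_tokens = 175e9, 300e9    # GPT-3 figures from its paper

train_ratio = training_flops(falcon_params, falcon_tokens) / training_flops(gpt3_params, gpt3_tokens)
infer_ratio = inference_flops_per_token(falcon_params) / inference_flops_per_token(gpt3_params)

print(f"training compute vs GPT-3:  {train_ratio:.0%}")  # 76%
print(f"inference compute vs GPT-3: {infer_ratio:.0%}")  # 23%
```

Under these assumptions the training estimate lands close to the quoted 75%. The inference estimate reflects that per-token cost scales roughly with parameter count, so a 40B model needs well under a third of what a 175B model does.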

Data quality at scale was an important focus for the team at the Technology Innovation Institute, as LLMs are known to be highly sensitive to the quality of their training data. The team built a data pipeline that scaled to tens of thousands of CPU cores for fast processing, and it was able to extract high-quality content from the web using extensive filtering and deduplication.
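At this scale, deduplication usually has to catch near-duplicates as well as exact copies. MinHash is one standard technique for that: it estimates the Jaccard similarity of two documents’ word n-gram sets from short signatures. The sketch below is a minimal single-machine illustration of MinHash, not TII’s pipeline. The shingle size (3 words), the 128 seeded BLAKE2b hash functions, and the sample documents are arbitrary choices for the example.

```python
import hashlib

def shingles(text, n=3):
    """Split a document into overlapping word n-grams ("shingles")."""
    words = text.lower().split()
    grams = {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}
    return grams or {" ".join(words)}  # very short docs become one shingle

def minhash_signature(text, num_perm=128):
    """For each of num_perm seeded hash functions, keep the minimum hash over all shingles."""
    sh = shingles(text)
    signature = []
    for seed in range(num_perm):
        signature.append(min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{s}".encode(), digest_size=8).digest(), "big")
            for s in sh
        ))
    return signature

def estimated_jaccard(sig_a, sig_b):
    """The fraction of positions where the minimums agree estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)

a = "the quick brown fox jumps over the lazy dog near the old river bank"
b = "the quick brown fox jumps over the lazy dog near the old river shore"
c = "falcon models were trained on refined web data from common crawl dumps"
sig_a, sig_b, sig_c = (minhash_signature(t) for t in (a, b, c))

print(estimated_jaccard(sig_a, sig_b))  # high: near-duplicates (true Jaccard is 11/13)
print(estimated_jaccard(sig_a, sig_c))  # near 0: no shared shingles
```

Each signature is computed independently per document, so the work spreads easily across many CPU cores. At web scale, signatures are then typically bucketed with locality-sensitive hashing so that only likely duplicates are compared pairwise.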

There is also a smaller version, Falcon-7B, which has 7B parameters and was trained on 1,500B tokens. Falcon-40B-Instruct and Falcon-7B-Instruct models are available as well if you are looking for a ready-to-use chat model.

What can Falcon 40B do?

Similar to other LLMs, Falcon 40B can:

- Generate creative content
- Solve complex problems
- Handle customer service operations
- Power virtual assistants
- Translate between languages
- Perform sentiment analysis
- Reduce and automate “repetitive” work
- Help Emirati companies become more efficient

How was Falcon 40B trained?

Training on 1 trillion tokens required 384 GPUs on AWS for over two months. Those 1,000B tokens came from RefinedWeb, a massive English web dataset built by TII.
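The figures in this paragraph imply some rough cluster-level numbers. The sketch assumes that “over two months” means 60 days of continuous training, so the GPU-hours below are a lower bound and the throughput an upper bound. It ignores restarts and any time not spent training.

```python
# Figures from the text; 60 days is an assumption for "over two months".
gpus = 384
days = 60
tokens = 1e12

gpu_hours = gpus * days * 24
tokens_per_second = tokens / (days * 24 * 3600)
tokens_per_second_per_gpu = tokens_per_second / gpus

print(f"{gpu_hours:,} GPU-hours")                                # 552,960 GPU-hours
print(f"{tokens_per_second:,.0f} tokens/s across the cluster")   # 192,901 tokens/s
print(f"{tokens_per_second_per_gpu:,.0f} tokens/s per GPU")      # 502 tokens/s
```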

The pretraining data consisted of a collection of public data from the web, gathered using CommonCrawl. The team went through an extensive filtering phase to remove machine-generated text and adult content, and also deduplicated the data, assembling a pretraining dataset of nearly five trillion tokens.
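A filtering-and-deduplication stage can be sketched as a few cheap heuristics followed by exact deduplication on a hash of normalized text. Every threshold and the blocklist here are hypothetical placeholders chosen for illustration; TII’s actual filters are far more extensive and are not reproduced here.

```python
import hashlib
import re

# Hypothetical blocklist and thresholds, for illustration only.
BLOCKLIST = ("lorem ipsum", "click here to win")
MIN_WORDS = 5
MAX_SYMBOL_RATIO = 0.1

def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies hash identically."""
    return re.sub(r"\s+", " ", text.lower()).strip()

def passes_filters(text):
    """Cheap quality heuristics: minimum length, symbol density, blocklisted phrases."""
    if len(text.split()) < MIN_WORDS:
        return False
    symbols = sum(not (ch.isalnum() or ch.isspace()) for ch in text)
    if symbols / len(text) > MAX_SYMBOL_RATIO:
        return False
    lowered = text.lower()
    return not any(phrase in lowered for phrase in BLOCKLIST)

def filter_and_dedup(docs):
    """Keep the first copy of each document that survives the filters."""
    seen, kept = set(), []
    for doc in docs:
        if not passes_filters(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept

docs = [
    "Falcon is a family of open large language models from TII.",
    "falcon is a family of   open large language models from TII.",  # duplicate once normalized
    "Too short.",                                                     # fails the length check
    "Click here to win a free prize now, limited offer today!",       # blocklisted phrase
    "!!!! $$$$ #### @@@@ %%%% ^^^^ &&&& ****",                        # mostly symbols
    "RefinedWeb is a large English web dataset built from CommonCrawl.",
]
print(filter_and_dedup(docs))  # keeps only the first and last documents
```

Exact hashing only removes copies that match after normalization. Near-duplicates need a fuzzy method such as MinHash on top.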

Built on top of CommonCrawl, the RefinedWeb dataset has been shown to produce models that perform better than models trained on curated datasets. RefinedWeb is also multimodal-friendly.

Once it was ready, Falcon was validated against open-source benchmarks such as EAI Harness, HELM, and BigBench.

TII has open-sourced Falcon LLM, making Falcon 40B and 7B more accessible to researchers and developers by releasing them under the Apache License, Version 2.0.

The LLM was previously available for research and commercial use only under more restrictive licensing terms. It has now become open source to meet the global demand for inclusive access to AI, and it is free of royalties and commercial-use restrictions, as the UAE is committed to tackling the challenges and pushing the boundaries of AI, given the significant role it will play in the future.

The aim is to cultivate an ecosystem of collaboration, innovation, and knowledge sharing in the world of AI, and the Apache 2.0 license supports this with well-understood, permissive terms for using, modifying, and distributing the software.

If you want to try out a lighter alternative to Falcon-40B that is better suited to following generic instructions in a chatbot style, you will want to use Falcon-7B-Instruct.

So let’s get started…

If you haven’t already, install the following packages:

```
!pip install transformers
!pip install einops
!pip install accelerate
!pip install xformers
```

Once you have installed these packages, you can move on to running the code provided for Falcon-7B-Instruct:

```python
from transformers import AutoTokenizer
import transformers
import torch

model = "tiiuae/falcon-7b-instruct"

tokenizer = AutoTokenizer.from_pretrained(model)
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    torch_dtype=torch.bfloat16,  # bfloat16 weights roughly halve memory vs float32
    trust_remote_code=True,
    device_map="auto",           # let accelerate place the model on available devices
)

sequences = pipeline(
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. Giraftron believes all other animals are irrelevant when compared to the glorious majesty of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
    max_length=200,          # total length in tokens, prompt included
    do_sample=True,          # sample tokens instead of greedy decoding
    top_k=10,                # only sample from the 10 most likely next tokens
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```
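The `do_sample=True` and `top_k=10` arguments make generation sample each new token from the 10 highest-scoring candidates, instead of always taking the single most likely one. The plain-Python sketch below illustrates top-k sampling on a made-up list of logits. It is a conceptual illustration, not how Transformers implements it internally, since the library works on tensors and can chain other logits processors.

```python
import math
import random

def top_k_sample(logits, k, rng=random):
    """Sample one token index, considering only the k highest-scoring logits."""
    # Indices of the k largest logits; everything else gets zero probability.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the survivors (subtracting the max keeps exp() stable).
    best = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - best) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]

rng = random.Random(0)
logits = [2.0, 1.0, 0.5, -1.0, 3.0]  # made-up scores for a 5-token vocabulary

print(top_k_sample(logits, k=1, rng=rng))  # 4: with k=1 this is just greedy decoding
draws = {top_k_sample(logits, k=2, rng=rng) for _ in range(100)}
print(draws <= {0, 4})  # True: only the two best-scoring tokens can ever be drawn
```

A small `k` keeps output focused; a larger `k` allows more varied, and riskier, continuations.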

Standing as the best open-source model available, Falcon has taken LLaMA’s crown, and people are impressed by its strongly optimized architecture, its permissive open-source license, and its availability in two sizes: 40B and 7B parameters.

Have you given it a try? If you have, let us know what you think in the comments.

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence does or can benefit the longevity of human life. A keen learner, she seeks to broaden her tech knowledge and writing skills while helping guide others.