Amazon SageMaker with TensorBoard: An overview of a hosted TensorBoard experience

Today, data scientists who are training deep learning models need to identify and remediate model training issues to meet accuracy targets for production deployment, and they need a way to use standard tools for debugging model training. In the data scientist community, TensorBoard is a popular toolkit that lets data scientists visualize and analyze various aspects of their machine learning (ML) models and training processes. It provides a suite of tools for visualizing training metrics, examining model architectures, exploring embeddings, and more. The TensorFlow and PyTorch projects both endorse and use TensorBoard in their official documentation and examples.

Amazon SageMaker with TensorBoard is a capability that brings the visualization tools of TensorBoard to SageMaker. Integrated with SageMaker training jobs and domains, it gives SageMaker domain users access to TensorBoard data and helps them perform model debugging tasks using the SageMaker TensorBoard visualization plugins. When they create a SageMaker training job, domain users can enable TensorBoard using the SageMaker Python SDK or the Boto3 API. SageMaker with TensorBoard is supported by the SageMaker Data Manager plugin, with which domain users can access many training jobs in one place within the TensorBoard application.

In this post, we demonstrate how to set up a training job with TensorBoard in SageMaker using the SageMaker Python SDK, access SageMaker TensorBoard, explore training output data visualized in TensorBoard, and delete unused TensorBoard applications.

Solution overview

A typical deep learning training job in SageMaker consists of two main steps: preparing a training script and configuring a SageMaker training job launcher. In this post, we walk you through the changes required to collect TensorBoard-compatible data from SageMaker training.

Prerequisites

To start using SageMaker with TensorBoard, you need to set up a SageMaker domain with an Amazon VPC under an AWS account. Each individual user needs a domain user profile to access TensorBoard on SageMaker, and the AWS Identity and Access Management (IAM) execution role needs a minimum set of permissions, including the following:

- sagemaker:CreateApp

- sagemaker:DeleteApp

- sagemaker:DescribeTrainingJob

- sagemaker:Search

- s3:GetObject

- s3:ListBucket
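As a sketch, the permissions above can be expressed as an IAM policy document. The helper `build_tensorboard_policy` is hypothetical, and the wildcard `Resource` is a placeholder assumption: it covers both the bucket-level `s3:ListBucket` and the object-level `s3:GetObject`, but in practice you would scope resources to your domain, training jobs, and S3 bucket ARNs.

```python
import json

# Minimum actions listed above for SageMaker with TensorBoard.
TENSORBOARD_ACTIONS = [
    "sagemaker:CreateApp",
    "sagemaker:DeleteApp",
    "sagemaker:DescribeTrainingJob",
    "sagemaker:Search",
    "s3:GetObject",
    "s3:ListBucket",
]


def build_tensorboard_policy(resource="*"):
    """Return an IAM policy document (as a dict) allowing the actions above.

    Hypothetical helper: the default wildcard resource is a placeholder and
    should be narrowed to specific ARNs for real use.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(TENSORBOARD_ACTIONS),
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    # Print JSON that could be attached to the execution role as an inline policy.
    print(json.dumps(build_tensorboard_policy(), indent=2))
```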

For more information on how to set up a SageMaker domain and user profiles, see Onboard to Amazon SageMaker Domain Using Quick setup and Add and Remove User Profiles.

Directory structure

When using Amazon SageMaker Studio, you can organize the directory structure as follows:

- script/train.py is your training script.

- simple_tensorboard.ipynb is the notebook that launches the SageMaker training job.

Modify your training script

You can use any of the following tools to collect tensors and scalars: TensorBoardX, TensorFlow Summary Writer, PyTorch Summary Writer, or Amazon SageMaker Debugger. In each case, specify the data output path as the log directory in the training container (log_dir). In this sample code, we use TensorFlow to train a simple, fully connected neural network for a classification task. For other options, refer to Prepare a training job with a TensorBoard output data configuration. In the train() function, we use the tensorflow.keras.callbacks.TensorBoard tool to collect tensors and scalars, specify /opt/ml/output/tensorboard as the log directory in the training container, and pass the callback to the callbacks argument of model training.
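The following is a minimal sketch of script/train.py under stated assumptions: it trains a small fully connected classifier with tf.keras on synthetic data (the dataset, layer sizes, and epoch count are placeholders, not the original sample's values) and attaches tf.keras.callbacks.TensorBoard with LOG_DIR set to /opt/ml/output/tensorboard. The fallback to a local ./tensorboard-logs directory when /opt/ml does not exist is an added assumption for running the script outside a SageMaker container.

```python
import os

import numpy as np
import tensorflow as tf

# Log directory inside the SageMaker training container. This value must match
# the container-local output path in the launcher's TensorBoard output config.
LOG_DIR = "/opt/ml/output/tensorboard"


def make_data(n_samples=1024, n_features=20, seed=0):
    """Synthetic binary classification data (placeholder for a real dataset)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(n_samples, n_features)).astype("float32")
    y = (x[:, 0] + x[:, 1] > 0).astype("int64")
    return x, y


def create_model(n_features=20, n_classes=2):
    """A simple, fully connected network for classification."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(n_features,)),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(n_classes, activation="softmax"),
    ])


def train(log_dir=LOG_DIR, epochs=5):
    x, y = make_data()
    split = int(0.8 * len(x))
    x_train, y_train = x[:split], y[:split]
    x_val, y_val = x[split:], y[split:]

    model = create_model(n_features=x.shape[1])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])

    # Writes loss/accuracy scalars, the model graph, and (with
    # histogram_freq=1) weight histograms every epoch under log_dir.
    tensorboard_callback = tf.keras.callbacks.TensorBoard(
        log_dir=log_dir, histogram_freq=1)

    model.fit(x_train, y_train,
              epochs=epochs,
              validation_data=(x_val, y_val),
              callbacks=[tensorboard_callback],
              verbose=2)
    return model


if __name__ == "__main__":
    # /opt/ml exists inside SageMaker training containers; elsewhere we fall
    # back to a local directory (assumption, for local testing only).
    train(log_dir=LOG_DIR if os.path.isdir("/opt/ml") else "./tensorboard-logs")
```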

Construct a SageMaker training launcher with a TensorBoard data configuration

Use sagemaker.debugger.TensorBoardOutputConfig while configuring a SageMaker framework estimator. It maps the Amazon Simple Storage Service (Amazon S3) bucket you specify for saving TensorBoard data to the local path in the training container (for example, /opt/ml/output/tensorboard). You can use a different container local output path. However, it must be consistent with the value of the LOG_DIR variable specified in the previous step, so that SageMaker can find the local path in the training container and save the TensorBoard data to the S3 output bucket.
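If you launch jobs with the Boto3 API instead of the SageMaker Python SDK, the same mapping is expressed by the TensorBoardOutputConfig field of a CreateTrainingJob request, which has LocalPath and S3OutputPath keys. This sketch builds only that fragment and checks the consistency requirement described above. The helper name, bucket, and prefix are hypothetical, and the other required CreateTrainingJob fields (algorithm specification, role, resource config, and so on) are omitted.

```python
import posixpath

# Must equal LOG_DIR in the training script.
LOG_DIR = "/opt/ml/output/tensorboard"


def tensorboard_output_config(bucket, prefix, local_path=LOG_DIR,
                              script_log_dir=LOG_DIR):
    """Build the TensorBoardOutputConfig fragment of a CreateTrainingJob request.

    Hypothetical helper. Raises ValueError if the container-local path differs
    from the directory the training script writes to, because SageMaker would
    then not find the TensorBoard data to upload.
    """
    if posixpath.normpath(local_path) != posixpath.normpath(script_log_dir):
        raise ValueError(
            f"LocalPath {local_path!r} does not match the script's "
            f"log directory {script_log_dir!r}")
    return {
        "LocalPath": local_path,
        "S3OutputPath": f"s3://{bucket}/{prefix.strip('/')}/tensorboard",
    }
```

The returned dict would be passed as `TensorBoardOutputConfig=...` to `boto3.client("sagemaker").create_training_job(...)`, alongside the other required parameters.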

Next, pass the TensorBoardOutputConfig object to the tensorboard_output_config parameter of the estimator class. Preparing a TensorFlow estimator with the TensorBoard output configuration takes four pieces of code: boilerplate setup, the training container settings, the TensorBoard configuration, and the call that launches the training job.

Access TensorBoard on SageMaker

You can access TensorBoard in two ways: programmatically, using the sagemaker.interactive_apps.tensorboard module to generate the URL, or through the TensorBoard landing page on the SageMaker console. After you open TensorBoard, SageMaker runs the TensorBoard plugin and automatically finds and loads all training job output data in a TensorBoard-compatible file format from the S3 buckets paired with training jobs, either during or after training.

With the programmatic method, the sagemaker.interactive_apps.tensorboard module autogenerates the URL to the TensorBoard landing page and returns a message containing a URL that opens that page.

To open TensorBoard from the SageMaker console, refer to How to access TensorBoard on SageMaker.

When you open the TensorBoard application, it opens on the SageMaker Data Manager tab. The following screenshot shows the full view of the SageMaker Data Manager tab in the TensorBoard application.

On the SageMaker Data Manager tab, you can select any training job and load its TensorBoard-compatible training output data from Amazon S3.

- In the Add Training Job section, use the check boxes to choose the training jobs whose data you want to pull and visualize for debugging.

- Choose Add Selected Jobs.

The selected jobs should appear in the Tracked Training Jobs section.

Refresh the viewer by choosing the refresh icon in the upper-right corner. The visualization tabs should appear after the job data is successfully loaded.
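If a job does not show any visualization tabs, you can check from code whether it wrote TensorBoard files at all: look up the job's TensorBoard S3 output path with DescribeTrainingJob and list the event files under it. This sketch is a standalone check, not the plugin's implementation. It assumes the job was created with a TensorBoard output configuration and that the caller has the sagemaker:DescribeTrainingJob and s3:ListBucket permissions. The helper names are hypothetical, and treating keys whose file name starts with events.out.tfevents as TensorBoard data is a simplifying assumption.

```python
from urllib.parse import urlparse


def is_event_file(key):
    """True for TensorBoard event files, which are named events.out.tfevents.*"""
    return key.rsplit("/", 1)[-1].startswith("events.out.tfevents")


def split_s3_uri(uri):
    """Split s3://bucket/prefix into (bucket, prefix)."""
    parsed = urlparse(uri)
    return parsed.netloc, parsed.path.lstrip("/")


def list_tensorboard_files(sagemaker_client, s3_client, training_job_name):
    """List S3 keys of TensorBoard event files written by a training job."""
    job = sagemaker_client.describe_training_job(TrainingJobName=training_job_name)
    config = job.get("TensorBoardOutputConfig")
    if config is None:
        return []  # the job was launched without a TensorBoard output config
    bucket, prefix = split_s3_uri(config["S3OutputPath"])
    keys = []
    # Walk every object under the prefix, page by page.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            if is_event_file(obj["Key"]):
                keys.append(obj["Key"])
    return keys
```

With real clients this would be called as `list_tensorboard_files(boto3.client("sagemaker"), boto3.client("s3"), "your-training-job-name")`; passing the clients in keeps the function easy to test with stubs.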

Explore training output data visualized in TensorBoard

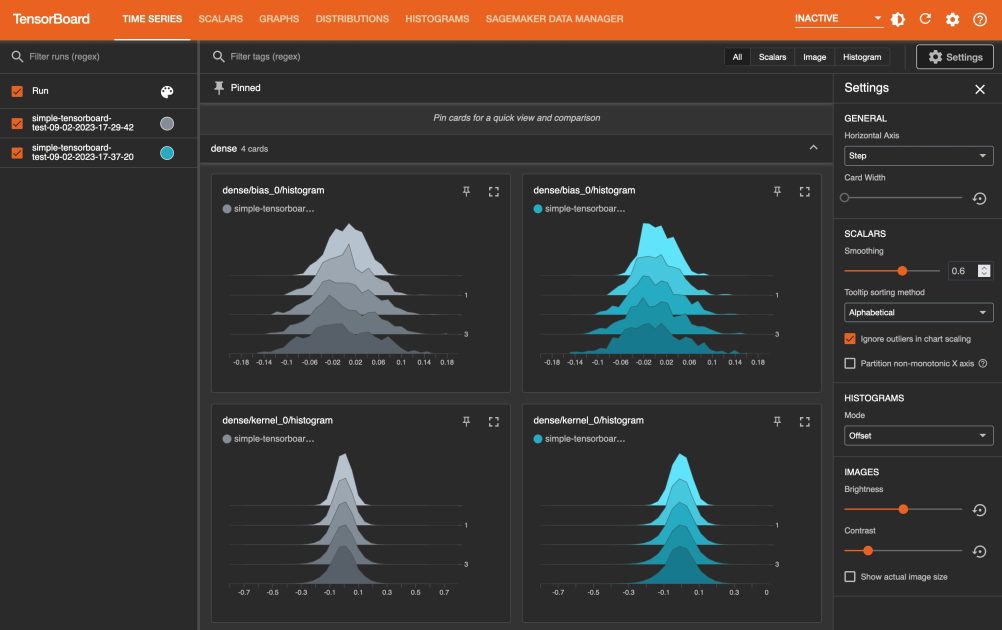

On the Time Series tab and other graphics-based tabs, you can see the list of Tracked Training Jobs in the left pane. You can also use the check boxes of the training jobs to show or hide visualizations. The TensorBoard plugins are activated dynamically, depending on how you set up your training script to include summary writers and pass callbacks for tensor and scalar collection, and the graphics tabs appear accordingly. The following screenshots show example views of each tab with visualizations of the collected metrics of two training jobs. They cover the time series, scalar, graph, distribution, and histogram plugins.

The following screenshot shows the Time Series tab view.

The following screenshot shows the Scalars tab view.

The following screenshot shows the Graphs tab view.

The following screenshot shows the Distributions tab view.

The following screenshot shows the Histograms tab view.
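Which tabs appear depends on what the training script logs. As an illustration that is not part of the original sample, this sketch writes one scalar series and one histogram series directly with TensorFlow's tf.summary API: scalars feed the Time Series and Scalars tabs, and histograms feed the Distributions and Histograms tabs. The metric names and values are made up, and write_summaries is a hypothetical helper.

```python
import numpy as np
import tensorflow as tf


def write_summaries(log_dir, steps=10, seed=0):
    """Write a toy scalar and a toy histogram for each step to log_dir."""
    rng = np.random.default_rng(seed)
    writer = tf.summary.create_file_writer(log_dir)
    with writer.as_default():
        for step in range(steps):
            # Scalar: a made-up, decreasing "loss" curve.
            tf.summary.scalar("toy/loss", 1.0 / (step + 1), step=step)
            # Histogram: samples whose mean drifts upward with the step.
            values = rng.normal(loc=0.1 * step, size=1000).astype("float32")
            tf.summary.histogram("toy/activations", values, step=step)
    writer.flush()
    writer.close()
```

In a SageMaker training job you would pass /opt/ml/output/tensorboard (or whatever LOG_DIR your TensorBoard output configuration uses) as log_dir, so the event files land where SageMaker uploads them from.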

Clean up

After you are done monitoring and experimenting with jobs in TensorBoard, shut down the TensorBoard application:

- On the SageMaker console, choose Domains in the navigation pane.

- Choose your domain.

- Choose your user profile.

- Under Apps, choose Delete App for the TensorBoard row.

- Choose Yes, delete app.

- Enter delete in the text box, then choose Delete.

A message should appear at the top of the page: “Default is being deleted”.
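The same cleanup can be scripted with the Boto3 SageMaker client's list_apps and delete_app operations. This requires the sagemaker:DeleteApp permission listed in the prerequisites, plus sagemaker:ListApps, which that list does not include. The sketch deletes every TensorBoard app of one user profile that is not already deleted or being deleted; the helper name is hypothetical, and the domain ID and user profile name in the usage line are placeholders.

```python
def delete_tensorboard_apps(sagemaker_client, domain_id, user_profile_name):
    """Delete the TensorBoard apps of one user profile; return their names."""
    deleted = []
    kwargs = {"DomainIdEquals": domain_id, "UserProfileNameEquals": user_profile_name}
    while True:
        response = sagemaker_client.list_apps(**kwargs)
        for app in response.get("Apps", []):
            if app["AppType"] != "TensorBoard":
                continue  # leave other app types (for example, Jupyter) alone
            if app.get("Status") in ("Deleted", "Deleting"):
                continue  # nothing to do for apps already shut down
            sagemaker_client.delete_app(
                DomainId=domain_id,
                UserProfileName=user_profile_name,
                AppType="TensorBoard",
                AppName=app["AppName"],
            )
            deleted.append(app["AppName"])
        # Follow pagination until list_apps stops returning a NextToken.
        token = response.get("NextToken")
        if not token:
            return deleted
        kwargs["NextToken"] = token
```

With a real client: `delete_tensorboard_apps(boto3.client("sagemaker"), "d-xxxxxxxxxxxx", "your-user-profile")`.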

Conclusion

TensorBoard is a powerful tool for visualizing, analyzing, and debugging deep learning models. In this post, we provided a guide to using SageMaker with TensorBoard, including how to set up TensorBoard in a SageMaker training job using the SageMaker Python SDK, access SageMaker TensorBoard, explore training output data visualized in TensorBoard, and delete unused TensorBoard applications. By following these steps, you can start using TensorBoard in SageMaker for your work.

We encourage you to experiment with different options and strategies.

About the authors

Dr. Baichuan Sun is a Senior Data Scientist at AWS AI/ML. He is passionate about solving strategic business problems with customers using data-driven methodology on the cloud, and he has led projects in challenging areas including robotics computer vision, time series forecasting, price optimization, predictive maintenance, pharmaceutical development, and product recommendation systems. In his spare time, he enjoys traveling and spending time with family.

Manoj Ravi is a Senior Product Manager for Amazon SageMaker. He is passionate about building next-gen AI products and works on software and tools to make large-scale machine learning easier for customers. He holds an MBA from Haas School of Business and a Master's in Information Systems Management from Carnegie Mellon University. In his spare time, Manoj enjoys playing tennis and pursuing landscape photography.
