First mlverse survey results – software, applications, and beyond

Thank you to everyone who participated in our first mlverse survey!

Wait: What even is the mlverse?

The mlverse originated as an abbreviation of multiverse, which, for its part, came into being as an intended allusion to the well-known tidyverse. As such, although mlverse software aims for seamless interoperability with the tidyverse, or even integration when feasible (see our recent post featuring a wholly tidymodels-integrated torch network architecture), the priorities are probably a bit different: Often, mlverse software’s raison d’être is to allow R users to do things that are commonly known to be done with other languages, such as Python.

As of today, mlverse development takes place mainly in two broad areas: deep learning, and distributed computing / ML automation. By its very nature, though, it is open to changing user interests and demands. Which leads us to the topic of this post.

GitHub issues and community questions are valuable feedback, but we wanted something more direct. We wanted a way to find out how you, our users, employ the software, and what for; what you think could be improved; what you wish existed but is not there (yet). To that end, we created a survey. Complementing software- and application-related questions for the above-mentioned broad areas, the survey had a third section, asking how you perceive ethical and social implications of AI as applied in the “real world”.

A few things upfront:

Firstly, the survey was completely anonymous, in that we asked for neither identifiers (such as e-mail addresses) nor things that render one identifiable, such as gender or geographic location. In the same vein, we had collection of IP addresses disabled on purpose.

Secondly, just as GitHub issues are a biased sample, this survey’s participants must be one, too. The main venues of promotion were rstudio::global, Twitter, LinkedIn, and RStudio Community. As this was the first time we did such a thing (and under significant time constraints), not everything was planned to perfection, neither wording-wise nor distribution-wise. Nevertheless, we got a lot of interesting, helpful, and often very detailed answers, and for the next time we do this, we’ll have our lessons learned!

Thirdly, all questions were optional, naturally resulting in different numbers of valid answers per question. On the other hand, not having to select a bunch of “not applicable” boxes freed respondents to spend time on topics that mattered to them.

As a final pre-remark, most questions allowed for multiple answers.

In sum, we ended up with 138 completed surveys. Thanks again to everyone who participated, and especially, thank you for taking the time to answer the many free-form questions!

Areas and applications

Our first goal was to find out in which settings, and for what kinds of applications, deep-learning software is being used.

Overall, 72 respondents reported using DL at their jobs in industry, followed by academia (23), studies (21), spare time (43), and not-actually-using-but-wanting-to (24).

Of those working with DL in industry, more than twenty said they worked in consulting, finance, and healthcare (each). IT, education, retail, pharma, and transportation were each mentioned more than ten times:

Figure 1: Number of users reporting to use DL in industry. Smaller groups not displayed.

In academia, dominant fields (as per survey participants) were bioinformatics, genomics, and IT, followed by biology, medicine, pharmacology, and social sciences:

Figure 2: Number of users reporting to use DL in academia. Smaller groups not displayed.

What application areas matter to larger subgroups of “our” users? Nearly a hundred (of 138!) respondents said they used DL for some kind of image-processing application (including classification, segmentation, and object detection). Next up was time-series forecasting, followed by unsupervised learning.

The popularity of unsupervised DL was a bit unexpected; had we anticipated this, we would have asked for more detail here. So if you’re one of the people who selected this (or if you didn’t participate, but do use DL for unsupervised learning), please let us know a bit more in the comments!

Next, NLP was about on par with the former, followed by DL on tabular data and anomaly detection. Bayesian deep learning, reinforcement learning, recommendation systems, and audio processing were still mentioned frequently.

Figure 3: Applications deep learning is used for. Smaller groups not displayed.

Frameworks and skills

We also asked what frameworks and languages participants were using for deep learning, and what they were planning on using in the future. Single-time mentions (e.g., deeplearning4J) are not displayed.

Figure 4: Framework / language used for deep learning. Single mentions not displayed.

An important thing for any software developer or content creator to investigate is the proficiency and levels of expertise present in their audiences. It (nearly) goes without saying that expertise is very different from self-reported expertise. I’d like to be very cautious, then, in interpreting the results below.

While with regard to R skills, the aggregate self-ratings look plausible (to me), I would have guessed a slightly different outcome regarding DL. Judging from other sources (such as GitHub issues), I tend to suspect more of a bimodal distribution (a far stronger version of the bimodality we’re already seeing, that is). To me, it seems like we have rather many users who know a lot about DL. In agreement with my gut feeling, though, is the bimodality itself (as opposed to, say, a Gaussian shape).

But of course, sample size is moderate, and sample bias is present.

Figure 5: Self-rated skills re R and deep learning.

Wishes and suggestions

Now, to the free-form questions. We wanted to know what we could do better.

I’ll address the most salient topics in order of frequency of mention. For DL, this is surprisingly easy (as opposed to Spark, as you’ll see).

“No Python”

The number one concern with deep learning from R, for survey respondents, clearly has to do not with R but with Python. This topic appeared in various forms, the most frequent being frustration over how hard it can be, depending on the environment, to get Python dependencies for TensorFlow/Keras right. (It also appeared as enthusiasm for torch, which we are very happy about.)

Let me clarify and add some context.

TensorFlow is a Python framework (nowadays subsuming Keras, which is why I’ll be addressing both of those as “TensorFlow” for simplicity) that is made available from R through the packages tensorflow and keras. As with other Python libraries, objects are imported and accessible via reticulate. While tensorflow provides the low-level access, keras brings idiomatic-feeling, nice-to-use wrappers that let you forget about the chain of dependencies involved.

On the other hand, torch, a recent addition to mlverse software, is an R port of PyTorch that does not delegate to Python. Instead, its R layer directly calls into libtorch, the C++ library behind PyTorch. In that way, it is like a lot of heavy-duty R packages that make use of C++ for performance reasons.

Now, this is not the place for recommendations. Here are a few thoughts though.

Clearly, as one respondent remarked, as of today the torch ecosystem does not offer functionality on par with TensorFlow, and for that to change, time and (hopefully; more on that below) your, the community’s, help is needed. Why? Because torch is so young, for one; but also, there is a “systemic” reason! With TensorFlow, as we can access any symbol via the tf object, it is always possible, if inelegant, to do from R what you see done in Python. Where the respective R wrappers did not exist, quite a few blog posts (see, e.g., https://blogs.rstudio.com/ai/posts/2020-04-29-encrypted_keras_with_syft/, or A first look at federated learning with TensorFlow) relied on this!

Switching to the topic of tensorflow’s Python dependencies causing problems with installation, my experience (from GitHub issues, as well as my own) has been that difficulties are quite system-dependent. On some OSes, complications seem to appear more often than on others; and environments that give the individual user little control, like HPC clusters, can make things especially difficult. In any case though, I have to (unfortunately) admit that when installation problems appear, they can be very tricky to solve.

tidymodels integration

The second most frequent mention clearly was the wish for tighter tidymodels integration. Here, we wholeheartedly agree. As of today, there is no automated way to accomplish this for torch models generically, but it can be done for specific model implementations.

Last week, the post “torch, tidymodels, and high-energy physics” featured the first tidymodels-integrated torch package. And there’s more to come. In fact, if you are developing a package in the torch ecosystem, why not consider doing the same? Should you run into problems, the growing torch community will be happy to help.

Documentation, examples, teaching materials

Thirdly, several respondents expressed the wish for more documentation, examples, and teaching materials. Here, the situation is different for TensorFlow than for torch.

For tensorflow, the website has a multitude of guides, tutorials, and examples. For torch, reflecting the discrepancy in respective lifecycles, materials are not that abundant (yet). However, after a recent refactoring, the website has a new, four-part Get started section addressed to both beginners in DL and experienced TensorFlow users curious to learn about torch. After this hands-on introduction, a good place to get more technical background would be the section on tensors, autograd, and neural network modules.

Truth be told, though, nothing would be more helpful here than contributions from the community. Whenever you solve even the tiniest problem (which is often how things appear to oneself), consider creating a vignette explaining what you did. Future users will be thankful, and a growing user base means that over time, it’ll be your turn to find that some things have already been solved for you!

The remaining items discussed did not come up quite as often (individually), but taken together, they all have something in common: They all are wishes we happen to have, as well!

This definitely holds in the abstract. Let me cite:

“Develop more of a DL community”

“Larger developer community and ecosystem. Rstudio has made great tools, but for applied work is has been hard to work against the momentum of working in Python.”

We wholeheartedly agree, and building a larger community is exactly what we’re trying to do. I like the formulation “a DL community” insofar as it is framework-independent. In the end, frameworks are just tools, and what counts is our ability to usefully apply those tools to problems we need to solve.

Concrete wishes include

- More paper/model implementations (such as TabNet).

- Facilities for easy data reshaping and pre-processing (e.g., in order to pass data to RNNs or 1d convnets in the expected 3D format).

- Probabilistic programming for torch (analogously to TensorFlow Probability).

- A high-level library (such as fast.ai) based on torch.

In other words, there is a whole cosmos of useful things to create, and no small group alone can do it. This is where we hope we can build a community of people, each contributing what they’re most interested in, and to whatever extent they wish.
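To make the reshaping wish from the list above a bit more concrete, here is a minimal sketch in base R. It assumes a univariate series and the (samples, timesteps, features) layout that Keras recurrent layers expect; other frameworks or settings may want a different axis order. The helper `make_windows()` is hypothetical, written for this illustration only, and not part of any mlverse package.

```r
# Hypothetical helper (not from any package): cut a univariate series into
# overlapping windows and return a 3D array of shape
# (samples, timesteps, features), with features fixed at 1.
make_windows <- function(series, timesteps) {
  stopifnot(is.numeric(series), length(series) >= timesteps)
  n_samples <- length(series) - timesteps + 1
  # stats::embed() returns one window per row, most recent value first,
  # so the columns are reversed to restore chronological order.
  windows <- embed(series, timesteps)[, timesteps:1, drop = FALSE]
  array(windows, dim = c(n_samples, timesteps, 1))
}

series <- c(10, 20, 30, 40, 50)
x <- make_windows(series, timesteps = 3)

dim(x)     # 3 3 1
x[1, , 1]  # 10 20 30
x[3, , 1]  # 30 40 50
```

Even for this simplest case, the window arithmetic and the axis order are easy to get wrong, which is probably why respondents asked for ready-made facilities.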

Areas and applications

For Spark, questions broadly paralleled those asked about deep learning.

Overall, judging from this survey (and unsurprisingly), Spark is predominantly used in industry (n = 39). For academic staff and students (taken together), n = 8. Seventeen people reported using Spark in their spare time, while 34 said they wanted to use it in the future.

Looking at industry sectors, we again find finance, consulting, and healthcare dominating.

Figure 6: Number of users reporting to use Spark in industry. Smaller groups not displayed.

What do survey respondents do with Spark? Analyses of tabular data and time series dominate:

Figure 7: Number of users reporting to use Spark in industry. Smaller groups not displayed.

Frameworks and skills

As with deep learning, we wanted to know what language people use to do Spark. If you look at the graphic below, you see R appearing twice: once in connection with sparklyr, once with SparkR. What’s that about?

Both sparklyr and SparkR are R interfaces for Apache Spark, each designed and built with a different set of priorities and, consequently, trade-offs in mind.

sparklyr, on the one hand, will appeal to data scientists at home in the tidyverse, as they’ll be able to use all the data manipulation interfaces they’re familiar with from packages such as dplyr, DBI, tidyr, or broom.

SparkR, on the other hand, is a lightweight R binding for Apache Spark, and is bundled with it. It’s an excellent choice for practitioners who are well-versed in Apache Spark and just need a thin wrapper to access various Spark functionalities from R.

Figure 8: Language / language bindings used to do Spark.

When asked to rate their expertise in R and Spark, respectively, respondents showed behavior similar to that observed for deep learning above: Most people seem to think more of their R skills than of their theoretical Spark-related knowledge. However, even more caution should be exercised here than above: The number of responses here was significantly lower.

Figure 9: Self-rated skills re R and Spark.

Wishes and suggestions

Just like with DL, Spark users were asked what could be improved, and what they were hoping for.

Interestingly, answers were less “clustered” than for DL. While with DL, a few things cropped up again and again, and there were very few mentions of concrete technical features, here we see about the opposite: The great majority of wishes were concrete, technical, and often only came up once.

Probably though, this is not a coincidence.

Looking back at how sparklyr has evolved from 2016 until now, there is a persistent theme of it being the bridge that joins the Apache Spark ecosystem to numerous useful R interfaces, frameworks, and utilities (most notably, the tidyverse).

Many of our users’ suggestions were essentially a continuation of this theme. This holds, for example, for two features already available as of sparklyr 1.4 and 1.2, respectively: support for the Arrow serialization format and for Databricks Connect. It also holds for tidymodels integration (a frequent wish), a simple R interface for defining Spark UDFs (frequently desired, this one too), out-of-core direct computations on Parquet files, and extended time-series functionalities.

We’re thankful for the feedback and will evaluate carefully what could be done in each case. In general, integrating sparklyr with some feature X is a process to be planned carefully, as modifications could, in theory, be made in various places (sparklyr; X; both sparklyr and X; or even a newly-to-be-created extension). In fact, this is a topic deserving of much more detailed coverage, and has to be left to a future post.

To start, the section on ethical and social implications of AI is probably the one that will profit most from more preparation the next time we do this survey. Due to time pressure, some (not all!) of the questions ended up being too suggestive, possibly resulting in social-desirability bias.

Next time, we’ll try to avoid this, and questions in this area will likely look pretty different (more like scenarios or what-if stories). However, I was told by several people they’d been positively surprised by simply encountering this topic at all in the survey. So perhaps this is the main point, although there are a few results that I’m sure will be interesting by themselves!

Anticlimactically, the most non-obvious results are presented first.

“Are you worried about societal/political impacts of how AI is used in the real world?”

For this question, we had four answer options, formulated in a way that left no real “middle ground”. (The labels in the graphic below reflect those options verbatim.)

Figure 10: Number of users responding to the question ‘Are you worried about societal/political impacts of how AI is used in the real world?’ with the answer options given.

The next question is definitely one to keep for future editions, as of all questions in this section, it has the highest information content.

“When you think of the near future, are you more afraid of AI misuse or more hopeful about positive outcomes?”

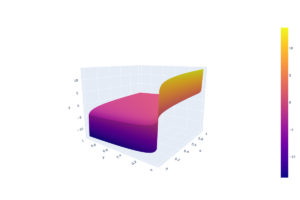

Here, the answer was to be given by moving a slider, with -100 signifying “I tend to be more pessimistic”; and 100, “I tend to be more optimistic”. Although it would have been possible to remain undecided, choosing a value close to 0, we instead see a bimodal distribution:

Figure 11: When you think of the near future, are you more afraid of AI misuse or more hopeful about positive outcomes?

Why worry, and what about

The following two questions are those already alluded to as possibly being overly prone to social-desirability bias. They asked what applications people were worried about, and for what reasons, respectively. Both questions allowed respondents to select however many responses they wanted, intentionally not forcing people to rank things that are not comparable (the way I see it). In both cases though, it was possible to explicitly indicate None (corresponding to “I don’t really find any of these problematic” and “I am not extensively worried”, respectively).

What applications of AI do you feel are most problematic?

Figure 12: Number of users selecting the respective application in response to the question: What applications of AI do you feel are most problematic?

If you are worried about misuse and negative impacts, what exactly is it that worries you?

Figure 13: Number of users selecting the respective impact in response to the question: If you are worried about misuse and negative impacts, what exactly is it that worries you?

Complementing these questions, it was possible to enter further thoughts and concerns in free-form. Although I can’t cite everything that was mentioned here, recurring themes were:

- Misuse of AI for the wrong purposes, by the wrong people, and at scale.

- Not feeling responsible for how one’s algorithms are used (the “I’m just a software engineer” topos).

- Reluctance, in AI but in society overall as well, to even discuss the topic (ethics).

Finally, although this was mentioned just once, I’d like to relay a comment that went in a direction absent from all provided answer options, but that probably should have been there already: AI being used to construct social credit systems.

“It’s also that you somehow might have to learn to game the algorithm, which will make AI application forcing us to behave in some way to be scored good. That moment scares me when the algorithm is not only learning from our behavior but we behave so that the algorithm predicts us optimally (turning every use case around).”

This has become a long text. But seeing how much time respondents took to answer the many questions, often including lots of detail in the free-form answers, it seemed like a matter of decency to go into some detail in the analysis and report as well.

Thanks again to everyone who took part! We hope to make this a recurring thing, and will strive to design the next edition in a way that makes answers even more information-rich.

Thanks for reading!