Are Model Explanations Useful in Practice? Rethinking How to Support Human-ML Interactions. – Machine Learning Blog | ML@CMU

Figure 1. This blog post discusses the effectiveness of black-box model explanations in helping end users make decisions. We observe that explanations do not in fact help with concrete applications such as fraud detection and paper matching for peer review. Our work further motivates new directions for developing and evaluating tools to support human-ML interactions.

Model explanations have been touted as crucial information for facilitating human-ML interactions in many real-world applications where end users make decisions informed by ML predictions. For example, explanations are thought to help model developers identify when models rely on spurious artifacts, and to help domain experts decide whether to follow a model's prediction. However, while numerous explainable AI (XAI) methods have been developed, XAI has yet to deliver on this promise. XAI methods are typically optimized for diverse but narrow technical objectives that are disconnected from their claimed use cases. To connect methods to concrete use cases, we argued in our Communications of the ACM paper [1] that researchers should rigorously evaluate how well proposed methods can help real users in their real-world applications.

Toward bridging this gap, our group has since completed two collaborative projects in which we worked with domain experts in e-commerce fraud detection and in paper matching for peer review. Through these efforts, we have gleaned the following two insights:

- Existing XAI methods are not useful for decision-making. Presenting humans with popular, general-purpose XAI methods does not improve their performance on the real-world use cases that motivated the development of these methods. Our negative findings align with those of contemporaneous works.

- Rigorous, real-world evaluation is important but hard. These findings were obtained through user studies that were time-consuming to conduct.

We believe that each of these insights motivates a corresponding research direction for better supporting human-ML interactions moving forward. First, beyond methods that attempt to explain the ML model itself, we should consider a wider range of approaches that present relevant task-specific information to human decision-makers; we refer to these approaches as human-centered ML (HCML) methods [10]. Second, we need to create new workflows for evaluating proposed HCML methods that are both low-cost and informative of real-world performance.

In this post, we first outline our workflow for evaluating XAI methods. We then describe how we instantiated this workflow in two domains: fraud detection and peer review paper matching. Finally, we describe the two aforementioned insights from these efforts; we hope these takeaways will encourage the community to rethink how HCML methods are developed and evaluated.

How do you rigorously evaluate explanation methods?

In our CACM paper [1], we introduced a use-case-grounded workflow for evaluating explanation methods in practice, which means showing that they are "useful," i.e., that they can actually improve human-ML interactions in the real-world applications that motivate them. This workflow contrasts with the evaluation workflows used for XAI methods in prior work, which relied on researcher-defined proxy metrics that may or may not be relevant to any downstream task. Our proposed three-step workflow is based on the general scientific method:

Step 1: Define a concrete use case. To do this, researchers may need to work closely with domain experts to define a task that reflects the practical use case of interest.

Step 2: Select explanation methods for evaluation. While the selected methods might include popular XAI methods, the appropriate set of methods is to a large extent application-specific and should also include relevant non-explanation baselines.

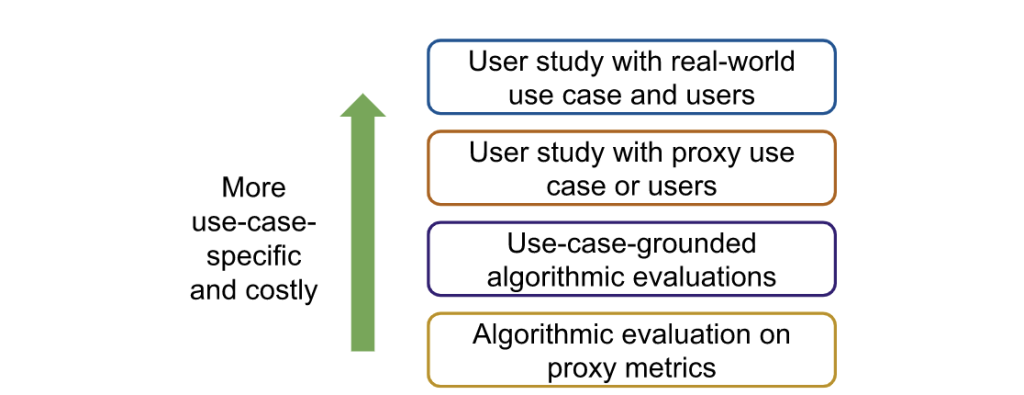

Step 3: Evaluate explanation methods against baselines. While researchers should ultimately evaluate the selected methods through a user study with real-world users, they may want to first conduct cheaper, noisier forms of evaluation to narrow down the set of methods under consideration (Figure 2).

Instantiating the workflow in practice

We collaborated with experts from two domains (fraud detection and peer review paper matching) to instantiate this use-case-grounded workflow and evaluate existing XAI methods:

Domain 1: Fraud detection [3]. We partnered with researchers at Feedzai, a financial start-up, to assess whether providing model explanations improved fraud analysts' ability to detect fraudulent e-commerce transactions. Because we had access to real-world data (i.e., historical e-commerce transactions with ground-truth labels of whether each transaction was fraudulent) and real users (i.e., fraud analysts), we conducted a user study directly in this context. An example of the interface shown to analysts is in Figure 3. We compared analysts' average performance when shown different explanations to a baseline setting in which they were given only the model prediction. We ultimately found that none of the popular XAI methods we evaluated (LIME, SHAP, and Tree Interpreter) led to any improvement in the analysts' decisions compared to the baseline setting (Figure 5, left). Evaluating these methods with real users also posed many logistical challenges, because the fraud analysts had to take time away from their regular day-to-day work to periodically participate in our study.
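As a rough illustration of what comparing a condition's average performance to the baseline can involve, the sketch below runs a two-sided permutation test on per-decision correctness scores (1 = correct, 0 = incorrect). This is an assumption for illustration only: we do not claim it is the statistical analysis used in [3] or [4], and the function name `permutation_test` is hypothetical.

```python
import random


def permutation_test(condition, baseline, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in mean accuracy.

    `condition` and `baseline` are per-decision correctness scores (1 = correct,
    0 = incorrect) collected with and without an explanation, respectively.
    Returns (observed difference in means, approximate p-value).
    """
    if not condition or not baseline:
        raise ValueError("both conditions need at least one decision")
    rng = random.Random(seed)
    observed = sum(condition) / len(condition) - sum(baseline) / len(baseline)
    pooled = list(condition) + list(baseline)
    n = len(condition)
    extreme = 0
    for _ in range(n_resamples):
        # Under the null hypothesis the condition labels are exchangeable,
        # so decisions are reassigned to the two groups at random.
        rng.shuffle(pooled)
        diff = sum(pooled[:n]) / n - sum(pooled[n:]) / (len(pooled) - n)
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value
```

Calling `permutation_test(with_explanations, baseline)` returns the difference in mean accuracy and an approximate p-value; a negative difference with a small p-value would indicate that the explanation condition led to worse decisions than the baseline. A real analysis would also need to account for repeated decisions by the same participant, which this sketch ignores.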

Domain 2: Peer review paper matching [4]. We collaborated with Professor Nihar Shah (CMU), an expert in peer review, to investigate what information could help meta-reviewers of a conference better match submitted papers to appropriate reviewers. Learning from our prior experience, we first conducted a user study with proxy tasks and proxy users, which we designed together with Professor Shah as shown in Figure 4. In this proxy setting, we found that providing explanations from popular XAI methods in fact made users more confident (the majority of participants shown highlights from XAI methods believed the highlighted information was helpful), yet they made statistically worse decisions (Figure 5, right)!

How can we better support human-ML interactions?

Through these collaborations, we identified two important directions for future work, which we describe in more detail below, along with our initial efforts in each direction.

We need to develop methods for specific use cases. Our results suggest that explanations from popular, general-purpose XAI methods can both hurt decision-making and make users overconfident. These findings have also been observed in several contemporaneous works (e.g., [7,8,9]). Instead, researchers need to consider developing human-centered ML (HCML) methods [10] tailored to each downstream use case. An HCML method is any approach that provides information about the particular use case and context that can inform human decisions.

Our contributions: In the peer review matching setting, we proposed an HCML method designed in tandem with a domain expert [4]. Notably, our method is not a model explanation technique, since it highlights information in the input data, specifically sentences and phrases that are similar between the submitted paper and the reviewer profile. Figure 6 compares the text highlighted by our method with the text highlighted by existing methods. Our method outperformed both a baseline with no explanation and the model explanation condition (Figure 5, right). Based on these positive results, we plan to move evaluations of our proposed method to more realistic peer review settings. Further, we conducted an exploratory study to better understand how people interact with information provided by HCML methods, as a first step toward a more systematic approach for devising task-specific HCML methods [5].
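The method in [4] highlights sentences and phrases that are similar between a submitted paper and a reviewer profile. The sketch below illustrates only the general idea, using a deliberately simple, swapped-in similarity measure (bag-of-words cosine similarity over naively split sentences). It is not the method from [4], and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def _tokens(text):
    # Lowercased alphanumeric word tokens; a deliberately crude tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def _sentences(text):
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def _cosine(a, b):
    # Cosine similarity between two bag-of-words Counters.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def highlight_pairs(paper, profile, top_k=2):
    """Return the top_k (score, paper_sentence, profile_sentence) triples,
    ranked by lexical similarity, as candidate highlights for a meta-reviewer."""
    pairs = []
    for p in _sentences(paper):
        for r in _sentences(profile):
            score = _cosine(Counter(_tokens(p)), Counter(_tokens(r)))
            pairs.append((score, p, r))
    pairs.sort(key=lambda t: t[0], reverse=True)
    return pairs[:top_k]
```

For example, with `paper = "We study peer review assignment. Our model uses graph neural networks."` and `profile = "I work on graph neural networks. I also enjoy hiking."`, `highlight_pairs(paper, profile, top_k=1)` returns the pair ("Our model uses graph neural networks.", "I work on graph neural networks.") with a score of about 0.5. A practical system would likely use learned text representations rather than word overlap, but the interface (rank sentence pairs, show the top few) would stay the same.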

We need more efficient evaluation pipelines. While user studies conducted in a real-world use case with real users are the ideal way to evaluate HCML methods, they are time- and resource-consuming. We highlight the need for more cost-effective evaluations that can narrow down candidate HCML methods while still reflecting the downstream use case. One option is to work with domain experts to design a proxy task, as we did in the peer review setting, but even these studies require careful consideration of how well the results generalize to the real-world use case.

Our contributions: We introduced an algorithmic evaluation called simulated user evaluations (SimEvals) [2]. Instead of conducting studies on proxy tasks, researchers can train SimEvals, which are ML models that serve as human proxies. SimEvals more faithfully reflect aspects of real-world evaluation because their training and evaluation data are instantiated on the same data and task considered in real-world studies. To train SimEvals, the researcher first needs to generate a dataset of observation-label pairs. The observation corresponds to the information that would be presented in a user study (and, critically, includes the information provided by the HCML method), while the label is the ground truth for the use case of interest. For example, in the fraud detection setting, the observation would consist of the e-commerce transaction and the ML model score shown in Figure 3(a), along with the explanation shown in Figure 3(b). The ground-truth label is whether or not the transaction was fraudulent. SimEvals are trained to predict the label given an observation, and their test-set accuracy can be interpreted as a measure of whether the information contained in the observation is predictive for the use case.
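The SimEval procedure described above can be sketched as follows, under several assumptions: each observation has already been flattened into a numeric feature vector (for example, the model score plus explanation attributions), labels are binary, and a k-nearest-neighbors classifier stands in for whatever model a researcher would actually train. The classifier choice and the names `knn_predict`, `simeval_accuracy`, and `compare_conditions` are hypothetical and are not the implementation from [2].

```python
import random


def knn_predict(train, x, k=5):
    """Predict a binary label for observation `x` by majority vote of its k
    nearest training observations under squared Euclidean distance."""
    nearest = sorted(
        train, key=lambda pair: sum((a - b) ** 2 for a, b in zip(pair[0], x))
    )[:k]
    votes = sum(label for _, label in nearest)
    return int(2 * votes > len(nearest))


def simeval_accuracy(dataset, test_frac=0.3, k=5, seed=0):
    """Train a k-NN 'simulated user' on (observation, label) pairs and return its
    held-out accuracy, i.e., how predictive the observation is for the label."""
    rng = random.Random(seed)
    data = list(dataset)
    rng.shuffle(data)
    n_test = int(len(data) * test_frac)
    test, train = data[:n_test], data[n_test:]
    if not test or not train:
        raise ValueError("dataset too small for the requested split")
    correct = sum(knn_predict(train, x, k) == y for x, y in test)
    return correct / len(test)


def compare_conditions(datasets_by_condition, **kwargs):
    """Held-out SimEval accuracy per condition, e.g. {'baseline': ..., 'SHAP': ...}.
    Each condition should cover the same cases but use a different observation."""
    return {
        name: simeval_accuracy(data, **kwargs)
        for name, data in datasets_by_condition.items()
    }
```

Building one dataset per condition (for instance, a baseline whose observation is only the model score, plus one per explanation method) and passing them to `compare_conditions` yields one held-out accuracy per condition; conditions that do not beat the baseline are weaker candidates for a full user study.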

We not only evaluated SimEvals on a variety of proxy tasks but also tested SimEvals in practice by working with Feedzai, where we found results that corroborate the negative findings from the user study [6]. Although SimEvals should not replace user studies, because they are not designed to mimic human decision-making, these results suggest that SimEvals could be used initially to identify more promising explanations (Figure 6).

Conclusion

In summary, our recent efforts motivate two ways in which the community should rethink how to support human-ML interactions: (1) we need to replace general-purpose XAI techniques with HCML methods tailored to specific use cases, and (2) we need to create intermediate evaluation procedures that can help narrow down which HCML methods to evaluate in more costly settings.

For more information about the various papers mentioned in this blog post, see the links below:

[1] Chen, V., Li, J., Kim, J. S., Plumb, G., & Talwalkar, A. Interpretable Machine Learning. Communications of the ACM, 2022. (link)

[2] Chen, V., Johnson, N., Topin, N., Plumb, G., & Talwalkar, A. Use-case-grounded simulations for explanation evaluation. NeurIPS, 2022. (link)

[3] Amarasinghe, K., Rodolfa, K. T., Jesus, S., Chen, V., Balayan, V., Saleiro, P., Bizarro, P., Talwalkar, A., & Ghani, R. On the Importance of Application-Grounded Experimental Design for Evaluating Explainable ML Methods. arXiv, 2022. (link)

[4] Kim, J. S., Chen, V., Pruthi, D., Shah, N., & Talwalkar, A. Assisting Human Decisions in Document Matching. arXiv. (link)

[5] Chen, V., Liao, Q. V., Vaughan, J. W., & Bansal, G. Understanding the Role of Human Intuition on Reliance in Human-AI Decision-Making with Explanations. arXiv, 2023. (link)

[6] Martin, A., Chen, V., Jesus, S., & Saleiro, P. A Case Study on Designing Evaluations of ML Explanations with Simulated User Studies. arXiv. (link)

[7] Bansal, G., Wu, T., Zhou, J., Fok, R., Nushi, B., Kamar, E., Ribeiro, M. T., & Weld, D. Does the whole exceed its parts? The effect of AI explanations on complementary team performance. CHI, 2021. (link)

[8] Adebayo, J., Muelly, M., Abelson, H., & Kim, B. Post hoc explanations may be ineffective for detecting unknown spurious correlation. ICLR, 2022. (link)

[9] Zhang, Y., Liao, Q. V., & Bellamy, R. K. Effect of confidence and explanation on accuracy and trust calibration in AI-assisted decision making. FAccT, 2020. (link)

[10] Chancellor, S. Towards Practices for Human-Centered Machine Learning. Communications of the ACM, 66(3), 78-85, 2023. (link)

Acknowledgments

We would like to thank Kasun Amarasinghe, Jeremy Cohen, Nari Johnson, Joon Sik Kim, Q. Vera Liao, and Junhong Shen for helpful feedback and suggestions on earlier versions of this blog post. Thanks also to Emma Kallina for her help designing the main figure!