Reading Minds with AI: Researchers Translate Brain Waves to Images

Image by Editor

Imagine reliving your memories or constructing images of what someone is thinking. It sounds like something straight out of a science fiction movie, but with recent advances in computer vision and deep learning, it is becoming a reality. Although neuroscientists still struggle to fully demystify how the human brain converts what our eyes see into mental images, AI seems to be getting better at this task. Two researchers from the Graduate School of Frontier Biosciences at Osaka University proposed a new method that uses a latent diffusion model (LDM) named Stable Diffusion to accurately reconstruct images from human brain activity recorded with functional magnetic resonance imaging (fMRI). Although the paper “High-resolution image reconstruction with latent diffusion models from human brain activity” by Yu Takagi and Shinji Nishimoto is not yet peer-reviewed, it has taken the internet by storm because the results are strikingly accurate.

This technology has the potential to revolutionize fields like psychology, neuroscience, and even the criminal justice system. Imagine a suspect is asked where he was at the time of a murder and answers that he was at home, but the reconstructed image shows him at the crime scene. Pretty interesting, right? So how exactly does it work? Let’s dig deeper into this research paper, its limitations, and its future scope.

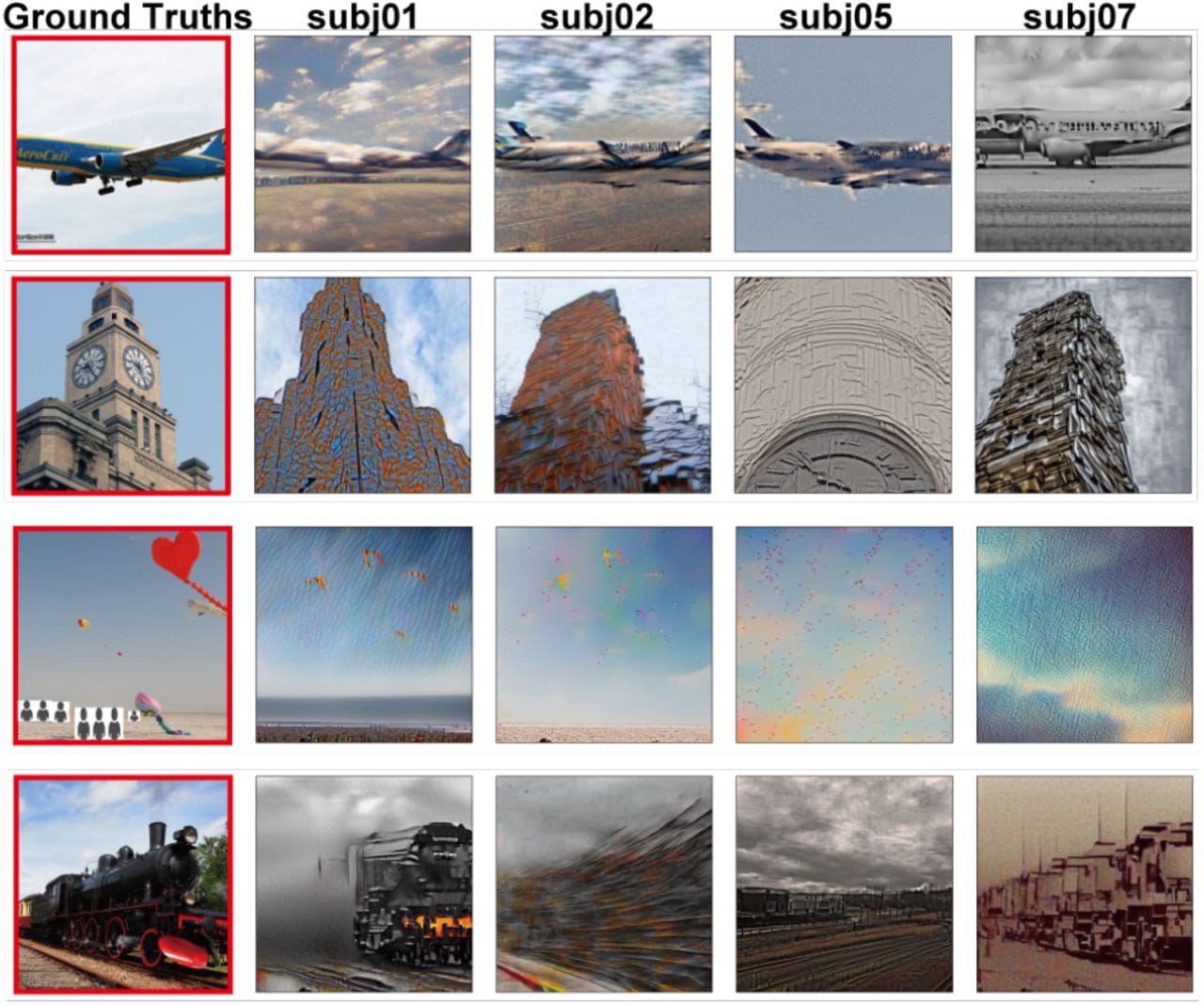

The researchers used the Natural Scenes Dataset (NSD) provided by the University of Minnesota. It contains data from fMRI scans of four subjects who had looked at 10,000 different images. A subset of 982 images seen by all four subjects was used as the test dataset. Two different AI models were trained during the process. One was used to link the brain activity recorded by fMRI with the images the subjects viewed, while the other was used to link it with the text descriptions of those images. Together, these models allowed Stable Diffusion to turn the fMRI data into relatively accurate imitations of images that were not part of its training, achieving an accuracy of almost 80%.
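To make the two-model idea concrete, here is a minimal sketch, assuming each model is a regularized linear map from fMRI voxel responses to a target representation: one for image latents and one for text embeddings. It uses closed-form ridge regression on synthetic data. The array sizes, the noise level, the `alpha` value, and the helper names `fit_ridge` and `mean_corr` are illustrative assumptions, not the authors' code or data.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)


def mean_corr(a, b):
    """Average Pearson correlation across the columns of a and b."""
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    num = (a * b).sum(axis=0)
    den = np.linalg.norm(a, axis=0) * np.linalg.norm(b, axis=0)
    return float(np.mean(num / den))


# Synthetic stand-ins (hypothetical sizes, far smaller than real data).
rng = np.random.default_rng(0)
n_train, n_test = 400, 50
n_voxels, latent_dim, text_dim = 200, 16, 8
n_total = n_train + n_test

true_latents = rng.normal(size=(n_total, latent_dim))  # image representation
true_text = rng.normal(size=(n_total, text_dim))       # caption representation

# Simulated fMRI: a noisy linear mixture of both representations.
mix_img = rng.normal(size=(latent_dim, n_voxels))
mix_txt = rng.normal(size=(text_dim, n_voxels))
noise = 0.5 * rng.normal(size=(n_total, n_voxels))
fmri = true_latents @ mix_img + true_text @ mix_txt + noise

train, test = slice(0, n_train), slice(n_train, None)

# Model 1: brain activity -> image latents. Model 2: brain activity -> text.
W_img = fit_ridge(fmri[train], true_latents[train], alpha=10.0)
W_txt = fit_ridge(fmri[train], true_text[train], alpha=10.0)

# Decode held-out scans that were never used for fitting.
pred_latents = fmri[test] @ W_img
pred_text = fmri[test] @ W_txt

print("image-latent correlation:", mean_corr(pred_latents, true_latents[test]))
print("text-embedding correlation:", mean_corr(pred_text, true_text[test]))
```

In a real pipeline the two decoded outputs would then be handed to the diffusion model; that step is omitted here because it needs the actual pretrained network.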

Original Images (left) and AI-Generated Images for all Four Subjects

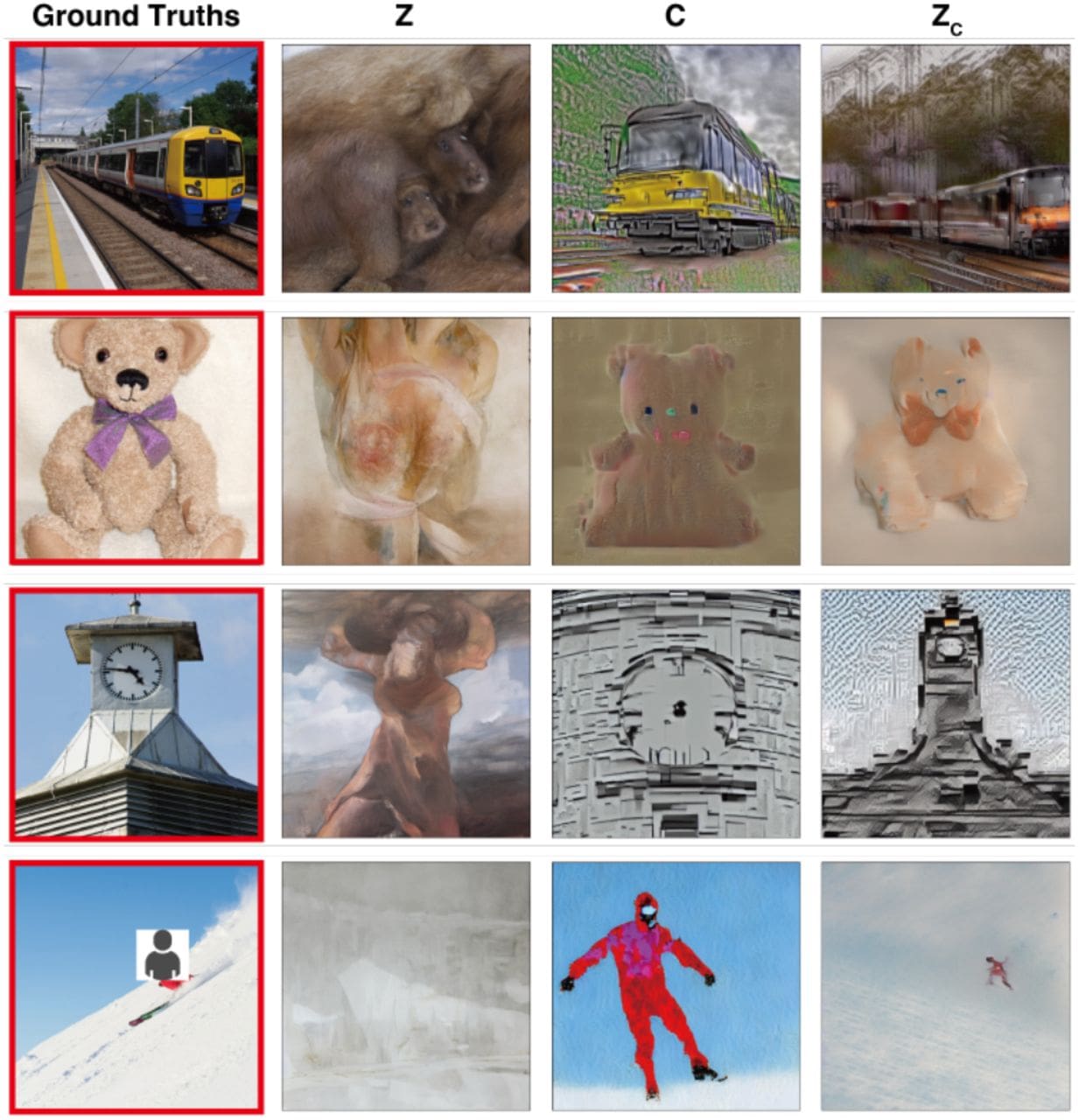

The first model was able to effectively regenerate the layout and the perspective of the image being viewed. But the model struggled with specific objects such as the clock tower, and it created abstract and cloudy figures. Instead of using large datasets to predict more details, the researchers used the second AI model to associate keywords from image captions with fMRI scans. For example, if the training data contains a picture of a clock tower, the system associates the pattern of brain activity with that object. During the testing stage, if the participant exhibited a similar brain pattern, the system fed the object’s keyword into Stable Diffusion’s normal text-to-image generator, which resulted in a convincing imitation of the real image.
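The keyword association described above can be illustrated with a toy nearest-centroid decoder: average the brain patterns recorded for each keyword during training, then label a new scan with the keyword whose average pattern it most resembles. This is a simplified sketch on synthetic data, not the authors' method; the paper reportedly works with continuous text embeddings rather than discrete keywords. The function names, the keyword list, the voxel count, and the prompt template are all hypothetical.

```python
import numpy as np


def fit_keyword_centroids(patterns, keywords):
    """Average the training brain patterns that share a keyword."""
    centroids = {}
    for kw in sorted(set(keywords)):
        rows = [p for p, k in zip(patterns, keywords) if k == kw]
        centroids[kw] = np.mean(rows, axis=0)
    return centroids


def decode_keyword(pattern, centroids):
    """Return the keyword whose centroid has the highest cosine similarity."""
    def cosine(a, b):
        return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

    return max(centroids, key=lambda kw: cosine(pattern, centroids[kw]))


# Synthetic "brain patterns": one hidden prototype per keyword plus noise.
rng = np.random.default_rng(1)
n_voxels = 50
prototypes = {
    kw: rng.normal(size=n_voxels) for kw in ["clock tower", "train", "bear"]
}

train_keywords = [kw for kw in prototypes for _ in range(20)]
train_patterns = np.array(
    [prototypes[kw] + 0.3 * rng.normal(size=n_voxels) for kw in train_keywords]
)
centroids = fit_keyword_centroids(train_patterns, train_keywords)

# Testing stage: a new scan recorded while viewing a clock tower.
new_scan = prototypes["clock tower"] + 0.3 * rng.normal(size=n_voxels)
keyword = decode_keyword(new_scan, centroids)

# The decoded keyword would then be passed to a text-to-image generator.
prompt = f"a photo of a {keyword}"
print(prompt)
```

The resulting `prompt` string is what a text-to-image model would receive; combining it with the layout recovered by the first model is what yields the final reconstruction.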

(leftmost) Images seen by study participants, (2nd) Layout and perspective using patterns of brain activity alone, (3rd) Image by textual information alone, (rightmost) Addition of textual information and patterns of brain activity to re-create the object in the photo

In this paper, the researchers also claimed that the study was the first of its kind in which each component of the LDM (Stable Diffusion) was quantitatively interpreted from a neuroscience perspective. They did so by mapping specific components to distinct regions of the brain. Although the proposed model is still at a nascent stage, people have been quick to react to this paper and have termed the model the next mind reader.

Although the accuracy of this model is quite impressive, it was tested on brain scans from the same people who provided the training scans. Training and testing on data from the same individuals can lead to overfitting, so the results may not carry over to new subjects. However, we should not shrug off this paper, as such publications attract researchers and we begin to see related papers with incremental improvements.
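One generic way to check whether a decoder generalizes beyond the people it was trained on is to hold out an entire subject at a time. The sketch below shows only the split logic, with made-up subject labels; `leave_one_subject_out` is a hypothetical helper and this is not an evaluation from the paper. In practice, decoding across subjects is harder than this suggests, because brain anatomy and voxel layouts differ from person to person.

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject."""
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == held_out)
        train_idx = np.flatnonzero(subject_ids != held_out)
        yield held_out, train_idx, test_idx


# Toy labels: four subjects with five scans each.
subject_ids = np.repeat([1, 2, 3, 4], 5)
splits = list(leave_one_subject_out(subject_ids))

for held_out, train_idx, test_idx in splits:
    print(f"held-out subject {held_out}: "
          f"{len(train_idx)} training scans, {len(test_idx)} test scans")
```

A model that scores well under this kind of split has seen no data from the test subject, which makes its accuracy a more honest estimate of how it would behave on a new person.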

Considering the improvements in the field of computer vision, this paper makes me think: Will we be able to relive our dreams soon? Pretty cool and scary at the same time. Although it is quite intriguing, it raises some ethical concerns about the invasion of privacy. Also, there is still a long way to go before we can actually recreate the subjective experience of a dream. The model is not yet practical for daily use, but we are getting closer to understanding how our brain functions. Such technology could lead to tremendous advancements in the medical field, especially for people who have communication impairments.

If the proposed model is refined further, this could be the NEXT BREAKTHROUGH in the world of AI. But the benefits and risks must be weighed before the widespread implementation of any technology. I hope you enjoyed reading this article and I would love to hear your thoughts about this amazing research paper.

Kanwal Mehreen is an aspiring software developer with a keen interest in data science and applications of AI in medicine. Kanwal was selected as the Google Generation Scholar 2022 for the APAC region. Kanwal loves to share technical knowledge by writing articles on trending topics, and is passionate about improving the representation of women in the tech industry.