UT Austin Researchers Suggest WICE: A New Dataset for Truth Verification Constructed on Actual Claims in Wikipedia with High-quality-Grained Annotations

Pure language inference and textual entailment are enduring points in NLP that may take many shapes. There are some vital gaps when present entailment programs are utilized to this job. Utilizing NLI “as a device for the analysis of domain-general strategies to semantic illustration” is the declared objective of the SNLI dataset. This, nevertheless, is completely different from how NLI is presently utilized. NLI has been used to understand knowledge-grounded discourse, validate replies from QA programs, and assess the accuracy of produced summaries. These functions are extra carefully associated to factual consistency or attribution: is it the case {that a} speculation is correct given the small print in a doc’s premise?

First, many NLI datasets, together with VitaminC and WANLI, which each focus on single-sentence proof, goal short-term premises. Present frameworks for document-level entailment are primarily based on native entailment scores, both by means of combining these scores or retrieval-based strategies. There are a couple of outliers, like DocNLI, nevertheless it incorporates many artificially generated unhealthy knowledge. This attracts consideration to the second flaw: the shortage of detrimental cases which might be environmentally sound. Contradictory conditions are created in a manner that produces deceptive correlations, reminiscent of single-word correlations, syntactic heuristics, or a disregard for the enter.

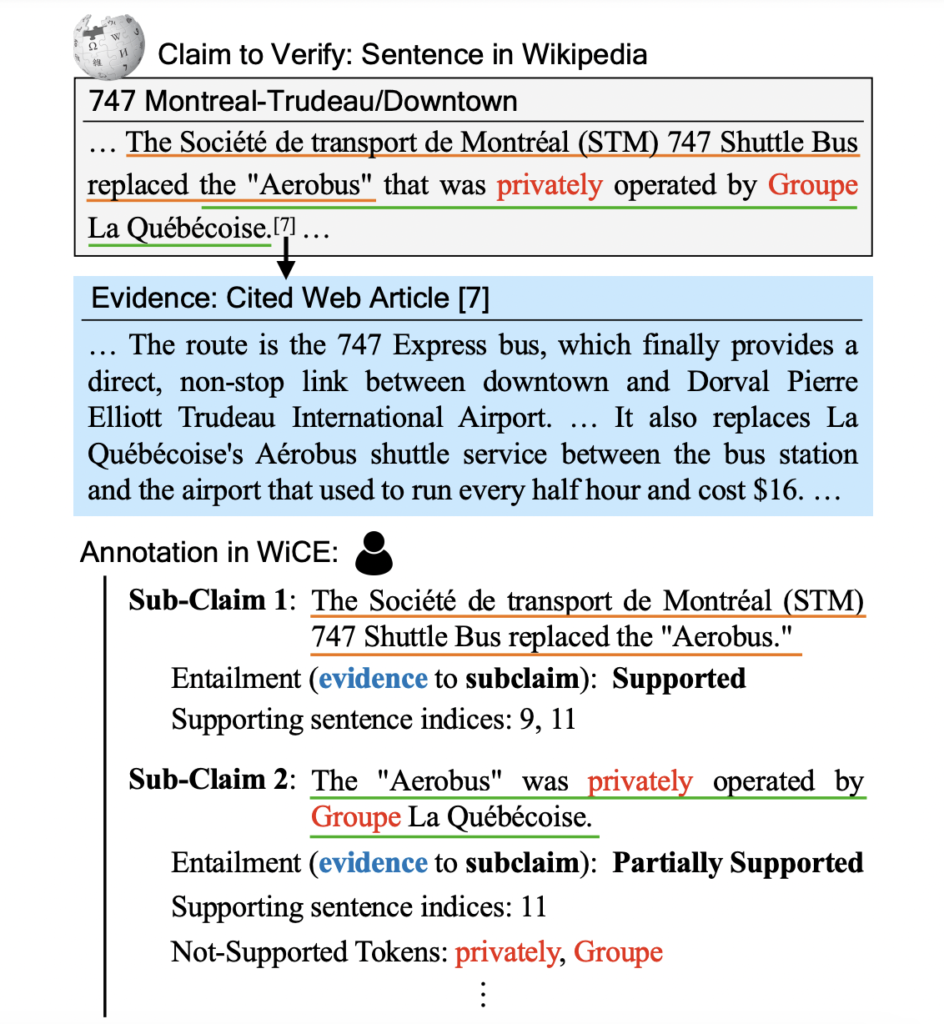

Lastly, along with the traditional three-class “entailed,” “impartial,” and “contradicted” set, fine-grained labeling of whether or not components of a declare are supported or not can be extra related. Present datasets would not have these fine-grained annotations. As demonstrated in Determine 1, acknowledged in Wikipedia and the related articles it refers to, they determine entailment, a listing of supporting sentences within the referenced article, and tokens within the declare that aren’t supported by proof. They show that these statements require troublesome retrieval and verification points, reminiscent of multi-sentence reasoning. Researchers from UT Austin have compiled WICE (Wikipedia Quotation Entailment), a dataset for validating precise claims in Wikipedia fixing the above mentioned issues.

Determine 1 illustrates how the real-world Wikipedia assertions in WICE are incessantly extra sophisticated than the theories employed in most prior NLI datasets. They provide CLAIM-SPLIT, a way of dissecting hypotheses using few-shot prompting with GPT-3, to help in creating their dataset and provides fine-grained annotation, as seen in Determine 2. This decomposition is just like different frameworks constructed from OpenIE or Pyramid. Nevertheless, it does it with out the necessity for annotated knowledge and with extra flexibility, because of GPT-3. They streamline their annotation process and the final word entailment prediction work for automated fashions by performing on the sub-claim degree.

On their dataset, they check numerous programs, reminiscent of short-paragraph entailment fashions which have already been “stretched” to create document-level entailment judgments from short-paragraph judgments. They uncover that these fashions carry out poorly on the declare degree however higher on the degree of their sub-claims when used with their dataset, indicating that proposition-level splitting could be a useful step within the pipeline for an attribution system. Though present programs carry out under the human degree on this dataset and solely generally return good proof, they show that chunk-level enter processing to evaluate these sub-claims is a stable place to begin for future programs.

They supply WICE, a novel dataset for fact-checking primarily based on precise claims in Wikipedia with fine-grained annotations, as one in every of their fundamental contributions. These show how troublesome it’s to resolve entailment with the right information nonetheless. They counsel CLAIM-SPLIT, a technique for breaking down massive claims into smaller, impartial sub-claims at each the info assortment and inference time. The dataset and code might be discovered on GitHub.

Take a look at the Paper and Github. All Credit score For This Analysis Goes To the Researchers on This Undertaking. Additionally, don’t neglect to hitch our 15k+ ML SubReddit, Discord Channel, and Email Newsletter, the place we share the newest AI analysis information, cool AI initiatives, and extra.

Aneesh Tickoo is a consulting intern at MarktechPost. He’s presently pursuing his undergraduate diploma in Information Science and Synthetic Intelligence from the Indian Institute of Expertise(IIT), Bhilai. He spends most of his time engaged on initiatives geared toward harnessing the facility of machine studying. His analysis curiosity is picture processing and is captivated with constructing options round it. He loves to attach with folks and collaborate on fascinating initiatives.