5 Python Best Practices for Data Science

Image by Author

Strong Python and SQL skills are both integral to many data professionals. As a data professional, you’re probably comfortable with Python programming, so much so that writing Python code feels quite natural. But are you following best practices when working on data science projects with Python?

Though it's easy to learn Python and build data science applications with it, it's perhaps even easier to write code that's hard to maintain. To help you write better code, this tutorial explores some Python coding best practices that help with dependency management and maintainability, such as:

- Setting up dedicated virtual environments when working on data science projects locally
- Improving maintainability using type hints
- Modeling and validating data using Pydantic
- Profiling code
- Using vectorized operations when possible

So let’s get coding!

1. Use Virtual Environments for Each Project

Virtual environments ensure project dependencies are isolated, preventing conflicts between different projects. In data science, where projects often involve different sets of libraries and versions, virtual environments are particularly useful for maintaining reproducibility and managing dependencies effectively.

Virtual environments also make it easier for collaborators to set up the same project environment without worrying about conflicting dependencies.

You can use tools like Poetry to create and manage virtual environments. Poetry offers many benefits, but if all you need is to create virtual environments for your projects, you can also use the built-in venv module.

If you’re on a Linux machine (or a Mac), you can create and activate virtual environments like so:

```bash
# Create a virtual environment for the project
python -m venv my_project_env

# Activate the virtual environment
source my_project_env/bin/activate
```

If you’re a Windows user, you can check the docs on how to activate the virtual environment. Using a virtual environment for each project therefore helps keep dependencies isolated and consistent.
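The standard-library `venv` module can also be used from Python itself, both to check whether a script is running inside a virtual environment and to create one programmatically. The sketch below is a minimal illustration; the helper names `in_virtual_env` and `create_env` are hypothetical, chosen for this example.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtual_env() -> bool:
    # Inside a virtual environment, sys.prefix points at the environment
    # directory, while sys.base_prefix still points at the base installation
    return sys.prefix != sys.base_prefix


def create_env(env_dir: str, with_pip: bool = True) -> Path:
    # Roughly equivalent to running `python -m venv <env_dir>` from the shell
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir)


if __name__ == "__main__":
    print("Running inside a virtual environment:", in_virtual_env())

    # Create a throwaway environment (without pip, to keep it fast)
    with tempfile.TemporaryDirectory() as tmp:
        env_path = create_env(str(Path(tmp) / "demo_env"), with_pip=False)
        print("pyvenv.cfg created:", (env_path / "pyvenv.cfg").exists())
```

Checking `sys.prefix` against `sys.base_prefix` is a quick way to catch the common mistake of installing packages into the global interpreter by accident.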

2. Add Type Hints for Maintainability

Because Python is a dynamically typed language, you don't have to specify the data type of the variables you create. However, you can add type hints, which indicate the expected data type, to make your code more maintainable.

Let’s take the example of a function that calculates the mean of a numerical feature in a dataset, with appropriate type annotations:

```python
from typing import List

def calculate_mean(feature: List[float]) -> float:
    # Calculate mean of the feature
    mean_value = sum(feature) / len(feature)
    return mean_value
```

Here, the type hints let the user know that the calculate_mean function takes in a list of floating-point numbers and returns a floating-point value.

Remember that Python does not enforce types at runtime. But you can use mypy or similar static type checkers to raise errors for invalid types.
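To see that type hints are only metadata at runtime, consider the minimal sketch below. It reuses `calculate_mean` and adds a hypothetical `calculate_mean_checked` helper that performs an explicit `isinstance` check. A static checker such as mypy, run over the same file, should instead flag the tuple argument as incompatible with `List[float]` before the code ever runs.

```python
from typing import List, get_type_hints


def calculate_mean(feature: List[float]) -> float:
    # Calculate mean of the feature
    return sum(feature) / len(feature)


def calculate_mean_checked(feature: List[float]) -> float:
    # Hand-rolled runtime check; the annotation alone enforces nothing
    if not isinstance(feature, list) or not all(
        isinstance(x, (int, float)) for x in feature
    ):
        raise TypeError("feature must be a list of numbers")
    return calculate_mean(feature)


# Annotations are stored as metadata on the function object
print(get_type_hints(calculate_mean))

# A tuple of ints violates the hint, yet Python runs the call anyway
print(calculate_mean((1, 2, 3)))  # 2.0

# The explicit check rejects the same input
try:
    calculate_mean_checked((1, 2, 3))
except TypeError as exc:
    print("Rejected:", exc)
```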

3. Model Your Data with Pydantic

Previously, we talked about adding type hints to make code more maintainable. This works fine for Python functions. But when working with data from external sources, it's often helpful to model the data by defining classes and fields with the expected data types.
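A standard-library dataclass is one way to define such a class. The minimal sketch below, using a hypothetical `CustomerRecord` class, shows that dataclass field annotations are not checked when an instance is created:

```python
from dataclasses import dataclass


@dataclass
class CustomerRecord:
    # Hypothetical class; the annotations document intent but are not checked
    customer_id: int
    name: str
    email: str


# A string ID and a malformed email are both accepted without error
record = CustomerRecord(customer_id="not-an-int", name="John Doe", email="not-an-email")
print(record)
print(type(record.customer_id).__name__)  # str
```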

You can use built-in dataclasses in Python, but you don’t get data validation support out of the box. With Pydantic, you can model your data and also use its built-in data validation capabilities. To use Pydantic, you can install it along with the email validator using pip:

```bash
$ pip install "pydantic[email-validator]"
```

Here’s an example of modeling customer data with Pydantic. You can create a model class that inherits from BaseModel and define the various fields and attributes:

```python
from pydantic import BaseModel, EmailStr

class Customer(BaseModel):
    customer_id: int
    name: str
    email: EmailStr
    phone: str
    address: str

# Sample data
customer_data = {
    'customer_id': 1,
    'name': 'John Doe',
    'email': 'john.doe@example.com',
    'phone': '123-456-7890',
    'address': '123 Main St, City, Country'
}

# Create a customer object
customer = Customer(**customer_data)

print(customer)
```

You can take this further by adding validation to check that all fields have valid values. If you need a tutorial on using Pydantic (defining models and validating data), read Pydantic Tutorial: Data Validation in Python Made Simple.
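As a rough sketch of that next step, assuming Pydantic v2 (where `Field` constraints and the `field_validator` decorator are available), the model below rejects non-positive IDs and blank names. It is a simplified, hypothetical variant of the `Customer` model above that omits the email and address fields, so it runs without the email-validator extra; `try_parse` is likewise a hypothetical helper.

```python
from pydantic import BaseModel, Field, ValidationError, field_validator


class Customer(BaseModel):
    # Field(gt=0) rejects zero or negative IDs
    customer_id: int = Field(gt=0)
    name: str
    phone: str

    @field_validator("name")
    @classmethod
    def name_not_blank(cls, value: str) -> str:
        # Reject empty or whitespace-only names and strip surrounding spaces
        if not value.strip():
            raise ValueError("name must not be blank")
        return value.strip()


def try_parse(data: dict) -> str:
    # Return the model repr on success, or a summary of the failing fields
    try:
        return repr(Customer(**data))
    except ValidationError as exc:
        failed = ", ".join(str(err["loc"][0]) for err in exc.errors())
        return f"{len(exc.errors())} validation error(s): {failed}"


print(try_parse({"customer_id": 1, "name": " John Doe ", "phone": "123-456-7890"}))
print(try_parse({"customer_id": -5, "name": "   ", "phone": "123-456-7890"}))
```

Pydantic collects errors for every failing field rather than stopping at the first one, so the second call reports problems with both `customer_id` and `name`.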

4. Profile Code to Identify Performance Bottlenecks

Profiling code is helpful when you’re looking to optimize your application for performance. In data science projects, you can profile memory usage and execution times, depending on the context.

Suppose you're working on a machine learning project where preprocessing a large dataset is a crucial step before training your model. Let’s profile a function that applies common preprocessing steps such as standardization:

```python
import numpy as np
import cProfile

def preprocess_data(data):
    # Standardize: subtract the mean and divide by the standard deviation
    scaled_data = (data - np.mean(data)) / np.std(data)
    return scaled_data

# Generate sample data
data = np.random.rand(100)

# Profile preprocessing function
cProfile.run('preprocess_data(data)')
```

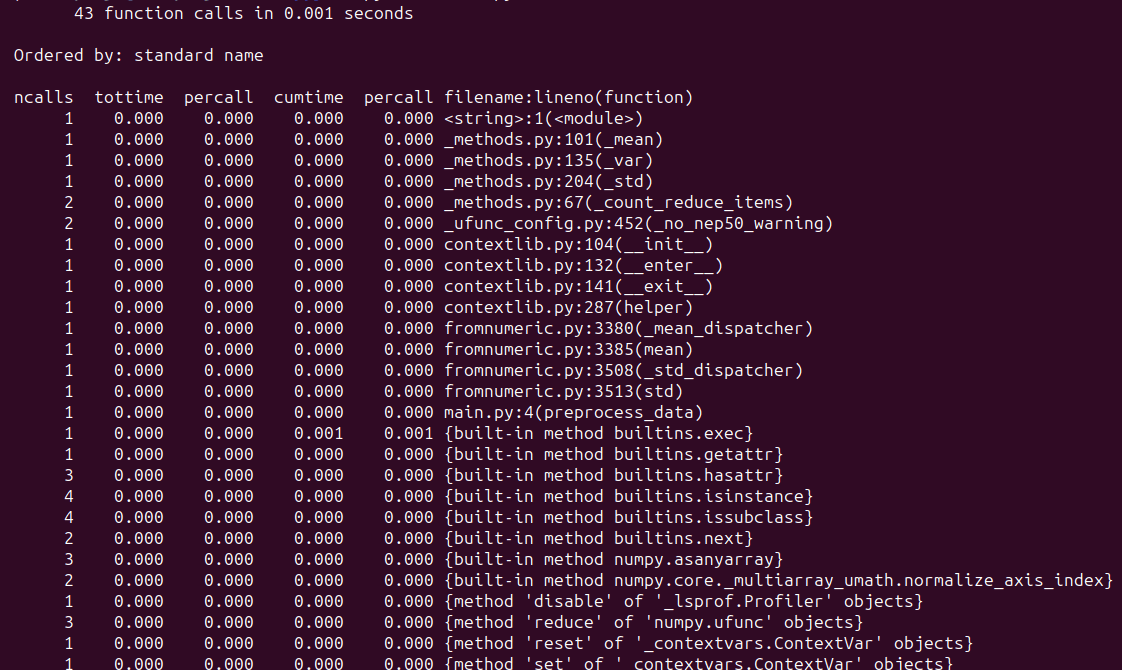

Once you run the script, you need to see an identical output:

In this example, we’re profiling the preprocess_data() function, which preprocesses sample data. In general, profiling helps identify potential bottlenecks, which then guide optimizations that improve performance. Here are tutorials on profiling in Python which you may find helpful:
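`cProfile.run` prints every function call, which quickly gets noisy for real pipelines. A minimal sketch, using only the standard library, shows how `pstats` can sort and trim the report, and how `tracemalloc` can report peak memory traced during a call. The helpers `profile_call`, `peak_memory`, and `standardize` are hypothetical; `standardize` is a pure-Python stand-in for `preprocess_data` so the example has no NumPy dependency.

```python
import cProfile
import io
import pstats
import tracemalloc


def profile_call(func, *args, top_n=5, **kwargs):
    """Run func under cProfile; return (result, text report of the top_n entries)."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args, **kwargs)
    finally:
        profiler.disable()

    buffer = io.StringIO()
    # Sort by cumulative time so the most expensive call paths are listed first
    stats = pstats.Stats(profiler, stream=buffer).sort_stats("cumulative")
    stats.print_stats(top_n)
    return result, buffer.getvalue()


def peak_memory(func, *args, **kwargs):
    """Run func under tracemalloc; return (result, peak bytes traced during the call)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def standardize(values):
    # Pure-Python z-score standardization, used only as something to profile
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    return [(v - mean) / std for v in values]


if __name__ == "__main__":
    sample = [float(i % 97) for i in range(100_000)]

    _, report = profile_call(standardize, sample)
    print(report)

    _, peak = peak_memory(standardize, sample)
    print(f"Peak traced memory: {peak / 1024:.1f} KiB")
```

Sorting by `"cumulative"` surfaces the functions whose total time (including callees) dominates; sorting by `"tottime"` instead highlights functions that are slow in their own bodies.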

5. Use NumPy’s Vectorized Operations

For any data processing task, you can always write a Python implementation from scratch. But you may not want to when working with large arrays of numbers. Most common operations you need to perform can be formulated as operations on vectors, and NumPy can perform them far more efficiently.

Let’s take the following example of element-wise multiplication:

```python
import numpy as np
import timeit

# Set seed for reproducibility
np.random.seed(42)

# Arrays with 1 million random integers
array1 = np.random.randint(1, 10, size=1000000)
array2 = np.random.randint(1, 10, size=1000000)
```

Here are the Python-only and NumPy implementations:

```python
# NumPy vectorized implementation for element-wise multiplication
def elementwise_multiply_numpy(array1, array2):
    return array1 * array2

# Pure-Python loop implementation of element-wise multiplication
def elementwise_multiply_python(array1, array2):
    result = []
    for x, y in zip(array1, array2):
        result.append(x * y)
    return result
```

Let’s use the timeit function from the timeit module to measure the execution times of the above implementations:

```python
# Measure average execution time over 10 runs for the NumPy implementation
numpy_execution_time = timeit.timeit(lambda: elementwise_multiply_numpy(array1, array2), number=10) / 10
numpy_execution_time = round(numpy_execution_time, 6)

# Measure average execution time over 10 runs for the Python implementation
python_execution_time = timeit.timeit(lambda: elementwise_multiply_python(array1, array2), number=10) / 10
python_execution_time = round(python_execution_time, 6)

# Compare execution times
print("NumPy Execution Time:", numpy_execution_time, "seconds")
print("Python Execution Time:", python_execution_time, "seconds")
```

In this run, the NumPy implementation is roughly 86 times faster (exact timings will vary by machine):

```
Output >>>
NumPy Execution Time: 0.00251 seconds
Python Execution Time: 0.216055 seconds
```
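The same idea extends beyond arithmetic. As a minimal sketch, a conditional you might write as a Python loop (here, replacing negative values with zero) can be expressed with `np.where`, which evaluates the condition over the whole array at once. The function names are hypothetical, and the `assert` checks that both versions agree.

```python
import numpy as np

# Use the Generator API for reproducible random numbers
rng = np.random.default_rng(42)
values = rng.normal(loc=0.0, scale=1.0, size=100_000)


def clip_negative_python(arr):
    # Loop version: visits one element at a time in the interpreter
    return [x if x > 0 else 0.0 for x in arr]


def clip_negative_numpy(arr):
    # Vectorized version: the comparison and selection run in compiled code
    return np.where(arr > 0, arr, 0.0)


# Both implementations should produce the same values
assert np.allclose(clip_negative_numpy(values), clip_negative_python(values))
print(clip_negative_numpy(np.array([-1.5, 0.0, 2.0])))
```

For this particular operation, `np.maximum(arr, 0.0)` would work equally well; `np.where` is shown because it generalizes to arbitrary if/else replacements.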

Wrapping Up

In this tutorial, we have explored a few Python coding best practices for data science. I hope you found them helpful.

If you’re interested in learning Python for data science, check out 5 Free Courses Master Python for Data Science. Happy learning!

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she’s working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource overviews and coding tutorials.