Enhancing Neural Network Interpretability and Performance with Wavelet-Integrated Kolmogorov-Arnold Networks (Wav-KAN)

Advances in AI have produced capable systems that make opaque decisions, raising concerns about deploying untrustworthy AI in daily life and the economy. Understanding neural networks is vital for trust, for ethical concerns such as algorithmic bias, and for scientific applications that require model validation. Multilayer perceptrons (MLPs) are widely used but are less interpretable than attention layers. Model renovation aims to improve interpretability through specially designed components. Kolmogorov-Arnold Networks (KANs), based on the Kolmogorov-Arnold theorem, offer improved interpretability and accuracy. Recent work extends KANs to arbitrary widths and depths using B-splines, an approach known as Spl-KAN.

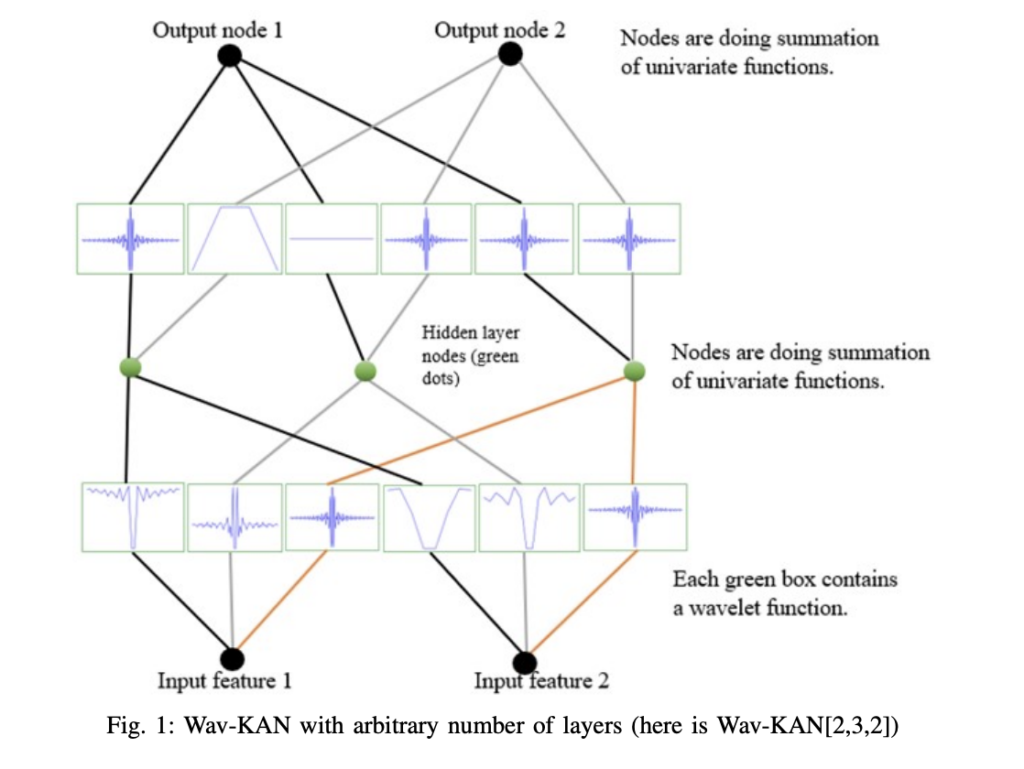

Researchers from Boise State University have developed Wav-KAN, a neural network architecture that improves interpretability and performance by using wavelet functions within the KAN framework. Unlike traditional MLPs and Spl-KAN, Wav-KAN efficiently captures both high- and low-frequency components of the data, improving training speed, accuracy, robustness, and computational efficiency. Because it adapts to the structure of the data, Wav-KAN avoids overfitting and performs better. This work demonstrates Wav-KAN's potential as a powerful, interpretable neural network tool with applications across many fields, with implementations in frameworks such as PyTorch and TensorFlow.

Wavelets and B-splines are key methods for function approximation, each with distinct advantages and drawbacks in neural networks. B-splines offer smooth, locally controlled approximations but struggle with high-dimensional data. Wavelets excel at multi-resolution analysis and handle both high- and low-frequency data, which makes them well suited to feature extraction and efficient neural network architectures. Wav-KAN outperforms Spl-KAN and MLPs in training speed, accuracy, and robustness by using wavelets to capture the structure of the data without overfitting. Wav-KAN's parameter efficiency and independence from a fixed grid make it better suited to complex tasks, and batch normalization further improves its performance.
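The contrast between the two families can be made concrete with a short NumPy sketch. It evaluates cubic B-spline basis functions on a fixed uniform knot grid using the Cox-de Boor recursion, next to a Mexican hat wavelet (up to its normalizing constant) whose width and position are set by a continuous `scale` and `shift`. The function names, the knot grid, and the parameter values are illustrative assumptions, not code from the Wav-KAN paper.

```python
import numpy as np

def bspline_basis(x, knots, degree):
    """All B-spline basis functions of `degree` on `knots`, evaluated at x
    with the Cox-de Boor recursion. Returns shape (len(x), len(knots) - degree - 1)."""
    x = np.asarray(x, dtype=float)[:, None]
    t = np.asarray(knots, dtype=float)
    # Degree 0: indicator of each half-open knot interval [t_i, t_{i+1}).
    B = ((x >= t[:-1]) & (x < t[1:])).astype(float)
    for k in range(1, degree + 1):
        # B_{i,k} = (x - t_i)/(t_{i+k} - t_i) B_{i,k-1}
        #         + (t_{i+k+1} - x)/(t_{i+k+1} - t_{i+1}) B_{i+1,k-1}
        left = (x - t[:-k - 1]) / (t[k:-1] - t[:-k - 1]) * B[:, :-1]
        right = (t[k + 1:] - x) / (t[k + 1:] - t[1:-k]) * B[:, 1:]
        B = left + right
    return B

def mexican_hat(x, scale=1.0, shift=0.0):
    """Mexican hat wavelet (unnormalized) dilated by `scale`, translated by `shift`."""
    t = (x - shift) / scale
    return (1.0 - t**2) * np.exp(-t**2 / 2.0)

knots = np.arange(-5.0, 6.0)            # fixed uniform grid, spacing 1
x = np.linspace(-1.9, 1.9, 5)
B = bspline_basis(x, knots, degree=3)   # 7 cubic basis functions
print(B.shape)                          # (5, 7)
print(np.allclose(B.sum(axis=1), 1.0))  # True: partition of unity on [-2, 2)

# One wavelet shape at two resolutions: zero crossings sit at shift +/- scale.
print(mexican_hat(np.array([0.5, 2.0]), scale=np.array([0.5, 2.0])))  # [0. 0.]
```

Each B-spline is tied to a few adjacent knots, so changing the resolution means rebuilding the grid; the wavelet changes resolution through a single continuous parameter, which is the property the paragraph above credits for Wav-KAN's grid independence.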

KANs are inspired by the Kolmogorov-Arnold Representation Theorem, which states that any continuous multivariate function can be decomposed into a sum of univariate functions applied to sums of univariate functions. In KANs, instead of conventional weights and fixed activation functions, each "weight" is a learnable function. This lets KANs transform inputs through adaptable functions, yielding more precise function approximation with fewer parameters. During training, these functions are optimized to minimize the loss function, improving the model's accuracy and interpretability by learning the relationships in the data directly. KANs therefore offer a flexible and efficient alternative to traditional neural networks.
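For reference, the theorem and the KAN layer built on it can be written out as follows. The first equation is the standard statement for a continuous function on the unit cube, and the second is the layer rule of the original KAN formulation, where every edge from node i to node j carries its own learnable univariate function. The third line, a weighted mother wavelet ψ with a learnable scale s and translation τ on each edge, is an assumed parameterization for illustration and may differ in detail from the paper.

```latex
% Kolmogorov-Arnold representation of a continuous f : [0,1]^n \to \mathbb{R}
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)

% KAN layer l \to l+1: a learnable univariate function on every edge (i \to j)
x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i})

% Wav-KAN-style edge function (assumed parameterization)
\phi_{l,j,i}(x) = w_{l,j,i}\, \psi\!\left( \frac{x - \tau_{l,j,i}}{s_{l,j,i}} \right)
```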

Experiments with the KAN model on the MNIST dataset using several wavelet transformations showed promising results. The study used 60,000 training and 10,000 test images, with wavelet types including Mexican hat, Morlet, Derivative of Gaussian (DOG), and Shannon. Both Wav-KAN and Spl-KAN used batch normalization and a layer structure of [28*28, 32, 10] nodes. The models were trained for 50 epochs over 5 trials using the AdamW optimizer and cross-entropy loss. The results showed that wavelets such as DOG and Mexican hat outperformed Spl-KAN by effectively capturing essential features and remaining robust to noise, underscoring the critical role of wavelet choice.
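A forward pass through a network of the shape described above can be sketched in NumPy. The four mother wavelets use common textbook forms (the normalizations, the Morlet frequency `omega0=5`, and the DOG sign are assumptions), and `WavKANLayer` is a hypothetical class in which each edge computes `w * psi((x - shift) / scale)`. Placing batch normalization between layers is also an assumption. Training with AdamW and cross-entropy, as in the study, would need an autodiff framework such as PyTorch and is omitted here.

```python
import numpy as np

# Mother wavelets named in the experiments (textbook forms; exact choices assumed).
def mexican_hat(t):
    return 2.0 / (np.sqrt(3.0) * np.pi ** 0.25) * (1.0 - t**2) * np.exp(-t**2 / 2.0)

def morlet(t, omega0=5.0):
    # Real-valued Morlet; omega0 = 5 is a common default, assumed here.
    return np.cos(omega0 * t) * np.exp(-t**2 / 2.0)

def dog(t):
    # First derivative of the Gaussian exp(-t^2 / 2).
    return -t * np.exp(-t**2 / 2.0)

def shannon(t):
    # np.sinc is the normalized sinc, sin(pi x) / (pi x).
    return 2.0 * np.sinc(2.0 * t) - np.sinc(t)

WAVELETS = {"mexican_hat": mexican_hat, "morlet": morlet, "dog": dog, "shannon": shannon}


class WavKANLayer:
    """Hypothetical Wav-KAN-style layer: edge i -> j applies
    weight[j, i] * psi((x_i - shift[j, i]) / scale[j, i]); outputs sum over i."""

    def __init__(self, n_in, n_out, wavelet="mexican_hat", seed=0):
        rng = np.random.default_rng(seed)
        self.psi = WAVELETS[wavelet]
        self.weight = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in))
        self.scale = np.ones((n_out, n_in))   # learnable dilation per edge
        self.shift = np.zeros((n_out, n_in))  # learnable translation per edge

    def __call__(self, x):
        # x: (batch, n_in) -> t: (batch, n_out, n_in) -> output: (batch, n_out)
        t = (x[:, None, :] - self.shift) / self.scale
        return np.sum(self.weight * self.psi(t), axis=-1)


def batch_norm(y, eps=1e-5):
    # Normalize each feature over the batch (no learnable affine terms here).
    return (y - y.mean(axis=0)) / np.sqrt(y.var(axis=0) + eps)


def forward(x, layers):
    for i, layer in enumerate(layers):
        x = layer(x)
        if i < len(layers) - 1:
            x = batch_norm(x)  # assumed placement: between layers only
    return x


# [28*28, 32, 10] structure with the DOG wavelet.
layers = [WavKANLayer(28 * 28, 32, "dog", seed=0), WavKANLayer(32, 10, "dog", seed=1)]
images = np.random.default_rng(2).random((8, 28 * 28))  # stand-in for flattened MNIST pixels
logits = forward(images, layers)
print(logits.shape)  # (8, 10)
```

Swapping `"dog"` for another key in `WAVELETS` changes only the edge function, which mirrors how the study compared wavelet types on an otherwise identical network. In a trainable version, the scales would typically be kept positive, for example by learning their logarithm.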

In conclusion, Wav-KAN is a new neural network architecture that integrates wavelet functions into KANs to improve interpretability and performance. Thanks to the multiresolution analysis of wavelets, Wav-KAN captures complex data patterns more effectively than traditional MLPs and Spl-KANs. Experiments show that Wav-KAN achieves higher accuracy and faster training owing to its combination of wavelet transforms and the Kolmogorov-Arnold representation theorem. This structure improves parameter efficiency and model interpretability, making Wav-KAN a valuable tool for diverse applications. Future work will further optimize the architecture and broaden its implementation in machine learning frameworks such as PyTorch and TensorFlow.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
