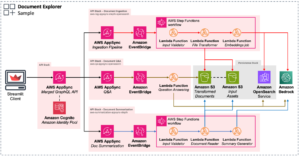

Building scalable, secure, and reliable RAG applications using Knowledge Bases for Amazon Bedrock

Generative artificial intelligence (AI) has gained significant momentum, with organizations actively exploring its potential applications. As successful proofs of concept transition into production, organizations increasingly need solutions that scale to the enterprise. However, to unlock the long-term success and viability of these AI-powered solutions, it’s crucial to align them with well-established architectural principles.

The AWS Well-Architected Framework provides best practices and guidelines for designing and operating reliable, secure, efficient, and cost-effective systems in the cloud. Aligning generative AI applications with this framework is essential for several reasons, including providing scalability, maintaining security and privacy, achieving reliability, optimizing costs, and streamlining operations. Embracing these principles is critical for organizations seeking to use the power of generative AI and drive innovation.

This post explores the new enterprise-grade features for Knowledge Bases for Amazon Bedrock and how they align with the AWS Well-Architected Framework. With Knowledge Bases for Amazon Bedrock, you can quickly build applications using Retrieval Augmented Generation (RAG) for use cases like question answering, contextual chatbots, and personalized search.

Here are the features that we will cover:

- AWS CloudFormation support

- Private network policies for Amazon OpenSearch Serverless

- Multiple S3 buckets as data sources

- Service Quotas support

- Hybrid search, metadata filters, custom prompts for the RetrieveAndGenerate API, and maximum number of retrievals

AWS Well-Architected design principles

RAG-based applications built using Knowledge Bases for Amazon Bedrock can greatly benefit from following the AWS Well-Architected Framework. This framework has six pillars that help organizations make sure that their applications are secure, high-performing, resilient, efficient, cost-effective, and sustainable:

- Operational Excellence – Well-Architected principles streamline operations, automate processes, and enable continuous monitoring and improvement of generative AI app performance.

- Security – Implementing strong access controls, encryption, and monitoring helps secure sensitive data used in your organization’s knowledge base and prevent misuse of generative AI.

- Reliability – Well-Architected principles guide the design of resilient and fault-tolerant systems, providing consistent value delivery to users.

- Performance Efficiency – Choosing the appropriate resources, implementing caching strategies, and proactively monitoring performance metrics ensure that applications deliver fast and accurate responses, leading to optimal performance and an enhanced user experience.

- Cost Optimization – Well-Architected guidelines assist in optimizing resource usage, using cost-saving services, and monitoring expenses, resulting in long-term viability of generative AI projects.

- Sustainability – Well-Architected principles promote efficient resource utilization and minimizing carbon footprints, addressing the environmental impact of growing generative AI usage.

By aligning with the Well-Architected Framework, organizations can effectively build and manage enterprise-grade RAG applications using Knowledge Bases for Amazon Bedrock. Now, let’s dive deep into the new features launched within Knowledge Bases for Amazon Bedrock.

AWS CloudFormation support

For organizations building RAG applications, it’s important to provide efficient and effective operations and consistent infrastructure across different environments. This can be achieved by implementing practices such as automating deployment processes. To accomplish this, Knowledge Bases for Amazon Bedrock now offers support for AWS CloudFormation.

With AWS CloudFormation and the AWS Cloud Development Kit (AWS CDK), you can now create, update, and delete knowledge bases and associated data sources. Adopting AWS CloudFormation and the AWS CDK for managing knowledge bases and associated data sources not only streamlines the deployment process, but also promotes adherence to the Well-Architected principles. By performing operations (applications, infrastructure) as code, you can provide consistent and reliable deployments in multiple AWS accounts and AWS Regions, and maintain versioned and auditable infrastructure configurations.

The following is a sample CloudFormation script in JSON format for creating and updating a knowledge base in Amazon Bedrock:
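A minimal sketch of such a template, assembled here as a Python dictionary and serialized to JSON, might look like the following. The logical ID `SampleKnowledgeBase`, the resource names, the field-mapping names, and every ARN are hypothetical placeholders. The OpenSearch Serverless storage settings are one assumed choice among the supported vector stores, so check the property names against the AWS::Bedrock::KnowledgeBase reference before relying on them.

```python
import json


def knowledge_base_template(name, role_arn, embedding_model_arn,
                            collection_arn, vector_index_name):
    """Return a CloudFormation template (as a dict) declaring one knowledge base."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            # "SampleKnowledgeBase" is a hypothetical logical ID.
            "SampleKnowledgeBase": {
                "Type": "AWS::Bedrock::KnowledgeBase",
                "Properties": {
                    "Name": name,
                    "RoleArn": role_arn,
                    "KnowledgeBaseConfiguration": {
                        "Type": "VECTOR",
                        "VectorKnowledgeBaseConfiguration": {
                            "EmbeddingModelArn": embedding_model_arn,
                        },
                    },
                    "StorageConfiguration": {
                        # Assumption: OpenSearch Serverless is the vector store.
                        "Type": "OPENSEARCH_SERVERLESS",
                        "OpensearchServerlessConfiguration": {
                            "CollectionArn": collection_arn,
                            "VectorIndexName": vector_index_name,
                            # Illustrative field names; they must match the index.
                            "FieldMapping": {
                                "VectorField": "embedding",
                                "TextField": "text",
                                "MetadataField": "metadata",
                            },
                        },
                    },
                },
            }
        },
    }


# All identifiers below are placeholders, not real resources.
template = knowledge_base_template(
    name="sample-kb",
    role_arn="arn:aws:iam::111122223333:role/SampleKnowledgeBaseRole",
    embedding_model_arn="arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-embed-text-v1",
    collection_arn="arn:aws:aoss:us-east-1:111122223333:collection/samplecollectionid",
    vector_index_name="sample-index",
)
print(json.dumps(template, indent=2))
```

Generating the template from a function keeps environment-specific values (ARNs, names) as parameters, which fits the goal of consistent deployments across accounts and Regions.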

The Type value AWS::Bedrock::KnowledgeBase specifies a knowledge base as a resource in a top-level template. Minimally, you must specify the following properties:

- Name – Specify a name for the knowledge base.

- RoleArn – Specify the Amazon Resource Name (ARN) of the AWS Identity and Access Management (IAM) role with permissions to invoke API operations on the knowledge base. For more information, see Create a service role for Knowledge bases for Amazon Bedrock.

- KnowledgeBaseConfiguration – Specify the embeddings configuration of the knowledge base. The following sub-properties are required:

  - Type – Specify the value VECTOR.

  - VectorKnowledgeBaseConfiguration – Contains details about the model used to create vector embeddings for the knowledge base.

- StorageConfiguration – Specify information about the vector store in which the data source is stored. The following sub-properties are required:

  - Type – Specify the vector store service that you are using.

  - You also need to select one of the vector stores supported by Knowledge Bases, such as OpenSearch Serverless, Pinecone, or Amazon Aurora PostgreSQL, and provide the configuration for the selected vector store.

For details on all the fields and on configuring the various vector stores supported by Knowledge Bases for Amazon Bedrock, refer to AWS::Bedrock::KnowledgeBase.

Redis Enterprise Cloud vector stores are not supported in AWS CloudFormation as of this writing. For the latest information, refer to the documentation above.

After you create a knowledge base, you need to create a data source from the Amazon Simple Storage Service (Amazon S3) bucket containing the files for your knowledge base. The data source resource calls the CreateDataSource and DeleteDataSource APIs.

The following is the sample CloudFormation script in JSON format:
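As a hedged sketch, the data source resource can be built the same way in Python and printed as JSON. The knowledge base ID, bucket ARN, KMS key ARN, and the chunking values (300 tokens, 20 percent overlap) are illustrative assumptions, and the fixed-size chunking strategy is only one possible choice; verify the property names against the AWS::Bedrock::DataSource reference.

```python
import json


def data_source_resource(name, knowledge_base_id, bucket_arn,
                         kms_key_arn=None, max_tokens=300, overlap_percentage=20):
    """Return a CloudFormation resource (as a dict) for an S3 data source."""
    properties = {
        "Name": name,
        "KnowledgeBaseId": knowledge_base_id,
        "DataSourceConfiguration": {
            "Type": "S3",
            "S3Configuration": {"BucketArn": bucket_arn},
        },
        "VectorIngestionConfiguration": {
            # Chunking cannot be changed after the data source is created.
            "ChunkingConfiguration": {
                "ChunkingStrategy": "FIXED_SIZE",
                "FixedSizeChunkingConfiguration": {
                    "MaxTokens": max_tokens,
                    "OverlapPercentage": overlap_percentage,
                },
            }
        },
    }
    if kms_key_arn is not None:
        # Optional: encrypt with a customer managed AWS KMS key.
        properties["ServerSideEncryptionConfiguration"] = {"KmsKeyArn": kms_key_arn}
    return {"Type": "AWS::Bedrock::DataSource", "Properties": properties}


# Placeholder identifiers, not real resources.
data_source = data_source_resource(
    name="sample-docs",
    knowledge_base_id="KBID123456",
    bucket_arn="arn:aws:s3:::sample-docs-bucket",
    kms_key_arn="arn:aws:kms:us-east-1:111122223333:key/sample-key-id",
)
print(json.dumps({"Resources": {"SampleDataSource": data_source}}, indent=2))
```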

The Type value AWS::Bedrock::DataSource specifies a data source as a resource in a top-level template. Minimally, you must specify the following properties:

- Name – Specify a name for the data source.

- KnowledgeBaseId – Specify the ID of the knowledge base for the data source to belong to.

- DataSourceConfiguration – Specify information about the S3 bucket containing the data source. The following sub-properties are required:

  - Type – Specify the value S3.

  - S3Configuration – Contains details about the configuration of the S3 object containing the data source.

- VectorIngestionConfiguration – Contains details about how to ingest the documents in a data source. You need to provide ChunkingConfiguration, where you can define your chunking strategy.

- ServerSideEncryptionConfiguration – Contains the configuration for server-side encryption, where you can provide the Amazon Resource Name (ARN) of the AWS KMS key used to encrypt the resource.

For more information about setting up data sources in Amazon Bedrock, see Set up a data source for your knowledge base.

Note: You cannot change the chunking configuration after you create the data source.

The CloudFormation template allows you to define and manage your knowledge base resources using infrastructure as code (IaC). By automating the setup and management of the knowledge base, you can provide a consistent infrastructure across different environments. This approach aligns with the Operational Excellence pillar, which emphasizes performing operations as code. By treating your entire workload as code, you can automate processes, create consistent responses to events, and ultimately reduce human errors.

Private network policies for Amazon OpenSearch Serverless

For companies building RAG applications, it’s critical that the data remains secure and the network traffic does not go to the public internet. To support this, Knowledge Bases for Amazon Bedrock now supports private network policies for Amazon OpenSearch Serverless.

Knowledge Bases for Amazon Bedrock provides an option for using OpenSearch Serverless as a vector store. You can now access OpenSearch Serverless collections that have a private network policy, which further enhances the security posture for your RAG application. To achieve this, you need to create an OpenSearch Serverless collection and configure it for private network access. While creating the collection, set Network access settings to Private and specify the VPC endpoint for access. Then, create a vector index within the collection to store the embeddings. Importantly, you can now provide private network access to OpenSearch Serverless collections specifically for Amazon Bedrock. To do this, select AWS service private access and specify bedrock.amazonaws.com as the service.

This private network configuration makes sure that your embeddings are stored securely and are only accessible by Amazon Bedrock, enhancing the overall security and privacy of your knowledge bases. It aligns closely with the Security pillar principle of controlling traffic at all layers, because all network traffic is kept within the AWS backbone with these settings.
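The console settings described above correspond to an OpenSearch Serverless network policy document. The following Python sketch builds one under stated assumptions: the collection name and VPC endpoint ID are hypothetical, and the key names (`AllowFromPublic`, `SourceVPCEs`, `SourceServices`) reflect the network policy format as we understand it, so confirm them against the OpenSearch Serverless network access documentation.

```python
import json


def private_network_policy(collection_name, vpc_endpoint_ids=(), allow_bedrock=True):
    """Return a JSON network policy that blocks public access to one collection."""
    rule = {
        "Description": f"Private access to {collection_name}",
        "Rules": [
            {
                "ResourceType": "collection",
                "Resource": [f"collection/{collection_name}"],
            }
        ],
        "AllowFromPublic": False,
    }
    if vpc_endpoint_ids:
        # Access from OpenSearch Serverless-managed VPC endpoints.
        rule["SourceVPCEs"] = list(vpc_endpoint_ids)
    if allow_bedrock:
        # AWS service private access for Amazon Bedrock.
        rule["SourceServices"] = ["bedrock.amazonaws.com"]
    return json.dumps([rule])


# Hypothetical collection name and endpoint ID.
policy = private_network_policy("sample-kb-collection", ["vpce-0123456789abcdef0"])
print(policy)

# The string could then be passed to the OpenSearch Serverless API, for example
# boto3.client("opensearchserverless").create_security_policy(
#     name="sample-kb-network", type="network", policy=policy)
# That call is not executed here because it needs AWS credentials.
```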

So far, we’ve explored the automation of creating, deleting, and updating knowledge base resources and the enhanced security through private network policies for OpenSearch Serverless to store vector embeddings securely. Now, let’s understand how to build more reliable, comprehensive, and cost-optimized RAG applications.

Multiple S3 buckets as data sources

Knowledge Bases for Amazon Bedrock now supports adding multiple S3 buckets as data sources within a single knowledge base, including cross-account access. This enhancement increases the knowledge base’s comprehensiveness and accuracy by allowing users to aggregate and use information from various sources seamlessly.

The following are key features:

- Multiple S3 buckets – Knowledge Bases for Amazon Bedrock can now incorporate data from multiple S3 buckets, enabling users to combine and use information from different sources effortlessly. This feature promotes data diversity and makes sure that relevant information is readily available for RAG-based applications.

- Cross-account data access – Knowledge Bases for Amazon Bedrock supports the configuration of S3 buckets as data sources across different accounts. You can provide the necessary credentials to access these data sources, expanding the range of information that can be incorporated into your knowledge bases.

- Efficient data management – When setting up a data source in a knowledge base, you can specify whether the data belonging to that data source should be retained or deleted if the data source is deleted. This feature helps keep your knowledge base up to date and free from obsolete or irrelevant data, maintaining the integrity and accuracy of the RAG process.

Supporting multiple S3 buckets as data sources eliminates the need to create multiple knowledge bases or redundant data copies, thereby optimizing cost and promoting cloud financial management. Furthermore, the cross-account access capabilities enable the development of resilient architectures, aligning with the Reliability pillar of the AWS Well-Architected Framework and providing high availability and fault tolerance.
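To illustrate the three features above, the following sketch declares two S3 data sources for one knowledge base, one of them in another account with a different deletion policy. The account ID, bucket ARNs, knowledge base ID, and logical IDs are hypothetical; the `BucketOwnerAccountId` and `DataDeletionPolicy` property names should be checked against the AWS::Bedrock::DataSource reference, and the IAM and bucket policy permissions that cross-account access requires are out of scope here.

```python
import json


def s3_data_source(name, knowledge_base_id, bucket_arn,
                   bucket_owner_account_id=None, data_deletion_policy="RETAIN"):
    """Return a CloudFormation data source resource for one S3 bucket."""
    if data_deletion_policy not in ("RETAIN", "DELETE"):
        raise ValueError("data_deletion_policy must be 'RETAIN' or 'DELETE'")
    s3_config = {"BucketArn": bucket_arn}
    if bucket_owner_account_id is not None:
        # Set only when the bucket lives in a different AWS account.
        s3_config["BucketOwnerAccountId"] = bucket_owner_account_id
    return {
        "Type": "AWS::Bedrock::DataSource",
        "Properties": {
            "Name": name,
            "KnowledgeBaseId": knowledge_base_id,
            # What happens to ingested data if the data source is deleted.
            "DataDeletionPolicy": data_deletion_policy,
            "DataSourceConfiguration": {"Type": "S3", "S3Configuration": s3_config},
        },
    }


KB_ID = "KBID123456"  # hypothetical knowledge base ID
resources = {
    "SameAccountDocs": s3_data_source(
        "product-docs", KB_ID, "arn:aws:s3:::sample-product-docs"),
    "PartnerAccountDocs": s3_data_source(
        "partner-docs", KB_ID, "arn:aws:s3:::sample-partner-docs",
        bucket_owner_account_id="444455556666",
        data_deletion_policy="DELETE"),
}
print(json.dumps({"Resources": resources}, indent=2))
```

Both resources share the same `KnowledgeBaseId`, which is what lets a single knowledge base draw on several buckets instead of duplicating data or knowledge bases.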

Other recently announced features for Knowledge Bases

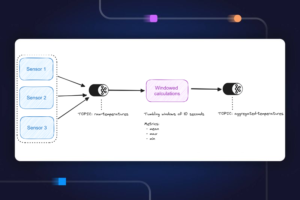

To further enhance the reliability of your RAG application, Knowledge Bases for Amazon Bedrock now extends support for Service Quotas. This feature provides a single pane of glass to view applied AWS quota values and usage. For example, you now have quick access to information such as the allowed number of `RetrieveAndGenerate` API requests per second.

This feature allows you to effectively manage resource quotas, prevent overprovisioning, and limit API request rates to safeguard services from potential abuse.
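Quota values can also be read programmatically. The sketch below filters the paginated output of the Service Quotas `ListServiceQuotas` operation by quota name. It runs against a small offline stand-in client so that it needs no credentials; the quota names and values in the stand-in are made up for illustration and are not real Amazon Bedrock quotas, and the `bedrock` service code is an assumption to verify.

```python
def find_quotas(client, service_code, name_contains):
    """Return (name, value) pairs for quotas whose name contains a substring."""
    matches = []
    kwargs = {"ServiceCode": service_code}
    while True:
        page = client.list_service_quotas(**kwargs)
        for quota in page.get("Quotas", []):
            if name_contains.lower() in quota["QuotaName"].lower():
                matches.append((quota["QuotaName"], quota["Value"]))
        token = page.get("NextToken")
        if not token:
            return matches
        kwargs["NextToken"] = token  # fetch the next page


class FakeQuotasClient:
    """Offline stand-in that mimics the paginated response shape."""

    def __init__(self, pages):
        self._pages = pages

    def list_service_quotas(self, ServiceCode, NextToken=None):
        index = int(NextToken) if NextToken else 0
        page = {"Quotas": self._pages[index]}
        if index + 1 < len(self._pages):
            page["NextToken"] = str(index + 1)
        return page


# Made-up quota names and values, for illustration only.
fake_pages = [
    [{"QuotaName": "RetrieveAndGenerate requests per second", "Value": 5.0}],
    [{"QuotaName": "Knowledge bases per account", "Value": 100.0}],
]
found = find_quotas(FakeQuotasClient(fake_pages), "bedrock", "retrieveandgenerate")
print(found)
```

With real credentials, the same function could be called with `boto3.client("service-quotas")` in place of the stand-in.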

You can also enhance your application’s performance by using recently announced features like hybrid search, filtering based on metadata, custom prompts for the RetrieveAndGenerate API, and maximum number of retrievals. These features collectively improve the accuracy, relevance, and consistency of generated responses, and align with the Performance Efficiency pillar of the AWS Well-Architected Framework.
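These four options all map to fields of a single RetrieveAndGenerate request. The following sketch only builds the request dictionary and does not call AWS. The knowledge base ID, model ARN, metadata key `department`, and prompt text are hypothetical, and the field names follow the request shape as we understand it from the Agents for Amazon Bedrock runtime API, so verify them against the API reference.

```python
def build_rag_request(question, knowledge_base_id, model_arn, max_results=5,
                      hybrid=True, metadata_filter=None, prompt_template=None):
    """Build keyword arguments for a RetrieveAndGenerate call."""
    vector_search = {
        # Maximum number of retrievals.
        "numberOfResults": max_results,
        # Hybrid search combines semantic and keyword matching.
        "overrideSearchType": "HYBRID" if hybrid else "SEMANTIC",
    }
    if metadata_filter is not None:
        vector_search["filter"] = metadata_filter
    kb_config = {
        "knowledgeBaseId": knowledge_base_id,
        "modelArn": model_arn,
        "retrievalConfiguration": {"vectorSearchConfiguration": vector_search},
    }
    if prompt_template is not None:
        # Custom prompt; $search_results$ marks where retrieved text goes.
        kb_config["generationConfiguration"] = {
            "promptTemplate": {"textPromptTemplate": prompt_template}
        }
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": kb_config,
        },
    }


# Placeholder IDs and an illustrative metadata key.
request = build_rag_request(
    question="What is our travel reimbursement limit?",
    knowledge_base_id="KBID123456",
    model_arn="arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-v2",
    max_results=8,
    metadata_filter={"equals": {"key": "department", "value": "finance"}},
    prompt_template="Answer using only these results:\n$search_results$",
)
```

With credentials configured, the dictionary could be passed as `boto3.client("bedrock-agent-runtime").retrieve_and_generate(**request)`.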

Knowledge Bases for Amazon Bedrock aligns with the Sustainability pillar of the AWS Well-Architected Framework by using managed services and optimizing resource utilization. As a fully managed service, Knowledge Bases for Amazon Bedrock removes the burden of provisioning, managing, and scaling the underlying infrastructure, thereby reducing the environmental impact associated with operating and maintaining these resources.

Additionally, by aligning with the AWS Well-Architected principles, organizations can design and operate their RAG applications in a sustainable manner. Practices such as automating deployments through AWS CloudFormation, implementing private network policies for secure data access, and using efficient services like OpenSearch Serverless contribute to minimizing the environmental impact of these workloads.

Overall, Knowledge Bases for Amazon Bedrock, combined with the AWS Well-Architected Framework, empowers organizations to build scalable, secure, and reliable RAG applications while prioritizing environmental sustainability through efficient resource utilization and the adoption of managed services.

Conclusion

The new enterprise-grade features, such as AWS CloudFormation support, private network policies, the ability to use multiple S3 buckets as data sources, and support for Service Quotas, make it straightforward to build scalable, secure, and reliable RAG applications with Knowledge Bases for Amazon Bedrock. Using AWS managed services and following Well-Architected best practices allows organizations to focus on delivering innovative generative AI solutions while providing operational excellence, robust security, and efficient resource utilization. As you build applications on AWS, aligning RAG applications with the AWS Well-Architected Framework provides a solid foundation for building enterprise-grade solutions that drive business value while adhering to industry standards.

For additional resources, refer to the following:

About the authors

Mani Khanuja is a Tech Lead – Generative AI Specialists, author of the book Applied Machine Learning and High Performance Computing on AWS, and a member of the Board of Directors for Women in Manufacturing Education Foundation Board. She leads machine learning projects in various domains such as computer vision, natural language processing, and generative AI. She speaks at internal and external conferences such as AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.

Nitin Eusebius is a Sr. Enterprise Solutions Architect at AWS, experienced in Software Engineering, Enterprise Architecture, and AI/ML. He is deeply passionate about exploring the possibilities of generative AI. He collaborates with customers to help them build well-architected applications on the AWS platform, and is dedicated to solving technology challenges and assisting with their cloud journey.

Pallavi Nargund is a Principal Solutions Architect at AWS. In her role as a cloud technology enabler, she works with customers to understand their goals and challenges, and gives prescriptive guidance to achieve their objective with AWS offerings. She is passionate about women in technology and is a core member of Women in AI/ML at Amazon. She speaks at internal and external conferences such as AWS re:Invent, AWS Summits, and webinars. Outside of work she enjoys volunteering, gardening, cycling, and hiking.
