Enhance conversational AI with advanced routing techniques with Amazon Bedrock

Conversational artificial intelligence (AI) assistants are engineered to provide precise, real-time responses through intelligent routing of queries to the most suitable AI functions. With AWS generative AI services like Amazon Bedrock, developers can create systems that expertly manage and respond to user requests. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon using a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

This post assesses two primary approaches for developing AI assistants: using managed services such as Agents for Amazon Bedrock, and employing open source technologies like LangChain. We explore the advantages and challenges of each, so you can choose the most suitable path for your needs.

What is an AI assistant?

An AI assistant is an intelligent system that understands natural language queries and interacts with various tools, data sources, and APIs to perform tasks or retrieve information on behalf of the user. Effective AI assistants possess the following key capabilities:

- Natural language processing (NLP) and conversational flow

- Knowledge base integration and semantic searches to understand and retrieve relevant information based on the nuances of conversation context

- Running tasks, such as database queries and custom AWS Lambda functions

- Handling specialized conversations and user requests

We demonstrate the benefits of AI assistants using Internet of Things (IoT) device management as an example. In this use case, AI can help technicians manage machinery efficiently with commands that fetch data or automate tasks, streamlining operations in manufacturing.

Agents for Amazon Bedrock approach

Agents for Amazon Bedrock allows you to build generative AI applications that can run multi-step tasks across a company’s systems and data sources. It offers the following key capabilities:

- Automatic prompt creation from instructions, API details, and data source information, saving weeks of prompt engineering effort

- Retrieval Augmented Generation (RAG) to securely connect agents to a company’s data sources and provide relevant responses

- Orchestration and running of multi-step tasks by breaking down requests into logical sequences and calling necessary APIs

- Visibility into the agent’s reasoning through a chain-of-thought (CoT) trace, allowing troubleshooting and steering of model behavior

- Prompt engineering abilities to modify the automatically generated prompt template for enhanced control over agents

You can use Agents for Amazon Bedrock and Knowledge Bases for Amazon Bedrock to build and deploy AI assistants for complex routing use cases. They provide a strategic advantage for developers and organizations by simplifying infrastructure management, enhancing scalability, improving security, and reducing undifferentiated heavy lifting. They also allow for simpler application layer code because the routing logic, vectorization, and memory are fully managed.

Solution overview

This solution introduces a conversational AI assistant tailored for IoT device management and operations when using Anthropic’s Claude v2.1 on Amazon Bedrock. The AI assistant’s core functionality is governed by a comprehensive set of instructions, known as a system prompt, which delineates its capabilities and areas of expertise. This guidance makes sure the AI assistant can handle a wide range of tasks, from managing device information to running operational commands.

Equipped with these capabilities, as detailed in the system prompt, the AI assistant follows a structured workflow to address user questions. The following figure provides a visual representation of this workflow, illustrating each step from initial user interaction to the final response.

The workflow consists of the following steps:

- The process begins when a user requests the assistant to perform a task; for example, asking for the maximum data points for a specific IoT device `device_xxx`. This text input is captured and sent to the AI assistant.

- The AI assistant interprets the user’s text input. It uses the provided conversation history, action groups, and knowledge bases to understand the context and determine the necessary tasks.

- After the user’s intent is parsed and understood, the AI assistant defines tasks. This is based on the instructions that are interpreted by the assistant as per the system prompt and user’s input.

- The tasks are then run through a series of API calls. This is done using ReAct prompting, which breaks down the task into a series of steps that are processed sequentially:

  - For device metrics checks, we use the `check-device-metrics` action group, which involves an API call to Lambda functions that then query Amazon Athena for the requested data.

  - For direct device actions like start, stop, or reboot, we use the `action-on-device` action group, which invokes a Lambda function. This function initiates a process that sends commands to the IoT device. For this post, the Lambda function sends notifications using Amazon Simple Email Service (Amazon SES).

  - We use Knowledge Bases for Amazon Bedrock to fetch from historical data stored as embeddings in the Amazon OpenSearch Service vector database.

- After the tasks are complete, the final response is generated by the Amazon Bedrock FM and conveyed back to the user.

- Agents for Amazon Bedrock automatically stores information using a stateful session to maintain the same conversation. The state is deleted after a configurable idle timeout elapses.
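The request-and-response loop above can be sketched from the application side. The following is a minimal sketch, not the code from this solution: it assumes a client object that exposes the `invoke_agent` operation of the boto3 `bedrock-agent-runtime` client, and the helper name `ask_agent`, the agent ID, and the alias ID are placeholders introduced for illustration. Passing the same session ID on each turn is what keeps the conversation in one stateful session.

```python
import uuid


def ask_agent(client, agent_id, agent_alias_id, question, session_id=None):
    """Send one user turn to an agent and return (session_id, answer_text).

    `client` is assumed to behave like boto3.client("bedrock-agent-runtime").
    `ask_agent` itself is a hypothetical helper, not part of any AWS SDK.
    """
    # Reuse the caller's session ID so the agent keeps the conversation state;
    # create a new one only for the first turn.
    session_id = session_id or str(uuid.uuid4())

    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=question,
        enableTrace=False,
    )

    # The answer is returned as a stream of events; the generated text is
    # carried in "chunk" events as UTF-8 bytes. Other event types are skipped.
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return session_id, "".join(parts)


# Example wiring (requires AWS credentials and a deployed agent):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   session, answer = ask_agent(client, "AGENT_ID", "ALIAS_ID",
#                               "What are the max data points for device_xxx?")
```

Accepting the client as a parameter keeps the helper easy to exercise with a stub during development, before an agent is deployed.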

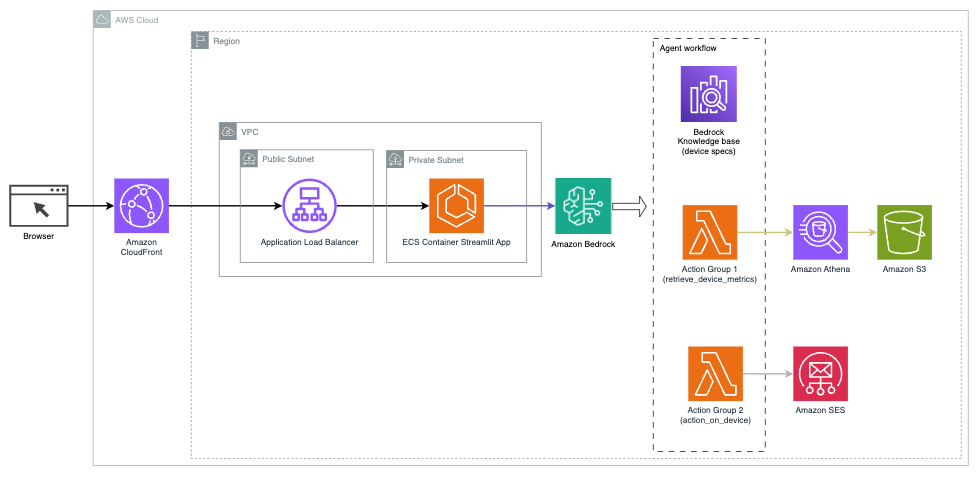

Technical overview

The following diagram illustrates the architecture to deploy an AI assistant with Agents for Amazon Bedrock.

It consists of the following key components:

- Conversational interface – The conversational interface uses Streamlit, an open source Python library that simplifies the creation of custom, visually appealing web apps for machine learning (ML) and data science. It is hosted on Amazon Elastic Container Service (Amazon ECS) with AWS Fargate, and it is accessed using an Application Load Balancer. You can use Fargate with Amazon ECS to run containers without having to manage servers, clusters, or virtual machines.

- Agents for Amazon Bedrock – Agents for Amazon Bedrock completes the user queries through a series of reasoning steps and corresponding actions based on ReAct prompting:

  - Knowledge Bases for Amazon Bedrock – Knowledge Bases for Amazon Bedrock provides fully managed RAG to supply the AI assistant with access to your data. In our use case, we uploaded device specifications into an Amazon Simple Storage Service (Amazon S3) bucket. It serves as the data source to the knowledge base.

  - Action groups – These are defined API schemas that invoke specific Lambda functions to interact with IoT devices and other AWS services.

  - Anthropic Claude v2.1 on Amazon Bedrock – This model interprets user queries and orchestrates the flow of tasks.

  - Amazon Titan Embeddings – This model serves as a text embeddings model, transforming natural language text, from single words to complex documents, into numerical vectors. This enables vector search capabilities, allowing the system to semantically match user queries with the most relevant knowledge base entries for effective search.

The solution is integrated with AWS services such as Lambda for running code in response to API calls, Athena for querying datasets, OpenSearch Service for searching through knowledge bases, and Amazon S3 for storage. These services work together to provide a seamless experience for IoT device operations management through natural language commands.
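To make the action group component more concrete, the following is a minimal sketch of a Lambda handler that an action group could invoke. It is not the handler from this solution. The event fields and the response envelope reflect our understanding of the contract for action groups defined with an API schema and should be checked against the current Amazon Bedrock documentation; the parameter name `deviceId` and the response body are assumptions made for illustration.

```python
import json


def lambda_handler(event, context):
    """Hypothetical handler for an action group backed by an API schema."""
    # Parameters are assumed to arrive as a list of
    # {"name": ..., "type": ..., "value": ...} dictionaries.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    device_id = params.get("deviceId", "unknown")  # "deviceId" is an assumed name

    # Placeholder for the real work, such as querying Athena for device
    # metrics or sending a command notification.
    body = {"deviceId": device_id, "status": "request received"}

    # Echo the routing fields back so the agent can match the response
    # to the API operation it called.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": 200,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

The agent reads the JSON body of the response and uses it as an observation in its next reasoning step.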

Advantages

This solution offers the following advantages:

- Implementation complexity:

  - Fewer lines of code are required, because Agents for Amazon Bedrock abstracts away much of the underlying complexity, reducing development effort

  - Managing vector databases like OpenSearch Service is simplified, because Knowledge Bases for Amazon Bedrock handles vectorization and storage

  - Integration with various AWS services is more streamlined through pre-defined action groups

- Developer experience:

  - The Amazon Bedrock console provides a user-friendly interface for prompt development, testing, and root cause analysis (RCA), enhancing the overall developer experience

- Agility and flexibility:

  - Agents for Amazon Bedrock allows for seamless upgrades to newer FMs (such as Claude 3.0) when they become available, so your solution stays up to date with the latest advancements

  - Service quotas and limitations are managed by AWS, reducing the overhead of monitoring and scaling infrastructure

- Security:

  - Amazon Bedrock is a fully managed service, adhering to AWS’s stringent security and compliance standards, potentially simplifying organizational security reviews

Although Agents for Amazon Bedrock offers a streamlined and managed solution for building conversational AI applications, some organizations may prefer an open source approach. In such cases, you can use frameworks like LangChain, which we discuss in the next section.

LangChain dynamic routing approach

LangChain is an open source framework that simplifies building conversational AI by allowing the integration of large language models (LLMs) and dynamic routing capabilities. With LangChain Expression Language (LCEL), developers can define the routing, which allows you to create non-deterministic chains where the output of a previous step defines the next step. Routing helps provide structure and consistency in interactions with LLMs.

For this post, we use the same example as the AI assistant for IoT device management. However, the main difference is that we need to handle the system prompts separately and treat each chain as a separate entity. The routing chain decides the destination chain based on the user’s input. The decision is made with the support of an LLM by passing the system prompt, chat history, and user’s question.

Solution overview

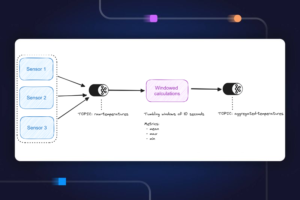

The following diagram illustrates the dynamic routing solution workflow.

The workflow consists of the following steps:

- The user presents a question to the AI assistant. For example, “What are the max metrics for device 1009?”

- An LLM evaluates each question along with the chat history from the same session to determine its nature and which subject area it falls under (such as SQL, action, search, or SME). The LLM classifies the input and the LCEL routing chain takes that input.

- The router chain selects the destination chain based on the input, and the LLM is provided with the following system prompt:

  The LLM evaluates the user’s question along with the chat history to determine the nature of the query and which subject area it falls under. The LLM then classifies the input and outputs a JSON response in the following format:

  The router chain uses this JSON response to invoke the corresponding destination chain. There are four subject-specific destination chains, each with its own system prompt:

  - SQL-related queries are sent to the SQL destination chain for database interactions. You can use LCEL to build the SQL chain.

  - Action-oriented questions invoke the custom Lambda destination chain for running operations. With LCEL, you can define your own custom function; in our case, it is a function to run a predefined Lambda function to send an email with a device ID parsed. Example user input might be “Shut down device 1009.”

  - Search-focused inquiries proceed to the RAG destination chain for information retrieval.

  - SME-related questions go to the SME/expert destination chain for specialized insights.

- Each destination chain takes the input and runs the necessary models or functions:

  - The SQL chain uses Athena for running queries.

  - The RAG chain uses OpenSearch Service for semantic search.

  - The custom Lambda chain runs Lambda functions for actions.

  - The SME/expert chain provides insights using the Amazon Bedrock model.

- Responses from each destination chain are formulated into coherent insights by the LLM. These insights are then delivered to the user, completing the query cycle.

- User input and responses are stored in Amazon DynamoDB to provide context to the LLM for the current session and from past interactions. The duration of persisted information in DynamoDB is controlled by the application.
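The routing steps above can be sketched in plain Python. This is an illustration of the dispatch pattern only, not the code from this solution: the four chain functions are stand-ins for LCEL chains, the `classify` callable stands in for the LLM call, and the JSON key `destination` is a hypothetical format chosen for this sketch (the actual format used by the router prompt is not reproduced here). Falling back to the SME chain when the classification cannot be parsed is also our own design choice.

```python
import json


# Hypothetical destination chains; each stands in for an LCEL chain.
def sql_chain(question):
    return f"[sql] {question}"


def action_chain(question):
    return f"[action] {question}"


def rag_chain(question):
    return f"[search] {question}"


def sme_chain(question):
    return f"[sme] {question}"


DESTINATIONS = {
    "SQL": sql_chain,
    "action": action_chain,
    "search": rag_chain,
    "SME": sme_chain,
}


def route(question, chat_history, classify):
    """Classify the question, then invoke the matching destination chain.

    `classify` is any callable that takes (question, chat_history) and
    returns the classifier's raw JSON string.
    """
    raw = classify(question, chat_history)
    try:
        # "destination" is an assumed key name, not the post's actual format.
        destination = json.loads(raw).get("destination")
    except (json.JSONDecodeError, AttributeError, TypeError):
        destination = None
    # Unknown or unparseable classifications fall back to the SME chain.
    chain = DESTINATIONS.get(destination, sme_chain)
    return chain(question)
```

In a LangChain application, a function like `route` would typically be wrapped in a runnable such as `RunnableLambda` so that it composes with the classifier chain that precedes it.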

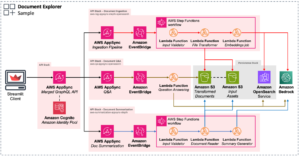

Technical overview

The following diagram illustrates the architecture of the LangChain dynamic routing solution.

The web application is built on Streamlit hosted on Amazon ECS with Fargate, and it is accessed using an Application Load Balancer. We use Anthropic’s Claude v2.1 on Amazon Bedrock as our LLM. The web application interacts with the model using LangChain libraries. It also interacts with a variety of other AWS services, such as OpenSearch Service, Athena, and DynamoDB to fulfill end-users’ needs.
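Because the application, rather than a managed service, owns the chat history in this approach, it also decides how long that history is kept. The following is a minimal sketch of that responsibility using an in-memory stand-in for the DynamoDB table; the class name `SessionHistory`, the retention window, and the `max_turns` limit are all assumptions made for illustration. With DynamoDB, a Time to Live attribute on each item could play a similar role.

```python
import time


class SessionHistory:
    """In-memory stand-in for a DynamoDB-backed chat history table."""

    def __init__(self, retention_seconds, clock=time.time):
        self.retention_seconds = retention_seconds
        self._clock = clock  # injectable so expiry can be exercised in tests
        self._items = {}     # session_id -> list of (timestamp, role, text)

    def append(self, session_id, role, text):
        """Record one message for the given session."""
        self._items.setdefault(session_id, []).append((self._clock(), role, text))

    def recent(self, session_id, max_turns=10):
        """Return up to max_turns unexpired (role, text) pairs, oldest first."""
        cutoff = self._clock() - self.retention_seconds
        kept = [item for item in self._items.get(session_id, []) if item[0] >= cutoff]
        self._items[session_id] = kept  # discard expired messages
        if max_turns <= 0:
            return []
        return [(role, text) for _, role, text in kept[-max_turns:]]
```

The list returned by `recent` is what would be passed to the router and destination chains as chat history for the current session.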

Advantages

This solution offers the following advantages:

- Implementation complexity:

  - Although it requires more code and custom development, LangChain provides greater flexibility and control over the routing logic and integration with various components.

  - Managing vector databases like OpenSearch Service requires additional setup and configuration efforts. The vectorization process is implemented in code.

  - Integrating with AWS services may involve more custom code and configuration.

- Developer experience:

  - LangChain’s Python-based approach and extensive documentation can be appealing to developers already familiar with Python and open source tools.

  - Prompt development and debugging may require more manual effort compared to using the Amazon Bedrock console.

- Agility and flexibility:

  - LangChain supports a wide range of LLMs, allowing you to switch between different models or providers, fostering flexibility.

  - The open source nature of LangChain allows community-driven improvements and customizations.

- Security:

  - As an open source framework, LangChain may require more rigorous security reviews and vetting within organizations, potentially adding overhead.

Conclusion

Conversational AI assistants are transformative tools for streamlining operations and enhancing user experiences. This post explored two powerful approaches using AWS services: the managed Agents for Amazon Bedrock and the flexible, open source LangChain dynamic routing. The choice between these approaches hinges on your organization’s requirements, development preferences, and desired level of customization. Regardless of the path taken, AWS empowers you to create intelligent AI assistants that revolutionize business and customer interactions.

Explore the solution code and deployment assets in our GitHub repository, where you can follow the detailed steps for each conversational AI approach.

About the Authors

Ameer Hakme is an AWS Solutions Architect based in Pennsylvania. He collaborates with Independent Software Vendors (ISVs) in the Northeast region, assisting them in designing and building scalable and modern platforms on the AWS Cloud. An expert in AI/ML and generative AI, Ameer helps customers unlock the potential of these cutting-edge technologies. In his leisure time, he enjoys riding his bike and spending quality time with his family.


Sharon Li is an AI/ML Solutions Architect at Amazon Web Services based in Boston, with a passion for designing and building generative AI applications on AWS. She collaborates with customers to leverage AWS AI/ML services for innovative solutions.


Kawsar Kamal is a senior solutions architect at Amazon Web Services with over 15 years of experience in the infrastructure automation and security space. He helps clients design and build scalable DevSecOps and AI/ML solutions in the Cloud.
