Speed up ML workflows with Amazon SageMaker Studio Local Mode and Docker support

We’re excited to announce two new capabilities in Amazon SageMaker Studio that can accelerate iterative development for machine learning (ML) practitioners: Local Mode and Docker support. ML model development often involves slow iteration cycles as developers switch between coding, training, and deployment. Each step requires waiting for remote compute resources to start up, which delays validating implementations and getting feedback on changes.

With Local Mode, developers can now train and test models, debug code, and validate end-to-end pipelines directly on their SageMaker Studio notebook instance without spinning up remote compute resources. This reduces the iteration cycle from minutes down to seconds, boosting developer productivity. Docker support in SageMaker Studio notebooks enables developers to build Docker containers and access pre-built containers, providing a consistent development environment across the team and avoiding time-consuming setup and dependency management.

Local Mode and Docker support offer a streamlined workflow for validating code changes and prototyping models using local containers running on a SageMaker Studio notebook instance. In this post, we guide you through setting up Local Mode in SageMaker Studio, running a sample training job, and deploying the model on an Amazon SageMaker endpoint from a SageMaker Studio notebook.

SageMaker Studio Local Mode

SageMaker Studio introduces Local Mode, enabling you to run SageMaker training, inference, batch transform, and processing jobs directly on your JupyterLab, Code Editor, or SageMaker Studio Classic notebook instances without requiring remote compute resources. Benefits of using Local Mode include:

- Immediate validation and testing of workflows right within integrated development environments (IDEs)

- Faster iteration through local runs of smaller-scale jobs to inspect outputs and identify issues early

- Improved development and debugging efficiency by eliminating the wait for remote training jobs

- Immediate feedback on code changes before running full jobs in the cloud

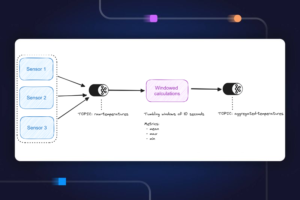

The next determine illustrates the workflow utilizing Native Mode on SageMaker.

To use Local Mode, set instance_type="local" when running SageMaker Python SDK jobs such as training and inference. This runs them on the instance used by your SageMaker Studio IDE instead of provisioning cloud resources.

Although certain capabilities such as distributed training are only available in the cloud, Local Mode removes the need to switch contexts for quick iterations. When you’re ready to take advantage of the full power and scale of SageMaker, you can seamlessly run your workflow in the cloud.
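As a minimal sketch of that switch, the hypothetical helper below builds the keyword arguments you might pass to a SageMaker Python SDK estimator, changing only instance_type between "local" and a cloud instance type. Only the instance_type="local" convention comes from this post; the helper name, the train.py entry point, and the default ml.m5.xlarge cloud instance type are illustrative assumptions.

```python
from typing import Any, Dict


def estimator_kwargs(use_local_mode: bool, cloud_instance_type: str = "ml.m5.xlarge") -> Dict[str, Any]:
    """Hypothetical helper: estimator settings that differ only in where the job runs.

    instance_type="local" asks the SageMaker Python SDK to run the job in a Docker
    container on the Studio instance; an ml.* type provisions cloud compute instead.
    """
    return {
        "entry_point": "train.py",  # hypothetical training script name
        "instance_count": 1,
        "instance_type": "local" if use_local_mode else cloud_instance_type,
    }


# Iterate locally first, then flip a single flag to run the same job in the cloud.
local_settings = estimator_kwargs(use_local_mode=True)
cloud_settings = estimator_kwargs(use_local_mode=False)
print(local_settings["instance_type"], cloud_settings["instance_type"])  # local ml.m5.xlarge
```

With the SageMaker Python SDK installed, either dictionary could be unpacked into an estimator constructor alongside the framework-specific arguments (such as role and framework version) that your job needs.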

Docker support in SageMaker Studio

SageMaker Studio now also supports building and running Docker containers locally on your SageMaker Studio notebook instance. This new feature lets you build and validate Docker images in SageMaker Studio before using them for SageMaker training and inference.

The following diagram illustrates the high-level Docker orchestration architecture within SageMaker Studio.

With Docker support in SageMaker Studio, you can:

- Build Docker containers with integrated models and dependencies directly within SageMaker Studio

- Eliminate the need for external Docker build processes to simplify image creation

- Run containers locally to validate functionality before deploying models to production

- Reuse local containers when deploying to SageMaker for training and hosting

Although some advanced Docker capabilities, such as multi-container setups and custom networks, are not supported as of this writing, the core build and run functionality is available to accelerate developing containers for bring your own container (BYOC) workflows.

Prerequisites

To use Local Mode in SageMaker Studio applications, you must complete the following prerequisites:

- For pulling images from Amazon Elastic Container Registry (Amazon ECR), the account hosting the ECR image must grant access permission to the user’s Identity and Access Management (IAM) role. The domain’s role must also allow Amazon ECR access.

- To enable Local Mode and Docker capabilities, you must turn on the EnableDockerAccess parameter in the domain’s DockerSettings using the AWS Command Line Interface (AWS CLI). This allows users in the domain to use Local Mode and Docker features. By default, Local Mode and Docker are disabled in SageMaker Studio. Any existing SageMaker Studio apps must be restarted for the Docker service update to take effect. The following is an example AWS CLI command for updating a SageMaker Studio domain:

- You must update the SageMaker IAM role so that it can push Docker images to Amazon ECR:
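The CLI command and IAM policy referenced in these prerequisites are not reproduced in this text. As a hedged sketch, the Python below assembles both pieces: the DomainSettingsForUpdate payload for the UpdateDomain API (to our understanding, EnableDockerAccess takes the string value ENABLED or DISABLED rather than a boolean) and an IAM policy listing the standard ECR actions used by docker login and docker push. The domain ID, Region, and repository ARN are placeholders, and the policy scope is an assumption you should tighten for your account.

```python
import json
import shlex
from typing import Any, Dict, List


def docker_settings_update() -> Dict[str, Any]:
    """DomainSettingsForUpdate payload that turns on Docker access for a domain."""
    # Assumption: the UpdateDomain API expects the string "ENABLED" (or "DISABLED").
    return {"DockerSettings": {"EnableDockerAccess": "ENABLED"}}


def update_domain_cli_args(domain_id: str, region: str) -> List[str]:
    """Argument list for `aws sagemaker update-domain`."""
    return [
        "aws", "--region", region,
        "sagemaker", "update-domain",
        "--domain-id", domain_id,
        "--domain-settings-for-update", json.dumps(docker_settings_update()),
    ]


def ecr_push_policy(repository_arn: str) -> Dict[str, Any]:
    """IAM policy document with the ECR actions that docker login and docker push use."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # GetAuthorizationToken cannot be scoped to a single repository.
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage",
                    "ecr:BatchGetImage",
                    "ecr:GetDownloadUrlForLayer",
                ],
                "Resource": repository_arn,
            },
        ],
    }


# Placeholder identifiers; replace them with your own domain, Region, and repository.
print(shlex.join(update_domain_cli_args("d-xxxxxxxxxxxx", "us-east-2")))
print(json.dumps(ecr_push_policy("arn:aws:ecr:us-east-2:123456789012:repository/myflaskapp"), indent=2))
```

The same payload could be sent from Python with boto3.client("sagemaker").update_domain(DomainId=..., DomainSettingsForUpdate=docker_settings_update()). Existing apps still need a restart afterward.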

Run Python files in SageMaker Studio spaces using Local Mode

SageMaker Studio JupyterLab and Code Editor (based on Code-OSS, Visual Studio Code – Open Source) extend SageMaker Studio so you can write, test, debug, and run your analytics and ML code using the popular lightweight IDE. For more details on how to get started with SageMaker Studio IDEs, refer to Boost productivity on Amazon SageMaker Studio: Introducing JupyterLab Spaces and generative AI tools and New – Code Editor, based on Code-OSS VS Code Open Source now available in Amazon SageMaker Studio. Complete the following steps:

- Create a new terminal.

- Install the Docker CLI and Docker Compose plugin following the instructions in the following GitHub repo. If chained commands fail, run the commands one at a time.

You must update the SageMaker SDK to the latest version.

- Run pip install sagemaker -Uq in the terminal.

For Code Editor only, you need to set the Python environment to run in the current terminal.

- In Code Editor, on the File menu, choose Preferences and Settings.

- Search for and select Terminal: Execute in File Dir.

- In Code Editor or JupyterLab, open the scikit_learn_script_mode_local_training_and_serving folder and run the scikit_learn_script_mode_local_training_and_serving.py file.

You can run the script by choosing Run in Code Editor or by using the CLI in a JupyterLab terminal.

You will see how the model is trained locally. You then deploy the model to a SageMaker endpoint locally and calculate the root mean square error (RMSE).
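RMSE is the square root of the mean of the squared differences between predictions and targets. A dependency-free sketch of that calculation follows; the sample values are made up for illustration, and the script itself may compute the metric with a library function instead.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error: sqrt(mean((y_true - y_pred) ** 2))."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up values: errors of 0, 1, and -2 give sqrt((0 + 1 + 4) / 3), about 1.291
print(round(rmse([3.0, 5.0, 7.0], [3.0, 4.0, 9.0]), 3))  # 1.291
```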

Simulate training and inference in SageMaker Studio Classic using Local Mode

You can also use a notebook in SageMaker Studio Classic to run a small-scale training job on CIFAR10 using Local Mode, deploy the model locally, and perform inference.

Set up your notebook

To set up the notebook, complete the following steps:

- Open SageMaker Studio Classic and clone the following GitHub repo.

- Open the pytorch_local_mode_cifar10.ipynb notebook in blog/pytorch_cnn_cifar10.

- For Image, choose PyTorch 2.1.0 Python 3.10 CPU Optimized.

Confirm that your notebook shows the correct instance and kernel selection.

- Open a terminal by choosing Launch Terminal in the current SageMaker image.

- Install the Docker CLI and Docker Compose plugin following the instructions in the following GitHub repo.

Because you’re using Docker from SageMaker Studio Classic, remove sudo when running commands; the terminal already runs as superuser. For SageMaker Studio Classic, the installation commands depend on the OS of the SageMaker Studio app image. For example, DLC-based framework images are Ubuntu based, in which case the following instructions work. However, for a Debian-based image like DataScience Images, you must follow the instructions in the following GitHub repo. If chained commands fail, run the commands one at a time. You should see the Docker version displayed.

- Leave the terminal window open, go back to the notebook, and start running it cell by cell.

Make sure to run the cell with pip install -U sagemaker so you’re using the latest version of the SageMaker Python SDK.

Local training

When you start running the local SageMaker training job, you will see the following log lines:

This indicates that the training was running locally using Docker.

Be patient while the pytorch-training:2.1-cpu-py310 Docker image is pulled. Because of its large size (5.2 GB), it could take a few minutes.
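The notebook’s own training cell is not reproduced in this text. As a hedged sketch of what a Local Mode PyTorch run can look like with the SageMaker Python SDK, the function below configures a PyTorch estimator with framework_version="2.1", py_version="py310", and instance_type="local", and passes training data through a file:// URI, which Local Mode accepts in place of an Amazon S3 URI. The entry-point script, source folder, channel name, and hyperparameters are hypothetical placeholders, not the names used in the repo.

```python
from pathlib import Path


def file_uri(path: str) -> str:
    """Convert a local folder into the file:// URI form that Local Mode accepts for input data."""
    return Path(path).resolve().as_uri()


def train_cifar10_locally(role: str, data_dir: str = "./data"):
    """Hypothetical sketch of a Local Mode PyTorch training run.

    Requires the SageMaker Python SDK and a working Docker setup in the Studio app.
    """
    # Imported lazily so this module loads even where the SDK is not installed.
    from sagemaker.pytorch import PyTorch

    estimator = PyTorch(
        entry_point="cifar10.py",       # hypothetical training script
        source_dir="code",              # hypothetical folder holding the script
        role=role,
        framework_version="2.1",        # pairs with the pytorch-training:2.1-cpu-py310 image
        py_version="py310",
        instance_count=1,
        instance_type="local",          # run in a Docker container on this instance
        hyperparameters={"epochs": 1},  # keep local runs short
    )
    estimator.fit({"training": file_uri(data_dir)})
    return estimator
```

In a Studio notebook, the role argument would typically come from sagemaker.get_execution_role().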

Docker images are stored in the SageMaker Studio app instance’s root volume, which is not accessible to end users. The only way to access and interact with Docker images is through the exposed Docker API operations.

From a user confidentiality standpoint, the SageMaker Studio platform never accesses or stores user-specific images.

When the training is complete, you’ll see the following success log lines:

Local inference

Complete the following steps:

- Deploy the SageMaker endpoint using SageMaker Local Mode.

Be patient while the pytorch-inference:2.1-cpu-py310 Docker image is pulled. Because of its large size (4.32 GB), it could take a few minutes.

- Invoke the SageMaker endpoint deployed locally using the test images.

You will see the predicted classes: frog, ship, automobile, and airplane:

- Because the SageMaker local endpoint is still up, navigate back to the open terminal window and list the running containers:

docker ps

You’ll see the running pytorch-inference:2.1-cpu-py310 container backing the SageMaker endpoint.

- Because you can only run one local endpoint at a time, run the cleanup code to shut down the SageMaker local endpoint and stop the running container.

- To make sure the Docker container is down, navigate to the open terminal window, run docker ps, and confirm there are no running containers.

- If you see a container running, run docker stop <CONTAINER_ID> to stop it.
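The steps above (deploy, invoke, clean up) can be sketched as follows. This assumes an estimator already trained in Local Mode and a model that returns one row of 10 raw scores per image in the standard CIFAR-10 class order; decode_predictions and predict_and_clean_up are hypothetical helpers, and images is whatever array your notebook prepares.

```python
from typing import List, Sequence

# Standard CIFAR-10 class order (label indices 0-9).
CIFAR10_CLASSES = (
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
)


def decode_predictions(scores: Sequence[Sequence[float]]) -> List[str]:
    """Map each row of per-class scores to the name of its highest-scoring class."""
    labels = []
    for row in scores:
        best_index = max(range(len(row)), key=lambda i: row[i])
        labels.append(CIFAR10_CLASSES[best_index])
    return labels


def predict_and_clean_up(estimator, images) -> List[str]:
    """Hypothetical sketch: deploy locally, predict, then always stop the local container."""
    predictor = estimator.deploy(initial_instance_count=1, instance_type="local")
    try:
        return decode_predictions(predictor.predict(images))
    finally:
        # Only one local endpoint can run at a time, so shut it down even if predict fails.
        predictor.delete_endpoint()
```

Placing delete_endpoint in a finally clause means the container is stopped even when prediction raises an error, so a failed run doesn’t block the next local endpoint.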

Tips for using SageMaker Local Mode

If you’re using SageMaker for the first time, refer to Train machine learning models. To learn more about deploying models for inference with SageMaker, refer to Deploy models for inference.

Keep in mind the following tips:

- Print input and output files and folders to understand dataset and model loading

- Use 1–2 epochs and small datasets for quick testing

- Pre-install dependencies in a Dockerfile to optimize environment setup

- Isolate serialization code in endpoints for debugging
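For the first tip, a small helper like the one below could be called at the start of a training script to print what the container actually sees. It reads the SM_CHANNEL_TRAINING, SM_MODEL_DIR, and SM_OUTPUT_DATA_DIR environment variables that SageMaker training containers typically set (to our understanding, through the SageMaker training toolkit). The helper names are hypothetical, and the variable list is an assumption you can adjust.

```python
import os
from pathlib import Path
from typing import Dict, List, Sequence


def list_files(root: str, limit: int = 20) -> List[str]:
    """Return up to `limit` file paths under root, relative to root and sorted."""
    base = Path(root)
    if not base.is_dir():
        return []
    files = sorted(str(p.relative_to(base)) for p in base.rglob("*") if p.is_file())
    return files[:limit]


def describe_io(
    env_vars: Sequence[str] = ("SM_CHANNEL_TRAINING", "SM_MODEL_DIR", "SM_OUTPUT_DATA_DIR"),
) -> Dict[str, List[str]]:
    """Print and return the files found in each directory named by the given env vars."""
    report: Dict[str, List[str]] = {}
    for name in env_vars:
        directory = os.environ.get(name)
        if directory is None:
            print(f"{name}: not set")
            continue
        report[name] = list_files(directory)
        print(f"{name} -> {directory}: {report[name]}")
    return report
```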

Configure Docker installation as a Lifecycle Configuration

You can define the Docker installation process as a Lifecycle Configuration (LCC) script to simplify setup each time a new SageMaker Studio space starts. LCCs are scripts that SageMaker runs during events such as space creation. Refer to the JupyterLab, Code Editor, or SageMaker Studio Classic LCC setup (using docker install cli as a reference) to learn more.
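As a hedged sketch of that setup, the snippet below prepares the parameters for the CreateStudioLifecycleConfig API. To our understanding, that API expects the script body base64-encoded and an app type such as JupyterLab or CodeEditor. The configuration name and placeholder script are hypothetical; the actual Docker install commands come from the referenced repository and are not reproduced here.

```python
import base64
from typing import Dict

# App types accepted by CreateStudioLifecycleConfig (to our understanding).
VALID_APP_TYPES = {"JupyterLab", "CodeEditor", "JupyterServer", "KernelGateway"}


def lcc_request(name: str, script: str, app_type: str = "JupyterLab") -> Dict[str, str]:
    """Build CreateStudioLifecycleConfig parameters; the script body is base64-encoded."""
    if app_type not in VALID_APP_TYPES:
        raise ValueError(f"unsupported app type: {app_type}")
    encoded = base64.b64encode(script.encode("utf-8")).decode("ascii")
    return {
        "StudioLifecycleConfigName": name,
        "StudioLifecycleConfigContent": encoded,
        "StudioLifecycleConfigAppType": app_type,
    }


# Hypothetical placeholder script: paste the Docker install commands from the repo here.
placeholder_script = "#!/bin/bash\nset -eux\n# docker install commands go here\n"
request = lcc_request("install-docker-cli", placeholder_script)
print(request["StudioLifecycleConfigAppType"], len(request["StudioLifecycleConfigContent"]))
```

With boto3 available, the dictionary could be passed as boto3.client("sagemaker").create_studio_lifecycle_config(**request). The resulting configuration still has to be attached to the domain or user profile before new spaces use it.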

Build and test custom Docker images in SageMaker Studio spaces

In this step, you install Docker inside the JupyterLab (or Code Editor) app space and use Docker to build, test, and publish custom Docker images with SageMaker Studio spaces. Spaces are used to manage the storage and resource needs of some SageMaker Studio applications. Each space has a 1:1 relationship with an instance of an application, and every supported application that is created gets its own space. To learn more about SageMaker spaces, refer to Boost productivity on Amazon SageMaker Studio: Introducing JupyterLab Spaces and generative AI tools. Make sure you provision a new space with at least 30 GB of storage to allow sufficient room for Docker images and artifacts.

Install Docker inside a space

To install the Docker CLI and Docker Compose plugin inside a JupyterLab space, run the commands in the following GitHub repo. SageMaker Studio only supports Docker version 20.10.X.

Build Docker images

To confirm that Docker is installed and working inside your JupyterLab space, run the following code:
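The exact check is not reproduced in this text. As a hedged alternative in Python, the helpers below run docker --version, parse the result, and flag whether it falls in the 20.10.X line that SageMaker Studio supports. The parsed format ("Docker version 20.10.25, build b82b9f3") is the usual Docker CLI output but is an assumption here, and the helper names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

_VERSION_RE = re.compile(r"Docker version (\d+)\.(\d+)\.(\d+)")


def parse_docker_version(output: str) -> Optional[Tuple[int, ...]]:
    """Extract (major, minor, patch) from `docker --version` output, or None if absent."""
    match = _VERSION_RE.search(output)
    return tuple(int(part) for part in match.groups()) if match else None


def is_supported_in_studio(version: Tuple[int, ...]) -> bool:
    """SageMaker Studio supports only the Docker 20.10.X line."""
    return tuple(version[:2]) == (20, 10)


def local_docker_version() -> Optional[Tuple[int, ...]]:
    """Run `docker --version` if the CLI is on PATH; return None otherwise."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(["docker", "--version"], capture_output=True, text=True, check=False)
    return parse_docker_version(result.stdout)


version = local_docker_version()
if version is None:
    print("Docker CLI not found or version not recognized")
else:
    print(version, "supported" if is_supported_in_studio(version) else "unsupported in Studio")
```

docker --version only exercises the client binary. Running docker info or docker ps additionally confirms that the CLI can reach the Docker service.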

To build a custom Docker image inside a JupyterLab (or Code Editor) space, complete the following steps:

- Create an empty Dockerfile:

touch Dockerfile

- Edit the Dockerfile with the following commands, which create a simple flask web server image from the base python:3.10.13-bullseye image hosted on Docker Hub:

The following code shows the contents of an example flask application file app.py:

You can also update the reference Dockerfile commands to include packages and artifacts of your choice.

- Build a Docker image using the reference Dockerfile:

docker build --network sagemaker --tag myflaskapp:v1 --file ./Dockerfile .

Include --network sagemaker in your docker build command; otherwise, the build will fail. Containers can’t be run in the Docker default bridge network or in custom Docker networks. They run in the same network as the SageMaker Studio application container, and sagemaker is the only network name users can use.

- When your build is complete, validate that the image exists. Re-tag the build as an ECR image and push it. If you run into permission issues, run the aws ecr get-login-password… command and try to rerun the Docker push/pull:
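To make the build, tag, and push sequence concrete, the sketch below assembles the commands as argument lists. It reuses the --network sagemaker flag and the myflaskapp:v1 tag from the steps above and follows the standard ECR image URI pattern. Using docker image inspect as the existence check is our choice, and the account ID and Region are the placeholder values used elsewhere in this post.

```python
import shlex
from typing import List


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Standard ECR image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def build_and_push_commands(local_tag: str, remote_uri: str, dockerfile: str = "./Dockerfile") -> List[List[str]]:
    """Commands to build on the required sagemaker network, verify, re-tag, and push an image."""
    return [
        # Builds in SageMaker Studio fail without --network sagemaker.
        ["docker", "build", "--network", "sagemaker", "--tag", local_tag, "--file", dockerfile, "."],
        ["docker", "image", "inspect", local_tag],  # exits non-zero if the image does not exist
        ["docker", "tag", local_tag, remote_uri],
        ["docker", "push", remote_uri],
    ]


uri = ecr_image_uri("123456789012", "us-east-2", "myflaskapp", "v1")
for command in build_and_push_commands("myflaskapp:v1", uri):
    print(shlex.join(command))
```

Each list could be run with subprocess.run(command, check=True) after logging in to Amazon ECR. The target ECR repository typically needs to exist beforehand (for example, created with aws ecr create-repository), or the push fails.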

Test Docker images

With Docker installed inside a JupyterLab (or Code Editor) SageMaker Studio space, you can test pre-built or custom Docker images as containers (or containerized applications). In this section, we use the docker run command to provision Docker containers inside a SageMaker Studio space to test containerized workloads like REST web services and Python scripts. Complete the following steps:

- If the test image doesn’t exist, run docker pull to pull the image into your local machine:

sagemaker-user@default:~$ docker pull 123456789012.dkr.ecr.us-east-2.amazonaws.com/myflaskapp:v1

- If you encounter authentication issues, run the following command:

aws ecr get-login-password --region region | docker login --username AWS --password-stdin aws_account_id.dkr.ecr.region.amazonaws.com

- Create a container to test your workload:

docker run --network sagemaker 123456789012.dkr.ecr.us-east-2.amazonaws.com/myflaskapp:v1

This spins up a new container instance and runs the application defined using Docker’s ENTRYPOINT:

- To test if your web endpoint is active, navigate to the URL https://<sagemaker-space-id>.studio.us-east-2.sagemaker.aws/jupyterlab/default/proxy/6006/.

You should see a JSON response similar to the following screenshot.
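The app.py listing is not reproduced in this text. For experimenting without Flask, the sketch below swaps in Python’s standard-library http.server as a stand-in app. It is not the post’s actual flask application. It serves a JSON body on port 6006, the port in the proxy URL above, and includes a check_endpoint helper that fetches and decodes the response. Calling check_endpoint() from a terminal in the same space assumes the container’s port is reachable at localhost, an assumption based on the proxy URL pattern. The JSON message is made up.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class JsonHandler(BaseHTTPRequestHandler):
    """Answers every GET with a small JSON body, like a minimal flask route would."""

    def do_GET(self):
        body = json.dumps({"message": "hello from the container"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def start_server(port: int = 6006) -> HTTPServer:
    """Start the stand-in app on 0.0.0.0:port in a background thread."""
    server = HTTPServer(("0.0.0.0", port), JsonHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def check_endpoint(url: str = "http://localhost:6006/", timeout: float = 5.0) -> dict:
    """GET the URL and decode its JSON body; raises if the app is not reachable."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```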

Clean up

To avoid incurring unnecessary charges, delete the resources that you created while running the examples in this post:

- In your SageMaker Studio domain, choose Studio Classic in the navigation pane, then choose Stop.

- In your SageMaker Studio domain, choose JupyterLab or Code Editor in the navigation pane, choose your app, and then choose Stop.

Conclusion

SageMaker Studio Local Mode and Docker support empower developers to build, test, and iterate on ML implementations faster without leaving their workspace. By providing instant access to test environments and outputs, these capabilities optimize workflows and improve productivity. Try out SageMaker Studio Local Mode and Docker support using our quick onboard feature, which lets you spin up a new domain for single users within minutes. Share your thoughts in the comments section!

About the Authors

Shweta Singh is a Senior Product Manager in the Amazon SageMaker Machine Learning (ML) platform team at AWS, leading the SageMaker Python SDK. She has worked in several product roles at Amazon for over 5 years. She has a Bachelor of Science degree in Computer Engineering and a Master of Science in Financial Engineering, both from New York University.

Eitan Sela is a Generative AI and Machine Learning Specialist Solutions Architect at AWS. He works with AWS customers to provide guidance and technical assistance, helping them build and operate Generative AI and Machine Learning solutions on AWS. In his spare time, Eitan enjoys jogging and reading the latest machine learning articles.

Pranav Murthy is an AI/ML Specialist Solutions Architect at AWS. He focuses on helping customers build, train, deploy, and migrate machine learning (ML) workloads to SageMaker. He previously worked in the semiconductor industry developing large computer vision (CV) and natural language processing (NLP) models to improve semiconductor processes using state-of-the-art ML techniques. In his free time, he enjoys playing chess and traveling. You can find Pranav on LinkedIn.

Mufaddal Rohawala is a Software Engineer at AWS. He works on the SageMaker Python SDK library for Amazon SageMaker. In his spare time, he enjoys travel and outdoor activities, and he is a soccer fan.