Simplify data prep for generative AI with Amazon SageMaker Data Wrangler

Generative artificial intelligence (generative AI) models have demonstrated impressive capabilities in generating high-quality text, images, and other content. However, these models require massive amounts of clean, structured training data to reach their full potential. Most real-world data exists in unstructured formats like PDFs, which require preprocessing before they can be used effectively.

According to IDC, unstructured data accounts for over 80% of all business data today. This includes formats like emails, PDFs, scanned documents, images, audio, video, and more. While this data holds valuable insights, its unstructured nature makes it difficult for AI algorithms to interpret and learn from it. According to a 2019 survey by Deloitte, only 18% of businesses reported being able to take advantage of unstructured data.

As AI adoption continues to accelerate, developing efficient mechanisms for digesting and learning from unstructured data becomes even more critical. This could involve better preprocessing tools, semi-supervised learning techniques, and advances in natural language processing. Companies that use their unstructured data most effectively will gain significant competitive advantages from AI.

Clean data is important for good model performance. Extracted text still contains large amounts of gibberish and boilerplate (for example, when reading HTML). Data scraped from the internet often contains many duplicates. Data from social media, reviews, or any user-generated content can also contain toxic and biased content, which you may need to filter out with preprocessing steps. There can also be a lot of low-quality content or bot-generated text, which can be filtered out using accompanying metadata (for example, filtering out customer service responses that received low customer ratings).
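As a rough illustration of these cleaning steps, the following sketch strips HTML markup, drops exact duplicates, and filters on a rating stored in metadata. The record fields (`text`, `rating`) and the rating threshold are assumptions made for this example; they are not part of the SageMaker Canvas workflow, and toxicity filtering is not covered here.

```python
import hashlib
import html
import re

TAG_RE = re.compile(r"<[^>]+>")
WS_RE = re.compile(r"\s+")


def strip_markup(text: str) -> str:
    """Remove HTML tags, decode entities, and collapse whitespace."""
    no_tags = TAG_RE.sub(" ", text)
    return WS_RE.sub(" ", html.unescape(no_tags)).strip()


def clean_records(records, min_rating=3):
    """Yield cleaned, de-duplicated records rated at least min_rating.

    Each record is a dict with a hypothetical "text" field and an
    optional hypothetical "rating" field.
    """
    seen = set()
    for record in records:
        # Records without a rating are kept by default.
        if record.get("rating", min_rating) < min_rating:
            continue
        text = strip_markup(record["text"])
        if not text:
            continue
        # Exact-duplicate detection on the lowercased cleaned text.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield {**record, "text": text}
```

Hashing the normalized text catches only exact duplicates; near-duplicate detection would need a different technique.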

Data preparation is important at multiple stages in Retrieval Augmented Generation (RAG) models. The knowledge source documents need preprocessing, like cleaning text and generating semantic embeddings, so they can be efficiently indexed and retrieved. The user's natural language query also requires preprocessing, so it can be encoded into a vector and compared to document embeddings. After retrieving relevant contexts, they may need additional preprocessing, like truncation, before being concatenated to the user's query to create the final prompt for the foundation model.

Amazon SageMaker Canvas now supports comprehensive data preparation capabilities powered by Amazon SageMaker Data Wrangler. With this integration, SageMaker Canvas provides customers with an end-to-end no-code workspace to prepare data, and to build and use ML and foundation models, accelerating the time from data to business insights. You can now easily discover and aggregate data from over 50 data sources, and explore and prepare data using over 300 built-in analyses and transformations in SageMaker Canvas's visual interface.

Solution overview

In this post, we work with a PDF documentation dataset: the Amazon Bedrock user guide. We show how to preprocess a dataset for RAG. Specifically, we clean the data and create RAG artifacts to answer questions about the content of the dataset. Consider the following machine learning (ML) problem: a user asks a large language model (LLM) the question "How to filter and search models in Amazon Bedrock?". The LLM has not seen the documentation during the training or fine-tuning stage, so it would not be able to answer the question and would most likely hallucinate. Our goal in this post is to find a relevant piece of text from the PDF (that is, RAG) and attach it to the prompt, enabling the LLM to answer questions specific to this document.

Below, we show how you can do all these main preprocessing steps from Amazon SageMaker Canvas (powered by Amazon SageMaker Data Wrangler):

- Extract text from a PDF document (powered by Amazon Textract).

- Remove sensitive information (powered by Amazon Comprehend).

- Chunk text into pieces.

- Create embeddings for each piece (powered by Amazon Bedrock).

- Add embeddings to a vector database (powered by Amazon OpenSearch Service).

Prerequisites

For this walkthrough, you should have the following:

Note: Create OpenSearch Service domains following the instructions here. For simplicity, let's pick the option with a master username and password for fine-grained access control. Once the domain is created, create a vector index whose mappings use a vector dimension of 1536, which aligns with Amazon Titan embeddings.
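The original mappings are not reproduced here. As a minimal sketch, a k-NN index body with a 1536-dimension vector field could be built as follows. The field names (`embedding`, `text`, `source`) are hypothetical and chosen only for illustration.

```python
import json


def build_vector_index_body(dimension: int = 1536, vector_field: str = "embedding") -> dict:
    """Return an index-creation body with a k-NN vector field.

    Field names are illustrative assumptions, not the post's original mappings.
    """
    return {
        # Enable k-NN search on this index.
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                # 1536 matches the Amazon Titan embedding size named in the post.
                vector_field: {"type": "knn_vector", "dimension": dimension},
                "text": {"type": "text"},
                "source": {"type": "keyword"},
            }
        },
    }


print(json.dumps(build_vector_index_body(), indent=2))
```

A body like this would typically be sent in a `PUT /<index-name>` request to the domain endpoint, authenticated with the master username and password.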

Walkthrough

Build a data flow

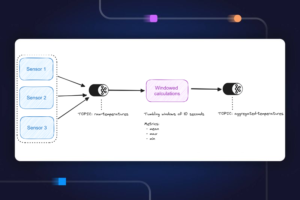

In this section, we cover how to build a data flow to extract text and metadata from PDFs, clean and process the data, generate embeddings using Amazon Bedrock, and index the data in Amazon OpenSearch.

Launch SageMaker Canvas

To launch SageMaker Canvas, complete the following steps:

- On the Amazon SageMaker console, choose Domains in the navigation pane.

- Choose your domain.

- On the launch menu, choose Canvas.

Create a data flow

Complete the following steps to create a data flow in SageMaker Canvas:

- On the SageMaker Canvas home page, choose Data Wrangler.

- Choose Create on the right side of the page, then give the data flow a name and select Create.

- This lands you on a data flow page.

- Choose Import data, then select tabular data.

Now let's import the data from an Amazon S3 bucket:

- Choose Import data and select Tabular from the drop-down list.

- For Data Source, select Amazon S3 from the drop-down list.

- Navigate to the metadata file with the PDF file locations, and choose the file.

- Now the metadata file is loaded into the data preparation data flow, and we can proceed to add the next steps to transform the data and index it into Amazon OpenSearch. In this case, the file has the following metadata, with the location of each file in the Amazon S3 directory.

To add a new transform, complete the following steps:

- Choose the plus sign and choose Add Transform.

- Choose Add Step and choose Custom Transform.

- You can create a custom transform using Pandas, PySpark, Python user-defined functions, and SQL PySpark. Choose Python (PySpark) for this use case.

- Enter a name for the step. From the example code snippets, browse and select extract text from pdf. Make the necessary changes to the code snippet and select Add.

- Let's add a step to redact Personally Identifiable Information (PII) from the extracted data by using Amazon Comprehend. Choose Add Step and choose Custom Transform, then select Python (PySpark).

From the example code snippets, browse and select mask PII. Make the necessary changes to the code snippet and select Add.
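The Canvas example snippets for text extraction and PII masking are not reproduced here. As a rough sketch of what such steps do, the helpers below join the `LINE` blocks of an Amazon Textract text-detection response and replace the spans returned by Amazon Comprehend's `detect_pii_entities` with their entity type. The function names, the score threshold, and the idea of passing the client in as an argument are assumptions for illustration.

```python
def lines_from_textract(response: dict) -> str:
    """Join the LINE blocks of a Textract text-detection response."""
    return "\n".join(
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    )


def mask_pii(text: str, entities: list, min_score: float = 0.5) -> str:
    """Replace each detected PII span with its entity type, such as [EMAIL]."""
    masked = text
    # Work from the end of the string so earlier offsets stay valid.
    for entity in sorted(entities, key=lambda e: e["BeginOffset"], reverse=True):
        if entity.get("Score", 1.0) < min_score:
            continue
        start, end = entity["BeginOffset"], entity["EndOffset"]
        masked = masked[:start] + f"[{entity['Type']}]" + masked[end:]
    return masked


def redact(text: str, comprehend_client, language_code: str = "en") -> str:
    """Detect PII with Amazon Comprehend and mask it.

    comprehend_client is expected to behave like boto3.client("comprehend").
    """
    response = comprehend_client.detect_pii_entities(
        Text=text, LanguageCode=language_code
    )
    return mask_pii(text, response["Entities"])
```

Taking the client as a parameter keeps the helpers easy to exercise with a stub before running them against the real service.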

- The next step is to chunk the text content. Choose Add Step and choose Custom Transform, then select Python (PySpark).

From the example code snippets, browse and select Chunk text. Make the necessary changes to the code snippet and select Add.
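One simple way to chunk text is a sliding window over words with some overlap between neighboring chunks, so that sentences cut at a boundary still appear whole in one of them. The sketch below assumes word-based chunking and illustrative default sizes; the Canvas snippet may use a different strategy.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list:
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words. Sizes are illustrative defaults.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

For example, `chunk_text("a b c d e f g", chunk_size=3, overlap=1)` returns `["a b c", "c d e", "e f g"]`.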

- Let's convert the text content to vector embeddings using the Amazon Bedrock Titan Embeddings model. Choose Add Step and choose Custom Transform, then select Python (PySpark).

From the example code snippets, browse and select Generate text embedding with Bedrock. Make the necessary changes to the code snippet and select Add.
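As a minimal sketch of this step, the helper below calls the Bedrock runtime `invoke_model` operation with a Titan text embeddings model and reads the `embedding` field from the response. The model ID `amazon.titan-embed-text-v1` and the helper name are assumptions for illustration; check which model is enabled in your account.

```python
import json

# Assumed model ID for Titan text embeddings (1536 dimensions).
TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"


def embed_text(text: str, bedrock_runtime, model_id: str = TITAN_EMBED_MODEL_ID) -> list:
    """Return the embedding vector for text.

    bedrock_runtime is expected to behave like boto3.client("bedrock-runtime").
    """
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"inputText": text}),
    )
    # The response body is a stream containing a JSON document.
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

The same helper can be reused later to embed user queries, so that documents and queries share one vector space.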

- Now we have vector embeddings available for the PDF file contents. Let's go ahead and index the data into Amazon OpenSearch. Choose Add Step and choose Custom Transform, then select Python (PySpark). You're free to rewrite the following code to use your preferred vector database. For simplicity, we're using a master username and password to access the OpenSearch APIs; for production workloads, select an option according to your organization's policies.
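The indexing code from the original post is not reproduced here. One possible approach, sketched below, is to build a newline-delimited body for the OpenSearch `_bulk` API from the chunk records. The index name and document fields are hypothetical.

```python
import json


def build_bulk_body(index_name: str, documents: list) -> str:
    """Build an NDJSON body for the OpenSearch _bulk API.

    Each document is preceded by an "index" action line. The index name
    and document fields are illustrative assumptions.
    """
    lines = []
    for doc in documents:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(doc))
    if not lines:
        return ""
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"
```

A body like this would be sent as a `POST` to the domain's `/_bulk` endpoint with the `Content-Type: application/x-ndjson` header and the master username and password as basic authentication.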

Finally, the data flow looks as follows:

With this data flow, the data from the PDF file has been read and indexed with vector embeddings in Amazon OpenSearch. Now it's time to create a file with queries against the indexed data and save it to an Amazon S3 location. We'll point our search data flow to that file and write the corresponding results to a new file in an Amazon S3 location.

Preparing a prompt

After we create a knowledge base out of our PDF, we can test it by searching the knowledge base for a few sample queries. We'll process each query as follows:

- Generate an embedding for the query (powered by Amazon Bedrock).

- Query the vector database for the nearest neighbor context (powered by Amazon OpenSearch).

- Combine the query and the context into the prompt.

- Query the LLM with the prompt (powered by Amazon Bedrock).

- On the SageMaker Canvas home page, choose Data preparation.

- Choose Create on the right side of the page, then give the data flow a name and select Create.

Now let's load the user questions and then create a prompt by combining each question with the similar documents. This prompt is provided to the LLM to generate an answer to the user question.

- Let's load a CSV file with user questions. Choose Import Data and select Tabular from the drop-down list.

- For Data Source, select Amazon S3 from the drop-down list. Alternatively, you can choose to upload a file with user queries.

- Let's add a custom transformation to convert the data into vector embeddings, followed by searching related embeddings from Amazon OpenSearch, before sending a prompt to Amazon Bedrock with the query and context from the knowledge base. To generate embeddings for the query, you can use the same example code snippet, Generate text embedding with Bedrock, that was used when building the data flow above.

Let's invoke the Amazon OpenSearch API to search for relevant documents using the generated vector embeddings. Add a custom transform with Python (PySpark).
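As a sketch of the search step, the helpers below build a k-NN query body for the query embedding and pull the matching text out of a search response. The field names (`embedding`, `text`) are hypothetical and must match whatever mapping your index uses.

```python
def build_knn_query(query_vector: list, k: int = 3, vector_field: str = "embedding") -> dict:
    """Build an OpenSearch k-NN search body for the k nearest chunks."""
    return {
        "size": k,
        # Leave the large vector out of the returned documents.
        "_source": {"excludes": [vector_field]},
        "query": {"knn": {vector_field: {"vector": query_vector, "k": k}}},
    }


def texts_from_hits(search_response: dict, text_field: str = "text") -> list:
    """Extract the stored text of each hit, best match first."""
    return [hit["_source"][text_field] for hit in search_response["hits"]["hits"]]
```

The query body would be sent as a `POST` to `/<index-name>/_search` on the domain endpoint.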

Let's add a custom transform to call the Amazon Bedrock API for the query response, passing the documents from the Amazon OpenSearch knowledge base. From the example code snippets, browse and select Query Bedrock with context. Make the necessary changes to the code snippet and select Add.
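Before the LLM is called, the retrieved contexts are combined with the question into a single prompt, truncated if necessary. The sketch below shows one way to do this. The prompt wording and the character limit are assumptions for illustration, and the actual model call is omitted because its request format depends on the model you choose.

```python
def build_prompt(question: str, contexts: list, max_context_chars: int = 4000) -> str:
    """Combine retrieved contexts and the user question into one prompt.

    Contexts are joined in order and truncated to max_context_chars.
    """
    joined = "\n\n".join(contexts)[:max_context_chars]
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Truncating by characters is a simplification; a token-based limit would track the model's context window more closely.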

In summary, the RAG-based question answering data flow is as follows:

ML practitioners spend a lot of time crafting feature engineering code, applying it to their initial datasets, training models on the engineered datasets, and evaluating model accuracy. Given the experimental nature of this work, even the smallest project leads to multiple iterations. The same feature engineering code is often run again and again, wasting time and compute resources on repeating the same operations. In large organizations, this can cause an even greater loss of productivity because different teams often run identical jobs or even write duplicate feature engineering code because they have no knowledge of prior work. To avoid the reprocessing of features, we'll export our data flow to an Amazon SageMaker pipeline. Choose the + button to the right of the query. Select Export data flow and choose Run SageMaker Pipeline (via Jupyter notebook).

Cleaning up

To avoid incurring future charges, delete or shut down the resources you created while following this post. Refer to Logging out of Amazon SageMaker Canvas for more details.

Conclusion

In this post, we showed you Amazon SageMaker Canvas's end-to-end capabilities by assuming the role of a data professional preparing data for an LLM. The interactive data preparation enabled quickly cleaning, transforming, and analyzing the data to engineer informative features. By removing coding complexities, SageMaker Canvas allowed rapid iteration to create a high-quality training dataset. This accelerated workflow led directly into building, training, and deploying a performant machine learning model for business impact. With its comprehensive data preparation and unified experience from data to insights, SageMaker Canvas empowers users to improve their ML outcomes.

We encourage you to learn more by exploring Amazon SageMaker Data Wrangler, Amazon SageMaker Canvas, Amazon Titan models, Amazon Bedrock, and Amazon OpenSearch Service to build a solution using the sample implementation provided in this post and a dataset relevant to your business. If you have questions or suggestions, please leave a comment.

About the Authors

Ajjay Govindaram is a Senior Solutions Architect at AWS. He works with strategic customers who are using AI/ML to solve complex business problems. His experience lies in providing technical direction as well as design assistance for modest to large-scale AI/ML application deployments. His knowledge ranges from application architecture to big data, analytics, and machine learning. He enjoys listening to music while resting, experiencing the outdoors, and spending time with his loved ones.


Nikita Ivkin is a Senior Applied Scientist at Amazon SageMaker Data Wrangler with interests in machine learning and data cleaning algorithms.
