How SnapLogic constructed a text-to-pipeline software with Amazon Bedrock to translate enterprise intent into motion

This put up was co-written with Greg Benson, Chief Scientist; Aaron Kesler, Sr. Product Supervisor; and Wealthy Dill, Enterprise Options Architect from SnapLogic.

Many shoppers are constructing generative AI apps on Amazon Bedrock and Amazon CodeWhisperer to create code artifacts primarily based on pure language. This use case highlights how giant language fashions (LLMs) are in a position to change into a translator between human languages (English, Spanish, Arabic, and extra) and machine interpretable languages (Python, Java, Scala, SQL, and so forth) together with subtle inside reasoning. This emergent potential in LLMs has compelled software program builders to make use of LLMs as an automation and UX enhancement device that transforms pure language to a domain-specific language (DSL): system directions, API requests, code artifacts, and extra. On this put up, we present you the way SnapLogic, an AWS buyer, used Amazon Bedrock to energy their SnapGPT product by way of automated creation of those complicated DSL artifacts from human language.

When clients create DSL objects from LLMs, the ensuing DSL is both an actual reproduction or a by-product of an present interface information and schema that kinds the contract between the UI and the enterprise logic within the backing service. This sample is especially trending with impartial software program distributors (ISVs) and software program as a service (SaaS) ISVs because of their distinctive approach of representing configurations by way of code and the need to simplify the person expertise for his or her clients. Instance use circumstances embrace:

Essentially the most simple strategy to construct and scale text-to-pipeline functions with LLMs on AWS is utilizing Amazon Bedrock. Amazon Bedrock is the simplest strategy to construct and scale generative AI functions with basis fashions (FMs). It’s a totally managed service that gives entry to a alternative of high-performing basis FMs from main AI through a single API, together with a broad set of capabilities you could construct generative AI functions with privateness and safety. Anthropic, an AI security and analysis lab that builds dependable, interpretable, and steerable AI methods, is likely one of the main AI corporations that gives entry to their state-of-the artwork LLM, Claude, on Amazon Bedrock. Claude is an LLM that excels at a variety of duties, from considerate dialogue, content material creation, complicated reasoning, creativity, and coding. Anthropic affords each Claude and Claude On the spot fashions, all of which can be found by way of Amazon Bedrock. Claude has rapidly gained recognition in these text-to-pipeline functions due to its improved reasoning potential, which permits it to excel in ambiguous technical downside fixing. Claude 2 on Amazon Bedrock helps a 100,000-token context window, which is equal to about 200 pages of English textual content. It is a significantly necessary characteristic which you could depend on when constructing text-to-pipeline functions that require complicated reasoning, detailed directions, and complete examples.

SnapLogic background

SnapLogic is an AWS buyer on a mission to carry enterprise automation to the world. The SnapLogic Clever Integration Platform (IIP) permits organizations to appreciate enterprise-wide automation by connecting their total ecosystem of functions, databases, huge information, machines and gadgets, APIs, and extra with pre-built, clever connectors known as Snaps. SnapLogic just lately launched a characteristic known as SnapGPT, which gives a textual content interface the place you possibly can sort the specified integration pipeline you need to create in easy human language. SnapGPT makes use of Anthropic’s Claude mannequin by way of Amazon Bedrock to automate the creation of those integration pipelines as code, that are then used by way of SnapLogic’s flagship integration answer. Nevertheless, SnapLogic’s journey to SnapGPT has been a end result of a few years working within the AI area.

SnapLogic’s AI journey

Within the realm of integration platforms, SnapLogic has persistently been on the forefront, harnessing the transformative energy of synthetic intelligence. Over time, the corporate’s dedication to innovating with AI has change into evident, particularly after we hint the journey from Iris to AutoLink.

The standard beginnings with Iris

In 2017, SnapLogic unveiled Iris, an industry-first AI-powered integration assistant. Iris was designed to make use of machine studying (ML) algorithms to foretell the subsequent steps in constructing a knowledge pipeline. By analyzing hundreds of thousands of metadata parts and information flows, Iris may make clever strategies to customers, democratizing information integration and permitting even these with out a deep technical background to create complicated workflows.

AutoLink: Constructing momentum

Constructing on the success and learnings from Iris, SnapLogic launched AutoLink, a characteristic aimed toward additional simplifying the information mapping course of. The tedious activity of manually mapping fields between supply and goal methods grew to become a breeze with AutoLink. Utilizing AI, AutoLink mechanically recognized and advised potential matches. Integrations that after took hours might be run in mere minutes.

The generative leap with SnapGPT

SnapLogic’s newest foray in AI brings us SnapGPT, which goals to revolutionize integration even additional. With SnapGPT, SnapLogic introduces the world’s first generative integration answer. This isn’t nearly simplifying present processes, however completely reimagining how integrations are designed. The ability of generative AI can create total integration pipelines from scratch, optimizing the workflow primarily based on the specified consequence and information traits.

SnapGPT is extraordinarily impactful to SnapLogic’s clients as a result of they can drastically lower the period of time required to generate their first SnapLogic pipeline. Historically, SnapLogic clients would wish to spend days or even weeks configuring integration pipelines from scratch. Now, these clients are in a position to merely ask SnapGPT to, for instance, “create a pipeline which can transfer all of my lively SFDC clients to WorkDay.” A working first draft of a pipeline is mechanically created for this buyer, drastically slicing down the event time required for creation of the bottom of their integration pipeline. This enables the top buyer to spend extra time specializing in what has true enterprise affect to them as an alternative of engaged on configurations of an integration pipeline. The next instance exhibits how a SnapLogic buyer can enter an outline into the SnapGPT characteristic to rapidly generate a pipeline, utilizing pure language.

AWS and SnapLogic have collaborated intently all through this product construct and have discovered rather a lot alongside the best way. The remainder of this put up will give attention to the technical learnings AWS and SnapLogic have had round utilizing LLMs for text-to-pipeline functions.

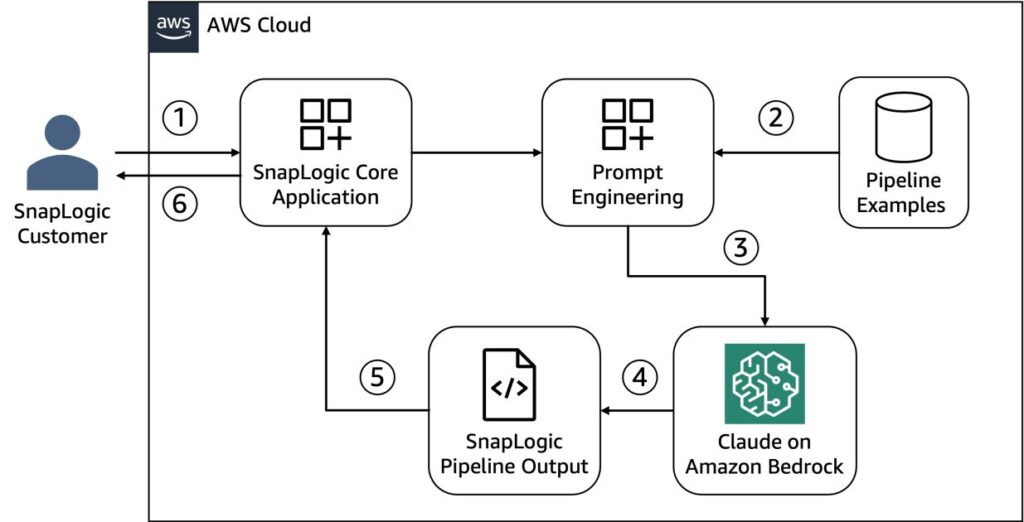

Resolution overview

To resolve this text-to-pipeline downside, AWS and SnapLogic designed a complete answer proven within the following structure.

A request to SnapGPT goes by way of the next workflow:

- A person submits an outline to the applying.

- SnapLogic makes use of a Retrieval Augmented Technology (RAG) method to retrieve related examples of SnapLogic pipelines which can be much like the person’s request.

- These extracted related examples are mixed with the person enter and undergo some textual content preprocessing earlier than they’re despatched to Claude on Amazon Bedrock.

- Claude produces a JSON artifact that represents a SnapLogic pipeline.

- The JSON artifact is straight built-in to the core SnapLogic integration platform.

- The SnapLogic pipeline is rendered to the person in a visible pleasant method.

By way of varied experimentation between AWS and SnapLogic, we now have discovered the immediate engineering step of the answer diagram to be extraordinarily necessary to producing high-quality outputs for these text-to-pipeline outputs. The subsequent part goes additional into some particular methods used with Claude on this area.

Immediate experimentation

All through the event part of SnapGPT, AWS and SnapLogic discovered that speedy iteration on prompts being despatched to Claude was a important growth activity to enhancing the accuracy and relevancy of text-to-pipeline outputs in SnapLogic’s outputs. By utilizing Amazon SageMaker Studio interactive notebooks, the AWS and SnapLogic workforce have been in a position to rapidly work by way of completely different variations of prompts by utilizing the Boto3 SDK connection to Amazon Bedrock. Pocket book-based growth allowed the groups to rapidly create client-side connections to Amazon Bedrock, embrace text-based descriptions alongside Python code for sending prompts to Amazon Bedrock, and maintain joint immediate engineering periods the place iterations have been made rapidly between a number of personas.

Anthropic Claude immediate engineering strategies

On this part, we describe a few of the iterative methods we used to create a high-performing immediate primarily based on an illustrative person request: “Make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients.” Notice that this instance shouldn’t be the schema that SnapGPT is powered by, and is barely used as an instance a text-to-pipeline software.

To baseline our immediate engineering, we use the next authentic immediate:

Make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients

The anticipated output is as follows:

{ "database": "ExampleCompany", "question": "SELECT * FROM ec_prod.clients WHERE standing="lively"" }

Enchancment #1: Utilizing the Human and Assistant annotations

Claude’s coaching process teaches the FM to know dialogue between a human and an assistant in its immediate construction. Claude customers can make the most of this construction by ending their immediate in Assistant:, which can set off Claude to begin producing the response to a question primarily based on what the human has mentioned. Notice that as a result of Claude will proceed to generate textual content till it’s instructed to cease, ensure you use a cease sequence of nnHuman: within the API request to Amazon Bedrock when utilizing Claude. This may make sure you solely obtain the response from the assistant and never the anticipated subsequent response from the human within the dialogue chain. These nnHuman and nnAssistant indicators not solely assist in limiting Claude’s response to related data, but additionally assist in offering clear demarcation between main human inputs and assistant responses from different interactions. This particularly helps in a few-shot immediate setting the place you possibly can have a number of queries and responses with queries tagged with nnHuman and former responses tagged with nnAssistant. Let’s take a look at the improved immediate and related illustrative API name to Amazon Bedrock through the Boto3 SDK.

We use the next immediate:

Human: Make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients

Assistant:

The output is as follows:

Here’s a MongoDB pipeline to retrieve all lively clients from the ExampleCompany database:

pipeline = [ { '$match': { 'database': 'ExampleCompany', 'status': 'active' } } ] db.clients.mixture(pipeline)The important thing steps are:

– `$match` to filter the paperwork to solely these within the ExampleCompany database and with standing equal to “lively”.

– `db.clients.mixture()` runs the pipeline on the purchasers assortment and returns the filtered outcomes.

So this may return all buyer paperwork from the ExampleCompany database the place the standing discipline is about to “lively”.

Discover how within the output, Claude makes up a solution to our query with none context. The reply is probably useful, nevertheless it’s not particular to the issue we’re attempting to resolve as a result of it’s not supplied with sufficient context. It’s additionally necessary to notice that Anthropic requires this human and assistant format when utilizing the Claude fashions; in the event you don’t embrace this step, Amazon Bedrock will return an error.

Enchancment #2: Embody hyper-specific context for Claude earlier than it’s instructed what to do

Claude performs greatest when it’s given good context about what it wants to perform in addition to how it’s supposed to perform that activity. Listed below are just a few examples of issues to incorporate as context for Claude earlier than you inform it the duty:

- Describe the job perform that you really want Claude to satisfy. By doing this, you make Claude suppose within the function of somebody working in that job, or extra like somebody simply being employed in that particular job function. This may assist Claude formulate the reply from that particular job function’s perspective, decreasing hallucination and randomness within the reply.

- Embody technical particulars relating to the output format. Basis fashions are usually good at following actions, so asking Claude to generate the output in a particular format or schema with one or just a few examples will assist Claude present the reply in the proper format, decreasing the necessity for filtering and postprocessing of the output. The instance within the following immediate exhibits a fictitious schema that matches the anticipated output.

Let’s mix these strategies to enhance upon our authentic immediate:

Human: Your job is to behave as an professional on ETL pipelines. Particularly, your job is to create a JSON illustration of an ETL pipeline which can clear up the person request offered to you.

The JSON output ought to comply with the next format:

Right here is your activity: make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients

Assistant:

The output is as follows:

Here’s a JSON illustration of an ETL pipeline to retrieve all lively clients from the ExampleCompany database:

This pipeline makes use of the ExampleCompany database and a SQL question to pick all data from the purchasers desk the place the standing is ‘lively’.

With the addition of context, the mannequin is now in a position to get many of the strategy to our reply. It now is aware of construction the output and it understands that it wants to jot down a SQL assertion to resolve the duty. Nevertheless, you’ll discover that the mannequin doesn’t appropriately use the ec_prod.clients nomenclature we expect. Room for enchancment! Additionally observe, in apply, you will have to incorporate considerably extra context than what we offered on this instance for high-quality leads to text-to-pipeline use circumstances.

Enchancment #3: Enclosing examples in XML tags

Few-shot studying is a well-liked strategy to enhance the accuracy with FMs the place you’ve related examples to information the FM of their activity. That is significantly necessary when working in text-to-pipeline functions as a result of these hyper-specific pipeline outputs are normally not included in any public dataset as a result of they’re particular to a person buyer’s information illustration. When utilizing Claude, you possibly can make the most of its innate information of arbitrary XML tags to be able to present these examples in a few-shot format. Within the following improved immediate, discover the addition of examples enclosed in <instance> XML tags. Additionally, you should use abbreviations for human and assistant with H and A to offer context to Claude with out making it suppose these have been particular items of the dialogue which have already occurred.

We use the next immediate:

Human: Your job is to behave as an professional on ETL pipelines. Particularly, your job is to create a JSON illustration of an ETL pipeline which can clear up the person request offered to you.

The JSON output ought to comply with the next format:

Listed below are some examples of person requests and related output JSON artifacts which appropriately clear up the duty offered. Make sure that to make use of the identical nomenclature which is offered within the examples if you’re utilizing the identical databases.

<example-1>

H: question the ExampleCompany database for any lively orders for buyer #1234

A:

The reasoning for this question is that the shopper #1234 is filtered and we’re looking out the “orders” desk for any present data after the “lively” filter.

</example-1>

<example-2>

H: take away all clients within the ExampleCompany database which haven’t been lively within the final month

A:

The reasoning for this question is that the “ec_prod.clients” desk is the one desk in ExampleCompany database which accommodates buyer data.

</example-2>

Right here is your activity: make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients.

Assistant:

The output is as follows:

Right here is the JSON illustration for an ETL pipeline to retrieve all lively clients from the ExampleCompany database:

The reasoning is:

The ExampleCompany database is specified because the database to make use of

The ec_prod.clients desk accommodates the shopper information

The standing discipline signifies if a buyer is lively so the question filters for under lively clients

This could retrieve all lively buyer data from the ExampleCompany database. Let me know in the event you want any clarification or have further necessities for the ETL pipeline!

The JSON output now has the proper database nomenclature, which Claude was ready to make use of due to the examples that we offered. Coupling human and assistant indicators with XML tags makes it simpler for Claude to know what’s the activity and what was the reply in comparable examples with very clear separation between a number of few-shot examples. The better it’s for Claude to know, the higher and extra related the reply can be, additional decreasing the possibility for the mannequin to hallucinate and supply random irrelevant solutions.

Enchancment #4: Triggering Claude to start JSON technology with XML tags

A small problem with text-to-pipeline functions utilizing FMs is the necessity to precisely parse an output from ensuing textual content so it may be interpreted as code in a downstream software. One strategy to clear up this with Claude is to make the most of its XML tag understanding and mix this with a customized cease sequence. Within the following immediate, we now have instructed Claude to surround the output in <json></json> XML tags. Then, we now have added the <json> tag to the top of the immediate. This ensures that the primary textual content that comes out of Claude would be the begin of the JSON output. If you happen to don’t do that, Claude typically responds with some conversational textual content, then the true code response. By instructing Claude to instantly begin producing the output, you possibly can simply cease technology if you see the closing </json> tag. That is proven within the up to date Boto3 API name. The advantages of this system are twofold. First, you’ll be able to precisely parse the code response from Claude. Second, you’ll be able to scale back price as a result of Claude solely generates code outputs and no further textual content. This reduces price on Amazon Bedrock since you are charged for every token that’s produced as output from all FMs.

We use the next immediate:

Human: Your job is to behave as an professional on ETL pipelines. Particularly, your job is to create a JSON illustration of an ETL pipeline which can clear up the person request offered to you.

The JSON output ought to comply with the next format:

Listed below are some examples of person requests and related output JSON artifacts which appropriately clear up the duty offered. Make sure that to make use of the identical nomenclature which is offered within the examples if you’re utilizing the identical databases.

<example-1>

H: question the ExampleCompany database for any lively orders for buyer #1234

A:

<json>

</json>

The reasoning for this question is that the shopper #1234 is filtered and we’re looking out the “orders” desk for any present data after the “lively” filter.

</example-1>

<example-2>

H: take away all clients within the ExampleCompany database which haven’t been lively within the final month

A:

<json>

</json>

The reasoning for this question is that the “ec_prod.clients” desk is the one desk in ExampleCompany database which accommodates buyer data.

</example-2>

At all times keep in mind to surround your JSON outputs in <json></json> tags.

Right here is your activity: make a pipeline which makes use of the ExampleCompany database which retrieves all lively clients.

Assistant:

<json>

We use the next code:

physique = json.dumps({"immediate": immediate, "stop_sequences": ['nnHuman:', '</json>']})

response = bedrock.invoke_model(

physique=physique,

modelId='anthropic.claude-v2'

)The output is as follows:

{ "database": "ExampleCompany", "question": "SELECT * FROM ec_prod.clients WHERE standing="lively"" }

Now we now have arrived on the anticipated output with solely the JSON object returned! By utilizing this technique, we’re in a position to generate an instantly usable technical artifact in addition to scale back the price of technology by decreasing output tokens.

Conclusion

To get began immediately with SnapGPT, request a free trial of SnapLogic or request a demo of the product. If you need to make use of these ideas for constructing functions immediately, we suggest experimenting hands-on with the immediate engineering part on this put up, utilizing the identical move on a unique DSL technology use case that fits what you are promoting, and diving deeper into the RAG features that are available through Amazon Bedrock.

SnapLogic and AWS have been in a position to accomplice successfully to construct a sophisticated translator between human language and the complicated schema of SnapLogic integration pipelines powered by Amazon Bedrock. All through this journey, we now have seen how the output generated with Claude may be improved in text-to-pipeline functions utilizing particular immediate engineering methods. AWS and SnapLogic are excited to proceed this partnership in Generative AI and look ahead to future collaboration and innovation on this fast-moving area.

Concerning the Authors

Greg Benson is a Professor of Laptop Science on the College of San Francisco and Chief Scientist at SnapLogic. He joined the USF Division of Laptop Science in 1998 and has taught undergraduate and graduate programs together with working methods, pc structure, programming languages, distributed methods, and introductory programming. Greg has printed analysis within the areas of working methods, parallel computing, and distributed methods. Since becoming a member of SnapLogic in 2010, Greg has helped design and implement a number of key platform options together with cluster processing, huge information processing, the cloud structure, and machine studying. He at the moment is engaged on Generative AI for information integration.

Greg Benson is a Professor of Laptop Science on the College of San Francisco and Chief Scientist at SnapLogic. He joined the USF Division of Laptop Science in 1998 and has taught undergraduate and graduate programs together with working methods, pc structure, programming languages, distributed methods, and introductory programming. Greg has printed analysis within the areas of working methods, parallel computing, and distributed methods. Since becoming a member of SnapLogic in 2010, Greg has helped design and implement a number of key platform options together with cluster processing, huge information processing, the cloud structure, and machine studying. He at the moment is engaged on Generative AI for information integration.

Aaron Kesler is the Senior Product Supervisor for AI services and products at SnapLogic, Aaron applies over ten years of product administration experience to pioneer AI/ML product growth and evangelize companies throughout the group. He’s the creator of the upcoming ebook “What’s Your Downside?” aimed toward guiding new product managers by way of the product administration profession. His entrepreneurial journey started together with his faculty startup, STAK, which was later acquired by Carvertise with Aaron contributing considerably to their recognition as Tech Startup of the 12 months 2015 in Delaware. Past his skilled pursuits, Aaron finds pleasure in {golfing} together with his father, exploring new cultures and meals on his travels, and training the ukulele.

Aaron Kesler is the Senior Product Supervisor for AI services and products at SnapLogic, Aaron applies over ten years of product administration experience to pioneer AI/ML product growth and evangelize companies throughout the group. He’s the creator of the upcoming ebook “What’s Your Downside?” aimed toward guiding new product managers by way of the product administration profession. His entrepreneurial journey started together with his faculty startup, STAK, which was later acquired by Carvertise with Aaron contributing considerably to their recognition as Tech Startup of the 12 months 2015 in Delaware. Past his skilled pursuits, Aaron finds pleasure in {golfing} together with his father, exploring new cultures and meals on his travels, and training the ukulele.

Wealthy Dill is a Principal Options Architect with expertise slicing broadly throughout a number of areas of specialization. A monitor document of success spanning multi-platform enterprise software program and SaaS. Well-known for turning buyer advocacy (serving because the voice of the shopper) into revenue-generating new options and merchandise. Confirmed potential to drive cutting-edge merchandise to market and initiatives to completion on schedule and underneath price range in fast-paced onshore and offshore environments. A easy strategy to describe me: the thoughts of a scientist, the guts of an explorer and the soul of an artist.

Wealthy Dill is a Principal Options Architect with expertise slicing broadly throughout a number of areas of specialization. A monitor document of success spanning multi-platform enterprise software program and SaaS. Well-known for turning buyer advocacy (serving because the voice of the shopper) into revenue-generating new options and merchandise. Confirmed potential to drive cutting-edge merchandise to market and initiatives to completion on schedule and underneath price range in fast-paced onshore and offshore environments. A easy strategy to describe me: the thoughts of a scientist, the guts of an explorer and the soul of an artist.

Clay Elmore is an AI/ML Specialist Options Architect at AWS. After spending many hours in a supplies analysis lab, his background in chemical engineering was rapidly left behind to pursue his curiosity in machine studying. He has labored on ML functions in many various industries starting from vitality buying and selling to hospitality advertising and marketing. Clay’s present work at AWS facilities round serving to clients carry software program growth practices to ML and generative AI workloads, permitting clients to construct repeatable, scalable options in these complicated environments. In his spare time, Clay enjoys snowboarding, fixing Rubik’s cubes, studying, and cooking.

Clay Elmore is an AI/ML Specialist Options Architect at AWS. After spending many hours in a supplies analysis lab, his background in chemical engineering was rapidly left behind to pursue his curiosity in machine studying. He has labored on ML functions in many various industries starting from vitality buying and selling to hospitality advertising and marketing. Clay’s present work at AWS facilities round serving to clients carry software program growth practices to ML and generative AI workloads, permitting clients to construct repeatable, scalable options in these complicated environments. In his spare time, Clay enjoys snowboarding, fixing Rubik’s cubes, studying, and cooking.

Sina Sojoodi is a know-how govt, methods engineer, product chief, ex-founder and startup advisor. He joined AWS in March 2021 as a Principal Options Architect. Sina is at the moment the US-West ISV space lead Options Architect. He works with SaaS and B2B software program corporations to construct and develop their companies on AWS. Earlier to his function at Amazon, Sina was a know-how govt at VMware, and Pivotal Software program (IPO in 2018, VMware M&A in 2020) and served a number of management roles together with founding engineer at Xtreme Labs (Pivotal acquisition in 2013). Sina has devoted the previous 15 years of his work expertise to constructing software program platforms and practices for enterprises, software program companies and the general public sector. He’s an {industry} chief with a ardour for innovation. Sina holds a BA from the College of Waterloo the place he studied Electrical Engineering and Psychology.

Sina Sojoodi is a know-how govt, methods engineer, product chief, ex-founder and startup advisor. He joined AWS in March 2021 as a Principal Options Architect. Sina is at the moment the US-West ISV space lead Options Architect. He works with SaaS and B2B software program corporations to construct and develop their companies on AWS. Earlier to his function at Amazon, Sina was a know-how govt at VMware, and Pivotal Software program (IPO in 2018, VMware M&A in 2020) and served a number of management roles together with founding engineer at Xtreme Labs (Pivotal acquisition in 2013). Sina has devoted the previous 15 years of his work expertise to constructing software program platforms and practices for enterprises, software program companies and the general public sector. He’s an {industry} chief with a ardour for innovation. Sina holds a BA from the College of Waterloo the place he studied Electrical Engineering and Psychology.

Sandeep Rohilla is a Senior Options Architect at AWS, supporting ISV clients within the US West area. He focuses on serving to clients architect options leveraging containers and generative AI on the AWS cloud. Sandeep is keen about understanding clients’ enterprise issues and serving to them obtain their targets by way of know-how. He joined AWS after working greater than a decade as a options architect, bringing his 17 years of expertise to bear. Sandeep holds an MSc. in Software program Engineering from the College of the West of England in Bristol, UK.

Sandeep Rohilla is a Senior Options Architect at AWS, supporting ISV clients within the US West area. He focuses on serving to clients architect options leveraging containers and generative AI on the AWS cloud. Sandeep is keen about understanding clients’ enterprise issues and serving to them obtain their targets by way of know-how. He joined AWS after working greater than a decade as a options architect, bringing his 17 years of expertise to bear. Sandeep holds an MSc. in Software program Engineering from the College of the West of England in Bristol, UK.

Dr. Farooq Sabir is a Senior Synthetic Intelligence and Machine Studying Specialist Options Architect at AWS. He holds PhD and MS levels in Electrical Engineering from the College of Texas at Austin and an MS in Laptop Science from Georgia Institute of Expertise. He has over 15 years of labor expertise and in addition likes to show and mentor faculty college students. At AWS, he helps clients formulate and clear up their enterprise issues in information science, machine studying, pc imaginative and prescient, synthetic intelligence, numerical optimization, and associated domains. Primarily based in Dallas, Texas, he and his household like to journey and go on lengthy highway journeys.

Dr. Farooq Sabir is a Senior Synthetic Intelligence and Machine Studying Specialist Options Architect at AWS. He holds PhD and MS levels in Electrical Engineering from the College of Texas at Austin and an MS in Laptop Science from Georgia Institute of Expertise. He has over 15 years of labor expertise and in addition likes to show and mentor faculty college students. At AWS, he helps clients formulate and clear up their enterprise issues in information science, machine studying, pc imaginative and prescient, synthetic intelligence, numerical optimization, and associated domains. Primarily based in Dallas, Texas, he and his household like to journey and go on lengthy highway journeys.