Build a contextual chatbot for financial services using Amazon SageMaker JumpStart, Llama 2 and Amazon OpenSearch Serverless with Vector Engine

The financial service (FinServ) industry has unique generative AI requirements related to domain-specific data, data security, regulatory controls, and industry compliance standards. In addition, customers are looking for choices to select the most performant and cost-effective machine learning (ML) model and the ability to perform necessary customization (fine-tuning) to fit their business use cases. Amazon SageMaker JumpStart is ideally suited for generative AI use cases for FinServ customers because it provides the necessary data security controls and meets compliance standards requirements.

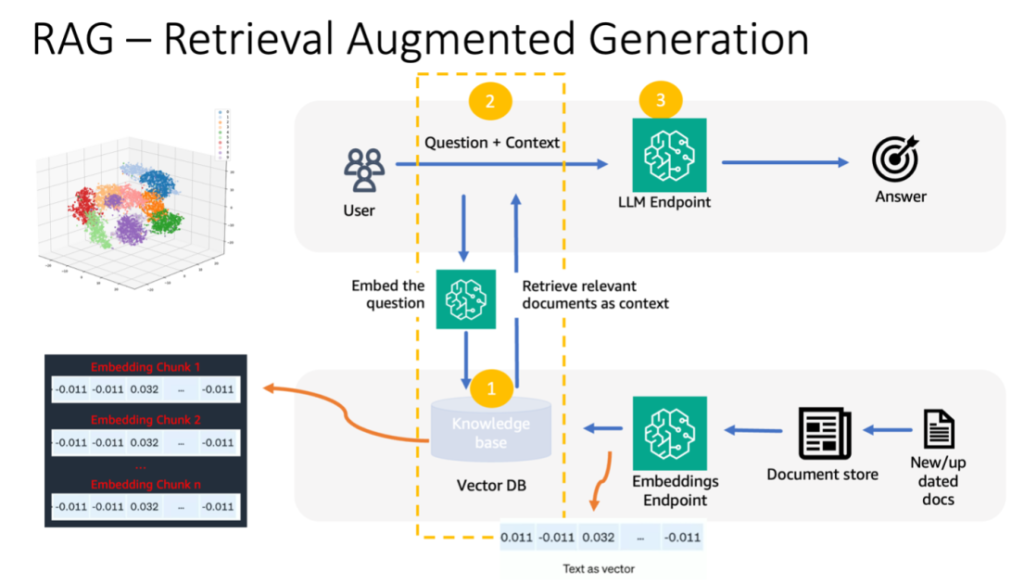

In this post, we demonstrate question answering tasks using a Retrieval Augmented Generation (RAG)-based approach with large language models (LLMs) in SageMaker JumpStart using a simple financial domain use case. RAG is a framework for improving the quality of text generation by combining an LLM with an information retrieval (IR) system. The LLM generates text, and the IR system retrieves relevant information from a knowledge base. The retrieved information is then used to augment the LLM’s input, which can help improve the accuracy and relevance of the model-generated text. RAG has been shown to be effective for a variety of text generation tasks, such as question answering and summarization. It’s a promising approach for improving the quality and accuracy of text generation models.

Advantages of using SageMaker JumpStart

With SageMaker JumpStart, ML practitioners can choose from a broad selection of state-of-the-art models for use cases such as content writing, image generation, code generation, question answering, copywriting, summarization, classification, information retrieval, and more. ML practitioners can deploy foundation models to dedicated Amazon SageMaker instances from a network-isolated environment and customize models using SageMaker for model training and deployment.

SageMaker JumpStart is ideally suited for generative AI use cases for FinServ customers because it offers the following:

- Customization capabilities – SageMaker JumpStart provides example notebooks and detailed posts for step-by-step guidance on domain adaptation of foundation models. You can follow these resources for fine-tuning, domain adaptation, and instruction of foundation models or to build RAG-based applications.

- Data security – Ensuring the security of inference payload data is paramount. With SageMaker JumpStart, you can deploy models in network isolation with single-tenancy endpoint provision. Furthermore, you can manage access control to selected models through the private model hub capability, aligning with individual security requirements.

- Regulatory controls and compliances – Compliance with standards such as HIPAA BAA, SOC 1/2/3, PCI, and HITRUST CSF is a core feature of SageMaker, ensuring alignment with the rigorous regulatory landscape of the financial sector.

- Model choices – SageMaker JumpStart offers a selection of state-of-the-art ML models that consistently rank among the top in industry-recognized HELM benchmarks. These include, but are not limited to, Llama 2, Falcon 40B, AI21 J2 Ultra, AI21 Summarize, Hugging Face MiniLM, and BGE models.

In this post, we explore building a contextual chatbot for financial services organizations using a RAG architecture with the Llama 2 foundation model and the Hugging Face GPTJ-6B-FP16 embeddings model, both available in SageMaker JumpStart. We also use Vector Engine for Amazon OpenSearch Serverless (currently in preview) as the vector data store to store embeddings.

Limitations of large language models

LLMs have been trained on vast volumes of unstructured data and excel in general text generation. Through this training, LLMs acquire and store factual knowledge. However, off-the-shelf LLMs present limitations:

- Their offline training renders them unaware of up-to-date information.

- Their training on predominantly generalized data diminishes their efficacy in domain-specific tasks. For instance, a financial firm might prefer its Q&A bot to source answers from its latest internal documents, ensuring accuracy and compliance with its business rules.

- Their reliance on embedded information compromises interpretability.

To use specific data in LLMs, three prevalent methods exist:

- Embedding data within the model prompts, allowing it to utilize this context during output generation. This can be zero-shot (no examples), few-shot (limited examples), or many-shot (abundant examples). Such contextual prompting steers models towards more nuanced results.

- Fine-tuning the model using pairs of prompts and completions.

- RAG, which retrieves external data (non-parametric) and integrates this data into the prompts, enriching the context.

However, the first method grapples with model constraints on context size, making it tough to input lengthy documents and possibly increasing costs. The fine-tuning approach, while potent, is resource-intensive, particularly with ever-evolving external data, leading to delayed deployments and increased costs. RAG combined with LLMs offers a solution to the previously mentioned limitations.

Retrieval Augmented Generation

RAG retrieves external data (non-parametric) and integrates this data into ML prompts, enriching the context. Lewis et al. introduced RAG models in 2020, conceptualizing them as a fusion of a pre-trained sequence-to-sequence model (parametric memory) and a dense vector index of Wikipedia (non-parametric memory) accessed via a neural retriever.

Here’s how RAG operates:

- Data sources – RAG can draw from varied data sources, including document repositories, databases, or APIs.

- Data formatting – Both the user’s query and the documents are transformed into a format suitable for relevancy comparisons.

- Embeddings – To facilitate this comparison, the query and the document collection (or knowledge library) are transformed into numerical embeddings using language models. These embeddings numerically encapsulate textual concepts.

- Relevancy search – The user query’s embedding is compared to the document collection’s embeddings, identifying relevant text through a similarity search in the embedding space.

- Context enrichment – The identified relevant text is appended to the user’s original prompt, thereby enhancing its context.

- LLM processing – With the enriched context, the prompt is fed to the LLM, which, due to the inclusion of pertinent external data, produces relevant and precise outputs.

- Asynchronous updates – To ensure the reference documents remain current, they can be updated asynchronously along with their embedding representations. This keeps future model responses grounded in the latest information, which helps maintain accuracy.

In essence, RAG offers a dynamic method to infuse LLMs with up-to-date, relevant information, helping them generate precise and timely outputs.
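The relevancy search step above can be illustrated with a small, dependency-free sketch. It assumes cosine similarity as the comparison metric and uses tiny hand-written vectors in place of real model embeddings; the function names `cosine_similarity` and `top_k` are hypothetical, and a production system would delegate this search to a vector store rather than scan every document.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the indexes of the k document vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), idx) for idx, vec in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:k]]


# Toy 3-dimensional "embeddings" (illustrative values, not produced by any model).
documents = [
    "Q3 revenue rose 12% year over year.",
    "The office dress code is business casual.",
    "Operating margin was 18% in Q3.",
]
doc_vectors = [
    [0.9, 0.1, 0.0],
    [0.0, 0.1, 0.9],
    [0.8, 0.3, 0.1],
]
query_vector = [1.0, 0.2, 0.0]  # stands in for the embedding of a finance question

for idx in top_k(query_vector, doc_vectors, k=2):
    print(documents[idx])
```

The two finance-related sentences score highest because their vectors point in nearly the same direction as the query vector; the retrieved text would then be appended to the prompt in the context enrichment step.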

The following diagram shows the conceptual flow of using RAG with LLMs.

Solution overview

The following steps are required to create a contextual question answering chatbot for a financial services application:

- Use the SageMaker JumpStart GPT-J-6B embedding model to generate embeddings for each PDF document in the Amazon Simple Storage Service (Amazon S3) upload directory.

- Identify relevant documents using the following steps:

  - Generate an embedding for the user’s query using the same model.

  - Use OpenSearch Serverless with the vector engine feature to search for the top K most relevant document indexes in the embedding space.

  - Retrieve the corresponding documents using the identified indexes.

- Combine the retrieved documents as context with the user’s prompt and question. Forward this to the SageMaker LLM for response generation.

We employ LangChain, a popular framework, to orchestrate this process. LangChain is specifically designed to bolster applications powered by LLMs, offering a universal interface for various LLMs. It streamlines the integration of multiple LLMs, ensuring seamless state persistence between calls. Moreover, it boosts developer efficiency with features like customizable prompt templates, comprehensive application-building agents, and specialized indexes for search and retrieval. For an in-depth understanding, refer to the LangChain documentation.

Prerequisites

You need the following prerequisites to build our context-aware chatbot:

For instructions on how to set up an OpenSearch Serverless vector engine, refer to Introducing the vector engine for Amazon OpenSearch Serverless, now in preview.

For a comprehensive walkthrough of the following solution, clone the GitHub repo and refer to the Jupyter notebook.

Deploy the ML models using SageMaker JumpStart

To deploy the ML models, complete the following steps:

- Deploy the Llama 2 LLM from SageMaker JumpStart:

- Deploy the GPT-J embeddings model:

Chunk data and create a document embeddings object

In this section, you chunk the data into smaller documents. Chunking is a technique for splitting large texts into smaller chunks. It’s an essential step because it optimizes the relevance of the search query for our RAG model, which in turn improves the quality of the chatbot. The chunk size depends on factors such as the document type and the model used. A chunk size of chunk_size=1600 has been selected because this is the approximate size of a paragraph. As models improve, their context window size will increase, allowing for larger chunk sizes.
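As a rough illustration of chunking, the following sketch splits text into fixed-size, overlapping character windows. It is a simplified stand-in rather than the notebook’s splitter: the function name `chunk_text` is hypothetical, the size is assumed to be measured in characters, and the `chunk_overlap=200` default is an assumption that does not come from this post.

```python
def chunk_text(text, chunk_size=1600, chunk_overlap=200):
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters so that a sentence
    cut at a boundary still appears whole in one of the two chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current window already reaches the end of the text
    return chunks


sample = "x" * 4000  # placeholder for the text extracted from a PDF
pieces = chunk_text(sample)
print([len(piece) for piece in pieces])
```

A splitter that respects paragraph and sentence boundaries generally produces more coherent chunks than fixed windows; the fixed-window version is shown only because it makes the size and overlap arithmetic easy to follow.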

Refer to the Jupyter notebook in the GitHub repo for the complete solution.

- Extend the LangChain SagemakerEndpointEmbeddings class to create a custom embeddings function that uses the gpt-j-6b-fp16 SageMaker endpoint you created earlier (as part of deploying the embeddings model):

- Create the embeddings object and batch the creation of the document embeddings:

- These embeddings are stored in the vector engine using LangChain OpenSearchVectorSearch. You store these embeddings in the next step.

Store the document embeddings in OpenSearch Serverless. You’re now ready to iterate over the chunked documents, create the embeddings, and store these embeddings in the OpenSearch Serverless vector index created in vector search collections. See the following code:
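The notebook in the GitHub repo contains the actual implementation. As an illustration of the batching pattern only, the sketch below embeds chunks in small groups and collects the results. Everything in it is an assumption made for the example: `embed_in_batches` and `fake_embed` are hypothetical names, the stand-in embedding function replaces the call to the SageMaker embeddings endpoint, a plain Python list replaces the OpenSearch Serverless vector index, and the batch size of 5 is arbitrary.

```python
def batched(items, batch_size):
    """Yield consecutive slices of items with at most batch_size elements each."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_in_batches(chunks, embed_batch, batch_size=5):
    """Embed chunks one batch at a time and pair each chunk with its vector.

    embed_batch is any callable that maps a list of strings to a list of
    vectors of the same length; here it is a stand-in for an endpoint call.
    """
    records = []
    for batch in batched(chunks, batch_size):
        vectors = embed_batch(batch)
        if len(vectors) != len(batch):
            raise ValueError("embedding function returned a mismatched batch")
        records.extend(zip(batch, vectors))
    return records


def fake_embed(batch):
    """Hypothetical embedding function: one toy 2-dimensional vector per text."""
    return [[float(len(text)), float(text.count(" "))] for text in batch]


chunks = [f"chunk number {i}" for i in range(12)]
in_memory_index = embed_in_batches(chunks, fake_embed, batch_size=5)
print(len(in_memory_index), in_memory_index[0])
```

Batching keeps each request to the embeddings endpoint small, which helps stay within payload limits and makes a failed request cheaper to retry.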

Question and answering over documents

So far, you have chunked a large document into smaller ones, created vector embeddings, and stored them in a vector engine. Now you can answer questions regarding this document data. Because you created an index over the data, you can do a semantic search; this way, only the most relevant documents required to answer the question are passed via the prompt to the LLM. This allows you to save time and money by only passing relevant documents to the LLM. For more details on using document chains, refer to Documents.

Complete the following steps to answer questions using the documents:

- To use the SageMaker LLM endpoint with LangChain, you use langchain.llms.sagemaker_endpoint.SagemakerEndpoint, which abstracts the SageMaker LLM endpoint. You perform a transformation for the request and response payload as shown in the following code for the LangChain SageMaker integration. Note that you may need to adjust the code in ContentHandler based on the content_type and accepts format of the LLM model you choose to use.
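A minimal sketch of the request and response transformation follows. It is written as a standalone class so that it runs without LangChain installed; it mirrors the transform_input and transform_output methods of a content handler but does not import the library. The class name `Llama2ChatContentHandler` is hypothetical, and the JSON shapes (a list of dialogs in the request, a `generation` object in the response) are assumptions about a Llama 2 chat endpoint that you should verify against the model you deploy.

```python
import json


class Llama2ChatContentHandler:
    """Standalone illustration of a content handler for a JSON-based LLM endpoint."""

    content_type = "application/json"  # format of the request body sent to the endpoint
    accepts = "application/json"       # format requested for the response body

    def transform_input(self, prompt: str, model_kwargs: dict) -> bytes:
        # Assumed request schema: one dialog containing a single user turn.
        payload = {
            "inputs": [[{"role": "user", "content": prompt}]],
            "parameters": dict(model_kwargs),
        }
        return json.dumps(payload).encode("utf-8")

    def transform_output(self, output) -> str:
        # Accept either raw bytes or a file-like body that exposes read().
        raw = output.read() if hasattr(output, "read") else output
        response = json.loads(raw.decode("utf-8"))
        # Assumed response schema: [{"generation": {"role": ..., "content": ...}}]
        return response[0]["generation"]["content"]


handler = Llama2ChatContentHandler()
request_body = handler.transform_input("What is RAG?", {"max_new_tokens": 64})
fake_response = json.dumps(
    [{"generation": {"role": "assistant", "content": "Retrieval Augmented Generation."}}]
).encode("utf-8")
print(handler.transform_output(fake_response))
```

With LangChain installed, these two methods would typically live on a subclass of its LLMContentHandler base class, and an instance would be passed to SagemakerEndpoint. If the model you choose uses a different payload format, only the two transform methods need to change.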

Now you’re ready to interact with the financial document.

- Use the following query and prompt template to ask questions regarding the document:
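For illustration, a prompt template for this kind of question answering might look like the following sketch. The template wording, the `build_prompt` helper, and the sample question and passage are all hypothetical and are not taken from the notebook; the point is only to show how retrieved passages and the question are combined into a single prompt.

```python
PROMPT_TEMPLATE = """Use the following pieces of context to answer the question at the end. If you don't know the answer, say that you don't know; do not make up an answer.

{context}

Question: {question}
Answer:"""


def build_prompt(question, documents):
    """Join the retrieved passages and substitute them into the template."""
    passages = [doc.strip() for doc in documents if doc.strip()]
    context = "\n\n".join(passages)
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


retrieved = [
    "Net sales increased 9% in the fiscal year compared to the prior year.",
    "   ",  # blank passages are dropped before the prompt is assembled
]
print(build_prompt("How did net sales change in the fiscal year?", retrieved))
```

Instructing the model to admit when the context does not contain the answer is a common way to reduce unsupported responses in RAG applications.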

Cleanup

To avoid incurring future costs, delete the SageMaker inference endpoints that you created in this notebook. You can do so by running the following in your SageMaker Studio notebook:
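One way to script the cleanup is sketched below, assuming the boto3 SageMaker client. The helper name `delete_endpoints` and the endpoint names in the commented usage are hypothetical; substitute the names of the endpoints you deployed. The client is passed in as an argument so that the function can be exercised without AWS credentials.

```python
def delete_endpoints(sm_client, endpoint_names):
    """Delete each endpoint and its endpoint configuration.

    sm_client is expected to behave like boto3.client("sagemaker").
    Returns the list of endpoint names that were deleted.
    """
    deleted = []
    for name in endpoint_names:
        # Look up the configuration first; it cannot be resolved after the endpoint is gone.
        config_name = sm_client.describe_endpoint(EndpointName=name)["EndpointConfigName"]
        sm_client.delete_endpoint(EndpointName=name)
        sm_client.delete_endpoint_config(EndpointConfigName=config_name)
        deleted.append(name)
    return deleted


# Usage in a notebook with AWS credentials (endpoint names are placeholders):
# import boto3
# delete_endpoints(boto3.client("sagemaker"), ["my-llama-2-endpoint", "my-gpt-j-endpoint"])
```

Deleting an endpoint stops the charges for its instances; the associated model resource is left in place by this sketch and can be removed separately if it is no longer needed.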

If you created an OpenSearch Serverless collection for this example and no longer require it, you can delete it via the OpenSearch Serverless console.

Conclusion

In this post, we discussed using RAG as an approach to provide domain-specific context to LLMs. We showed how to use SageMaker JumpStart to build a RAG-based contextual chatbot for a financial services organization using Llama 2 and OpenSearch Serverless with a vector engine as the vector data store. This method refines text generation using Llama 2 by dynamically sourcing relevant context. We’re excited to see you bring your custom data and innovate with this RAG-based strategy on SageMaker JumpStart!

About the authors

Sunil Padmanabhan is a Startup Solutions Architect at AWS. As a former startup founder and CTO, he is passionate about machine learning and focuses on helping startups leverage AI/ML for their business outcomes and design and deploy ML/AI solutions at scale.

Suleman Patel is a Senior Solutions Architect at Amazon Web Services (AWS), with a special focus on Machine Learning and Modernization. Leveraging his expertise in both business and technology, Suleman helps customers design and build solutions that tackle real-world business problems. When he’s not immersed in his work, Suleman loves exploring the outdoors, taking road trips, and cooking up delicious dishes in the kitchen.
