Machine Learning with MATLAB and Amazon SageMaker

This post is written in collaboration with Brad Duncan, Rachel Johnson, and Richard Alcock from MathWorks.

MATLAB is a popular programming tool for a wide range of applications, such as data processing, parallel computing, automation, simulation, machine learning, and artificial intelligence. It’s heavily used in many industries such as automotive, aerospace, communication, and manufacturing. In recent years, MathWorks has brought many product offerings into the cloud, especially on Amazon Web Services (AWS). For more details about MathWorks cloud products, see MATLAB and Simulink in the Cloud or email MathWorks.

In this post, we bring MATLAB’s machine learning capabilities into Amazon SageMaker, which has several significant benefits:

- Compute resources: Using the high-performance computing environment offered by SageMaker can speed up machine learning training.

- Collaboration: MATLAB and SageMaker together provide a robust platform that teams can use to collaborate effectively on building, testing, and deploying machine learning models.

- Deployment and accessibility: Models can be deployed as SageMaker real-time endpoints, making them readily accessible for other applications to process live streaming data.

We show you how to train a MATLAB machine learning model as a SageMaker training job and then deploy the model as a SageMaker real-time endpoint so it can process live, streaming data.

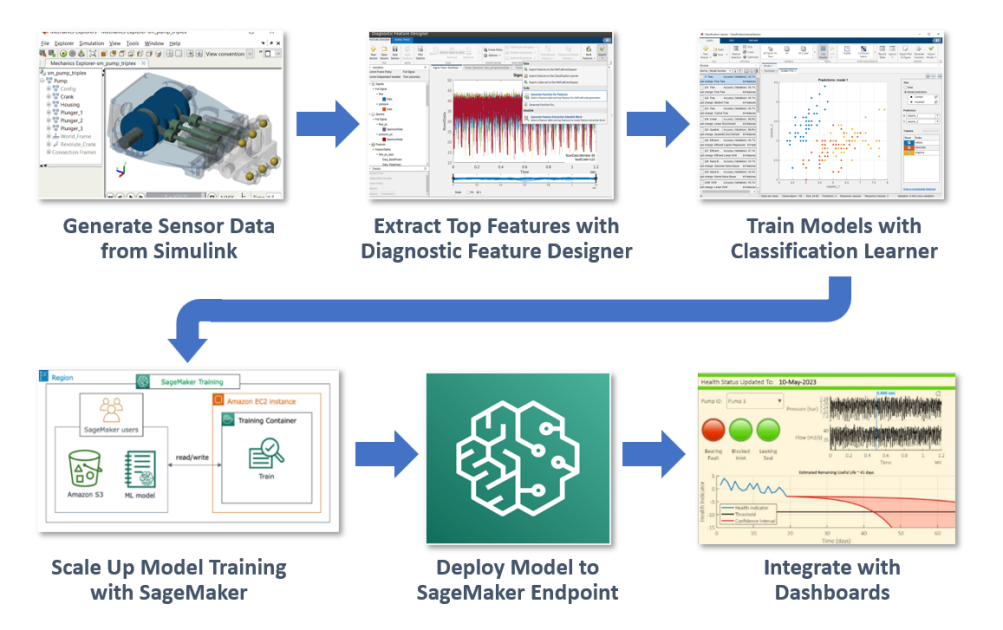

To do this, we’ll use a predictive maintenance example where we classify faults in an operational pump that’s streaming live sensor data. We have access to a large repository of labeled data generated from a Simulink simulation that has three possible fault types in various possible combinations (for example, one healthy and seven faulty states). Because we have a model of the system and faults are rare in operation, we can take advantage of simulated data to train our algorithm. The model can be tuned to match operational data from our real pump using parameter estimation techniques in MATLAB and Simulink.

Our objective is to demonstrate the combined power of MATLAB and Amazon SageMaker using this fault classification example.

We start by training a classifier model on our desktop with MATLAB. First, we extract features from a subset of the full dataset using the Diagnostic Feature Designer app, and then run the model training locally with a MATLAB decision tree model. Once we’re satisfied with the parameter settings, we can generate a MATLAB function and send the job along with the dataset to SageMaker. This allows us to scale up the training process to accommodate much larger datasets. After training our model, we deploy it as a live endpoint that can be integrated into a downstream app or dashboard, such as a MATLAB Web App.

This example summarizes each step, providing a practical understanding of how to use MATLAB and Amazon SageMaker for machine learning tasks. The full code and description for the example are available in this repository.

Prerequisites

- Working environment of MATLAB R2023a or later with MATLAB Compiler and the Statistics and Machine Learning Toolbox on Linux. Here is a quick guide on how to run MATLAB on AWS.

- Docker set up in an Amazon Elastic Compute Cloud (Amazon EC2) instance where MATLAB is running. The instance can run Ubuntu or another Linux distribution.

- Installation of AWS Command Line Interface (AWS CLI), AWS Configure, and Python 3.

  - The AWS CLI should already be installed if you followed the installation guide from the first prerequisite.

  - Set up AWS Configure to interact with AWS resources.

  - Verify your Python 3 installation by running the python -V or python --version command in your terminal. Install Python if necessary.

- Copy this repo to a folder on your Linux machine by running:

- Check the permission on the repo folder. If it doesn’t have write permission, run the following shell command:

- Build the MATLAB training container and push it to the Amazon Elastic Container Registry (Amazon ECR).

  - Navigate to folder docker

  - Create an Amazon ECR repo using the AWS CLI (replace REGION with your preferred AWS region)

  - Run the following docker command:

- Open MATLAB and open the live script called PumpFaultClassificationMATLABSageMaker.mlx in folder examples/PumpFaultClassification. Make this folder your current working folder in MATLAB.

Part 1: Data preparation & feature extraction

The first step in any machine learning project is to prepare your data. MATLAB provides a wide range of tools for importing, cleaning, and extracting features from your data.

The SensorData.mat dataset contains 240 records. Each record has two timetables: flow and pressure. The target column is faultcode, which is a binary representation of three possible fault combinations in the pump. Each of these time series tables has 1,201 rows, which mimic 1.2 seconds of pump flow and pressure measurement with a 0.001-second increment.
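The numbers above can be checked with a few lines of MATLAB. This is only a sketch: it assumes the three fault types are stored as the bits of an integer code from 0 to 7, which may differ from the exact encoding used in SensorData.mat.

```matlab
% Time base: 1.2 s of data at a 0.001 s increment, both endpoints included.
dt = 0.001;                      % sample increment in seconds
t = (0:1200)' * dt;              % 0, 0.001, ..., 1.2
numSamples = numel(t);           % 1201 rows per timetable

% Assumption: each of the three fault types is one bit of an integer code.
faultCodes = (0:7)';                         % all 2^3 combinations
faultBits = dec2bin(faultCodes, 3) - '0';    % 8-by-3 matrix of 0/1 flags
isFaulty = any(faultBits, 2);                % true when at least one fault is active
numHealthyStates = sum(~isFaulty);           % 1
numFaultyStates = sum(isFaulty);             % 7
```

Three independent fault flags give eight combinations, which matches the one healthy and seven faulty states mentioned earlier.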

Next, the Diagnostic Feature Designer app allows you to extract, visualize, and rank a variety of features from the data. Here, you use Auto Features, which quickly extracts a broad set of time and frequency domain features from the dataset and ranks the top candidates for model training. You can then export a MATLAB function that will recompute the top 15 ranked features from new input data. Let’s call this function extractFeaturesTraining. This function can be configured to take in data all in one batch or as streaming data.

This function produces a table of features with associated fault codes, as shown in the following figure.
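To give a feel for what such a feature table contains, here is a minimal hand-written sketch that computes four simple time-domain features from one signal. It is not the function exported by Diagnostic Feature Designer: the synthetic flow signal and the feature names (flow_mean, flow_std, flow_rms, flow_peak2peak) are assumptions made for illustration.

```matlab
% Synthetic stand-in for one flow measurement (not the real SensorData.mat).
t = (0:1200)' * 0.001;            % 1.2 s at a 0.001 s increment
flow = 5 + sin(2*pi*10*t);        % constant offset plus a 10 Hz oscillation

% One row of simple time-domain features, using base MATLAB functions only.
features = table( ...
    mean(flow), ...               % average level
    std(flow), ...                % spread around the average
    sqrt(mean(flow.^2)), ...      % root-mean-square value
    max(flow) - min(flow), ...    % peak-to-peak amplitude
    'VariableNames', {'flow_mean', 'flow_std', 'flow_rms', 'flow_peak2peak'});
```

The exported function does the same kind of work for the 15 top-ranked features and returns one row per record.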

Part 2: Organize data for SageMaker

Next, you need to organize the data in a way that SageMaker can use for machine learning training. Typically, this involves splitting the data into training and validation sets and splitting the predictor data from the target response.

At this stage, other more complex data cleaning and filtering operations might be required. In this example, the data is already clean. If the data processing is very complex and time consuming, SageMaker processing jobs can be used to run it separately from SageMaker training, so that processing and training become two distinct steps.

trainPredictors = trainingData(:,2:end);

trainResponse = trainingData(:,1);
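The train and validation split described above can be sketched as follows. The feature table here is random stand-in data with hypothetical column names, and the 80/20 holdout ratio is an assumption rather than a value taken from the example.

```matlab
rng(0);                                        % reproducible split
numRecords = 240;

% Stand-in feature table: response in column 1, predictors in columns 2:end.
featureTable = array2table(rand(numRecords, 4), ...
    'VariableNames', {'faultcode', 'f1', 'f2', 'f3'});
featureTable.faultcode = randi([0 7], numRecords, 1);

% Hold out roughly 20% of the records for validation (assumed ratio).
holdout = cvpartition(numRecords, 'HoldOut', 0.2);
trainingData = featureTable(training(holdout), :);
validationData = featureTable(test(holdout), :);

% Separate predictors from the target response, as in the lines above.
trainPredictors = trainingData(:, 2:end);
trainResponse = trainingData(:, 1);
```

cvpartition is part of the Statistics and Machine Learning Toolbox, which the prerequisites already require.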

Part 3: Train and test a machine learning model in MATLAB

Before moving to SageMaker, it’s a good idea to build and test the machine learning model locally in MATLAB. This allows you to quickly iterate and debug the model. You can set up and train a simple decision tree classifier locally.

classifierModel = fitctree(trainPredictors, trainResponse, OptimizeHyperparameters="auto");

The training job here should take less than a minute to finish and generates some graphs to indicate the training progress. After the training is finished, a MATLAB machine learning model is produced. The Classification Learner app can be used to try many types of classification models and tune them for best performance, then produce the needed code to replace the model training code above.

After checking the accuracy metrics for the locally trained model, we can move the training into Amazon SageMaker.
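One way to check accuracy locally is to score a held-out set and look at the confusion matrix. The sketch below uses synthetic two-class data instead of the pump features, and it skips hyperparameter optimization to stay fast; both are assumptions made so the example is self-contained.

```matlab
rng(1);                                         % reproducible data and split

% Synthetic two-class data: two well-separated clusters in two dimensions.
n = 200;
X = [randn(n, 2); randn(n, 2) + 3];
y = [zeros(n, 1); ones(n, 1)];

% Stratified holdout so both classes appear in the test set.
holdout = cvpartition(y, 'HoldOut', 0.25);
model = fitctree(X(training(holdout), :), y(training(holdout)));

% Score the held-out records and summarize the results.
actual = y(test(holdout));
predicted = predict(model, X(test(holdout), :));
accuracy = mean(predicted == actual);           % fraction of correct labels
confusion = confusionmat(actual, predicted);    % rows: actual, columns: predicted
```

The diagonal of the confusion matrix counts correct predictions per class, which is often more informative than a single accuracy number when some fault states are rare.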

Part 4: Train the model in Amazon SageMaker

After you’re satisfied with the model, you can train it at scale using SageMaker. To begin calling SageMaker SDKs, you need to initiate a SageMaker session.

session = sagemaker.Session();

Specify a SageMaker execution IAM role that training jobs and endpoint hosting will use.

role = "arn:aws:iam::ACCOUNT:role/service-role/AmazonSageMaker-ExecutionRole-XXXXXXXXXXXXXXX";

From MATLAB, save the training data as a .csv file to an Amazon Simple Storage Service (Amazon S3) bucket.

writetable(trainingData,'pump_training_data.csv');

trainingDataLocation = "s3://" + session.DefaultBucket + "/cooling_system/input/pump_training";

copyfile("pump_training_data.csv", trainingDataLocation);
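Before uploading, it can be worth confirming that the CSV file round-trips cleanly. The following sketch writes a tiny stand-in table to a temporary file and reads it back; the table contents and column names are hypothetical, and no S3 access is involved.

```matlab
% Tiny stand-in for the training table: response first, then predictors.
trainingData = table([0; 1; 3], [0.1; 0.2; 0.3], [5; 6; 7], ...
    'VariableNames', {'faultcode', 'f1', 'f2'});

% Write to a temporary CSV file and read it back.
csvFile = [tempname '.csv'];
writetable(trainingData, csvFile);
roundTrip = readtable(csvFile);

% Compare column names, row count, and numeric values.
sameNames = isequal(roundTrip.Properties.VariableNames, ...
    trainingData.Properties.VariableNames);
sameHeight = height(roundTrip) == height(trainingData);
maxDifference = max(abs(roundTrip{:, :} - trainingData{:, :}), [], 'all');

delete(csvFile);                                % remove the temporary file
```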

Create a SageMaker Estimator

Next, you need to create a SageMaker estimator and pass all the necessary parameters to it, such as a training docker image, training function, environment variables, training instance size, and so on. The training image URI should be the Amazon ECR URI you created in the prerequisite step with the format ACCOUNT.dkr.ecr.us-east-1.amazonaws.com/sagemaker-matlab-training-r2023a:latest. The training function should be provided at the bottom of the MATLAB live script.
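The image URI can be assembled from its parts so that the account and Region are easy to change. This sketch covers only the string construction; the account ID is a placeholder, and the estimator call itself is not shown because its parameters are defined in the live script.

```matlab
% Placeholder values: replace with your own account ID and Region.
account = "123456789012";
region = "us-east-1";
repository = "sagemaker-matlab-training-r2023a";
tag = "latest";

% Amazon ECR image URI in the format given above.
trainingImage = account + ".dkr.ecr." + region + ".amazonaws.com/" ...
    + repository + ":" + tag;
```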

Submit SageMaker training job

Calling the fit method from the estimator submits the training job into SageMaker.

est.fit(training=struct(Location=trainingDataLocation, ContentType="text/csv"))

You can also check the training job status from the SageMaker console:

After the training job finishes, selecting the job link takes you to the job description page where you can see the MATLAB model saved in the dedicated S3 bucket:

Part 5: Deploy the model as a real-time SageMaker endpoint

After training, you can deploy the model as a real-time SageMaker endpoint, which you can use to make predictions in real time. To do this, call the deploy method from the estimator. This is where you can set up the desired instance size for hosting depending on the workload.

Behind the scenes, this step builds an inference docker image and pushes it to the Amazon ECR repository; nothing is required from the user to build the inference container. The image contains all the necessary information to serve the inference request, such as model location, MATLAB authentication information, and algorithms. After that, Amazon SageMaker creates a SageMaker endpoint configuration and finally deploys the real-time endpoint. The endpoint can be monitored in the SageMaker console and can be terminated anytime if it’s no longer used.

Part 6: Test the endpoint

Now that the endpoint is up and running, you can test the endpoint by giving it a few records to predict. Use the following code to select 10 records from the training data and send them to the endpoint for prediction. The prediction result is sent back from the endpoint and shown in the following image.
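The record selection step might look like the sketch below, which picks 10 distinct rows at random and separates predictors from the known fault codes. The training table here is random stand-in data with hypothetical column names, and the call that sends the records to the endpoint is left out because its signature is not shown in this post.

```matlab
rng(2);                                         % reproducible selection

% Stand-in training table: response in column 1, predictors in columns 2:end.
trainingData = array2table([randi([0 7], 240, 1), rand(240, 3)], ...
    'VariableNames', {'faultcode', 'f1', 'f2', 'f3'});

% Pick 10 distinct records at random.
sampleIdx = randperm(height(trainingData), 10);
samplePredictors = trainingData(sampleIdx, 2:end);   % payload for the endpoint
sampleResponse = trainingData(sampleIdx, 1);         % known labels for comparison
```

Keeping sampleResponse alongside the payload makes it easy to compare the endpoint's predictions with the known fault codes once they come back.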

Part 7: Dashboard integration

The SageMaker endpoint can be called by many native AWS services. It can also be used as a standard REST API if deployed together with an AWS Lambda function and Amazon API Gateway, which can be integrated with any web application. For this particular use case, you can use streaming ingestion with Amazon SageMaker Feature Store and Amazon Managed Streaming for Apache Kafka (Amazon MSK) to make machine learning-backed decisions in near real time. Another possible integration is using a combination of Amazon Kinesis, SageMaker, and Apache Flink to build a managed, reliable, scalable, and highly available application that’s capable of real-time inferencing on a data stream.

After algorithms are deployed to a SageMaker endpoint, you might want to visualize them using a dashboard that displays streaming predictions in real time. In the custom MATLAB web app that follows, you can see pressure and flow data by pump, and live fault predictions from the deployed model.

This dashboard includes a remaining useful life (RUL) model to predict the time to failure for each pump in question. To learn how to train RUL algorithms, see Predictive Maintenance Toolbox.

Clean Up

After you run this solution, make sure you clean up any unneeded AWS resources to avoid unexpected costs. You can clean up these resources using the SageMaker Python SDK or the AWS Management Console for the specific services used here (SageMaker, Amazon ECR, and Amazon S3). By deleting these resources, you prevent further charges for resources you’re no longer using.

Conclusion

We’ve demonstrated how you can bring MATLAB to SageMaker for a pump predictive maintenance use case that covers the entire machine learning lifecycle. SageMaker provides a fully managed environment for running machine learning workloads and deploying models, with a wide selection of compute instances serving various needs.

Disclaimer: The code used in this post is owned and maintained by MathWorks. Refer to the license terms in the GitHub repo. For any issues with the code or feature requests, please open a GitHub issue in the repository.

About the Authors

Brad Duncan is the product manager for machine learning capabilities in the Statistics and Machine Learning Toolbox at MathWorks. He works with customers to apply AI in new areas of engineering such as incorporating virtual sensors in engineered systems, building explainable machine learning models, and standardizing AI workflows using MATLAB and Simulink. Before coming to MathWorks he led teams for 3D simulation and optimization of vehicle aerodynamics, user experience for 3D simulation, and product management for simulation software. Brad is also a guest lecturer at Tufts University in the area of vehicle aerodynamics.

Richard Alcock is the senior development manager for Cloud Platform Integrations at MathWorks. In this role, he is instrumental in seamlessly integrating MathWorks products into cloud and container platforms. He creates solutions that enable engineers and scientists to harness the full potential of MATLAB and Simulink in cloud-based environments. He was previously a software engineer at MathWorks, developing solutions to support parallel and distributed computing workflows.

Rachel Johnson is the product manager for predictive maintenance at MathWorks, and is responsible for overall product strategy and marketing. She was previously an application engineer directly supporting the aerospace industry on predictive maintenance projects. Prior to MathWorks, Rachel was an aerodynamics and propulsion simulation engineer for the US Navy. She also spent several years teaching math, physics, and engineering.

Shun Mao is a Senior AI/ML Partner Solutions Architect in the Emerging Technologies team at Amazon Web Services. He is passionate about working with enterprise customers and partners to design, deploy, and scale AI/ML applications to derive their business values. Outside of work, he enjoys fishing, traveling, and playing Ping-Pong.

Ramesh Jatiya is a Solutions Architect in the Independent Software Vendor (ISV) team at Amazon Web Services. He is passionate about working with ISV customers to design, deploy, and scale their applications in the cloud to derive their business values. He is also pursuing an MBA in Machine Learning and Business Analytics from Babson College, Boston. Outside of work, he enjoys running, playing tennis, and cooking.
