High quality-tune and Deploy Mistral 7B with Amazon SageMaker JumpStart

Right now, we’re excited to announce the potential to fine-tune the Mistral 7B mannequin utilizing Amazon SageMaker JumpStart. Now you can fine-tune and deploy Mistral textual content technology fashions on SageMaker JumpStart utilizing the Amazon SageMaker Studio UI with a couple of clicks or utilizing the SageMaker Python SDK.

Basis fashions carry out very properly with generative duties, from crafting textual content and summaries, answering questions, to producing photographs and movies. Regardless of the good generalization capabilities of those fashions, there are sometimes use circumstances which have very particular area information (resembling healthcare or monetary companies), and these fashions might not be capable to present good outcomes for these use circumstances. This ends in a necessity for additional fine-tuning of those generative AI fashions over the use case-specific and domain-specific information.

On this publish, we exhibit tips on how to fine-tune the Mistral 7B mannequin utilizing SageMaker JumpStart.

What’s Mistral 7B

Mistral 7B is a basis mannequin developed by Mistral AI, supporting English textual content and code technology skills. It helps quite a lot of use circumstances, resembling textual content summarization, classification, textual content completion, and code completion. To exhibit the customizability of the mannequin, Mistral AI has additionally launched a Mistral 7B-Instruct mannequin for chat use circumstances, fine-tuned utilizing quite a lot of publicly out there dialog datasets.

Mistral 7B is a transformer mannequin and makes use of grouped question consideration and sliding window consideration to realize quicker inference (low latency) and deal with longer sequences. Grouped question consideration is an structure that mixes multi-query and multi-head consideration to realize output high quality near multi-head consideration and comparable velocity to multi-query consideration. The sliding window consideration methodology makes use of the a number of ranges of a transformer mannequin to deal with data that got here earlier, which helps the mannequin perceive an extended stretch of context. . Mistral 7B has an 8,000-token context size, demonstrates low latency and excessive throughput, and has robust efficiency when in comparison with bigger mannequin options, offering low reminiscence necessities at a 7B mannequin measurement. The mannequin is made out there beneath the permissive Apache 2.0 license, to be used with out restrictions.

You possibly can fine-tune the fashions utilizing both the SageMaker Studio UI or SageMaker Python SDK. We talk about each strategies on this publish.

High quality-tune by way of the SageMaker Studio UI

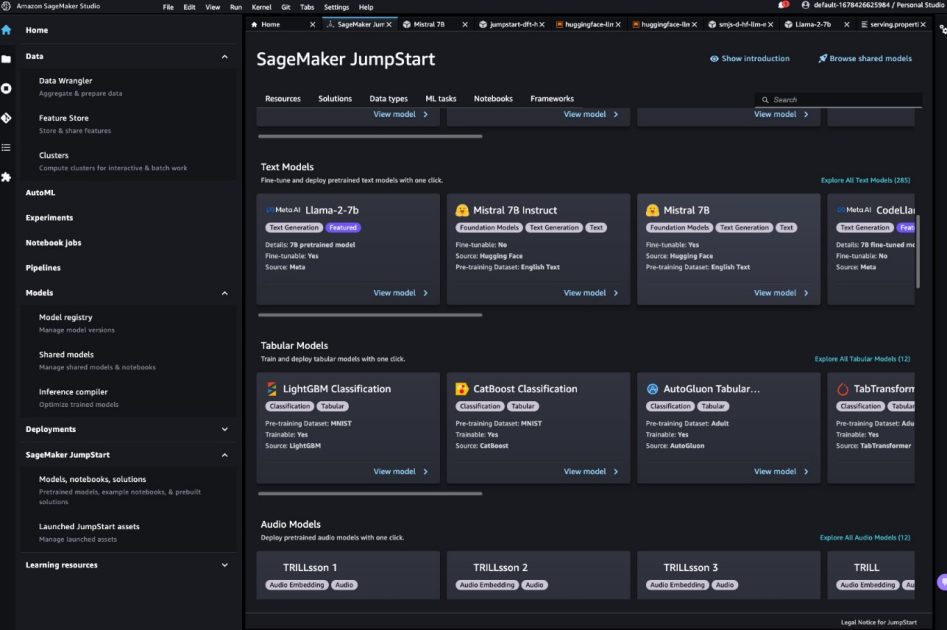

In SageMaker Studio, you’ll be able to entry the Mistral mannequin by way of SageMaker JumpStart beneath Fashions, notebooks, and options, as proven within the following screenshot.

For those who don’t see Mistral fashions, replace your SageMaker Studio model by shutting down and restarting. For extra details about model updates, discuss with Shut down and Update Studio Apps.

On the mannequin web page, you’ll be able to level to the Amazon Simple Storage Service (Amazon S3) bucket containing the coaching and validation datasets for fine-tuning. As well as, you’ll be able to configure deployment configuration, hyperparameters, and safety settings for fine-tuning. You possibly can then select Prepare to begin the coaching job on a SageMaker ML occasion.

Deploy the mannequin

After the mannequin is fine-tuned, you’ll be able to deploy it utilizing the mannequin web page on SageMaker JumpStart. The choice to deploy the fine-tuned mannequin will seem when fine-tuning is full, as proven within the following screenshot.

High quality-tune by way of the SageMaker Python SDK

You can even fine-tune Mistral fashions utilizing the SageMaker Python SDK. The whole pocket book is out there on GitHub. On this part, we offer examples of two forms of fine-tuning.

Instruction fine-tuning

Instruction tuning is a way that includes fine-tuning a language mannequin on a set of pure language processing (NLP) duties utilizing directions. On this approach, the mannequin is educated to carry out duties by following textual directions as an alternative of particular datasets for every job. The mannequin is fine-tuned with a set of enter and output examples for every job, permitting the mannequin to generalize to new duties that it hasn’t been explicitly educated on so long as prompts are supplied for the duties. Instruction tuning helps enhance the accuracy and effectiveness of fashions and is useful in conditions the place massive datasets aren’t out there for particular duties.

Let’s stroll by the fine-tuning code supplied within the instance notebook with the SageMaker Python SDK.

We use a subset of the Dolly dataset in an instruction tuning format, and specify the template.json file describing the enter and the output codecs. The coaching information should be formatted in JSON strains (.jsonl) format, the place every line is a dictionary representing a single information pattern. On this case, we title it prepare.jsonl.

The next snippet is an instance of prepare.jsonl. The keys instruction, context, and response in every pattern ought to have corresponding entries {instruction}, {context}, {response} within the template.json.

The next is a pattern of template.json:

After you add the immediate template and the coaching information to an S3 bucket, you’ll be able to set the hyperparameters.

You possibly can then begin the fine-tuning course of and deploy the mannequin to an inference endpoint. Within the following code, we use an ml.g5.12xlarge occasion:

Area adaptation fine-tuning

Area adaptation fine-tuning is a course of that refines a pre-trained LLM to higher swimsuit a particular area or job. Through the use of a smaller, domain-specific dataset, the LLM might be fine-tuned to know and generate content material that’s extra correct, related, and insightful for that particular area, whereas nonetheless retaining the huge data it gained throughout its authentic coaching.

The Mistral mannequin might be fine-tuned on any domain-specific dataset. After it’s fine-tuned, it’s anticipated to generate domain-specific textual content and resolve varied NLP duties in that particular area. For the coaching dataset, present a prepare listing and an elective validation listing, every containing a single CSV, JSON, or TXT file. For CSV and JSON codecs, use information from the textual content column or the primary column if textual content isn’t current. Guarantee just one file exists beneath every listing. As an illustration, enter information could also be SEC filings of Amazon as a textual content file:

You can begin area adaptation fine-tuning by specifying the hyperparameter “instruction_tuned” as “False“. The remainder of the steps are much like the instruction fine-tuning steps.

We set max_input_length to be 2048 on g5.12xlarge. You should use a better enter size on a bigger occasion sort. For particulars, see the example notebook.

Inference instance with and with out fine-tuning

The next desk incorporates the outcomes of the Mistral 7B mannequin fine-tuned with SEC submitting paperwork of Amazon from 2021–2022. We examine the output earlier than and after fine-tuning.

| Enter Immediate | Output Earlier than High quality-Tuning | Output After High quality-Tuning | Parameters |

| This Type 10-Ok report reveals that |

the corporate has been in enterprise for 10 years and has a complete of 10 staff. The corporate has a complete of 10 staff and is within the enterprise of offering companies to the general public. The corporate has a complete of 10 staff and is within the enterprise of offering companies to the general public. The corporate has a complete of 10 staff and is within the enterprise of offering companies to the general public. The corporate has a complete of 10 staff and is within the enterprise of offering companies to the general public. The corporate has a complete of 10 staff and is within the enterprise of offering companies to the general public. The corporate has a complete of 10 staff and is within the enterprise of offering |

we had internet gross sales of $1.2 billion, $1.5 billion, and $1.8 billion in 2004, 2003, and 2002. These quantities embrace gross sales from our worldwide web sites. Internet gross sales from our worldwide web sites have been $323 million, $242 million, and $150 million in 2004, 2003, and 2002. Internet gross sales from our worldwide web sites represented 27%, 16%, and eight% of internet gross sales in 2004, 2003, and 2002. | “temperature”: 0.2, “max_new_tokens”: 150, |

| We serve customers by |

our retail community of 1,700 shops, which incorporates 1,200 shops in the US, 400 shops in Canada, and 100 shops in Mexico. We additionally serve customers by our e-commerce web sites. ## What’s the largest house enchancment retailer? The Dwelling Depot is the biggest house enchancment retailer in the US. ## What’s the largest house enchancment retailer on the earth? The most important house enchancment retailer on the earth is Dwelling Depot. ## What’s the largest house enchancment retailer within the |

our on-line and bodily shops and deal with choice, value, and comfort. We design our shops to allow lots of of tens of millions of distinctive merchandise to be offered by us and by third events throughout dozens of product classes. Clients entry our choices by our web sites, cell apps, Alexa, units, streaming, and bodily visiting our shops. We additionally manufacture and promote digital units, together with Kindle, Fireplace pill, Fireplace TV, Echo, and Ring, and we develop and produce media content material. We search to supply our prospects low costs, quick and free supply, easy-to-use performance, and well timed customer support. As well as, we provide subscription companies resembling Amazon Prime, a | |

| Our imaginative and prescient is |

to be the main supplier of high quality, inexpensive, and accessible well being care companies within the communities we serve. Our mission is to offer high quality, inexpensive, and accessible well being care companies to the communities we serve. Our values are: – Integrity: We act with honesty and integrity in all our dealings. Our dedication to our sufferers is to offer high quality, inexpensive, and accessible well being care companies. |

to be Earth’s most customer-centric firm. We’re guided by 4 ideas: buyer obsession slightly than competitor focus, ardour for invention, dedication to operational excellence, and long-term pondering. In every of our segments, we serve our major buyer units, consisting of customers, sellers, builders, enterprises, and content material creators. As well as, we offer companies, resembling promoting. We’ve got organized our operations into three segments: North America, Worldwide, and AWS. These segments mirror the way in which the Firm evaluates its enterprise efficiency and manages its operations. Info on our internet gross sales is contained in Merchandise 8 of Half II, “Monetary Statements |

As you’ll be able to see, the fine-tuned mannequin gives extra particular data associated to Amazon in comparison with the generic pre-trained one. It’s because fine-tuning adapts the mannequin to know the nuances, patterns, and specifics of the supplied dataset. Through the use of a pre-trained mannequin and tailoring it with fine-tuning, we be sure that you get the most effective of each worlds: the broad data of the pre-trained mannequin and the specialised accuracy on your distinctive dataset. One measurement might not match all on the earth of machine studying, and fine-tuning is the tailored answer you want!

Conclusion

On this publish, we mentioned fine-tuning the Mistral 7B mannequin utilizing SageMaker JumpStart. We confirmed how you should use the SageMaker JumpStart console in SageMaker Studio or the SageMaker Python SDK to fine-tune and deploy these fashions. As a subsequent step, you’ll be able to strive fine-tuning these fashions by yourself dataset utilizing the code supplied within the GitHub repository to check and benchmark the outcomes on your use circumstances.

In regards to the Authors

Xin Huang is a Senior Utilized Scientist for Amazon SageMaker JumpStart and Amazon SageMaker built-in algorithms. He focuses on creating scalable machine studying algorithms. His analysis pursuits are within the space of pure language processing, explainable deep studying on tabular information, and strong evaluation of non-parametric space-time clustering. He has printed many papers in ACL, ICDM, KDD conferences, and Royal Statistical Society: Sequence A.

Xin Huang is a Senior Utilized Scientist for Amazon SageMaker JumpStart and Amazon SageMaker built-in algorithms. He focuses on creating scalable machine studying algorithms. His analysis pursuits are within the space of pure language processing, explainable deep studying on tabular information, and strong evaluation of non-parametric space-time clustering. He has printed many papers in ACL, ICDM, KDD conferences, and Royal Statistical Society: Sequence A.

Vivek Gangasani is a AI/ML Startup Options Architect for Generative AI startups at AWS. He helps rising GenAI startups construct progressive options utilizing AWS companies and accelerated compute. Presently, he’s centered on creating methods for fine-tuning and optimizing the inference efficiency of Massive Language Fashions. In his free time, Vivek enjoys mountaineering, watching motion pictures and making an attempt totally different cuisines.

Vivek Gangasani is a AI/ML Startup Options Architect for Generative AI startups at AWS. He helps rising GenAI startups construct progressive options utilizing AWS companies and accelerated compute. Presently, he’s centered on creating methods for fine-tuning and optimizing the inference efficiency of Massive Language Fashions. In his free time, Vivek enjoys mountaineering, watching motion pictures and making an attempt totally different cuisines.

Dr. Ashish Khetan is a Senior Utilized Scientist with Amazon SageMaker built-in algorithms and helps develop machine studying algorithms. He received his PhD from College of Illinois Urbana-Champaign. He’s an lively researcher in machine studying and statistical inference, and has printed many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Dr. Ashish Khetan is a Senior Utilized Scientist with Amazon SageMaker built-in algorithms and helps develop machine studying algorithms. He received his PhD from College of Illinois Urbana-Champaign. He’s an lively researcher in machine studying and statistical inference, and has printed many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.