Spoken query answering and speech continuation utilizing a spectrogram-powered LLM – Google Analysis Weblog

The purpose of pure language processing (NLP) is to develop computational fashions that may perceive and generate pure language. By capturing the statistical patterns and buildings of text-based pure language, language fashions can predict and generate coherent and significant sequences of phrases. Enabled by the growing use of the extremely profitable Transformer mannequin structure and with coaching on giant quantities of textual content (with proportionate compute and mannequin measurement), giant language fashions (LLMs) have demonstrated exceptional success in NLP duties.

Nevertheless, modeling spoken human language stays a difficult frontier. Spoken dialog programs have conventionally been constructed as a cascade of automatic speech recognition (ASR), natural language understanding (NLU), response technology, and text-to-speech (TTS) programs. Nevertheless, so far there have been few succesful end-to-end programs for the modeling of spoken language: i.e., single fashions that may take speech inputs and generate its continuation as speech outputs.

Immediately we current a brand new method for spoken language modeling, known as Spectron, printed in “Spoken Question Answering and Speech Continuation Using Spectrogram-Powered LLM.” Spectron is the primary spoken language mannequin that’s skilled end-to-end to straight course of spectrograms as each enter and output, as a substitute of studying discrete speech representations. Utilizing solely a pre-trained textual content language mannequin, it may be fine-tuned to generate high-quality, semantically correct spoken language. Moreover, the proposed mannequin improves upon direct initialization in retaining the information of the unique LLM as demonstrated by means of spoken question answering datasets.

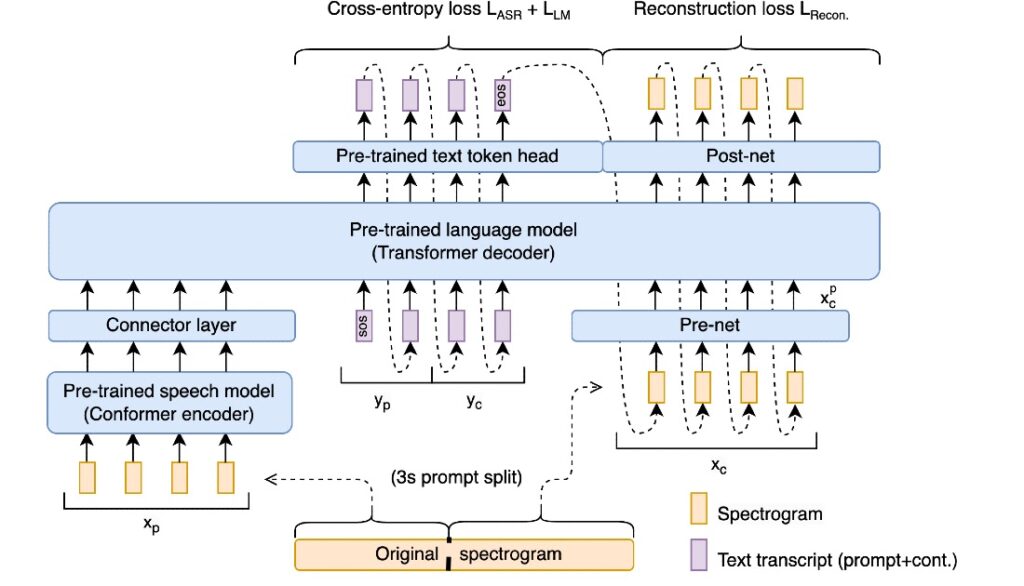

We present {that a} pre-trained speech encoder and a language mannequin decoder allow end-to-end coaching and state-of-the-art efficiency with out sacrificing representational constancy. Key to this can be a novel end-to-end coaching goal that implicitly supervises speech recognition, textual content continuation, and conditional speech synthesis in a joint method. A brand new spectrogram regression loss additionally supervises the mannequin to match the higher-order derivatives of the spectrogram within the time and frequency area. These derivatives specific data aggregated from a number of frames directly. Thus, they specific wealthy, longer-range details about the form of the sign. Our general scheme is summarized within the following determine:

Spectron structure

The structure is initialized with a pre-trained speech encoder and a pre-trained decoder language mannequin. The encoder is prompted with a speech utterance as enter, which it encodes into steady linguistic options. These options feed into the decoder as a prefix, and the entire encoder-decoder is optimized to collectively decrease a cross-entropy loss (for speech recognition and transcript continuation) and a novel reconstruction loss (for speech continuation). Throughout inference, one gives a spoken speech immediate, which is encoded after which decoded to provide each textual content and speech continuations.

Speech encoder

The speech encoder is a 600M-parameter conformer encoder pre-trained on large-scale data (12M hours). It takes the spectrogram of the supply speech as enter, producing a hidden illustration that comes with each linguistic and acoustic data. The enter spectrogram is first subsampled utilizing a convolutional layer after which processed by a sequence of conformer blocks. Every conformer block consists of a feed-forward layer, a self-attention layer, a convolution layer, and a second feed-forward layer. The outputs are handed by means of a projection layer to match the hidden representations to the embedding dimension of the language mannequin.

Language mannequin

We use a 350M or 1B parameter decoder language mannequin (for the continuation and question-answering duties, respectively) skilled within the method of PaLM 2. The mannequin receives the encoded options of the immediate as a prefix. Observe that that is the one connection between the speech encoder and the LM decoder; i.e., there is no such thing as a cross-attention between the encoder and the decoder. Not like most spoken language fashions, throughout coaching, the decoder is teacher-forced to foretell the textual content transcription, textual content continuation, and speech embeddings. To transform the speech embeddings to and from spectrograms, we introduce light-weight modules pre- and post-network.

By having the identical structure decode the intermediate textual content and the spectrograms, we acquire two advantages. First, the pre-training of the LM within the textual content area permits continuation of the immediate within the textual content area earlier than synthesizing the speech. Secondly, the anticipated textual content serves as intermediate reasoning, enhancing the standard of the synthesized speech, analogous to enhancements in text-based language fashions when utilizing intermediate scratchpads or chain-of-thought (CoT) reasoning.

Acoustic projection layers

To allow the language mannequin decoder to mannequin spectrogram frames, we make use of a multi-layer perceptron “pre-net” to mission the bottom fact spectrogram speech continuations to the language mannequin dimension. This pre-net compresses the spectrogram enter right into a decrease dimension, making a bottleneck that aids the decoding course of. This bottleneck mechanism prevents the mannequin from repetitively producing the identical prediction within the decoding course of. To mission the LM output from the language mannequin dimension to the spectrogram dimension, the mannequin employs a “post-net”, which can be a multi-layer perceptron. Each pre- and post-networks are two-layer multi-layer perceptrons.

Coaching goal

The coaching methodology of Spectron makes use of two distinct loss features: (i) cross-entropy loss, employed for each speech recognition and transcript continuation, and (ii) regression loss, employed for speech continuation. Throughout coaching, all parameters are up to date (speech encoder, projection layer, LM, pre-net, and post-net).

Audio samples

Following are examples of speech continuation and query answering from Spectron:

Speech Continuation |

|

| Immediate: | |

| Continuation: | |

| Immediate: | |

| Continuation: | |

| Immediate: | |

| Continuation: | |

| Immediate: | |

| Continuation: | |

Query Answering |

|

| Query: | |

| Reply: | |

| Query: | |

| Reply: | |

Efficiency

To empirically consider the efficiency of the proposed method, we performed experiments on the Libri-Light dataset. Libri-Mild is a 60k hour English dataset consisting of unlabelled speech readings from LibriVox audiobooks. We utilized a frozen neural vocoder known as WaveFit to transform the anticipated spectrograms into uncooked audio. We experiment with two duties, speech continuation and spoken query answering (QA). Speech continuation high quality is examined on the LibriSpeech check set. Spoken QA is examined on the Spoken WebQuestions datasets and a brand new check set named LLama questions, which we created. For all experiments, we use a 3 second audio immediate as enter. We evaluate our technique towards present spoken language fashions: AudioLM, GSLM, TWIST and SpeechGPT. For the speech continuation process, we use the 350M parameter model of LM and the 1B model for the spoken QA process.

For the speech continuation process, we consider our technique utilizing three metrics. The primary is log-perplexity, which makes use of an LM to guage the cohesion and semantic high quality of the generated speech. The second is mean opinion score (MOS), which measures how pure the speech sounds to human evaluators. The third, speaker similarity, makes use of a speaker encoder to measure how comparable the speaker within the output is to the speaker within the enter. Efficiency in all 3 metrics will be seen within the following graphs.

|

| Log-perplexity for completions of LibriSpeech utterances given a 3-second immediate. Decrease is healthier. |

|

| Speaker similarity between the immediate speech and the generated speech utilizing the speaker encoder. Increased is healthier. |

|

| MOS given by human customers on speech naturalness. Raters fee 5-scale subjective imply opinion rating (MOS) ranging between 0 – 5 in naturalness given a speech utterance. Increased is healthier. |

As will be seen within the first graph, our technique considerably outperforms GSLM and TWIST on the log-perplexity metric, and does barely higher than state-of-the-art strategies AudioLM and SpeechGPT. By way of MOS, Spectron exceeds the efficiency of all the opposite strategies aside from AudioLM. By way of speaker similarity, our technique outperforms all different strategies.

To guage the flexibility of the fashions to carry out query answering, we use two spoken query answering datasets. The primary is the LLama Questions dataset, which makes use of basic information questions in several domains generated utilizing the LLama2 70B LLM. The second dataset is the WebQuestions dataset which is a basic query answering dataset. For analysis we use solely questions that match into the three second immediate size. To compute accuracy, solutions are transcribed and in comparison with the bottom fact solutions in textual content kind.

|

| Accuracy for Query Answering on the LLama Questions and Spoken WebQuestions datasets. Accuracy is computed utilizing the ASR transcripts of spoken solutions. |

First, we observe that each one strategies have extra issue answering questions from the Spoken WebQuestions dataset than from the LLama questions dataset. Second, we observe that strategies centered round spoken language modeling resembling GSLM, AudioLM and TWIST have a completion-centric conduct quite than direct query answering which hindered their means to carry out QA. On the LLama questions dataset our technique outperforms all different strategies, whereas SpeechGPT may be very shut in efficiency. On the Spoken WebQuestions dataset, our technique outperforms all different strategies aside from SpeechGPT, which does marginally higher.

Acknowledgements

The direct contributors to this work embody Eliya Nachmani, Alon Levkovitch, Julian Salazar, Chulayutsh Asawaroengchai, Soroosh Mariooryad, RJ Skerry-Ryan and Michelle Tadmor Ramanovich. We additionally thank Heiga Zhen, Yifan Ding, Yu Zhang, Yuma Koizumi, Neil Zeghidour, Christian Frank, Marco Tagliasacchi, Nadav Bar, Benny Schlesinger and Blaise Aguera-Arcas.