Windows on Snapdragon Brings Hybrid AI to Apps on the Edge

Sponsored Content

Today, AI is everywhere, and generative AI is being touted as its killer app. We’re seeing large language models (LLMs), such as the generative pre-trained transformer (GPT) models behind ChatGPT, being used in new and creative ways.

LLMs were once relegated to powerful cloud servers, but round-trip latency can lead to poor user experiences, and the costs to process such large models in the cloud are increasing. For example, the cost of a search query, a common use case for generative AI, is estimated to increase tenfold compared to traditional search methods as models become more complex. Foundational general-purpose LLMs like GPT-4 and LaMDA, used in such searches, have achieved unprecedented levels of language understanding, generation capabilities, and world knowledge while pushing 100 billion parameters.

Issues like privacy, personalization, latency, and rising costs have led to the introduction of Hybrid AI, where developers distribute inference between devices and the cloud. A key ingredient for successful Hybrid AI is the modern dedicated AI engine, which can handle large models at the edge. For example, the Snapdragon® 8cx Gen3 Compute Platform powers many Windows on Snapdragon devices and features the Qualcomm® Hexagon™ NPU for running AI at the edge. Together with tools and SDKs that provide advanced quantization and compression techniques, developers can add hardware-accelerated inference to their Windows apps for models with billions of parameters. At the same time, the platform’s always-connected capability via 5G and Wi-Fi 6 provides access to cloud-based inference virtually anywhere.

With such tools at your disposal, let’s take a closer look at Hybrid AI and how you can take advantage of it.

Hybrid AI

Hybrid AI leverages the best of local and cloud-based inference so they can work together to deliver more powerful, efficient, and highly optimized AI. Simple (aka light) models run locally on the device, while more complex (aka full) models can be run locally and/or offloaded to the cloud.

Developers select different offload options based on model or query complexity (e.g., model size, prompt length, and generation length) and acceptable accuracy. Other considerations include privacy or personalization (e.g., keeping data on the device), latency and available bandwidth for obtaining results, and balancing energy consumption against heat generation.
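To make these trade-offs concrete, here is a minimal sketch of a rule-based offload policy, plus a local-first fallback that offloads when the on-device result is judged insufficient. The `Query` fields, the thresholds, and the confidence score are all hypothetical and chosen only for illustration; they are not part of any Qualcomm SDK, and a real app would tune them per model and device.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical thresholds, for illustration only; real values depend on the
# models, the device, and the app's latency and accuracy targets.
MAX_LOCAL_TOKENS = 2048    # prompt + generation length the local model is assumed to handle
MIN_BANDWIDTH_MBPS = 5.0   # below this, a cloud round trip is assumed to be too slow


@dataclass
class Query:
    prompt_tokens: int
    max_new_tokens: int
    contains_private_data: bool
    bandwidth_mbps: float
    needs_full_model_accuracy: bool = False


def choose_target(q: Query) -> str:
    """Return "device" or "cloud" using a simple rule-based offload policy."""
    # Privacy/personalization: keep sensitive data on the device.
    if q.contains_private_data:
        return "device"
    # Too little bandwidth: a cloud round trip would add unacceptable latency.
    if q.bandwidth_mbps < MIN_BANDWIDTH_MBPS:
        return "device"
    # Long prompts/generations or strict accuracy needs favor the full cloud model.
    if q.prompt_tokens + q.max_new_tokens > MAX_LOCAL_TOKENS or q.needs_full_model_accuracy:
        return "cloud"
    return "device"


def run_with_fallback(
    prompt: str,
    local_model: Callable[[str], Tuple[str, float]],
    cloud_model: Callable[[str], str],
    min_confidence: float = 0.7,
) -> Tuple[str, str]:
    """Run locally first; offload to the cloud if the local result is judged insufficient."""
    text, confidence = local_model(prompt)
    if confidence >= min_confidence:
        return text, "device"
    return cloud_model(prompt), "cloud"
```

For example, `choose_target(Query(3000, 500, False, 50.0))` returns `"cloud"` because 3,500 tokens exceeds the assumed local limit. Checking privacy first is a deliberate design choice in this sketch: sensitive queries stay on the device even when they are long.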

Hybrid AI offers flexibility through three general distribution approaches:

- Device-centric: Models that provide sufficient inference performance on data collected on the device are run locally. If the performance is insufficient (e.g., when the end user is not satisfied with the inference results), an on-device neural network or arbiter may decide to offload inference to the cloud instead.

- Device-sensing: Light models run on the device to handle simpler inference cases (e.g., automatic speech recognition (ASR)). The output predictions from these on-device models are then sent to the cloud and used as input to full models. The full models then perform additional, complex inference (e.g., generative AI from the detected speech data) and transmit results back to the device.

- Joint processing: Since LLMs are memory bound, hardware often sits idle waiting while data is loaded. When multiple LLMs are needed to generate tokens for words, it can be beneficial to speculatively run LLMs in parallel and offload accuracy checks to an LLM in the cloud.
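The joint-processing idea closely matches a well-known technique called speculative decoding, although the article doesn’t name a specific algorithm. Below is a minimal sketch with greedy verification: a small draft model (assumed to run on the device) proposes `k` tokens, and a large target model (assumed to run in the cloud) checks them, keeping the longest matching prefix plus one token of its own. The word-level "models" are deterministic toy functions, not real LLMs, and all names are hypothetical.

```python
from typing import Callable, List, Tuple

# A "model" here is a toy greedy next-token function: context -> next token.
NextToken = Callable[[List[str]], str]


def speculative_decode(
    prefix: List[str],
    draft: NextToken,
    target: NextToken,
    k: int = 4,
    max_new_tokens: int = 8,
) -> Tuple[List[str], int]:
    """Greedy speculative decoding; returns (tokens, number of verification rounds)."""
    out = list(prefix)
    rounds = 0
    while len(out) - len(prefix) < max_new_tokens:
        # 1. The small draft model (assumed on-device) proposes k tokens.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The large target model (assumed in the cloud) checks every proposed
        #    position. A real system scores all k positions in one batched pass;
        #    here each round counts as one verification request.
        rounds += 1
        accepted: List[str] = []
        for i, token in enumerate(proposal):
            expected = target(out + proposal[:i])
            accepted.append(expected)
            if token != expected:
                break  # first mismatch: keep the target's token, discard the rest
        else:
            # Every draft token matched, so the target contributes one extra token.
            accepted.append(target(out + proposal))
        out.extend(accepted)
    return out[: len(prefix) + max_new_tokens], rounds


SENTENCE = "the quick brown fox jumps over the lazy dog".split()


def target_model(ctx: List[str]) -> str:
    # Toy "large" model: emits the next word of SENTENCE, then "<eos>".
    return SENTENCE[len(ctx)] if len(ctx) < len(SENTENCE) else "<eos>"


def draft_model(ctx: List[str]) -> str:
    # Toy "small" model: agrees with the target except at position 3.
    return "cat" if len(ctx) == 3 else target_model(ctx)


tokens, rounds = speculative_decode(["the"], draft_model, target_model)
print(" ".join(tokens), rounds)  # prints: the quick brown fox jumps over the lazy dog 2
```

Because every emitted token is either confirmed or supplied by the target model, the output is identical to decoding with the target alone. Here, eight tokens take two verification rounds instead of eight sequential target steps, which is where the idle, memory-bound hardware time is recovered.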

A Stack for the Edge

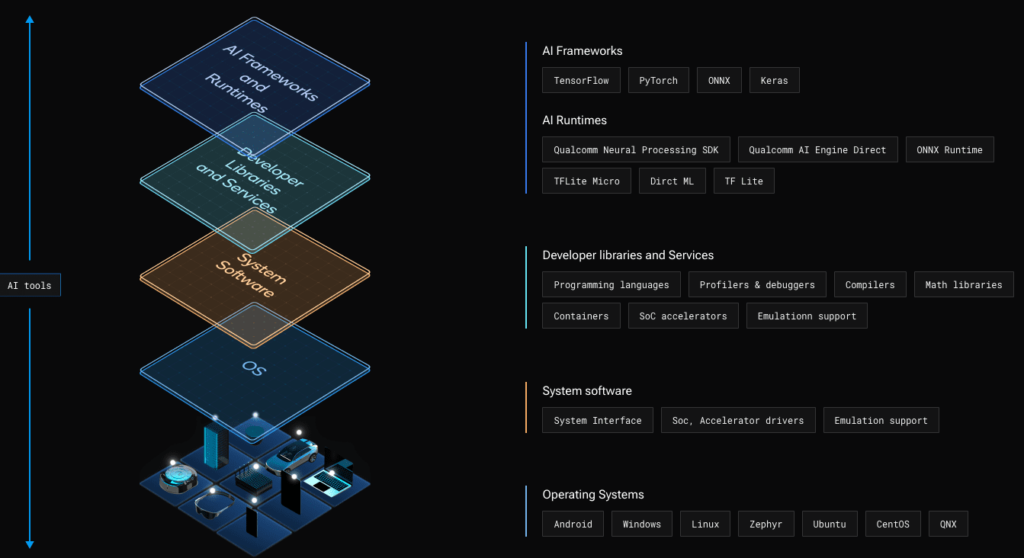

A powerful engine and development stack are required to take advantage of the NPU in an edge platform. This is where the Qualcomm® AI Stack, shown in Figure 1, comes in.

Figure 1 – The Qualcomm® AI Stack provides hardware and software components for AI at the edge across all Snapdragon platforms.

The Qualcomm AI Stack is supported across a range of platforms from Qualcomm Technologies, Inc., including Snapdragon Compute Platforms, which power Windows on Snapdragon devices, and Snapdragon Mobile Platforms, which power many of today’s smartphones.

At the stack’s highest level are popular AI frameworks (e.g., TensorFlow) for generating models. Developers can then choose from a couple of options to integrate these models into their Windows on Snapdragon apps. Note: TFLite and TFLite Micro aren’t supported on Windows on Snapdragon.

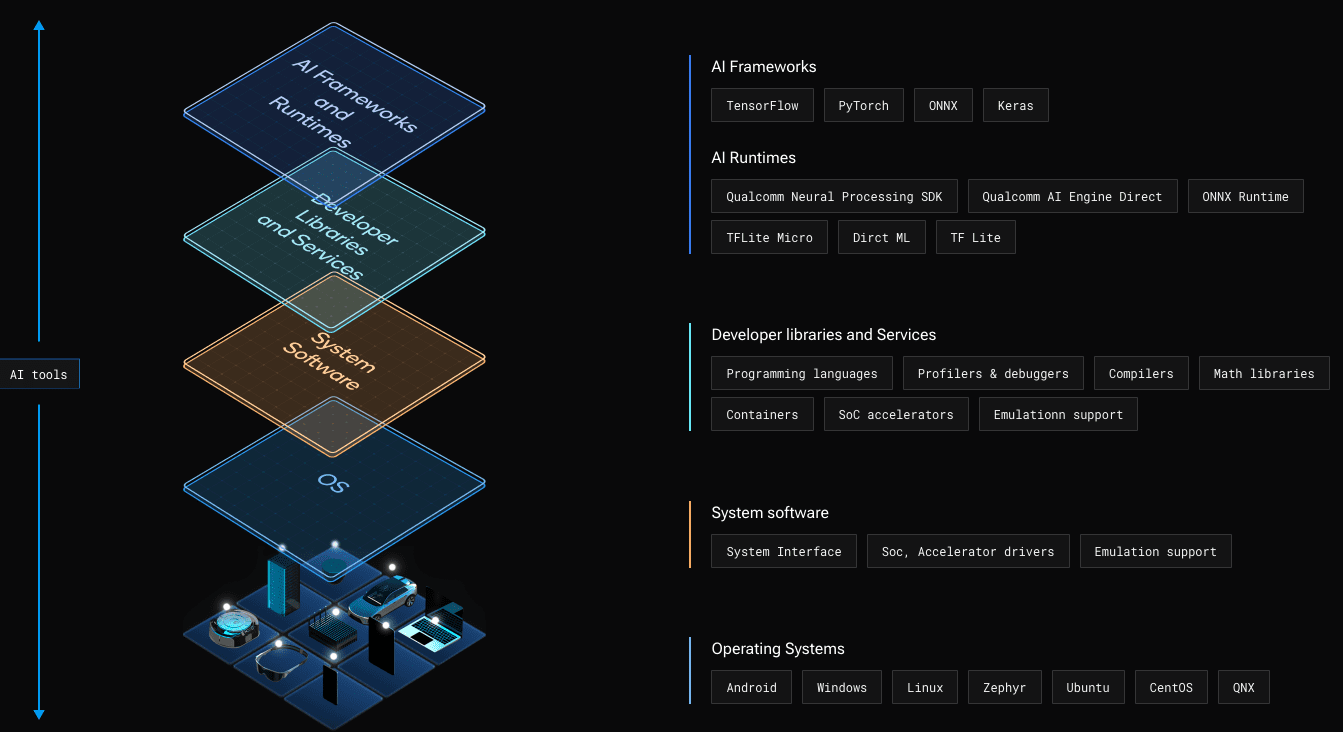

The Qualcomm® Neural Processing SDK for AI provides a high-level, end-to-end solution comprising a pipeline to convert models into a Hexagon-specific (DLC) format and a runtime to execute them, as shown in Figure 2.

Figure 2 – Overview of using the Qualcomm® Neural Processing SDK for AI.

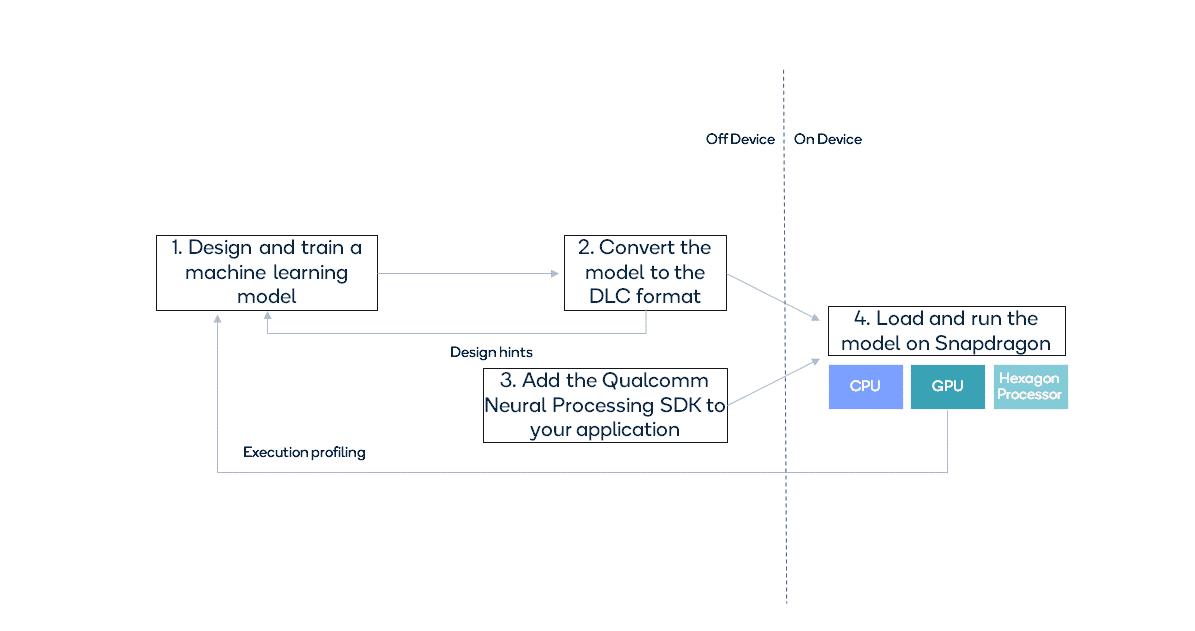

The Qualcomm Neural Processing SDK for AI is built on the Qualcomm AI Engine Direct SDK, which provides lower-level APIs to run inference on specific accelerators via individual backend libraries. The Qualcomm AI Engine Direct SDK is becoming the preferred method for working with the Qualcomm AI Engine.

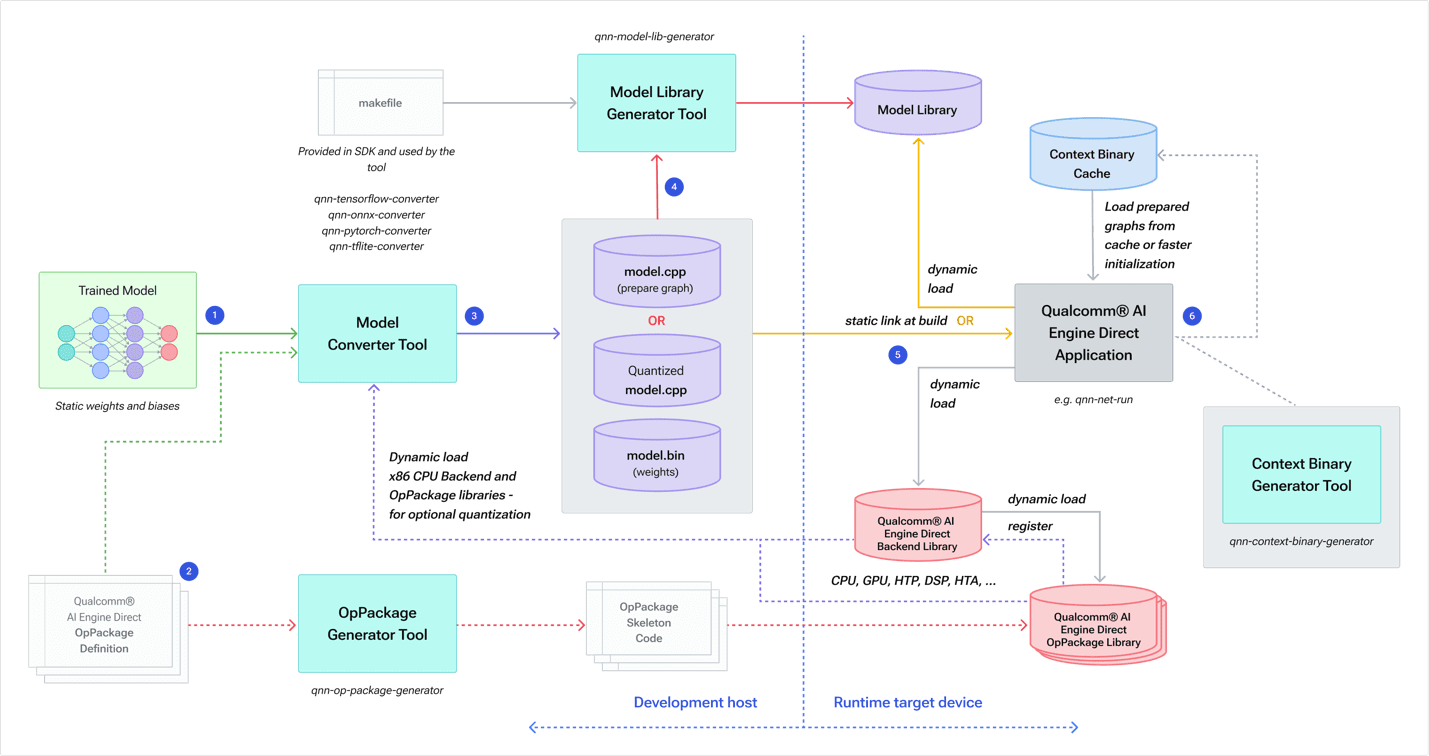

Figure 3 shows how models from different frameworks can be used with the Qualcomm AI Engine Direct SDK.

Figure 3 – Overview of the Qualcomm AI Stack, including its runtime framework support and backend libraries.

The backend libraries abstract away the different hardware cores, providing the option to run models on the most appropriate core available in different versions of the Hexagon NPU (HTP, the Hexagon Tensor Processor, for the Snapdragon 8cx Gen3).
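The backend-library idea is a familiar abstraction pattern: the app asks for "the best core that can run this model" instead of coding against one accelerator. The sketch below illustrates that pattern only. The `Backend` class, the op names (including the hypothetical `MyCustomOp`), and the preference order are assumptions for illustration and do not reflect the actual Qualcomm AI Engine Direct API.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set


@dataclass
class Backend:
    """Hypothetical stand-in for a backend library targeting one hardware core."""
    name: str                      # e.g., "HTP", "GPU", "CPU" (illustrative labels)
    available: bool                # whether this core is present on the current device
    supported_ops: Set[str] = field(default_factory=set)

    def can_run(self, ops: Set[str]) -> bool:
        return self.available and ops <= self.supported_ops


def select_backend(model_ops: Iterable[str], backends: List[Backend], preference: List[str]) -> Backend:
    """Return the most-preferred backend that is available and supports every op."""
    ops = set(model_ops)
    by_name = {b.name: b for b in backends}
    for name in preference:
        backend = by_name.get(name)
        if backend is not None and backend.can_run(ops):
            return backend
    raise RuntimeError(f"no available backend supports ops {sorted(ops)}")


backends = [
    Backend("HTP", True, {"Conv2d", "Relu", "MatMul"}),
    Backend("GPU", False, {"Conv2d", "Relu", "MatMul"}),
    Backend("CPU", True, {"Conv2d", "Relu", "MatMul", "MyCustomOp"}),
]
preference = ["HTP", "GPU", "CPU"]
print(select_backend(["Conv2d", "Relu"], backends, preference).name)        # prints HTP
print(select_backend(["Conv2d", "MyCustomOp"], backends, preference).name)  # prints CPU
```

The second call falls back to the CPU because, in this toy setup, only the CPU backend knows the custom op and the GPU is marked unavailable.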

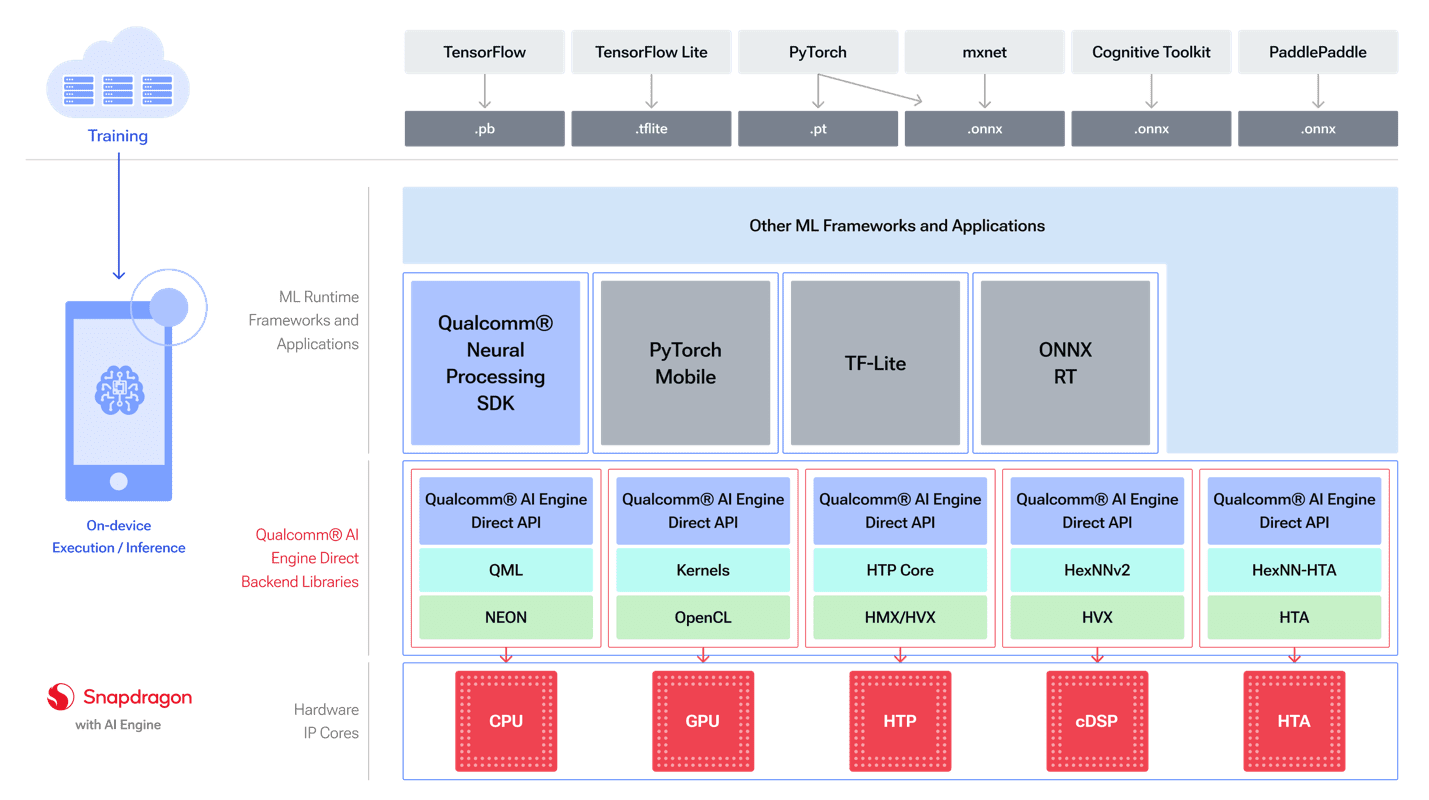

Figure 4 shows how developers work with the Qualcomm AI Engine Direct SDK.

Figure 4 – Workflow to convert a model into a Qualcomm AI Engine Direct representation for optimal execution on the Hexagon NPU.

A model is trained and then passed to a framework-specific model conversion tool, along with any optional Op Packages containing definitions of custom operations. The conversion tool generates two components:

- model.cpp, containing Qualcomm AI Engine Direct API calls to construct the network graph

- model.bin, a binary file containing the network weights and biases (32-bit floats by default)

Note: Developers have the option to generate these as quantized data.
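The article doesn’t say which quantization scheme the tools apply, so the following is only a generic illustration of why quantized weights help: asymmetric (affine) 8-bit quantization, where each weight is approximated as `scale * (q - zero_point)`. It is a minimal pure-Python sketch, not the SDK’s implementation, and the sample weights are made up. It packs the same values as 32-bit floats (the default format described above) and as 8-bit integers to show the 4x size reduction.

```python
import struct
from typing import List, Tuple


def quantize_uint8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Asymmetric (affine) 8-bit quantization: w is approximated by scale * (q - zero_point)."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    # Round to the nearest step and clamp into the uint8 range [0, 255].
    q = [max(0, min(255, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    return [scale * (v - zero_point) for v in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_uint8(weights)
fp32_bytes = struct.pack(f"<{len(weights)}f", *weights)  # 4 bytes per weight
int8_bytes = bytes(q)                                     # 1 byte per weight
print(len(fp32_bytes), len(int8_bytes))  # prints 20 5

# Each reconstructed weight is within about half a quantization step (scale / 2).
max_err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale, zp)))
```

Production toolchains typically add refinements such as per-channel scales or calibration data, which this sketch omits.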

The SDK’s generator tool then builds a runtime model library, while any Op Packages are generated as code to run on the target.

You can find implementations of the Qualcomm AI Stack for several other Snapdragon platforms spanning verticals such as mobile, IoT, and automotive. This means you can develop your AI models once and run them across these different platforms.

Learn More

Whether your Windows on Snapdragon app runs AI solely at the edge or as part of a hybrid setup, be sure to check out the Qualcomm AI Stack page to learn more.

Snapdragon and Qualcomm branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.