Large Language Models Surprise Meta AI Researchers at Compiler Optimization!

“We thought this would be a paper about the obvious failings of LLMs that would serve as motivation for future clever ideas to overcome those failings. We were entirely taken by surprise to find that in many cases a sufficiently trained LLM can not only predict the best optimizations to apply to an input code, but it can also directly perform the optimizations without resorting to the compiler at all!” - Researchers at Meta AI

Researchers at Meta AI set out to see whether Large Language Models (LLMs) could perform the same kind of code optimizations that conventional compilers such as LLVM perform. LLVM’s optimizer is extremely complex, with thousands of rules and algorithms written in over 1 million lines of C++.

They did not expect LLMs to handle this complexity, since LLMs are typically used for tasks such as translating languages and generating code. Compiler optimization involves many different kinds of reasoning, mathematics, and complex techniques, none of which the researchers believed LLMs were good at. Once they ran their experiments, however, the results took them completely by surprise.

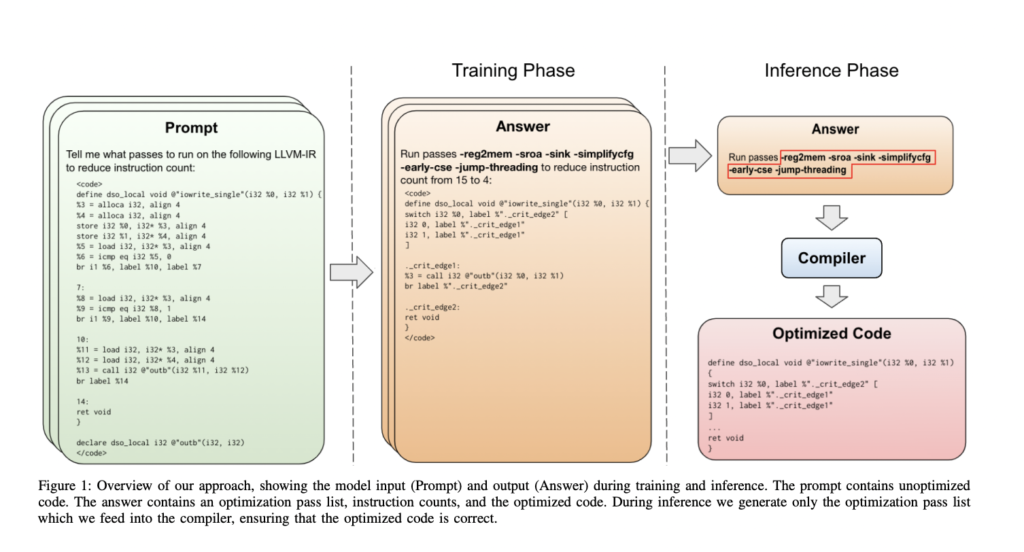

The image above gives an overview of the method, showing the model input (Prompt) and output (Answer) during training and inference. The prompt contains unoptimized code. The answer contains an optimization pass list, instruction counts, and the optimized code. During inference, only the optimization pass list is generated; it is then fed into the compiler, which ensures that the optimized code is correct.
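To make the prompt/answer split concrete, here is a minimal sketch of how one training pair and the inference step might look. The article only says what each part contains, not how it is serialized, so the `[INPUT]`/`[PASSES]`/`[COUNTS]`/`[OUTPUT]` tags, the `TrainingExample` class, and the helpers `extract_pass_list` and `opt_command` are all hypothetical. The `opt` command assumes LLVM's new pass manager `-passes=` syntax; pass names such as `mem2reg` and `instcombine` are real LLVM passes used here only as examples.

```python
from dataclasses import dataclass


@dataclass
class TrainingExample:
    """One prompt/answer pair (hypothetical serialization)."""
    unoptimized_ir: str   # prompt: unoptimized LLVM assembly
    pass_list: list[str]  # answer: passes to run, in order
    count_before: int     # answer: instruction count before optimizing
    count_after: int      # answer: instruction count after optimizing
    optimized_ir: str     # answer: LLVM assembly after running the passes

    def prompt(self) -> str:
        return f"[INPUT]\n{self.unoptimized_ir}\n[/INPUT]"

    def answer(self) -> str:
        passes = " ".join(self.pass_list)
        return (
            f"[PASSES]{passes}[/PASSES]\n"
            f"[COUNTS]{self.count_before} -> {self.count_after}[/COUNTS]\n"
            f"[OUTPUT]\n{self.optimized_ir}\n[/OUTPUT]"
        )


def extract_pass_list(answer: str) -> list[str]:
    """Inference: keep only the pass list from the model's answer and
    ignore the predicted counts and optimized code."""
    start = answer.index("[PASSES]") + len("[PASSES]")
    end = answer.index("[/PASSES]", start)
    return answer[start:end].split()


def opt_command(passes: list[str], src: str, dst: str) -> list[str]:
    """Build (but do not run) an LLVM `opt` invocation for the pass list,
    assuming the new pass manager's -passes= syntax."""
    return ["opt", f"-passes={','.join(passes)}", "-S", src, "-o", dst]
```

The command list could be handed to `subprocess.run`. Because the compiler, not the model, produces the final code, a poor model answer can at worst yield a poor pass list, which matches the article's point that this setup keeps the optimized code correct.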

Their approach is straightforward. They start with a 7-billion-parameter LLM architecture taken from LLaMa 2 [25] and initialize it from scratch rather than from pretrained weights. The model is then trained on a large dataset of millions of LLVM assembly examples, each paired with the best compiler options found by a search process for that assembly, as well as the assembly that results from applying those optimizations. From these examples alone, the model learns to optimize code with remarkable precision.
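The "best compiler options found by a search process" can be illustrated with plain random search over pass sequences. This is a deliberately simple stand-in: the article does not say which search the researchers used. `search_best_passes` and `toy_instruction_count` are hypothetical names, and the toy cost function only mimics "compile with these passes and count the instructions". In a real pipeline every call would be one compilation, which is why search-based tuning gets expensive.

```python
import random
from typing import Callable, Sequence


def search_best_passes(
    instruction_count: Callable[[list[str]], int],
    candidate_passes: Sequence[str],
    max_len: int = 5,
    trials: int = 1000,
    seed: int = 0,
) -> tuple[list[str], int]:
    """Random search for the pass list with the fewest instructions.

    Each call to `instruction_count` stands for one compilation.
    """
    rng = random.Random(seed)
    best_passes: list[str] = []
    best_count = instruction_count(best_passes)  # baseline: no passes
    for _ in range(trials):
        length = rng.randint(1, max_len)
        passes = [rng.choice(candidate_passes) for _ in range(length)]
        count = instruction_count(passes)
        if count < best_count:
            best_passes, best_count = passes, count
    return best_passes, best_count


def toy_instruction_count(passes: list[str]) -> int:
    """Hypothetical stand-in for a real compiler (not LLVM's behavior).

    Programs start at 100 instructions; "mem2reg" removes 30 the first
    time it runs; "instcombine" removes 10 more, but only the first time
    it runs and only if "mem2reg" ran earlier; other passes do nothing.
    """
    count = 100
    seen: set[str] = set()
    for p in passes:
        if p == "mem2reg" and p not in seen:
            count -= 30
        elif p == "instcombine" and "mem2reg" in seen and p not in seen:
            count -= 10
        seen.add(p)
    return count
```

Calling `search_best_passes(toy_instruction_count, ["mem2reg", "instcombine", "simplifycfg"])` should settle on a sequence that runs `mem2reg` before `instcombine`. Under this sketch's assumptions, the winning pass list, the input IR, and the resulting optimized IR together would form one training example.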

The main contribution of their work is being the first to apply LLMs to the task of code optimization. They build LLMs tailored specifically for compiler optimization and show that these models achieve a 3.0% improvement in code size reduction with a single compilation, compared with a search-based approach that reaches a 5.0% improvement but needs 2.5 billion compilations. By contrast, state-of-the-art machine learning approaches cause regressions and require thousands of compilations. The researchers also include supplementary experiments and code examples that give a fuller picture of the potential and limitations of LLMs for reasoning about code. Overall, they find LLMs remarkably effective in this context and believe their findings will interest the broader community.
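Percentages like 3.0% and 5.0% are relative reductions in instruction count against a baseline compilation. The sketch below shows the arithmetic under one stated assumption: counts are summed over all programs before comparing. The article does not say how results are aggregated or which baseline is used, and the function name `percent_improvement` is hypothetical.

```python
def percent_improvement(baseline_counts: list[int], candidate_counts: list[int]) -> float:
    """Percent reduction in total instruction count versus a baseline.

    Positive means fewer instructions than the baseline; negative means
    a regression. Summing counts across programs is an assumption made
    for this sketch, not necessarily how the paper aggregates.
    """
    if len(baseline_counts) != len(candidate_counts):
        raise ValueError("need one candidate count per baseline count")
    base = sum(baseline_counts)
    cand = sum(candidate_counts)
    return 100.0 * (base - cand) / base
```

For example, with made-up counts, `percent_improvement([100, 200], [97, 194])` evaluates to `3.0`, and `percent_improvement([100], [102])` evaluates to `-2.0`, the kind of regression the article attributes to earlier machine learning approaches.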



Janhavi Lande is an Engineering Physics graduate from IIT Guwahati, class of 2023. She is an aspiring data scientist and has been working in ML/AI research for the past two years. She is most fascinated by this ever-changing world and the constant demand it places on people to keep up with it. In her free time she enjoys traveling, reading, and writing poems.