Simplify access to internal information using Retrieval Augmented Generation and LangChain Agents

This post takes you through the most common challenges that customers face when searching internal documents, and gives you concrete guidance on how AWS services can be used to create a generative AI conversational bot that makes internal information more useful.

Unstructured data accounts for 80% of all the data found within organizations, consisting of repositories of manuals, PDFs, FAQs, emails, and other documents that grow daily. Businesses today rely on continuously growing repositories of internal information, and problems arise when the amount of unstructured data becomes unmanageable. Often, users find themselves reading and checking many different internal sources to find the answers they need.

Internal question and answer forums can help users get highly specific answers but also require longer wait times. In the case of company-specific internal FAQs, long wait times result in lower employee productivity. Question and answer forums are difficult to scale because they rely on manually written answers. With generative AI, there is currently a paradigm shift in how users search and find information. The next logical step is to use generative AI to condense large documents into smaller, bite-sized information for easier user consumption. Instead of spending a long time reading text or waiting for answers, users can generate summaries in real time based on multiple existing repositories of internal information.

Solution overview

The solution allows customers to retrieve curated responses to questions asked about internal documents by using a transformer model to generate answers to questions about data that it has not been trained on, a technique known as zero-shot prompting. By adopting this solution, customers can gain the following benefits:

- Find accurate answers to questions based on existing sources of internal documents

- Reduce the time users spend searching for answers by using Large Language Models (LLMs) to provide near-immediate answers to complex queries using documents with the most updated information

- Search previously answered questions through a centralized dashboard

- Reduce stress caused by spending time manually reading information to look for answers

Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) reduces some of the shortcomings of LLM based queries by finding the answers from your knowledge base and using the LLM to summarize the documents into concise responses. Please read this post to learn how to implement the RAG approach with Amazon Kendra. The following risks and limitations are associated with LLM based queries that a RAG approach with Amazon Kendra addresses:

- Hallucinations and traceability – LLMs are trained on large data sets and generate responses based on probabilities. This can lead to inaccurate answers, which are known as hallucinations.

- Multiple data silos – In order to reference data from multiple sources within your response, one needs to set up a connector ecosystem to aggregate the data. Accessing multiple repositories is manual and time-consuming.

- Security – Security and privacy are critical considerations when deploying conversational bots powered by RAG and LLMs. Despite using Amazon Comprehend to filter out personal data that may be provided through user queries, there remains a possibility of unintentionally surfacing personal or sensitive information, depending on the ingested data. This means that controlling access to the chatbot is crucial to prevent unintended access to sensitive information.

- Data relevance – LLMs are trained on data up to a certain date, which means information is often not current. The cost associated with training models on recent data is high. To ensure accurate and up-to-date responses, organizations bear the responsibility of regularly updating and enriching the content of the indexed documents.

- Cost – The cost associated with deploying this solution should be a consideration for businesses. Businesses need to carefully assess their budget and performance requirements when implementing this solution. Running LLMs can require substantial computational resources, which may increase operational costs. These costs can become a limitation for applications that need to operate at a large scale. However, one of the benefits of the AWS Cloud is the flexibility to only pay for what you use. AWS offers a simple, consistent, pay-as-you-go pricing model, so you are charged only for the resources you consume.

Usage of Amazon SageMaker JumpStart

For transformer-based language models, organizations can benefit from using Amazon SageMaker JumpStart, which offers a collection of pre-built machine learning models. Amazon SageMaker JumpStart offers a wide range of text generation and question-answering (Q&A) foundation models that can be easily deployed and utilized. This solution integrates a FLAN T5-XL Amazon SageMaker JumpStart model, but there are different aspects to keep in mind when choosing a foundation model.
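As a rough illustration of how an application might call a deployed JumpStart endpoint, the following sketch uses the SageMaker Runtime `invoke_endpoint` API. The endpoint name is hypothetical, and the request and response keys (`text_inputs`, `max_length`, `generated_texts`) are an assumption based on the JSON format commonly used by JumpStart FLAN-T5 containers; check the format your deployed model actually expects.

```python
import json


def ask_llm(prompt, endpoint_name, runtime_client=None, max_length=200):
    """Send a prompt to a SageMaker real-time endpoint and return the generated text.

    Assumption: the endpoint accepts {"text_inputs": ..., "max_length": ...}
    and answers with {"generated_texts": [...]}.
    """
    if runtime_client is None:
        import boto3  # imported lazily so the function can be tested with a stub client

        runtime_client = boto3.client("sagemaker-runtime")

    payload = {"text_inputs": prompt, "max_length": max_length}
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload).encode("utf-8"),
    )
    # The response body is a streaming object; read and decode it as JSON.
    result = json.loads(response["Body"].read())
    return result["generated_texts"][0]
```

Passing the client in as a parameter keeps the function easy to test without AWS credentials and makes it straightforward to swap in a different model endpoint.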

Integrating security in our workflow

Following the best practices of the Security Pillar of the Well-Architected Framework, Amazon Cognito is used for authentication. Amazon Cognito User Pools can be integrated with third-party identity providers that support several frameworks used for access control, including Open Authorization (OAuth), OpenID Connect (OIDC), or Security Assertion Markup Language (SAML). Identifying users and their actions allows the solution to maintain traceability. The solution also uses the Amazon Comprehend personally identifiable information (PII) detection feature to automatically identify and redact PII. Redacted PII includes addresses, social security numbers, email addresses, and other sensitive information. This design ensures that any PII provided by the user through the input query is redacted. The PII is not stored, used by Amazon Kendra, or fed to the LLM.
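The redaction step can be sketched as follows. This is a minimal example, not the solution's actual Lambda code: it assumes the entity shape returned by the Amazon Comprehend `detect_pii_entities` API (`Type`, `Score`, `BeginOffset`, `EndOffset`), assumes the detected spans do not overlap, and uses a hypothetical score threshold.

```python
from typing import Dict, List


def redact_pii(text: str, entities: List[Dict], min_score: float = 0.5) -> str:
    """Replace each detected PII span with a [TYPE] placeholder."""
    kept = [e for e in entities if e.get("Score", 1.0) >= min_score]
    # Work from the end of the string so earlier offsets stay valid.
    for entity in sorted(kept, key=lambda e: e["BeginOffset"], reverse=True):
        start, end = entity["BeginOffset"], entity["EndOffset"]
        text = text[:start] + f"[{entity['Type']}]" + text[end:]
    return text


def redact_query(query: str, language_code: str = "en") -> str:
    """Detect PII with Amazon Comprehend, then redact it before further processing."""
    import boto3  # imported lazily so redact_pii can be used without AWS access

    comprehend = boto3.client("comprehend")
    response = comprehend.detect_pii_entities(Text=query, LanguageCode=language_code)
    return redact_pii(query, response["Entities"])
```

Only the redacted string would then be passed on to retrieval and inference, which matches the design goal that PII is neither stored nor sent to the LLM.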

Solution Walkthrough

The following steps describe the workflow of the Question answering over documents flow:

- Users send a query through a web interface.

- Amazon Cognito is used for authentication, ensuring secure access to the web application.

- The web application front-end is hosted on AWS Amplify.

- Amazon API Gateway hosts a REST API with various endpoints to handle user requests that are authenticated using Amazon Cognito.

- PII redaction with Amazon Comprehend:

    - User Query Processing: When a user submits a query or input, it is first passed through Amazon Comprehend. The service analyzes the text and identifies any PII entities present within the query.

    - PII Extraction: Amazon Comprehend extracts the detected PII entities from the user query.

- Relevant Information Retrieval with Amazon Kendra:

    - Amazon Kendra is used to manage an index of documents that contains the information used to generate answers to the user’s queries.

    - The LangChain QA retrieval module is used to build a conversation chain that has relevant information about the user’s queries.

- Integration with Amazon SageMaker JumpStart:

    - The AWS Lambda function uses the LangChain library and connects to the Amazon SageMaker JumpStart endpoint with a context-stuffed query. The Amazon SageMaker JumpStart endpoint serves as the interface of the LLM used for inference.

- Storing responses and returning them to the user:

    - The response from the LLM is stored in Amazon DynamoDB along with the user’s query, the timestamp, a unique identifier, and other arbitrary identifiers for the item such as question category. Storing the question and answer as discrete items allows the AWS Lambda function to easily recreate a user’s conversation history based on the time when questions were asked.

    - Finally, the response is sent back to the user via an HTTPS request through the Amazon API Gateway REST API integration response.
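The storage step above can be sketched as a small helper that builds one DynamoDB item per question and answer. The attribute names and the table name are hypothetical; the post only states that the query, response, timestamp, a unique identifier, and identifiers such as question category are stored.

```python
import uuid
from datetime import datetime, timezone
from typing import Dict, Optional


def build_qa_item(
    user_id: str,
    question: str,
    answer: str,
    category: str,
    asked_at: Optional[datetime] = None,
) -> Dict[str, str]:
    """Build one item per question/answer pair (attribute names are illustrative)."""
    asked_at = (asked_at or datetime.now(timezone.utc)).astimezone(timezone.utc)
    return {
        "user_id": user_id,
        # Fixed-width UTC ISO 8601 strings sort chronologically, so using this
        # value as a sort key lets a query return the conversation in order.
        "asked_at": asked_at.isoformat(timespec="milliseconds"),
        "item_id": str(uuid.uuid4()),
        "question": question,
        "answer": answer,
        "category": category,
    }


def save_qa_item(item: Dict[str, str], table_name: str = "qa-history") -> None:
    """Write the item to DynamoDB; the table name is a placeholder."""
    import boto3  # imported lazily so build_qa_item works without AWS access

    boto3.resource("dynamodb").Table(table_name).put_item(Item=item)
```

With `user_id` as the partition key and `asked_at` as the sort key, a single query per user would be enough to rebuild the conversation history in the order the questions were asked.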

The following steps describe the AWS Lambda functions and their flow through the process:

- Check and redact any PII / sensitive info

- LangChain QA Retrieval Chain

- Search and retrieve relevant info

- Context Stuffing & Prompt Engineering

- Inference with LLM

- Return response & save it
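The context stuffing step in the list above can be sketched in plain Python. This is an illustration under stated assumptions rather than the LangChain implementation: the prompt wording, the character budget, and the function name are all hypothetical, and passages are assumed to arrive already ranked by relevance.

```python
from typing import List

PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the answer is not in the context, say you don't know.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)


def build_prompt(question: str, passages: List[str], max_context_chars: int = 2000) -> str:
    """Stuff the highest-ranked passages into the prompt until the budget is used up."""
    selected: List[str] = []
    used = 0
    for passage in passages:
        passage = passage.strip()
        if not passage:
            continue
        separator = 2 if selected else 0  # length of the "\n\n" between passages
        if used + separator + len(passage) > max_context_chars:
            break  # stop at the first passage that no longer fits
        selected.append(passage)
        used += separator + len(passage)
    context = "\n\n".join(selected)
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())
```

A character budget is used here only for simplicity; a production version would more likely count tokens with the tokenizer of the chosen model.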

Use cases

There are many business use cases where customers can use this workflow. The following section explains how the workflow can be used in different industries and verticals.

Employee Assistance

Well-designed corporate training can improve employee satisfaction and reduce the time required for onboarding new employees. As organizations grow and complexity increases, employees find it difficult to understand the many sources of internal documents. Internal documents in this context include company guidelines, policies, and Standard Operating Procedures. For this scenario, an employee has a question about how to proceed with and edit an internal issue ticket. The employee can access and use the generative artificial intelligence (AI) conversational bot to ask about and execute the next steps for a specific ticket.

Specific use case: Automate issue resolution for employees based on corporate guidelines.

The following steps describe the AWS Lambda functions and their flow through the process:

- LangChain agent to identify the intent

- Send notification based on employee request

- Modify ticket status

In this architecture diagram, corporate training videos can be ingested through Amazon Transcribe to collect a log of these video scripts. Additionally, corporate training content stored in various sources (for example, Confluence, Microsoft SharePoint, Google Drive, and Jira) can be used to create indexes through Amazon Kendra connectors. Read this article to learn more about the collection of native connectors you can utilize in Amazon Kendra as a source point. The Amazon Kendra crawler is then able to use both the corporate training video scripts and documentation stored in these other sources to assist the conversational bot in answering questions specific to company corporate training guidelines. The LangChain agent verifies permissions, modifies ticket status, and notifies the correct individuals using Amazon Simple Notification Service (Amazon SNS).
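A tool that the agent might call for this use case could look like the following sketch. It is not LangChain code: the in-memory `tickets` dictionary stands in for a real ticketing system, and the `editors` field, function name, and topic ARN are hypothetical. The only AWS call used is the Amazon SNS `publish` API.

```python
from typing import Dict


def update_ticket_status(
    tickets: Dict[str, Dict],
    ticket_id: str,
    new_status: str,
    employee_id: str,
    sns_client,
    topic_arn: str,
) -> bool:
    """Verify permissions, modify the ticket status, then notify through Amazon SNS."""
    ticket = tickets[ticket_id]
    # Step 1: verify that the requesting employee may edit this ticket.
    if employee_id not in ticket["editors"]:
        return False
    # Step 2: modify the ticket status.
    old_status = ticket["status"]
    ticket["status"] = new_status
    # Step 3: notify the subscribers of the topic about the change.
    sns_client.publish(
        TopicArn=topic_arn,
        Subject=f"Ticket {ticket_id} updated",
        Message=f"{employee_id} changed status from {old_status} to {new_status}.",
    )
    return True
```

In a deployment, `sns_client` would be created with `boto3.client("sns")`, and the permission check would query the ticketing system rather than a dictionary.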

Customer Support Teams

Quickly resolving customer queries improves the customer experience and encourages brand loyalty. A loyal customer base helps drive sales, which contributes to the bottom line and increases customer engagement. Customer support teams spend a lot of energy referencing many internal documents and customer relationship management software to answer customer queries about products and services. Internal documents in this context can include generic customer support call scripts, playbooks, escalation guidelines, and business information. The generative AI conversational bot helps with cost optimization because it handles queries on behalf of the customer support team.

Specific use case: Handling an oil change request based on service history and customer service plan purchased.

In this architecture diagram, the customer is routed to either the generative AI conversational bot or the Amazon Connect contact center. This decision can be based on the level of support needed or the availability of customer support agents. The LangChain agent identifies the customer’s intent and verifies identity. The LangChain agent also checks the service history and purchased support plan.

The following steps describe the AWS Lambda functions and their flow through the process:

- LangChain agent identifies the intent

- Retrieve customer information

- Check customer service history and warranty information

- Book appointment, provide more information, or route to contact center

- Send email confirmation

Amazon Connect is used to collect the voice and chat logs, and Amazon Comprehend is used to remove personally identifiable information (PII) from these logs. The Amazon Kendra crawler is then able to use the redacted voice and chat logs, customer call scripts, and customer service support plan policies to create the index. Once a decision is made, the generative AI conversational bot books an appointment, provides more information, or routes the customer to the contact center for further assistance. For cost optimization, the LangChain agent can also generate answers using fewer tokens and a less expensive large language model for lower priority customer queries.
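The cost optimization idea in the last sentence can be sketched as a simple lookup from query priority to inference settings. The endpoint names, token limits, and priority labels below are hypothetical placeholders, not values from the solution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceConfig:
    endpoint_name: str   # which model endpoint to call
    max_new_tokens: int  # upper bound on the length of the generated answer


# Hypothetical settings: a larger model for urgent queries, a cheaper one otherwise.
CONFIGS = {
    "high": InferenceConfig(endpoint_name="large-llm-endpoint", max_new_tokens=512),
    "low": InferenceConfig(endpoint_name="small-llm-endpoint", max_new_tokens=128),
}


def select_inference_config(priority: str) -> InferenceConfig:
    """Pick the model and token budget for a query; unknown priorities use the cheaper tier."""
    return CONFIGS.get(priority.strip().lower(), CONFIGS["low"])
```

Defaulting unknown priorities to the cheaper tier is a design choice that favors cost control; a team that prefers answer quality over cost could default to the larger model instead.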

Financial Services

Financial services companies rely on timely use of information to stay competitive and comply with financial regulations. Using a generative AI conversational bot, financial analysts and advisors can interact with textual information in a conversational manner and reduce the time and effort it takes to make better informed decisions. Outside of investment and market research, a generative AI conversational bot can also augment human capabilities by handling tasks that would traditionally require more human effort and time. For example, a financial institution specializing in personal loans can increase the rate at which loans are processed while providing better transparency to customers.

Specific use case: Use customer financial history and previous loan applications to decide and explain loan decision.

The following steps describe the AWS Lambda functions and their flow through the process:

- LangChain agent to identify the intent

- Check customer financial and credit score history

- Check internal customer relationship management system

- Check standard loan policies and suggest decision for employee qualifying the loan

- Send notification to customer

This architecture incorporates customer financial data stored in a database and data stored in a customer relationship management (CRM) tool. These data points are used to inform a decision based on the company’s internal loan policies. The customer is able to ask clarifying questions to understand what loans they qualify for and the terms of the loans they can accept. If the generative AI conversational bot is unable to approve a loan application, the user can still ask questions about improving credit scores or alternative financing options.

Government

Generative AI conversational bots can greatly benefit government institutions by speeding up communication, efficiency, and decision-making processes. Generative AI conversational bots can also provide instant access to internal knowledge bases to help government employees quickly retrieve information, policies, and procedures (for example, eligibility criteria, application processes, and citizen services and support). One solution is an interactive system that allows taxpayers and tax professionals to easily find tax-related details and benefits. It can be used to understand user questions, summarize tax documents, and provide clear answers through interactive conversations.

Users can ask questions such as:

- How does inheritance tax work and what are the tax thresholds?

- Can you explain the concept of income tax?

- What are the tax implications when selling a second property?

Additionally, users can have the convenience of submitting tax forms to a system, which can help verify the correctness of the information provided.

This architecture illustrates how users can upload completed tax forms to the solution and utilize it for interactive verification and guidance on how to accurately complete the necessary information.

Healthcare

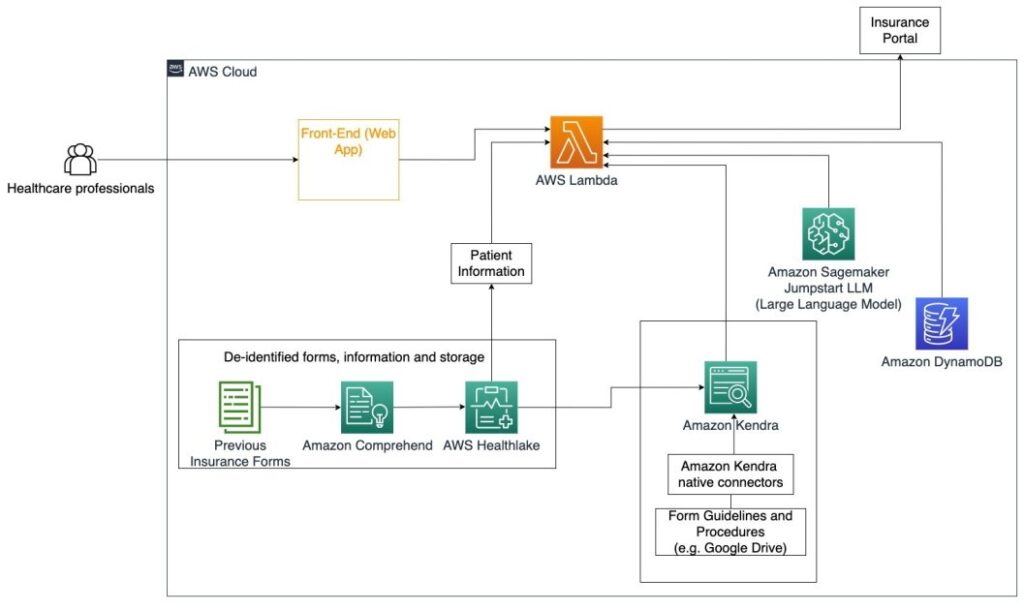

Healthcare businesses have the opportunity to automate the use of large amounts of internal patient information, while also addressing common questions regarding use cases such as treatment options, insurance claims, clinical trials, and pharmaceutical research. Using a generative AI conversational bot enables quick and accurate generation of answers about health information from the provided knowledge base. For example, some healthcare professionals spend a lot of time filling in forms to file insurance claims.

In similar settings, clinical trial administrators and researchers need to find information about treatment options. A generative AI conversational bot can use the pre-built connectors in Amazon Kendra to retrieve the most relevant information from the millions of documents published through ongoing research conducted by pharmaceutical companies and universities.

Specific use case: Reduce the errors and time needed to fill out and send insurance forms.

In this architecture diagram, a healthcare professional is able to use the generative AI conversational bot to figure out what forms need to be filled out for the insurance. The LangChain agent is then able to retrieve the right forms and add the needed information for a patient, as well as give responses for descriptive parts of the forms based on insurance policies and previous forms. The healthcare professional can edit the responses given by the LLM before approving and having the form delivered to the insurance portal.

The following steps describe the AWS Lambda functions and their flow through the process:

- LangChain agent to identify the intent

- Retrieve the patient information needed

- Fill out the insurance form based on the patient information and form guideline

- Submit the form to the insurance portal after user approval

AWS HealthLake is used to securely store the health data including previous insurance forms and patient information, and Amazon Comprehend is used to remove personally identifiable information (PII) from the previous insurance forms. The Amazon Kendra crawler is then able to use the set of insurance forms and guidelines to create the index. Once the forms are filled out by the generative AI and reviewed by the medical professional, they can be sent to the insurance portal.

Cost estimate

The cost of deploying the base solution as a proof-of-concept is shown in the following table. Since the base solution is considered a proof-of-concept, Amazon Kendra Developer Edition was used as a low-cost option because the workload would not be in production. Our assumption for Amazon Kendra Developer Edition was 730 active hours for the month.

For Amazon SageMaker, we made an assumption that the customer would be using the ml.g4dn.2xlarge instance for real-time inference, with a single inference endpoint per instance. You can find more information on Amazon SageMaker pricing and available inference instance types here.

| Service | Resources Consumed | Cost Estimate Per Month in USD |
| --- | --- | --- |
| AWS Amplify | 150 build minutes, 1 GB of data served, 500,000 requests | 15.71 |
| Amazon API Gateway | 1M REST API calls | 3.5 |
| AWS Lambda | 1 million requests, 5 seconds duration per request, 2 GB memory allocated | 160.23 |
| Amazon DynamoDB | 1 million reads, 1 million writes, 100 GB storage | 26.38 |
| Amazon SageMaker | Real-time inference with ml.g4dn.2xlarge | 676.8 |
| Amazon Kendra | Developer Edition with 730 hours/month, 10,000 documents scanned, 5,000 queries/day | 821.25 |
| Total Cost | | 1703.87 |

* Amazon Cognito has a free tier of 50,000 Monthly Active Users who use Cognito User Pools or 50 Monthly Active Users who use SAML 2.0 identity providers
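The arithmetic behind the table can be checked with a few lines of Python. The monthly figures are copied from the table; the hourly Amazon Kendra rate is derived here from the stated 730 hours and is not quoted from a price list.

```python
from decimal import Decimal

# Monthly cost estimates in USD, copied from the table above.
MONTHLY_COSTS_USD = {
    "AWS Amplify": Decimal("15.71"),
    "Amazon API Gateway": Decimal("3.5"),
    "AWS Lambda": Decimal("160.23"),
    "Amazon DynamoDB": Decimal("26.38"),
    "Amazon SageMaker": Decimal("676.8"),
    "Amazon Kendra": Decimal("821.25"),
}

# Decimal avoids the rounding noise that binary floats would add to currency sums.
total_usd = sum(MONTHLY_COSTS_USD.values(), Decimal("0"))

# Hourly rate implied by the Kendra line item and the 730-hour assumption.
kendra_hourly_usd = MONTHLY_COSTS_USD["Amazon Kendra"] / Decimal(730)

print(f"Total: {total_usd} USD/month")               # Total: 1703.87 USD/month
print(f"Kendra: {kendra_hourly_usd} USD/hour")       # Kendra: 1.125 USD/hour
```

Amazon SageMaker and Amazon Kendra together account for roughly 88% of the estimate, so instance type and Kendra edition are the two choices that move the total the most.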

Clean Up

To save costs, delete all the resources you deployed as part of the tutorial. You can delete any SageMaker endpoints you may have created via the SageMaker console. Remember, deleting an Amazon Kendra index does not remove the original documents from your storage.
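As an alternative to the console, the two most expensive resources can be removed with boto3. This is a minimal sketch: the endpoint name and index ID are placeholders you would look up in your own account, and the endpoint configuration, the model, and the other services in the stack are not deleted by it.

```python
def delete_solution_resources(
    endpoint_name: str,
    kendra_index_id: str,
    sagemaker_client=None,
    kendra_client=None,
) -> None:
    """Delete the SageMaker real-time endpoint and the Amazon Kendra index."""
    if sagemaker_client is None or kendra_client is None:
        import boto3  # imported lazily so the function can be tested with stub clients

        sagemaker_client = sagemaker_client or boto3.client("sagemaker")
        kendra_client = kendra_client or boto3.client("kendra")

    # Stops the hourly instance charge for real-time inference.
    sagemaker_client.delete_endpoint(EndpointName=endpoint_name)
    # Removes the index only; the source documents stay in their original storage.
    kendra_client.delete_index(Id=kendra_index_id)
```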

Conclusion

In this post, we showed you how to simplify access to internal information by summarizing from multiple repositories in real time. After the recent developments of commercially available LLMs, the possibilities of generative AI have become more apparent. In this post, we showcased ways to use AWS services to create a serverless chatbot that uses generative AI to answer questions. This approach incorporates an authentication layer and Amazon Comprehend’s PII detection to filter out any sensitive information provided in the user’s query. Whether it be individuals in healthcare understanding the nuances of filing insurance claims or HR understanding specific company-wide regulations, there are multiple industries and verticals that can benefit from this approach. An Amazon SageMaker JumpStart foundation model is the engine behind the chatbot, while a context stuffing approach using the RAG technique is used to ensure that the responses more accurately reference internal documents.

To learn more about working with generative AI on AWS, refer to Announcing New Tools for Building with Generative AI on AWS. For more in-depth guidance on using the RAG technique with AWS services, refer to Quickly build high-accuracy Generative AI applications on enterprise data using Amazon Kendra, LangChain, and large language models. Since the approach in this blog is LLM agnostic, any LLM can be used for inference. In our next post, we’ll outline ways to implement this solution using Amazon Bedrock and the Amazon Titan LLM.

About the Authors

Abhishek Maligehalli Shivalingaiah is a Senior AI Services Solution Architect at AWS. He is passionate about building applications using Generative AI, Amazon Kendra and NLP. He has around 10 years of experience in building Data & AI solutions to create value for customers and enterprises. He has even built a (personal) chatbot for fun to answer questions about his career and professional journey. Outside of work he enjoys making portraits of family & friends, and loves creating artworks.


Medha Aiyah is an Associate Solutions Architect at AWS, based in Austin, Texas. She recently graduated from the University of Texas at Dallas in December 2022 with her Masters of Science in Computer Science with a specialization in Intelligent Systems focusing on AI/ML. She is interested to learn more about AI/ML and utilizing AWS services to discover solutions customers can benefit from.


Hugo Tse is an Associate Solutions Architect at AWS based in Seattle, Washington. He holds a Master’s degree in Information Technology from Arizona State University and a bachelor’s degree in Economics from the University of Chicago. He is a member of the Information Systems Audit and Control Association (ISACA) and International Information System Security Certification Consortium (ISC)2. He enjoys helping customers benefit from technology.


Ayman Ishimwe is an Associate Solutions Architect at AWS based in Seattle, Washington. He holds a Master’s degree in Software Engineering and IT from Oakland University. He has prior experience in software development, specifically in building microservices for distributed web applications. He is passionate about helping customers build robust and scalable solutions on AWS cloud services following best practices.


Shervin Suresh is an Associate Solutions Architect at AWS based in Austin, Texas. He has graduated with a Masters in Software Engineering with a Concentration in Cloud Computing and Virtualization and a Bachelors in Computer Engineering from San Jose State University. He is passionate about leveraging technology to help improve the lives of people from all backgrounds.
