Learn to construct and deploy tool-using LLM brokers utilizing AWS SageMaker JumpStart Basis Fashions

Giant language mannequin (LLM) brokers are applications that stretch the capabilities of standalone LLMs with 1) entry to exterior instruments (APIs, features, webhooks, plugins, and so forth), and a pair of) the flexibility to plan and execute duties in a self-directed vogue. Usually, LLMs must work together with different software program, databases, or APIs to perform complicated duties. For instance, an administrative chatbot that schedules conferences would require entry to staff’ calendars and electronic mail. With entry to instruments, LLM brokers can grow to be extra highly effective—at the price of extra complexity.

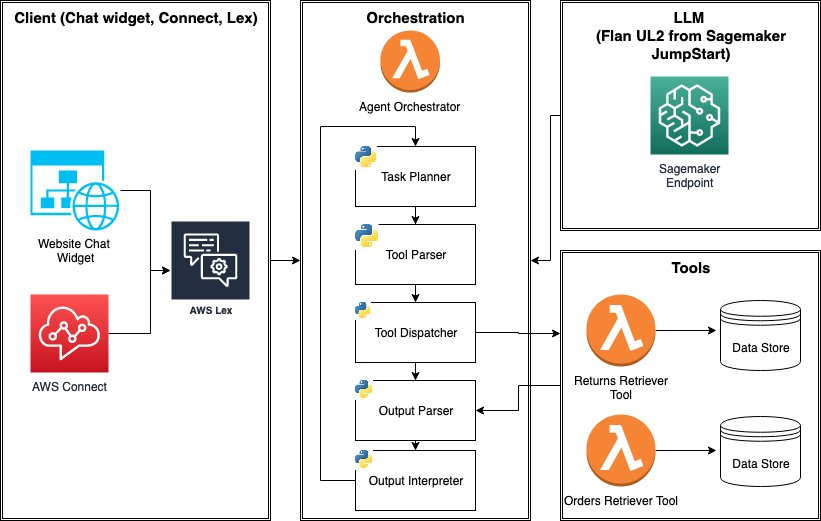

On this put up, we introduce LLM brokers and exhibit methods to construct and deploy an e-commerce LLM agent utilizing Amazon SageMaker JumpStart and AWS Lambda. The agent will use instruments to supply new capabilities, equivalent to answering questions on returns (“Is my return rtn001 processed?”) and offering updates about orders (“Might you inform me if order 123456 has shipped?”). These new capabilities require LLMs to fetch knowledge from a number of knowledge sources (orders, returns) and carry out retrieval augmented technology (RAG).

To energy the LLM agent, we use a Flan-UL2 mannequin deployed as a SageMaker endpoint and use knowledge retrieval instruments constructed with AWS Lambda. The agent can subsequently be built-in with Amazon Lex and used as a chatbot inside web sites or AWS Connect. We conclude the put up with objects to think about earlier than deploying LLM brokers to manufacturing. For a totally managed expertise for constructing LLM brokers, AWS additionally offers the agents for Amazon Bedrock feature (in preview).

A short overview of LLM agent architectures

LLM brokers are applications that use LLMs to determine when and methods to use instruments as mandatory to finish complicated duties. With instruments and job planning skills, LLM brokers can work together with exterior programs and overcome conventional limitations of LLMs, equivalent to information cutoffs, hallucinations, and imprecise calculations. Instruments can take a wide range of varieties, equivalent to API calls, Python features, or webhook-based plugins. For instance, an LLM can use a “retrieval plugin” to fetch related context and carry out RAG.

So what does it imply for an LLM to choose instruments and plan duties? There are quite a few approaches (equivalent to ReAct, MRKL, Toolformer, HuggingGPT, and Transformer Agents) to utilizing LLMs with instruments, and developments are occurring quickly. However one easy means is to immediate an LLM with an inventory of instruments and ask it to find out 1) if a software is required to fulfill the consumer question, and if that’s the case, 2) choose the suitable software. Such a immediate sometimes seems to be like the next instance and should embrace few-shot examples to enhance the LLM’s reliability in selecting the correct software.

Extra complicated approaches contain utilizing a specialised LLM that may straight decode “API calls” or “software use,” equivalent to GorillaLLM. Such finetuned LLMs are skilled on API specification datasets to acknowledge and predict API calls primarily based on instruction. Usually, these LLMs require some metadata about accessible instruments (descriptions, yaml, or JSON schema for his or her enter parameters) so as to output software invocations. This strategy is taken by agents for Amazon Bedrock and OpenAI function calls. Word that LLMs typically must be sufficiently massive and sophisticated so as to present software choice potential.

Assuming job planning and gear choice mechanisms are chosen, a typical LLM agent program works within the following sequence:

- Consumer request – This system takes a consumer enter equivalent to “The place is my order

123456?” from some shopper utility. - Plan subsequent motion(s) and choose software(s) to make use of – Subsequent, this system makes use of a immediate to have the LLM generate the following motion, for instance, “Search for the orders desk utilizing

OrdersAPI.” The LLM is prompted to counsel a software title equivalent toOrdersAPIfrom a predefined listing of obtainable instruments and their descriptions. Alternatively, the LLM may very well be instructed to straight generate an API name with enter parameters equivalent toOrdersAPI(12345).- Word that the following motion could or could not contain utilizing a software or API. If not, the LLM would reply to consumer enter with out incorporating extra context from instruments or just return a canned response equivalent to, “I can not reply this query.”

- Parse software request – Subsequent, we have to parse out and validate the software/motion prediction recommended by the LLM. Validation is required to make sure software names, APIs, and request parameters aren’t hallucinated and that the instruments are correctly invoked in keeping with specification. This parsing could require a separate LLM name.

- Invoke software – As soon as legitimate software title(s) and parameter(s) are ensured, we invoke the software. This may very well be an HTTP request, perform name, and so forth.

- Parse output – The response from the software may have extra processing. For instance, an API name could end in a protracted JSON response, the place solely a subset of fields are of curiosity to the LLM. Extracting data in a clear, standardized format can assist the LLM interpret the end result extra reliably.

- Interpret output – Given the output from the software, the LLM is prompted once more to make sense of it and determine whether or not it may possibly generate the ultimate reply again to the consumer or whether or not extra actions are required.

- Terminate or proceed to step 2 – Both return a closing reply or a default reply within the case of errors or timeouts.

Totally different agent frameworks execute the earlier program movement in a different way. For instance, ReAct combines software choice and closing reply technology right into a single immediate, versus utilizing separate prompts for software choice and reply technology. Additionally, this logic may be run in a single move or run in a whereas assertion (the “agent loop”), which terminates when the ultimate reply is generated, an exception is thrown, or timeout happens. What stays fixed is that brokers use the LLM because the centerpiece to orchestrate planning and gear invocations till the duty terminates. Subsequent, we present methods to implement a easy agent loop utilizing AWS providers.

Resolution overview

For this weblog put up, we implement an e-commerce help LLM agent that gives two functionalities powered by instruments:

- Return standing retrieval software – Reply questions concerning the standing of returns equivalent to, “What is occurring to my return

rtn001?” - Order standing retrieval software – Monitor the standing of orders equivalent to, “What’s the standing of my order

123456?”

The agent successfully makes use of the LLM as a question router. Given a question (“What’s the standing of order 123456?”), choose the suitable retrieval software to question throughout a number of knowledge sources (that’s, returns and orders). We accomplish question routing by having the LLM choose amongst a number of retrieval instruments, that are liable for interacting with an information supply and fetching context. This extends the straightforward RAG sample, which assumes a single knowledge supply.

Each retrieval instruments are Lambda features that take an id (orderId or returnId) as enter, fetches a JSON object from the information supply, and converts the JSON right into a human pleasant illustration string that’s appropriate for use by LLM. The information supply in a real-world situation may very well be a extremely scalable NoSQL database equivalent to DynamoDB, however this answer employs easy Python Dict with pattern knowledge for demo functions.

Extra functionalities may be added to the agent by including Retrieval Instruments and modifying prompts accordingly. This agent may be examined a standalone service that integrates with any UI over HTTP, which may be accomplished simply with Amazon Lex.

Listed here are some extra particulars about the important thing parts:

- LLM inference endpoint – The core of an agent program is an LLM. We are going to use SageMaker JumpStart basis mannequin hub to simply deploy the

Flan-UL2mannequin. SageMaker JumpStart makes it straightforward to deploy LLM inference endpoints to devoted SageMaker cases. - Agent orchestrator – Agent orchestrator orchestrates the interactions among the many LLM, instruments, and the shopper app. For our answer, we use an AWS Lambda perform to drive this movement and make use of the next as helper features.

- Activity (software) planner – Activity planner makes use of the LLM to counsel one in all 1) returns inquiry, 2) order inquiry, or 3) no software. We use immediate engineering solely and

Flan-UL2mannequin as-is with out fine-tuning. - Software parser – Software parser ensures that the software suggestion from job planner is legitimate. Notably, we make sure that a single

orderIdorreturnIdmay be parsed. In any other case, we reply with a default message. - Software dispatcher – Software dispatcher invokes instruments (Lambda features) utilizing the legitimate parameters.

- Output parser – Output parser cleans and extracts related objects from JSON right into a human-readable string. This job is finished each by every retrieval software in addition to inside the orchestrator.

- Output interpreter – Output interpreter’s duty is to 1) interpret the output from software invocation and a pair of) decide whether or not the consumer request may be happy or extra steps are wanted. If the latter, a closing response is generated individually and returned to the consumer.

- Activity (software) planner – Activity planner makes use of the LLM to counsel one in all 1) returns inquiry, 2) order inquiry, or 3) no software. We use immediate engineering solely and

Now, let’s dive a bit deeper into the important thing parts: agent orchestrator, job planner, and gear dispatcher.

Agent orchestrator

Under is an abbreviated model of the agent loop contained in the agent orchestrator Lambda perform. The loop makes use of helper features equivalent to task_planner or tool_parser, to modularize the duties. The loop right here is designed to run at most two occasions to stop the LLM from being caught in a loop unnecessarily lengthy.

Activity planner (software prediction)

The agent orchestrator makes use of job planner to foretell a retrieval software primarily based on consumer enter. For our LLM agent, we’ll merely use immediate engineering and few shot prompting to show the LLM this job in context. Extra subtle brokers might use a fine-tuned LLM for software prediction, which is past the scope of this put up. The immediate is as follows:

Software dispatcher

The software dispatch mechanism works by way of if/else logic to name acceptable Lambda features relying on the software’s title. The next is tool_dispatch helper perform’s implementation. It’s used contained in the agent loop and returns the uncooked response from the software Lambda perform, which is then cleaned by an output_parser perform.

Deploy the answer

Necessary stipulations – To get began with the deployment, you have to fulfill the next stipulations:

- Entry to the AWS Management Console by way of a consumer who can launch AWS CloudFormation stacks

- Familiarity with navigating the AWS Lambda and Amazon Lex consoles

Flan-UL2requires a singleml.g5.12xlargefor deployment, which can necessitate growing useful resource limits by way of a support ticket. In our instance, we useus-east-1because the Area, so please be sure that to extend the service quota (if wanted) inus-east-1.

Deploy utilizing CloudFormation – You may deploy the answer to us-east-1 by clicking the button beneath:![]()

Deploying the answer will take about 20 minutes and can create a LLMAgentStack stack, which:

- deploys the SageMaker endpoint utilizing

Flan-UL2mannequin from SageMaker JumpStart; - deploys three Lambda features:

LLMAgentOrchestrator,LLMAgentReturnsTool,LLMAgentOrdersTool; and - deploys an AWS Lex bot that can be utilized to check the agent:

Sagemaker-Jumpstart-Flan-LLM-Agent-Fallback-Bot.

Check the answer

The stack deploys an Amazon Lex bot with the title Sagemaker-Jumpstart-Flan-LLM-Agent-Fallback-Bot. The bot can be utilized to check the agent end-to-end. Here’s an additional comprehensive guide for testing AWS Amazon Lex bots with a Lambda integration and how the integration works at a high level. However in brief, Amazon Lex bot is a useful resource that gives a fast UI to talk with the LLM agent operating inside a Lambda perform that we constructed (LLMAgentOrchestrator).

The pattern take a look at circumstances to think about are as follows:

- Legitimate order inquiry (for instance, “Which merchandise was ordered for

123456?”)- Order “123456” is a legitimate order, so we should always count on an affordable reply (e.g. “Natural Handsoap”)

- Legitimate return inquiry for a return (for instance, “When is my return

rtn003processed?”)- We must always count on an affordable reply concerning the return’s standing.

- Irrelevant to each returns or orders (for instance, “How is the climate in Scotland proper now?”)

- An irrelevant query to returns or orders, thus a default reply must be returned (“Sorry, I can not reply that query.”)

- Invalid order inquiry (for instance, “Which merchandise was ordered for

383833?”)- The id 383832 doesn’t exist within the orders dataset and therefore we should always fail gracefully (for instance, “Order not discovered. Please verify your Order ID.”)

- Invalid return inquiry (for instance, “When is my return

rtn123processed?”)- Equally, id

rtn123doesn’t exist within the returns dataset, and therefore ought to fail gracefully.

- Equally, id

- Irrelevant return inquiry (for instance, “What’s the impression of return

rtn001on world peace?”)- This query, whereas it appears to pertain to a legitimate order, is irrelevant. The LLM is used to filter questions with irrelevant context.

To run these assessments your self, listed below are the directions.

- On the Amazon Lex console (AWS Console > Amazon Lex), navigate to the bot entitled

Sagemaker-Jumpstart-Flan-LLM-Agent-Fallback-Bot. This bot has already been configured to name theLLMAgentOrchestratorLambda perform at any time when theFallbackIntentis triggered. - Within the navigation pane, select Intents.

- Select Construct on the high proper nook

- 4. Await the construct course of to finish. When it’s accomplished, you get a hit message, as proven within the following screenshot.

- Check the bot by coming into the take a look at circumstances.

Cleanup

To keep away from extra expenses, delete the assets created by our answer by following these steps:

- On the AWS CloudFormation console, choose the stack named

LLMAgentStack(or the customized title you picked). - Select Delete

- Verify that the stack is deleted from the CloudFormation console.

Necessary: double-check that the stack is efficiently deleted by guaranteeing that the Flan-UL2 inference endpoint is eliminated.

- To verify, go to AWS console > Sagemaker > Endpoints > Inference web page.

- The web page ought to listing all lively endpoints.

- Be certain

sm-jumpstart-flan-bot-endpointdoesn’t exist just like the beneath screenshot.

Issues for manufacturing

Deploying LLM brokers to manufacturing requires taking further steps to make sure reliability, efficiency, and maintainability. Listed here are some issues previous to deploying brokers in manufacturing:

- Deciding on the LLM mannequin to energy the agent loop: For the answer mentioned on this put up, we used a

Flan-UL2mannequin with out fine-tuning to carry out job planning or software choice. In apply, utilizing an LLM that’s fine-tuned to straight output software or API requests can improve reliability and efficiency, in addition to simplify improvement. We might fine-tune an LLM on software choice duties or use a mannequin that straight decodes software tokens like Toolformer.- Utilizing fine-tuned fashions can even simplify including, eradicating, and updating instruments accessible to an agent. With prompt-only primarily based approaches, updating instruments requires modifying each immediate contained in the agent orchestrator, equivalent to these for job planning, software parsing, and gear dispatch. This may be cumbersome, and the efficiency could degrade if too many instruments are supplied in context to the LLM.

- Reliability and efficiency: LLM brokers may be unreliable, particularly for complicated duties that can’t be accomplished inside a couple of loops. Including output validations, retries, structuring outputs from LLMs into JSON or yaml, and imposing timeouts to supply escape hatches for LLMs caught in loops can improve reliability.

Conclusion

On this put up, we explored methods to construct an LLM agent that may make the most of a number of instruments from the bottom up, utilizing low-level immediate engineering, AWS Lambda features, and SageMaker JumpStart as constructing blocks. We mentioned the structure of LLM brokers and the agent loop intimately. The ideas and answer structure launched on this weblog put up could also be acceptable for brokers that use a small variety of a predefined set of instruments. We additionally mentioned a number of methods for utilizing brokers in manufacturing. Agents for Bedrock, which is in preview, additionally offers a managed expertise for constructing brokers with native help for agentic software invocations.

Concerning the Creator

John Hwang is a Generative AI Architect at AWS with particular concentrate on Giant Language Mannequin (LLM) functions, vector databases, and generative AI product technique. He’s keen about serving to corporations with AI/ML product improvement, and the way forward for LLM brokers and co-pilots. Previous to becoming a member of AWS, he was a Product Supervisor at Alexa, the place he helped deliver conversational AI to cell units, in addition to a derivatives dealer at Morgan Stanley. He holds B.S. in pc science from Stanford College.

John Hwang is a Generative AI Architect at AWS with particular concentrate on Giant Language Mannequin (LLM) functions, vector databases, and generative AI product technique. He’s keen about serving to corporations with AI/ML product improvement, and the way forward for LLM brokers and co-pilots. Previous to becoming a member of AWS, he was a Product Supervisor at Alexa, the place he helped deliver conversational AI to cell units, in addition to a derivatives dealer at Morgan Stanley. He holds B.S. in pc science from Stanford College.