Build a classification pipeline with Amazon Comprehend custom classification (Part I)

“Data locked away in text, audio, social media, and other unstructured sources can be a competitive advantage for firms that figure out how to use it.”

Only 18% of organizations in a 2019 survey by Deloitte reported being able to take advantage of unstructured data. The majority of data, between 80% and 90%, is unstructured. That is a large untapped resource with the potential to give businesses a competitive edge if they can figure out how to use it. It can be difficult to find insights in this data, particularly if effort is needed to classify, tag, or label it. Amazon Comprehend custom classification can be useful in this situation. Amazon Comprehend is a natural-language processing (NLP) service that uses machine learning to uncover valuable insights and connections in text.

Document categorization or classification has significant benefits across business domains:

- Improved search and retrieval – By categorizing documents into relevant topics or categories, it becomes much easier for users to search for and retrieve the documents they need. They can search within specific categories to narrow down results.

- Knowledge management – Categorizing documents in a systematic way helps to organize an organization's knowledge base. It makes it easier to locate relevant information and see connections between related content.

- Streamlined workflows – Automated document sorting can help streamline many business processes, such as invoice processing, customer support, or regulatory compliance. Documents can be automatically routed to the right people or workflows.

- Cost and time savings – Manual document categorization is tedious, time-consuming, and expensive. AI techniques can take over this mundane task and categorize thousands of documents in a short time at a much lower cost.

- Insight generation – Analyzing trends in document categories can provide useful business insights. For example, an increase in customer complaints in a product category could signal issues that need to be addressed.

- Governance and policy enforcement – Setting up document categorization rules helps to ensure that documents are classified correctly according to an organization's policies and governance standards. This allows for better monitoring and auditing.

- Personalized experiences – In contexts like website content, document categorization allows tailored content to be shown to users based on their interests and preferences, as determined from their browsing behavior. This can increase user engagement.

The complexity of developing a bespoke classification machine learning model varies depending on a variety of factors, such as data quality, algorithm, scalability, and domain knowledge, to mention a few. It's essential to start with a clear problem definition and clean, relevant data, and then gradually work through the different stages of model development. However, businesses can create their own unique machine learning models using Amazon Comprehend custom classification to automatically classify text documents into categories or tags, meeting business-specific requirements and mapping to business technology and document categories. Because human tagging or categorization is no longer necessary, this can save businesses a lot of time, money, and labor. We have made this process simple by automating the whole training pipeline.

In the first part of this multi-part blog series, you will learn how to create a scalable training pipeline and prepare training data for Amazon Comprehend custom classification models. We introduce a custom classifier training pipeline that can be deployed in your AWS account with a few clicks. We use the BBC news dataset and train a classifier to identify the class (for example, politics or sports) that a document belongs to. The pipeline enables your organization to respond rapidly to changes and train new models without having to start from scratch each time. You can easily scale up and train multiple models based on your demand.

Prerequisites

- An active AWS account (Click here to create a new AWS account)

- Access to Amazon Comprehend, Amazon S3, AWS Lambda, AWS Step Functions, Amazon SNS, and AWS CloudFormation

- Training data (semi-structured or text) prepared as described in the following section

- Basic knowledge of Python and machine learning in general

Prepare training data

This solution can take input in either text format (for example, CSV) or semi-structured format (for example, PDF).

Text input

Amazon Comprehend custom classification supports two modes: multi-class and multi-label.

In multi-class mode, each document can have one and only one class assigned to it. The training data should be prepared as a two-column CSV file, with each line of the file containing a single class and the text of a document that demonstrates the class.

Example for the BBC news dataset:
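As a minimal sketch of the multi-class layout, the following Python writes (class, text) pairs with the standard `csv` module. The function name `write_multiclass_csv` is hypothetical, and the two rows are invented placeholders in the style of the BBC categories, not records from the dataset. Collapsing whitespace is a precaution, based on the one-document-per-line description above, so that each document stays on a single line.

```python
import csv
import io


def write_multiclass_csv(rows, fh):
    """Write (class, text) pairs as a two-column CSV with no header row."""
    writer = csv.writer(fh, lineterminator="\n")
    for label, text in rows:
        if not label:
            raise ValueError("every document needs exactly one class")
        # Collapse line breaks and repeated spaces so each document is one line.
        writer.writerow([label, " ".join(text.split())])


# Invented placeholder rows, not taken from the BBC news dataset.
example_rows = [
    ("sport", "The home side won the final after extra time."),
    ("politics", "The committee will publish its budget report next week."),
]

buffer = io.StringIO()
write_multiclass_csv(example_rows, buffer)
print(buffer.getvalue(), end="")
```

The `csv` module quotes any text that contains a comma, so document text does not need to be escaped by hand.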

In multi-label mode, each document has at least one class assigned to it, but can have more. The training data should be prepared as a two-column CSV file, with each line of the file containing one or more classes and the text of the training document. When a document has more than one class, separate the classes with a delimiter.

Do not include a header row in the CSV file for either training mode.
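For the multi-label case, a sketch along the same lines joins each document's classes into the first column. Amazon Comprehend's default label delimiter is a pipe (`|`); if you use a different one, it has to match the delimiter you configure for training. The helper names `write_multilabel_csv` and `read_multilabel_csv` are hypothetical, and the sample row is invented.

```python
import csv
import io

DEFAULT_DELIMITER = "|"  # Comprehend's default multi-label delimiter


def write_multilabel_csv(rows, fh, delimiter=DEFAULT_DELIMITER):
    """Write (labels, text) rows as a header-free, two-column CSV."""
    writer = csv.writer(fh, lineterminator="\n")
    for labels, text in rows:
        if not labels:
            raise ValueError("multi-label rows need at least one class")
        if any(delimiter in label for label in labels):
            raise ValueError(f"class names must not contain {delimiter!r}")
        # First column: classes joined by the delimiter; second: one-line text.
        writer.writerow([delimiter.join(labels), " ".join(text.split())])


def read_multilabel_csv(fh, delimiter=DEFAULT_DELIMITER):
    """Parse the file back into (labels, text); there is no header row to skip."""
    return [(first.split(delimiter), text) for first, text in csv.reader(fh)]


# Invented example row.
buffer = io.StringIO()
write_multilabel_csv([(["business", "tech"], "A chipmaker reported record profits.")], buffer)
```

Reading the file back, as `read_multilabel_csv` does, is a cheap check that no row accidentally gained a header or an extra column.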

Semi-structured input

Starting in 2023, Amazon Comprehend supports training models using semi-structured documents. The training data for semi-structured input consists of a set of labeled documents, which can be pre-identified documents from a document repository that you already have access to. The following is an example of the annotations CSV file data required for training (Sample Data):

The annotations CSV file contains three columns: the first column contains the label for the document, the second column is the document name (that is, the file name), and the last column is the page number of the document that you want to include in the training dataset. In most cases, if the annotations CSV file is located in the same folder as all the other documents, you only need to specify the document name in the second column. However, if the CSV file is located elsewhere, you need to specify the path to the document in the second column, such as path/to/prefix/document1.pdf.
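The name-versus-path rule above can be sketched in Python as follows. This assumes documents and the annotations file are identified by S3 object keys relative to the bucket, and that page numbers start at 1; both are assumptions for illustration. `annotation_row` and `write_annotations` are hypothetical helper names, and the keys in the usage lines are made up.

```python
import csv
import io
import posixpath


def annotation_row(label, document_key, annotations_key, page):
    """One annotations row: label, document name or path, page number.

    Writes only the file name when the document shares the annotations
    file's prefix; otherwise writes the full key (assumed bucket-relative).
    """
    if page < 1:
        raise ValueError("page numbers are assumed to start at 1")
    same_folder = posixpath.dirname(document_key) == posixpath.dirname(annotations_key)
    document = posixpath.basename(document_key) if same_folder else document_key
    return [label, document, str(page)]


def write_annotations(entries, annotations_key, fh):
    """Write (label, document_key, page) entries as a header-free CSV."""
    writer = csv.writer(fh, lineterminator="\n")
    for label, document_key, page in entries:
        writer.writerow(annotation_row(label, document_key, annotations_key, page))


# Hypothetical keys: one PDF next to the annotations file, one elsewhere.
buffer = io.StringIO()
write_annotations(
    [("invoice", "training-data/document1.pdf", 1),
     ("receipt", "archive/document2.pdf", 2)],
    "training-data/annotations.csv",
    buffer,
)
```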

For details on how to prepare your training data, refer to the documentation here.

Solution overview

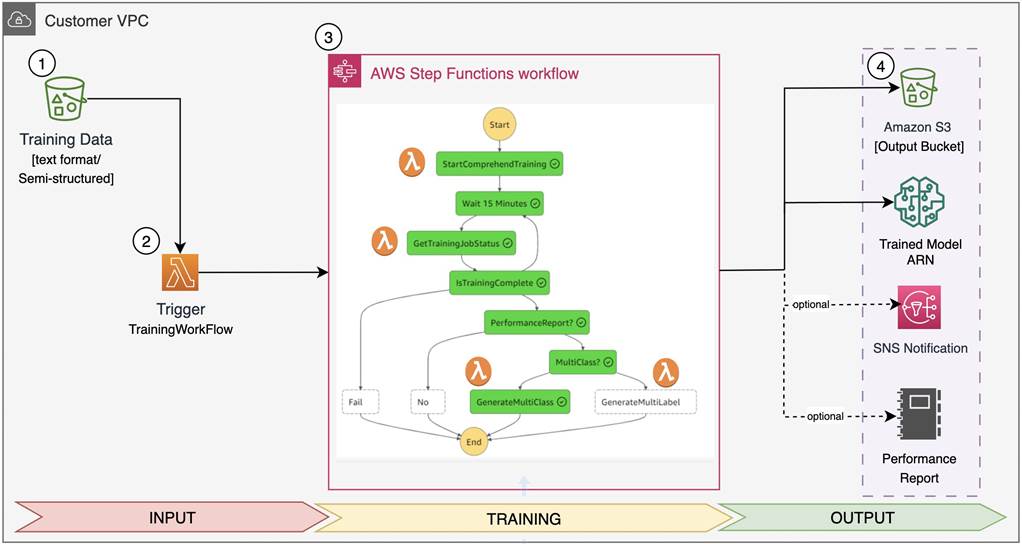

- The Amazon Comprehend training pipeline starts when training data (a .csv file for text input, or an annotations .csv file for semi-structured input) is uploaded to a dedicated Amazon Simple Storage Service (Amazon S3) bucket.

- An AWS Lambda function is invoked by an Amazon S3 trigger, so that every time an object is uploaded to the specified Amazon S3 location, the Lambda function retrieves the source bucket name and the key name of the uploaded object and passes them to the training Step Functions workflow.

- In the training step function, after the training data bucket name and object key name are received as input parameters, a custom model training workflow kicks off as a series of Lambda functions, as follows:

  - StartComprehendTraining: This AWS Lambda function defines a ComprehendClassifier object depending on the type of input files (that is, text or semi-structured) and then kicks off an Amazon Comprehend custom classification training task by calling the create_document_classifier application programming interface (API), which returns a training job Amazon Resource Name (ARN). The function then checks the status of the training job by invoking the describe_document_classifier API. Finally, it returns the training job ARN and job status as output to the next stage of the training workflow.
  - GetTrainingJobStatus: This AWS Lambda function checks the status of the training job every 15 minutes by calling the describe_document_classifier API, until the training job status changes to Complete or Failed.
  - GenerateMultiClass or GenerateMultiLabel: If you select yes for the performance report when launching the stack, one of these two Lambda functions runs an analysis of your Amazon Comprehend model outputs, which generates a per-class performance analysis and saves it to Amazon S3.
    - GenerateMultiClass: This Lambda function is called if your input is MultiClass and you select yes for the performance report.
    - GenerateMultiLabel: This Lambda function is called if your input is MultiLabel and you select yes for the performance report.

- Once training finishes successfully, the solution generates the following outputs:

- Custom Classification Model: A trained model ARN will be available in your account for future inference work.

- Confusion Matrix [Optional]: A confusion matrix (confusion_matrix.json) will be available in the user-defined output Amazon S3 path, depending on the user selection.

- Amazon Simple Notification Service notification [Optional]: A notification email about the training job status will be sent to the subscribers, depending on the initial user selection.
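To make the trigger and StartComprehendTraining steps more concrete, here is a minimal sketch in Python with boto3 of what such Lambda functions could look like. It is not the deployed solution's code: the function names, the `STATE_MACHINE_ARN` environment variable, the execution input shape, and the choice to react only to `.csv` uploads are all assumptions. Clients are passed in so the logic can be tested without AWS access. For semi-structured input, the sketch assumes the PDFs live under the same prefix as the annotations CSV.

```python
import json
import os
import posixpath
from urllib.parse import unquote_plus


def parse_s3_event(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def trigger_handler(event, context, sfn_client=None):
    """Hypothetical trigger Lambda: start one execution per uploaded CSV."""
    if sfn_client is None:
        import boto3  # deferred so the pure logic is testable without boto3
        sfn_client = boto3.client("stepfunctions")
    state_machine_arn = os.environ["STATE_MACHINE_ARN"]  # assumed variable name
    for bucket, key in parse_s3_event(event):
        if not key.lower().endswith(".csv"):
            continue  # assumption: PDFs alone should not start training
        sfn_client.start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps({"bucket": bucket, "key": key}),
        )


def build_classifier_request(name, role_arn, bucket, key, output_uri,
                             semi_structured=False, multi_class=True,
                             label_delimiter="|", language="en"):
    """Keyword arguments for comprehend.create_document_classifier."""
    input_config = {"DataFormat": "COMPREHEND_CSV", "S3Uri": f"s3://{bucket}/{key}"}
    if not multi_class:
        input_config["LabelDelimiter"] = label_delimiter
    if semi_structured:
        # Assumes the documents sit under the same prefix as the annotations CSV.
        input_config["DocumentType"] = "SEMI_STRUCTURED_DOCUMENT"
        input_config["Documents"] = {"S3Uri": f"s3://{bucket}/{posixpath.dirname(key)}"}
    return {
        "DocumentClassifierName": name,
        "DataAccessRoleArn": role_arn,
        "InputDataConfig": input_config,
        "OutputDataConfig": {"S3Uri": output_uri},
        "LanguageCode": language,
        "Mode": "MULTI_CLASS" if multi_class else "MULTI_LABEL",
    }


def start_training(comprehend_client, request):
    """Start the training job, then read back its initial status."""
    arn = comprehend_client.create_document_classifier(**request)["DocumentClassifierArn"]
    props = comprehend_client.describe_document_classifier(
        DocumentClassifierArn=arn
    )["DocumentClassifierProperties"]
    return {"TrainingJobArn": arn, "Status": props["Status"]}
```

Keeping request construction separate from the API call makes the text and semi-structured variants easy to compare and unit test.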

Walkthrough

Launching the solution

To deploy your pipeline, complete the following steps:

- Choose the Launch Stack button:

- Choose Next

- Specify the pipeline details with the options that fit your use case:

Information for each stack parameter:

- Stack name (Required) – The name you specify for this AWS CloudFormation stack. The name must be unique in the Region in which you're creating it.

- Q01ClassifierInputBucketName (Required) – The Amazon S3 bucket name to store your input data. It should be a globally unique name; the AWS CloudFormation stack creates the bucket for you while it's being launched.

- Q02ClassifierOutputBucketName (Required) – The Amazon S3 bucket name to store outputs from Amazon Comprehend and the pipeline. It should also be a globally unique name.

- Q03InputFormat – A dropdown selection. Choose text (if your training data are CSV files) or semi-structure (if your training data are semi-structured, for example PDF files), based on your data input format.

- Q04Language – A dropdown selection for choosing the language of the documents from the supported list. Note that currently only English is supported if your input format is semi-structure.

- Q05MultiClass – A dropdown selection. Select yes if your input is in MultiClass mode; otherwise, select no.

- Q06LabelDelimiter – Only required if your Q05MultiClass answer is no. This is the delimiter used in your training data to separate each class.

- Q07ValidationDataset – A dropdown selection. Change the answer to yes if you want to test the performance of the trained classifier with your own test data.

- Q08S3ValidationPath – Only required if your Q07ValidationDataset answer is yes.

- Q09PerformanceReport – A dropdown selection. Select yes if you want to generate the class-level performance report after model training. The report will be saved in the output bucket you specified in Q02ClassifierOutputBucketName.

- Q10EmailNotification – A dropdown selection. Select yes if you want to receive a notification after the model is trained.

- Q11EmailID – Enter a valid email address for receiving the performance report notification. Note that you have to confirm the subscription from your email after the AWS CloudFormation stack is launched before you can receive a notification when training is completed.

- In the Configure stack options section, add optional tags, permissions, and other advanced settings.

- Choose Next

- Review the stack details and select I acknowledge that AWS CloudFormation might create AWS IAM resources.

- Choose Submit. This initiates pipeline deployment in your AWS account.

- After the stack is deployed successfully, you can start using the pipeline. Create a /training-data folder under your specified Amazon S3 location for input. Note: Amazon S3 automatically applies server-side encryption (SSE-S3) to each new object unless you specify a different encryption option. Refer to Data protection in Amazon S3 for more details on data protection and encryption in Amazon S3.

- Upload your training data to the folder. (If the training data are semi-structured, upload all the PDF files before uploading the .csv label file.)
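If you prefer scripting over the console, the deployment and upload steps above can be sketched with boto3's CloudFormation `create_stack` and S3 `upload_file`. This is an illustration under assumptions: the template URL is not given here, so it is a parameter; the example parameter values mirror the console labels, and the template's actual allowed values may differ; `CAPABILITY_IAM` stands in for the IAM acknowledgement checkbox (a template that names its IAM resources would need `CAPABILITY_NAMED_IAM` instead). Uploading the `.csv` last follows the instruction above, presumably because the CSV upload is what starts training.

```python
import os

# Hypothetical example values; keys follow the stack parameters listed above.
EXAMPLE_VALUES = {
    "Q01ClassifierInputBucketName": "my-comprehend-input-bucket",
    "Q02ClassifierOutputBucketName": "my-comprehend-output-bucket",
    "Q03InputFormat": "text",
    "Q05MultiClass": "yes",
    "Q09PerformanceReport": "yes",
}


def stack_parameters(values):
    """Convert a dict into CloudFormation's ParameterKey/ParameterValue list."""
    return [{"ParameterKey": k, "ParameterValue": str(v)} for k, v in values.items()]


def deploy_stack(cfn_client, stack_name, template_url, values):
    """Create the stack and return its ID."""
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=stack_parameters(values),
        Capabilities=["CAPABILITY_IAM"],  # the "might create IAM resources" acknowledgement
    )
    return response["StackId"]


def upload_order(filenames):
    """Put document files first and .csv label files last."""
    return sorted(filenames, key=lambda name: (name.lower().endswith(".csv"), name))


def upload_training_data(s3_client, bucket, local_dir, prefix="training-data/"):
    """Upload every file in local_dir under prefix, CSV files last."""
    files = [n for n in os.listdir(local_dir) if os.path.isfile(os.path.join(local_dir, n))]
    for name in upload_order(files):
        s3_client.upload_file(os.path.join(local_dir, name), bucket, prefix + name)
```

With real clients this would be called as, for example, `deploy_stack(boto3.client("cloudformation"), "bbc-pipeline", template_url, EXAMPLE_VALUES)`, where `template_url` is the stack template's location.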

You're done! You've successfully deployed your pipeline, and you can check the pipeline status in the deployed step function. (Once training finishes, you will have a trained model in your Amazon Comprehend custom classification panel.)
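Besides the console, the pipeline's progress can be checked programmatically. The following sketch uses the Step Functions `list_executions` call to report the most recently started execution of the pipeline's state machine; the helper name and the way you obtain the state machine ARN are assumptions.

```python
def latest_execution_status(sfn_client, state_machine_arn):
    """Return (name, status) of the most recently started execution, or None."""
    response = sfn_client.list_executions(stateMachineArn=state_machine_arn, maxResults=10)
    executions = response.get("executions", [])
    if not executions:
        return None
    # Pick the newest by start time rather than relying on response ordering.
    latest = max(executions, key=lambda e: e["startDate"])
    # status is a value such as RUNNING, SUCCEEDED, FAILED, TIMED_OUT, or ABORTED.
    return latest["name"], latest["status"]
```

Called with `boto3.client("stepfunctions")`, a status of RUNNING means the training workflow is still polling the Comprehend job.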

If you choose the model and its version in the Amazon Comprehend console, you can see more details about the model you just trained. These include the Mode you selected, which corresponds to the Q05MultiClass option, the number of labels, and the number of training and test documents in your training data. You can also check the overall performance below; however, if you want to check detailed performance for each class, refer to the Performance Report generated by the deployed pipeline.
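The same details are available from the describe_document_classifier API. The sketch below picks the console-visible fields out of the response, treating TRAINED, TRAINED_WITH_WARNING, IN_ERROR, and STOPPED as finished states. It also illustrates the kind of per-class computation a performance report involves, for the multi-class case only: it assumes a square confusion matrix whose rows are true classes and whose columns are predicted classes, and it does not parse the actual confusion_matrix.json file. Function names are hypothetical.

```python
TERMINAL_STATUSES = {"TRAINED", "TRAINED_WITH_WARNING", "IN_ERROR", "STOPPED"}


def summarize_classifier(properties):
    """Pick the console-visible fields out of DocumentClassifierProperties."""
    metadata = properties.get("ClassifierMetadata", {})
    metrics = metadata.get("EvaluationMetrics", {})
    return {
        "status": properties.get("Status"),
        "finished": properties.get("Status") in TERMINAL_STATUSES,
        "mode": properties.get("Mode"),
        "labels": metadata.get("NumberOfLabels"),
        "trained_documents": metadata.get("NumberOfTrainedDocuments"),
        "test_documents": metadata.get("NumberOfTestDocuments"),
        "f1": metrics.get("F1Score"),
    }


def describe_classifier(comprehend_client, classifier_arn):
    response = comprehend_client.describe_document_classifier(DocumentClassifierArn=classifier_arn)
    return summarize_classifier(response["DocumentClassifierProperties"])


def per_class_metrics(labels, matrix):
    """Precision, recall, and F1 per class; matrix[i][j] = true i, predicted j."""
    n = len(labels)
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(matrix[r][i] for r in range(n))  # column sum
        actual = sum(matrix[i])  # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[label] = {"precision": precision, "recall": recall, "f1": f1}
    return results
```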

Service quotas

Your AWS account has default quotas for Amazon Comprehend and, if your inputs are in semi-structured format, for Amazon Textract. To view the service quotas, refer here for Amazon Comprehend and here for Amazon Textract.
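The defaults can also be listed through the Service Quotas API. This sketch uses the `list_aws_default_service_quotas` paginator from boto3's `service-quotas` client; the service codes `comprehend` and `textract` are assumed, and `default_quotas` is a hypothetical helper name.

```python
def default_quotas(quotas_client, service_code):
    """Return {quota name: default value} for one service."""
    quotas = {}
    paginator = quotas_client.get_paginator("list_aws_default_service_quotas")
    for page in paginator.paginate(ServiceCode=service_code):
        for quota in page.get("Quotas", []):
            quotas[quota["QuotaName"]] = quota["Value"]
    return quotas
```

For example, `default_quotas(boto3.client("service-quotas"), "comprehend")` would return the Comprehend defaults for the client's Region. Applied (increased) values come from `list_service_quotas` instead.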

Clean up

To avoid incurring ongoing charges, delete the resources you created as part of this solution when you're done.

- On the Amazon S3 console, manually delete the contents of the buckets you created for input and output data.

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Select the main stack and choose Delete.

This automatically deletes the deployed stack.

- Your trained Amazon Comprehend custom classification model will remain in your account. If you don't need it anymore, delete it in the Amazon Comprehend console.
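The cleanup steps can also be scripted in the same order: empty the buckets, delete the stack, then optionally delete the classifier. The following is a minimal sketch with injected boto3 clients (`s3`, `cloudformation`, `comprehend`). The helper names are hypothetical. It deletes only current object versions, so a versioned bucket would also need its object versions removed, and a classifier still in use by an endpoint cannot be deleted.

```python
def empty_bucket(s3_client, bucket):
    """Delete all current objects; list_objects_v2 pages hold at most 1,000 keys,
    which matches the per-request limit of delete_objects."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})


def clean_up(s3_client, cfn_client, comprehend_client, buckets, stack_name,
             classifier_arn=None):
    """Empty the buckets, delete the stack, then optionally delete the model."""
    for bucket in buckets:
        empty_bucket(s3_client, bucket)
    cfn_client.delete_stack(StackName=stack_name)
    if classifier_arn:
        comprehend_client.delete_document_classifier(DocumentClassifierArn=classifier_arn)
```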

Conclusion

In this post, we showed you the concept of a scalable training pipeline for Amazon Comprehend custom classification models and provided an automated solution for efficiently training new models. The AWS CloudFormation template provided makes it possible for you to create your own text classification models effortlessly, scaling with demand. The solution adopts the recently announced Euclid feature and accepts inputs in text or semi-structured format.

Now, we encourage you, our readers, to test these tools. You can find more details about training data preparation and about the custom classifier metrics. Try it out and see firsthand how it can streamline your model training process and improve efficiency. Please share your feedback with us!

About the Authors

Sandeep Singh is a Senior Data Scientist with AWS Professional Services. He is passionate about helping customers innovate and achieve their business objectives by developing state-of-the-art AI/ML powered solutions. He is currently focused on generative AI, LLMs, prompt engineering, and scaling machine learning across enterprises. He brings recent AI advancements to create value for customers.

Yanyan Zhang is a Senior Data Scientist in the Energy Delivery team with AWS Professional Services. She is passionate about helping customers solve real problems with AI/ML knowledge. Recently, her focus has been on exploring the potential of generative AI and LLMs. Outside of work, she loves traveling, working out, and exploring new things.

Wrick Talukdar is a Senior Architect with the Amazon Comprehend Service team. He works with AWS customers to help them adopt machine learning on a large scale. Outside of work, he enjoys reading and photography.
