Modeling and improving text stability in live captions – Google Research Blog

Automatic speech recognition (ASR) technology has made conversations more accessible with live captions in remote conferencing software, mobile applications, and head-worn displays. However, to maintain real-time responsiveness, live caption systems often display interim predictions that are updated as new utterances are received. This can cause text instability (a “flicker” where previously displayed text is updated, shown in the captions on the left in the video below). Text instability can impair users’ reading experience through distraction, fatigue, and difficulty following the conversation.

In “Modeling and Improving Text Stability in Live Captions”, presented at ACM CHI 2023, we formalize this problem of text stability through a few key contributions. First, we quantify text instability with a vision-based flicker metric that uses luminance contrast and the discrete Fourier transform. Second, we introduce a stability algorithm that stabilizes the rendering of live captions via tokenized alignment, semantic merging, and smooth animation. Finally, we conducted a user study (N=123) to understand viewers’ experience with live captioning. Our statistical analysis demonstrates a statistically significant correlation between our proposed flicker metric and viewers’ experience. It also shows that our proposed stabilization techniques significantly improve viewers’ experience (e.g., the captions on the right in the video above).

| Raw ASR captions vs. stabilized captions |

Metric

Inspired by previous work, we propose a flicker-based metric to quantify text stability and objectively evaluate the performance of live captioning systems. Specifically, our goal is to quantify the flicker in a grayscale live caption video. We achieve this by comparing the difference in luminance between the individual frames (frames in the figures below) that constitute the video. Large visual changes in luminance are obvious (e.g., the addition of the word “bright” in the figure on the bottom), but subtle changes (e.g., an update from “… this gold. Nice..” to “… this. Gold is nice”) may be difficult for readers to discern. However, converting the change in luminance into its constituent frequencies exposes both the obvious and the subtle changes.

Thus, for each pair of contiguous frames, we convert the difference in luminance into its constituent frequencies using the discrete Fourier transform. We then sum over the low and high frequencies to quantify the flicker in this pair. Finally, we average over all frame pairs to get a per-video flicker.
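The per-pair and per-video computation described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors’ implementation. It assumes frames are small grayscale luminance grids given as nested lists and computes the 2D DFT naively (real video frames would call for an FFT library). It also assumes the flicker of a pair is the unweighted sum of spectral magnitudes over all frequency bins, since the post does not say how low and high frequencies are weighted. The function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence

Frame = List[List[float]]  # grayscale luminance values, indexed [row][column]


def dft2_magnitudes(diff: Frame) -> List[List[float]]:
    """Naive 2D discrete Fourier transform, returning |F(u, v)| for every frequency bin."""
    rows, cols = len(diff), len(diff[0])
    mags: List[List[float]] = []
    for u in range(rows):
        row: List[float] = []
        for v in range(cols):
            acc = 0j
            for x in range(rows):
                for y in range(cols):
                    angle = -2.0 * math.pi * (u * x / rows + v * y / cols)
                    acc += diff[x][y] * cmath.exp(1j * angle)
            row.append(abs(acc))
        mags.append(row)
    return mags


def pair_flicker(prev: Frame, curr: Frame) -> float:
    """Flicker of one frame pair (assumed): summed spectral magnitude of the luminance difference."""
    diff = [[c - p for p, c in zip(prev_row, curr_row)] for prev_row, curr_row in zip(prev, curr)]
    return sum(sum(row) for row in dft2_magnitudes(diff))


def video_flicker(frames: Sequence[Frame]) -> float:
    """Per-video flicker: mean pair flicker over all contiguous frame pairs."""
    pairs = list(zip(frames, frames[1:]))
    if not pairs:
        return 0.0
    return sum(pair_flicker(a, b) for a, b in pairs) / len(pairs)
```

For a 2×3 frame pair that differs in a single pixel by 1.0, each of the 6 frequency bins has magnitude 1, so `pair_flicker` returns 6 (up to rounding). Identical frames return exactly 0.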

For instance, as shown below, two identical frames (top) yield a flicker of 0, while two non-identical frames (bottom) yield a non-zero flicker. Note that higher values of the metric indicate more flicker in the video and thus a worse user experience than lower values.


| Illustration of the flicker metric between two identical frames. |


| Illustration of the flicker between two non-identical frames. |

Stability algorithm

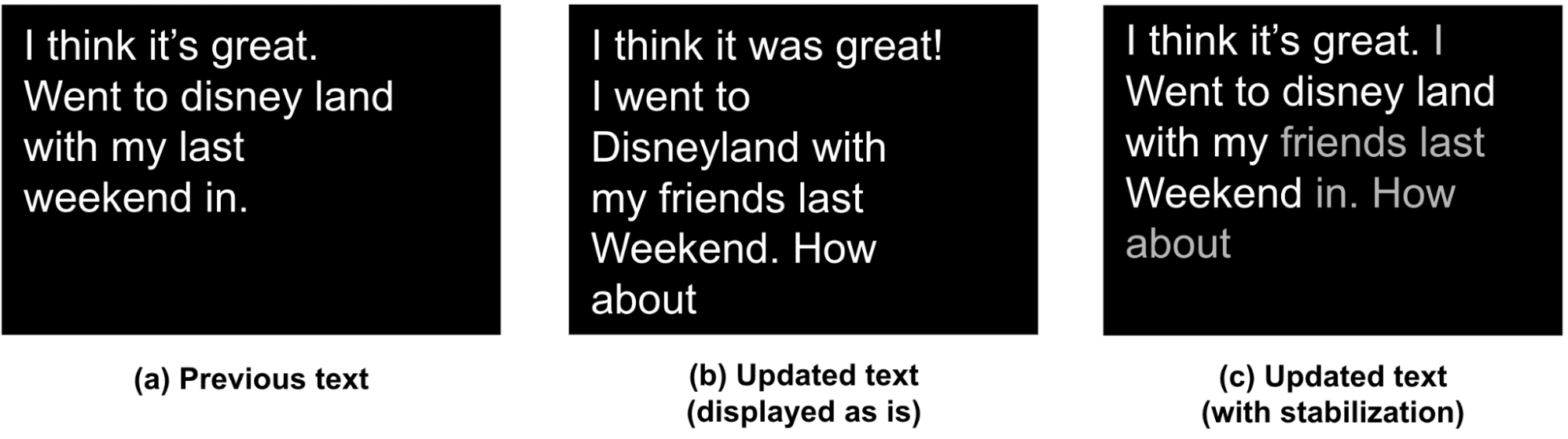

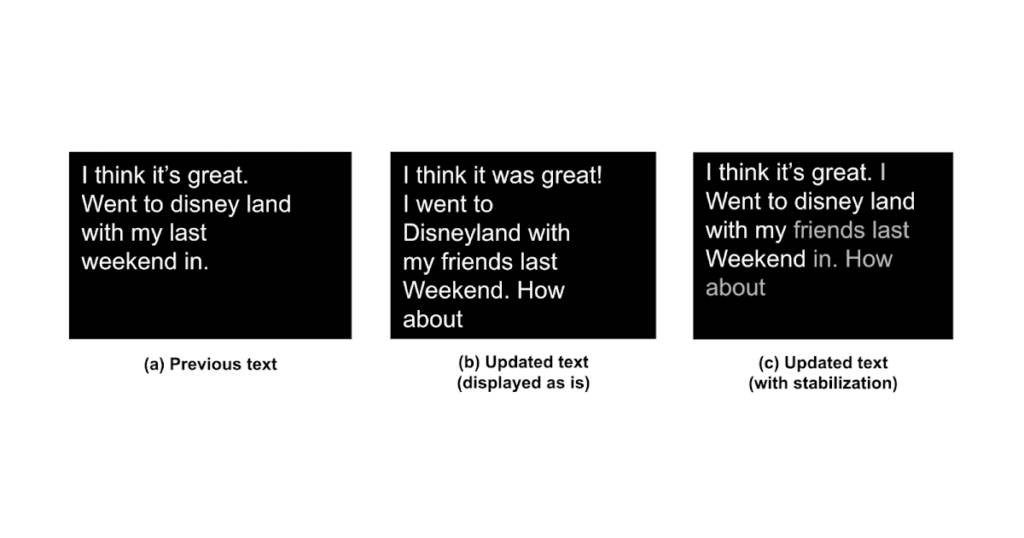

To improve the stability of live captions, we propose an algorithm that takes two inputs: the already rendered sequence of tokens (e.g., “Previous” in the figure below) and the new sequence of ASR predictions. It outputs updated, stabilized text (e.g., “Updated text (with stabilization)” below). In deciding when and how to produce stable updated text, it considers both the natural language understanding (NLU) aspect and the ergonomic aspect (display, layout, etc.) of the user experience. Specifically, our algorithm performs tokenized alignment, semantic merging, and smooth animation to achieve this goal. In what follows, a token is defined as a word or punctuation mark produced by ASR.

Our algorithm addresses the challenge of producing stabilized updated text by first identifying three classes of changes (highlighted in red, green, and blue below):

- Red (A): Addition of tokens to the end of previously rendered captions (e.g., “How about”).

- Green (B): Addition or deletion of tokens in the middle of already rendered captions.

  - B1: Addition of tokens (e.g., “I” and “friends”). These may or may not affect the overall comprehension of the captions, but they may lead to layout changes. Such layout changes are undesirable in live captions because they cause significant jitter and a poorer user experience. Here “I” does not add to comprehension but “friends” does. Thus, it is important to balance updates against stability, especially for B1-type tokens.

  - B2: Removal of tokens (e.g., “in” is removed in the updated sentence).

- Blue (C): Re-captioning of tokens. This includes token edits that may or may not affect the overall comprehension of the captions.

  - C1: Proper nouns like “disney land” are updated to “Disneyland”.

  - C2: Grammatical shorthands like “it’s” are updated to “It was”.


| Classes of changes between previously displayed and updated text. |
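To make the three classes concrete, the following sketch labels the differences between a previously rendered token list and a new ASR hypothesis. It is an illustration only. It uses Python’s standard-library `difflib.SequenceMatcher` as a stand-in for the alignment step, whereas the post itself uses a Needleman-Wunsch variant. The rule that maps edit operations to classes is our simplification: trailing insertions become A, mid-caption insertions B1, deletions B2, and substitutions C. The function name `classify_changes` is hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Tuple

Change = Tuple[str, List[str], List[str]]  # (class label, old tokens, new tokens)


def classify_changes(previous: List[str], updated: List[str]) -> List[Change]:
    """Label each edit between two token lists as class A, B1, B2, or C (simplified rule)."""
    matcher = SequenceMatcher(a=previous, b=updated, autojunk=False)
    changes: List[Change] = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        old, new = previous[i1:i2], updated[j1:j2]
        if tag == "equal":
            continue
        if tag == "insert":
            # Insertions after the last rendered token extend the caption (A);
            # anything earlier lands in the middle of rendered text (B1).
            changes.append(("A" if i1 == len(previous) else "B1", old, new))
        elif tag == "delete":
            changes.append(("B2", old, new))  # tokens removed from rendered text
        else:
            changes.append(("C", old, new))  # "replace": tokens re-captioned


    return changes


previous = "it's a nice day in the park".split()
updated = "It was a really nice day the park How about".split()
print(classify_changes(previous, updated))
```

For this example, the sketch reports “it’s” → “It was” as C, “really” as B1, “in” as B2, and “How about” as A.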

Alignment, merging, and smoothing

To maximize text stability, our goal is to align the old sequence with the new sequence using updates that make minimal changes to the existing layout while ensuring accurate and meaningful captions. To achieve this, we use a variant of the Needleman-Wunsch algorithm, a dynamic programming method for sequence alignment, to merge the two sequences according to the token classes defined above:

- Case A tokens: We add case A tokens directly, inserting line breaks as needed to fit the updated captions.

- Case B tokens: Our preliminary studies showed that users preferred stability over accuracy for previously displayed captions. Thus, we only update case B tokens if the updates do not break an existing line layout.

- Case C tokens: We compare the semantic similarity of case C tokens by transforming the original and updated sentences into sentence embeddings and measuring their dot product. We update them only if they are semantically different (similarity < 0.85) and the update will not cause new line breaks.
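The alignment and merging rules above can be sketched as follows. This is a simplified illustration, not the authors’ algorithm, and it rests on several assumptions. It uses the textbook Needleman-Wunsch recurrence with hypothetical match, mismatch, and gap scores. It models layout as greedy word wrapping at a fixed character width (`textwrap.wrap`) and treats “does not break the layout” as “does not change the number of wrapped lines”. It takes sentence-embedding similarity as a caller-supplied function, since the post does not name an embedding model. The names `needleman_wunsch`, `line_count`, `merge`, and the `width` parameter are hypothetical; only the 0.85 threshold comes from the text.

```python
import textwrap
from typing import Callable, List, Optional, Tuple

Pair = Tuple[Optional[str], Optional[str]]  # (old token, new token); None marks a gap


def needleman_wunsch(old: List[str], new: List[str],
                     match: int = 1, mismatch: int = -1, gap: int = -1) -> List[Pair]:
    """Globally align two token lists with the textbook Needleman-Wunsch recurrence."""
    def sub(a: str, b: str) -> int:
        return match if a == b else mismatch

    n, m = len(old), len(new)
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(score[i - 1][j - 1] + sub(old[i - 1], new[j - 1]),
                              score[i - 1][j] + gap,   # old token has no partner
                              score[i][j - 1] + gap)   # new token has no partner
    # Trace back from the bottom-right cell to recover one optimal alignment.
    pairs: List[Pair] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(old[i - 1], new[j - 1]):
            pairs.append((old[i - 1], new[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            pairs.append((old[i - 1], None))
            i -= 1
        else:
            pairs.append((None, new[j - 1]))
            j -= 1
    return pairs[::-1]


def line_count(tokens: List[str], width: int) -> int:
    """Hypothetical layout model: greedy word wrap at a fixed character width."""
    return len(textwrap.wrap(" ".join(tokens), width))


def merge(old: List[str], new: List[str], similarity: Callable[[str, str], float],
          width: int = 40, threshold: float = 0.85) -> List[str]:
    """Merge a new ASR hypothesis into already rendered tokens, favoring a stable layout."""
    pairs = needleman_wunsch(old, new)
    baseline = line_count(old, width)
    last_old = max((k for k, (o, _) in enumerate(pairs) if o is not None), default=-1)
    kept: List[str] = []
    for k, (o, n) in enumerate(pairs):
        if k > last_old:
            kept.append(n)  # case A: appended after the rendered text, wrapping as needed
            continue
        rest = [p for p, _ in pairs[k + 1:] if p is not None]  # rendered tokens still ahead
        if o == n:
            kept.append(o)  # unchanged token
        elif o is None:
            # Case B1: insert inside rendered text only if the line count is unchanged.
            if line_count(kept + [n] + rest, width) == baseline:
                kept.append(n)
        elif n is None:
            # Case B2: drop the token only if the line count is unchanged.
            if line_count(kept + rest, width) != baseline:
                kept.append(o)
        else:
            # Case C: swap in the re-captioned token only if the meaning changed
            # (similarity below threshold) and the line count is unchanged.
            before = " ".join(kept + [o] + rest)
            after = " ".join(kept + [n] + rest)
            if similarity(before, after) < threshold and line_count(kept + [n] + rest, width) == baseline:
                kept.append(n)
            else:
                kept.append(o)
    return kept
```

For example, with `old = ["the", "cat", "sat"]` and `new = ["the", "black", "cat", "sat", "down"]`, a 40-character width accepts the mid-caption insertion of “black”. An 11-character width rejects it, because “the black cat sat” would wrap onto a second line. In both cases “down” is appended as a case A token.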

Finally, we use animations to reduce visual jitter. We implement smooth scrolling and fading of newly added tokens to further stabilize the overall layout of the live captions.
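One way to realize the scrolling and fading described above is to drive both from an easing curve. The sketch below is an illustration built on assumptions. The post does not state durations or easing functions, so the cubic ease-out curve, the 200 ms fade, and the 300 ms scroll are hypothetical values chosen for the example, as are the function names.

```python
def ease_out_cubic(t: float) -> float:
    """Map linear progress t in [0, 1] to eased progress (fast start, gentle stop)."""
    t = min(max(t, 0.0), 1.0)
    return 1.0 - (1.0 - t) ** 3


def token_opacity(elapsed_ms: float, fade_ms: float = 200.0) -> float:
    """Opacity of a newly added token elapsed_ms after it appears (hypothetical 200 ms fade)."""
    return ease_out_cubic(elapsed_ms / fade_ms)


def scroll_offset(start_px: float, target_px: float, elapsed_ms: float,
                  scroll_ms: float = 300.0) -> float:
    """Vertical caption offset while scrolling to a new line (hypothetical 300 ms scroll)."""
    return start_px + (target_px - start_px) * ease_out_cubic(elapsed_ms / scroll_ms)
```

A renderer would evaluate these functions on every display frame. Easing out, rather than moving linearly, means most of the motion happens early and the text settles gently, which is the kind of softened transition the smoothing step aims for.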

User evaluation

We conducted a user study with 123 participants to (1) examine the correlation of our proposed flicker metric with viewers’ experience of the live captions, and (2) assess the effectiveness of our stabilization techniques.

We manually selected 20 videos from YouTube to obtain broad coverage of topics, including video conferences, documentaries, academic talks, tutorials, news, comedy, and more. For each video, we selected a 30-second clip with at least 90% speech.

We prepared four types of live caption renderings to compare:

- Raw ASR: raw speech-to-text results from a speech-to-text API.

- Raw ASR + thresholding: interim speech-to-text results are displayed only if their confidence score is higher than 0.85.

- Stabilized captions: captions produced by the algorithm described above, with alignment and merging.

- Stabilized and smooth captions: stabilized captions with smooth animation (scrolling + fading), to assess whether a softened display further improves the user experience.
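The thresholding baseline amounts to a simple display rule. This sketch assumes each ASR update arrives as a `(transcript, confidence, is_final)` tuple and that final results are always shown. The post only specifies the 0.85 confidence threshold for interim results, so the tuple format and the handling of final results are assumptions, and `thresholded_display` is a hypothetical name.

```python
from typing import Iterable, List, Tuple

AsrResult = Tuple[str, float, bool]  # (transcript, confidence, is_final); assumed format


def thresholded_display(results: Iterable[AsrResult], threshold: float = 0.85) -> List[str]:
    """Return the caption text on screen after each ASR result arrives."""
    shown = ""
    history: List[str] = []
    for text, confidence, is_final in results:
        # Interim results replace the display only when confident enough;
        # final results are assumed to always be shown.
        if is_final or confidence > threshold:
            shown = text
        history.append(shown)
    return history
```

Low-confidence interim hypotheses are simply never drawn, so the display updates less often. The trade-off is that captions can lag behind speech until a confident or final result arrives.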

We collected user ratings by asking participants to watch the recorded live captions and rate their comfort, distraction, ease of reading, ease of following the video, fatigue, and whether the captions impaired their experience.

Correlation between flicker metric and user experience

We calculated Spearman’s coefficient between the flicker metric and each of the behavioral measurements. Its values range from -1 to 1, where negative values indicate a negative relationship between the two variables, positive values indicate a positive relationship, and 0 indicates no relationship. As shown below, our study demonstrates statistically significant (p < 0.001) correlations between our flicker metric and users’ ratings. The absolute values of the coefficient are around 0.3, indicating a moderate relationship.

| Behavioral Measurement | Correlation to Flickering Metric* |
|---|---|
| Comfort | -0.29 |
| Distraction | 0.33 |
| Easy to read | -0.31 |
| Easy to follow videos | -0.29 |
| Fatigue | 0.36 |
| Impaired experience | 0.31 |

| Spearman correlation tests of our proposed flickering metric. *p < 0.001. |
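Spearman’s coefficient is the Pearson correlation of the ranks of the two variables. The sketch below computes it from scratch and gives tied values the average of their ranks, which matters because ties are common on 7-point rating scales. It omits the p-value. The function names are hypothetical, and in practice a statistics library such as SciPy (`scipy.stats.spearmanr`) would typically be used instead.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```

Here `x` would hold per-video flicker values and `y` the matching participant ratings. A negative result, as for comfort, means higher flicker goes with lower ratings.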

Stabilization of live captions

Our proposed technique (stabilized smooth captions) received consistently better ratings in five of the six survey statements, with differences significant under the Mann-Whitney U test (p < 0.01 in the figure below). That is, users found the stabilized captions with smoothing more comfortable and easier to read. They also reported less distraction, less fatigue, and less impairment to their experience than with the other types of rendering.


| User ratings from 1 (Strongly Disagree) – 7 (Strongly Agree) on survey statements. (**: p<0.01, ***: p<0.001; ****: p<0.0001; ns: non-significant) |
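The Mann-Whitney U test compares two independent samples of ratings by rank rather than by mean, which suits ordinal 7-point scales. The sketch below computes U for the first sample and a two-sided p-value from the normal approximation. For brevity it applies neither a tie correction nor a continuity correction, so its p-values are approximate. It is illustrative only, with a hypothetical function name. The post does not say which implementation was used, and `scipy.stats.mannwhitneyu` is a common library alternative.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (U for sample a, approximate two-sided p-value via the normal approximation)."""
    values = list(a) + list(b)
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # tied values share their mean rank
        i = j + 1
    n1, n2 = len(a), len(b)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # no tie correction
    z = (u1 - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, p
```

When every rating in `a` is below every rating in `b`, U is 0. When the two samples are identical, U equals `n1 * n2 / 2` and the approximate p-value is 1.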

Conclusion and future direction

Text instability in live captioning significantly impairs users’ reading experience. This work proposes two things. The first is a vision-based metric that models caption stability and correlates significantly with users’ experience. The second is an algorithm that stabilizes the rendering of live captions. Our proposed solution could be integrated into existing ASR systems to enhance the usability of live captions for a variety of users, including those with translation needs or hearing accessibility needs.

Our work represents a substantial step towards measuring and improving text stability. It can be extended to include language-based metrics that focus on the consistency of the words and phrases used in live captions over time. Such metrics may reflect user discomfort as it relates to language comprehension and understanding in real-world scenarios. We are also interested in conducting eye-tracking studies (e.g., the videos shown below) to track viewers’ gaze patterns, such as eye fixations and saccades. These would help us better understand which types of errors are most distracting and how to improve text stability for them.

| Illustration of tracking a viewer’s gaze when reading raw ASR captions. |

| Illustration of tracking a viewer’s gaze when reading stabilized and smoothed captions. |

By improving text stability in live captions, we can create more effective communication tools and improve how people connect in everyday conversations, in familiar languages or, through translation, unfamiliar ones.

Acknowledgements

This work is a collaboration across multiple teams at Google. Key contributors include Xingyu “Bruce” Liu, Jun Zhang, Leonardo Ferrer, Susan Xu, Vikas Bahirwani, Boris Smus, Alex Olwal, and Ruofei Du. We wish to extend our thanks to our colleagues who provided assistance, including Nishtha Bhatia, Max Spear, and Darcy Philippon. We would also like to thank Lin Li, Evan Parker, and the CHI 2023 reviewers.