MLOps for batch inference with model monitoring and retraining using Amazon SageMaker, HashiCorp Terraform, and GitLab CI/CD

Maintaining machine learning (ML) workflows in production is a challenging task because it requires creating continuous integration and continuous delivery (CI/CD) pipelines for ML code and models, model versioning, monitoring for data and concept drift, model retraining, and a manual approval process to ensure new versions of the model satisfy both performance and compliance requirements.

In this post, we describe how to create an MLOps workflow for batch inference that automates job scheduling, model monitoring, retraining, and registration, as well as error handling and notification by using Amazon SageMaker, Amazon EventBridge, AWS Lambda, Amazon Simple Notification Service (Amazon SNS), HashiCorp Terraform, and GitLab CI/CD. The presented MLOps workflow provides a reusable template for managing the ML lifecycle through automation, monitoring, auditability, and scalability, thereby reducing the complexities and costs of maintaining batch inference workloads in production.

Solution overview

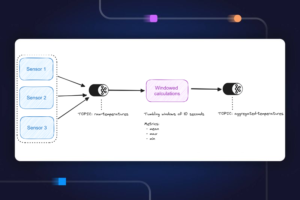

The following figure illustrates the proposed target MLOps architecture for enterprise batch inference for organizations that use GitLab CI/CD and Terraform infrastructure as code (IaC) in conjunction with AWS tools and services. GitLab CI/CD serves as the macro-orchestrator, orchestrating model build and model deploy pipelines, which include sourcing, building, and provisioning Amazon SageMaker Pipelines and supporting resources using the SageMaker Python SDK and Terraform. The SageMaker Python SDK is used to create or update SageMaker pipelines for training, training with hyperparameter optimization (HPO), and batch inference. Terraform is used to create additional resources such as EventBridge rules, Lambda functions, and SNS topics for monitoring SageMaker pipelines and sending notifications (for example, when a pipeline step fails or succeeds). SageMaker Pipelines serves as the orchestrator for ML model training and inference workflows.

This architecture design represents a multi-account strategy where ML models are built, trained, and registered in a central model registry within a data science development account (which has more controls than a typical application development account). Then, inference pipelines are deployed to staging and production accounts using automation from DevOps tools such as GitLab CI/CD. The central model registry could optionally be placed in a shared services account as well. Refer to Operating model for best practices regarding a multi-account strategy for ML.

In the following subsections, we discuss different aspects of the architecture design in detail.

Infrastructure as code

IaC offers a way to manage IT infrastructure through machine-readable files, ensuring efficient version control. In this post and the accompanying code sample, we demonstrate how to use HashiCorp Terraform with GitLab CI/CD to manage AWS resources effectively. This approach underscores the key benefit of IaC, offering a transparent and repeatable process in IT infrastructure management.

Model training and retraining

In this design, the SageMaker training pipeline runs on a schedule (via EventBridge) or based on an Amazon Simple Storage Service (Amazon S3) event trigger (for example, when a trigger file or new training data, in case of a single training data object, is placed in Amazon S3) to regularly recalibrate the model with new data. This pipeline does not introduce structural or material changes to the model because it uses fixed hyperparameters that have been approved during the enterprise model review process.
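As a rough illustration of the S3 event trigger, the following Python sketch builds an EventBridge event pattern that matches new objects under a training data prefix. It assumes the bucket has EventBridge notifications turned on; the bucket name and prefix are hypothetical placeholders, and in practice a rule like this would typically be declared in Terraform rather than in Python.

```python
import json


def s3_object_created_pattern(bucket_name: str, key_prefix: str) -> dict:
    """Build an EventBridge event pattern for objects created under a prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket_name]},
            # Prefix matching limits the rule to the training data location.
            "object": {"key": [{"prefix": key_prefix}]},
        },
    }


# Hypothetical bucket and prefix, for illustration only.
pattern = s3_object_created_pattern("my-ml-bucket", "my-project/training-data/")
print(json.dumps(pattern, indent=2))
```

A scheduled run would use a schedule expression on the rule instead of an event pattern.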

The training pipeline registers the newly trained model version in the Amazon SageMaker Model Registry if the model meets a predefined model performance threshold (for example, RMSE for regression and F1 score for classification). When a new version of the model is registered in the model registry, it triggers a notification to the responsible data scientist via Amazon SNS. The data scientist then needs to review and manually approve the latest version of the model in the Amazon SageMaker Studio UI or via an API call using the AWS Command Line Interface (AWS CLI) or AWS SDK for Python (Boto3) before the new version of the model can be used for inference.

The SageMaker training pipeline and its supporting resources are created by the GitLab model build pipeline, either via a manual run of the GitLab pipeline or automatically when code is merged into the main branch of the model build Git repository.

Batch inference

The SageMaker batch inference pipeline also runs on a schedule (via EventBridge) or based on an S3 event trigger. The batch inference pipeline automatically pulls the latest approved version of the model from the model registry and uses it for inference. The batch inference pipeline includes steps for checking data quality against a baseline created by the training pipeline, as well as model quality (model performance) if ground truth labels are available.
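Looking up the latest approved model version can be sketched with the Boto3 `list_model_packages` call. This is a minimal sketch rather than the sample repository's code: the helper name is hypothetical, and the SageMaker client is passed in so the function can be exercised without AWS credentials.

```python
def latest_approved_model_package_arn(sm_client, model_package_group_name: str) -> str:
    """Return the ARN of the most recently created Approved model package."""
    response = sm_client.list_model_packages(
        ModelPackageGroupName=model_package_group_name,
        ModelApprovalStatus="Approved",
        SortBy="CreationTime",
        SortOrder="Descending",
        MaxResults=1,
    )
    summaries = response["ModelPackageSummaryList"]
    if not summaries:
        # No approved version exists yet, so there is nothing to deploy.
        raise LookupError(
            f"No approved model version in group {model_package_group_name!r}"
        )
    return summaries[0]["ModelPackageArn"]


# Usage (requires AWS credentials):
#   import boto3
#   arn = latest_approved_model_package_arn(boto3.client("sagemaker"), "my-group")
```

Raising an error when no approved version exists is a design choice of this sketch; it makes the missing approval visible instead of silently deploying nothing.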

If the batch inference pipeline discovers data quality issues, it notifies the responsible data scientist via Amazon SNS. If it discovers model quality issues (for example, RMSE is greater than a pre-specified threshold), the pipeline step for the model quality check fails, which in turn triggers an EventBridge event to start the training with HPO pipeline.
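The failure-driven trigger can be pictured as an EventBridge pattern that matches a failed pipeline step. The sketch below is an assumption-laden illustration: the detail field names (`pipelineArn`, `stepName`, `currentStepStatus`) follow our understanding of the SageMaker Pipelines step status change event and should be verified against the EventBridge documentation, and the step name and ARN are hypothetical.

```python
# Detail type assumed for SageMaker pipeline step status change events.
STEP_STATUS_DETAIL_TYPE = "SageMaker Model Building Pipeline Execution Step Status Change"


def failed_step_pattern(pipeline_arn: str, step_name: str) -> dict:
    """Build an event pattern that matches one failed step of one pipeline."""
    return {
        "source": ["aws.sagemaker"],
        "detail-type": [STEP_STATUS_DETAIL_TYPE],
        "detail": {
            "pipelineArn": [pipeline_arn],
            "stepName": [step_name],
            "currentStepStatus": ["Failed"],
        },
    }


def is_failed_step(event: dict, step_name: str) -> bool:
    """Return True if the event reports that the named step has failed."""
    detail = event.get("detail", {})
    return (
        event.get("source") == "aws.sagemaker"
        and detail.get("stepName") == step_name
        and detail.get("currentStepStatus") == "Failed"
    )


# Hypothetical pipeline ARN and step name.
pattern = failed_step_pattern(
    "arn:aws:sagemaker:us-east-1:111122223333:pipeline/inferencepipeline",
    "ModelQualityCheck",
)
```

A rule with this pattern would have the training with HPO pipeline as its target, so only a model quality failure starts retuning.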

The SageMaker batch inference pipeline and its supporting resources are created by the GitLab model deploy pipeline, either via a manual run of the GitLab pipeline or automatically when code is merged into the main branch of the model deploy Git repository.

Model tuning and retuning

The SageMaker training with HPO pipeline is triggered when the model quality check step of the batch inference pipeline fails. The model quality check is performed by comparing model predictions with the actual ground truth labels. If the model quality metric (for example, RMSE for regression and F1 score for classification) does not meet a pre-specified criterion, the model quality check step is marked as failed. The SageMaker training with HPO pipeline can also be triggered manually (in the SageMaker Studio UI or via an API call using the AWS CLI or SageMaker Python SDK) by the responsible data scientist if needed. Because the model hyperparameters are changing, the responsible data scientist needs to obtain approval from the enterprise model review board before the new model version can be approved in the model registry.
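For a regression model, the model quality check described above can be sketched as follows. This is a minimal illustration, not the repository's processing script: the function names are hypothetical, and raising an exception stands in for marking the step as failed.

```python
import math


def rmse(predictions, labels) -> float:
    """Root mean squared error between predictions and ground truth labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    squared_error = sum((p - y) ** 2 for p, y in zip(predictions, labels))
    return math.sqrt(squared_error / len(labels))


def check_model_quality(predictions, labels, rmse_threshold: float) -> float:
    """Return the RMSE, or raise so that the surrounding step is marked as failed."""
    value = rmse(predictions, labels)
    if value > rmse_threshold:
        raise RuntimeError(
            f"Model quality check failed: RMSE {value:.4f} exceeds {rmse_threshold}"
        )
    return value
```

In a processing script, the uncaught exception causes a non-zero exit, which is what fails the step and lets a downstream rule react to it.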

The SageMaker training with HPO pipeline and its supporting resources are created by the GitLab model build pipeline, either via a manual run of the GitLab pipeline or automatically when code is merged into the main branch of the model build Git repository.

Model monitoring

Data statistics and constraints baselines are generated as part of the training and training with HPO pipelines. They are saved to Amazon S3 and also registered with the trained model in the model registry if the model passes evaluation. The proposed architecture for the batch inference pipeline uses Amazon SageMaker Model Monitor for data quality checks, while using custom Amazon SageMaker Processing steps for the model quality check. This design decouples data and model quality checks, which in turn allows you to send only a warning notification when data drift is detected, and to trigger the training with HPO pipeline when a model quality violation is detected.

Model approval

After a newly trained model is registered in the model registry, the responsible data scientist receives a notification. If the model has been trained by the training pipeline (recalibration with new training data while hyperparameters are fixed), there is no need for approval from the enterprise model review board. The data scientist can review and approve the new version of the model independently. On the other hand, if the model has been trained by the training with HPO pipeline (retuning by changing hyperparameters), the new model version needs to go through the enterprise review process before it can be used for inference in production. When the review process is complete, the data scientist can proceed and approve the new version of the model in the model registry. Changing the status of the model package to Approved triggers a Lambda function via EventBridge, which in turn triggers the GitLab model deploy pipeline via an API call. This automatically updates the SageMaker batch inference pipeline to use the latest approved version of the model for inference.
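The approval-to-deploy handoff can be sketched as a Lambda handler that calls the GitLab pipeline trigger API. This is a hedged sketch, not the target architecture's actual function: the environment variable names are hypothetical, the `ModelApprovalStatus` field is assumed to be present in the model package state change event, and the trigger token would normally come from a secrets store.

```python
import os
import urllib.parse
import urllib.request


def should_trigger_deploy(event: dict) -> bool:
    """Return True only when the model package status changed to Approved."""
    return event.get("detail", {}).get("ModelApprovalStatus") == "Approved"


def build_trigger_request(gitlab_url, project_id, trigger_token, ref="main"):
    """Build a POST request for the GitLab pipeline trigger endpoint."""
    url = f"{gitlab_url.rstrip('/')}/api/v4/projects/{project_id}/trigger/pipeline"
    body = urllib.parse.urlencode({"token": trigger_token, "ref": ref}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST")


def lambda_handler(event, context):
    """Start the model deploy pipeline when a model version is approved."""
    if not should_trigger_deploy(event):
        return {"triggered": False}
    # Hypothetical environment variable names.
    request = build_trigger_request(
        os.environ["GITLAB_URL"],
        os.environ["GITLAB_PROJECT_ID"],
        os.environ["GITLAB_TRIGGER_TOKEN"],
    )
    with urllib.request.urlopen(request) as response:
        return {"triggered": True, "status": response.status}
```

Keeping the request construction separate from the network call makes the handler easy to test without a GitLab instance.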

There are two main ways to approve or reject a new model version in the model registry: using the AWS SDK for Python (Boto3) or from the SageMaker Studio UI. By default, both the training pipeline and training with HPO pipeline set ModelApprovalStatus to PendingManualApproval. The responsible data scientist can update the approval status for the model by calling the update_model_package API from Boto3. Refer to Update the Approval Status of a Model for details about updating the approval status of a model via the SageMaker Studio UI.
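A minimal sketch of the Boto3 route follows. The wrapper name and its validation are our own additions for illustration; the underlying call is `update_model_package`, and the client is passed in so the function can be tried with a stub.

```python
ALLOWED_STATUSES = {"Approved", "Rejected", "PendingManualApproval"}


def set_approval_status(sm_client, model_package_arn: str, status: str, description: str = ""):
    """Update the approval status of a model package in the model registry."""
    if status not in ALLOWED_STATUSES:
        raise ValueError(f"Unsupported approval status: {status!r}")
    kwargs = {"ModelPackageArn": model_package_arn, "ModelApprovalStatus": status}
    if description:
        # Optional free-text note recorded alongside the status change.
        kwargs["ApprovalDescription"] = description
    return sm_client.update_model_package(**kwargs)


# Usage (requires AWS credentials and a real model package ARN):
#   import boto3
#   set_approval_status(boto3.client("sagemaker"), model_package_arn, "Approved",
#                       "Reviewed by the responsible data scientist")
```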

Data I/O design

SageMaker interacts directly with Amazon S3 for reading inputs and storing outputs of individual steps in the training and inference pipelines. The following diagram illustrates how different Python scripts, raw and processed training data, raw and processed inference data, inference results and ground truth labels (if available for model quality monitoring), model artifacts, training and inference evaluation metrics (model quality monitoring), as well as data quality baselines and violation reports (for data quality monitoring) can be organized within an S3 bucket. The direction of the arrows in the diagram indicates which files are inputs or outputs of their respective steps in the SageMaker pipelines. Arrows have been color-coded based on pipeline step type to make them easier to read. The pipeline automatically uploads Python scripts from the GitLab repository and stores output files or model artifacts from each step in the appropriate S3 path.

The data engineer is responsible for the following:

- Uploading labeled training data to the appropriate path in Amazon S3. This includes adding new training data regularly to ensure the training pipeline and training with HPO pipeline have access to recent training data for model retraining and retuning, respectively.

- Uploading input data for inference to the appropriate path in the S3 bucket before a planned run of the inference pipeline.

- Uploading ground truth labels to the appropriate S3 path for model quality monitoring.

The data scientist is responsible for the following:

- Preparing ground truth labels and providing them to the data engineering team for uploading to Amazon S3.

- Taking the model versions trained by the training with HPO pipeline through the enterprise review process and obtaining necessary approvals.

- Manually approving or rejecting newly trained model versions in the model registry.

- Approving the production gate for the inference pipeline and supporting resources to be promoted to production.

Sample code

In this section, we present sample code for batch inference operations with a single-account setup as shown in the following architecture diagram. The sample code can be found in the GitHub repository, and can serve as a starting point for batch inference with model monitoring and automatic retraining using quality gates often required for enterprises. The sample code differs from the target architecture in the following ways:

- It uses a single AWS account for building and deploying the ML model and supporting resources. Refer to Organizing Your AWS Environment Using Multiple Accounts for guidance on multi-account setup on AWS.

- It uses a single GitLab CI/CD pipeline for building and deploying the ML model and supporting resources.

- When a new version of the model is trained and approved, the GitLab CI/CD pipeline is not triggered automatically and needs to be run manually by the responsible data scientist to update the SageMaker batch inference pipeline with the latest approved version of the model.

- It only supports S3 event-based triggers for running the SageMaker training and inference pipelines.

Prerequisites

You should have the following prerequisites before deploying this solution:

- An AWS account

- SageMaker Studio

- A SageMaker execution role with Amazon S3 read/write and AWS Key Management Service (AWS KMS) encrypt/decrypt permissions

- An S3 bucket for storing data, scripts, and model artifacts

- Terraform version 0.13.5 or greater

- GitLab with a working Docker runner for running the pipelines

- The AWS CLI

- jq

- unzip

- Python3 (Python 3.7 or greater) and the following Python packages:

  - boto3

  - sagemaker

  - pandas

  - pyyaml

Repository structure

The GitHub repository contains the following directories and files:

- /code/lambda_function/ – This directory contains the Python file for a Lambda function that prepares and sends notification messages (via Amazon SNS) about the SageMaker pipelines’ step state changes

- /data/ – This directory includes the raw data files (training, inference, and ground truth data)

- /env_files/ – This directory contains the Terraform input variables file

- /pipeline_scripts/ – This directory contains three Python scripts for creating and updating training, inference, and training with HPO SageMaker pipelines, as well as configuration files for specifying each pipeline’s parameters

- /scripts/ – This directory contains additional Python scripts (such as preprocessing and evaluation) that are referenced by the training, inference, and training with HPO pipelines

- .gitlab-ci.yml – This file specifies the GitLab CI/CD pipeline configuration

- /events.tf – This file defines EventBridge resources

- /lambda.tf – This file defines the Lambda notification function and the associated AWS Identity and Access Management (IAM) resources

- /main.tf – This file defines Terraform data sources and local variables

- /sns.tf – This file defines Amazon SNS resources

- /tags.json – This JSON file allows you to declare custom tag key-value pairs and append them to your Terraform resources using a local variable

- /variables.tf – This file declares all the Terraform variables
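To give a feel for what a notification function of this kind might do, here is a hedged sketch that turns a pipeline step state change event into an SNS message. It is not the Lambda code from the repository: the function names are hypothetical, the event field names are assumed from the SageMaker Pipelines step status change event, and the SNS client is passed in as an argument.

```python
import json


def build_notification(event: dict):
    """Return an (SNS subject, JSON message body) pair for a step state change."""
    detail = event.get("detail", {})
    step = detail.get("stepName", "unknown step")
    status = detail.get("currentStepStatus", "unknown status")
    # SNS subjects are limited to 100 characters.
    subject = f"SageMaker pipeline step {step}: {status}"[:100]
    message = json.dumps(
        {
            "pipelineArn": detail.get("pipelineArn"),
            "stepName": step,
            "currentStepStatus": status,
            "failureReason": detail.get("failureReason"),
        },
        indent=2,
    )
    return subject, message


def notify(sns_client, topic_arn: str, event: dict):
    """Publish the formatted notification to the given SNS topic."""
    subject, message = build_notification(event)
    return sns_client.publish(TopicArn=topic_arn, Subject=subject, Message=message)
```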

Variables and configuration

The following table shows the variables that are used to parameterize this solution. Refer to the ./env_files/dev_env.tfvars file for more details.

| Name | Description |
| --- | --- |
| bucket_name | S3 bucket that is used to store data, scripts, and model artifacts |
| bucket_prefix | S3 prefix for the ML project |
| bucket_train_prefix | S3 prefix for training data |
| bucket_inf_prefix | S3 prefix for inference data |
| notification_function_name | Name of the Lambda function that prepares and sends notification messages about SageMaker pipelines’ step state changes |
| custom_notification_config | The configuration for customizing the notification message for specific SageMaker pipeline steps when a specific pipeline run status is detected |
| email_recipient | The email address list for receiving SageMaker pipelines’ step state change notifications |
| pipeline_inf | Name of the SageMaker inference pipeline |
| pipeline_train | Name of the SageMaker training pipeline |
| pipeline_trainwhpo | Name of the SageMaker training with HPO pipeline |
| recreate_pipelines | If set to true, the three existing SageMaker pipelines (training, inference, training with HPO) will be deleted and new ones will be created when GitLab CI/CD is run |
| model_package_group_name | Name of the model package group |
| accuracy_mse_threshold | Maximum value of MSE before requiring an update to the model |
| role_arn | IAM role ARN of the SageMaker pipeline execution role |
| kms_key | KMS key ARN for Amazon S3 and SageMaker encryption |
| subnet_id | Subnet ID for SageMaker networking configuration |
| sg_id | Security group ID for SageMaker networking configuration |
| upload_training_data | If set to true, training data will be uploaded to Amazon S3, and this upload operation will trigger the run of the training pipeline |
| upload_inference_data | If set to true, inference data will be uploaded to Amazon S3, and this upload operation will trigger the run of the inference pipeline |
| user_id | The employee ID of the SageMaker user that is added as a tag to SageMaker resources |

Deploy the solution

Complete the following steps to deploy the solution in your AWS account:

- Clone the GitHub repository into your working directory.

- Review and modify the GitLab CI/CD pipeline configuration to suit your environment. The configuration is specified in the ./gitlab-ci.yml file.

- Refer to the README file to update the general solution variables in the ./env_files/dev_env.tfvars file. This file contains variables for both Python scripts and Terraform automation.

  - Check the additional SageMaker Pipelines parameters that are defined in the YAML files under ./batch_scoring_pipeline/pipeline_scripts/. Review and update the parameters if necessary.

- Review the SageMaker pipeline creation scripts in ./pipeline_scripts/ as well as the scripts that are referenced by them in the ./scripts/ folder. The example scripts provided in the GitHub repo are based on the Abalone dataset. If you are going to use a different dataset, make sure you update the scripts to suit your particular problem.

- Put your data files into the ./data/ folder using the following naming convention. If you are using the Abalone dataset along with the provided example scripts, ensure the data files are headerless, the training data includes both independent and target variables with the original order of columns preserved, the inference data only includes independent variables, and the ground truth file only includes the target variable.

  - training-data.csv

  - inference-data.csv

  - ground-truth.csv

- Commit and push the code to the repository to trigger the GitLab CI/CD pipeline run (first run). Note that the first pipeline run will fail at the pipeline stage because there’s no approved model version yet for the inference pipeline script to use. Review the step log and verify a new SageMaker pipeline named TrainingPipeline has been successfully created.

- Open the SageMaker Studio UI, then review and run the training pipeline.

- After the successful run of the training pipeline, approve the registered model version in the model registry, then rerun the entire GitLab CI/CD pipeline.

- Review the Terraform plan output in the build stage. Approve the manual apply stage in the GitLab CI/CD pipeline to resume the pipeline run and authorize Terraform to create the monitoring and notification resources in your AWS account.

- Finally, review the SageMaker pipelines’ run status and output in the SageMaker Studio UI and check your email for notification messages, as shown in the following screenshot. The default message body is in JSON format.
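The data file conventions from the steps above can be illustrated with a small helper that derives a headerless inference file and ground truth file from labeled rows. This is a sketch under one stated assumption: the target variable is the last column, which matches the original Abalone column order but may not hold for other datasets. The function names are hypothetical.

```python
import csv
import io


def split_labeled_rows(rows, target_index: int = -1):
    """Split labeled rows into feature-only rows and target-only rows."""
    features, targets = [], []
    for row in rows:
        row = list(row)
        # Remove the target column; the remaining columns keep their order.
        target = row.pop(target_index)
        features.append(row)
        targets.append([target])
    return features, targets


def to_headerless_csv(rows) -> str:
    """Serialize rows as CSV text without a header line."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# Two made-up rows in an Abalone-like layout (features first, target last).
labeled = [["M", "0.455", "15"], ["F", "0.53", "9"]]
inference_rows, ground_truth_rows = split_labeled_rows(labeled)
inference_csv = to_headerless_csv(inference_rows)        # inference-data.csv content
ground_truth_csv = to_headerless_csv(ground_truth_rows)  # ground-truth.csv content
```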

SageMaker pipelines

In this section, we describe the three SageMaker pipelines within the MLOps workflow.

Training pipeline

The training pipeline consists of the following steps:

- Preprocessing step, including feature transformation and encoding

- Data quality check step for generating data statistics and constraints baseline using the training data

- Training step

- Training evaluation step

- Condition step to check whether the trained model meets a pre-specified performance threshold

- Model registration step to register the newly trained model in the model registry if the trained model meets the required performance threshold
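The condition and registration steps above amount to a simple gate on an evaluation metric. The following sketch shows that logic in plain Python, outside of SageMaker Pipelines; the evaluation report layout (`regression_metrics` → `mse` → `value`) is an assumption for illustration, and the threshold corresponds to a value such as `accuracy_mse_threshold`.

```python
def should_register(evaluation_report: dict, mse_threshold: float) -> bool:
    """Return True when the evaluated MSE is within the allowed maximum."""
    # Assumed report layout; adapt the keys to your own evaluation script.
    mse = evaluation_report["regression_metrics"]["mse"]["value"]
    return mse <= mse_threshold


# Made-up evaluation report for illustration.
report = {"regression_metrics": {"mse": {"value": 4.2}}}
print(should_register(report, mse_threshold=6.0))
```

In the pipeline itself, the same comparison is expressed as a condition step, so the registration step only runs when the check passes.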

Both the skip_check_data_quality and register_new_baseline_data_quality parameters are set to True in the training pipeline. These parameters instruct the pipeline to skip the data quality check and just create and register new data statistics or constraints baselines using the training data. The following figure depicts a successful run of the training pipeline.

Batch inference pipeline

The batch inference pipeline consists of the following steps:

- Creating a model from the latest approved model version in the model registry

- Preprocessing step, including feature transformation and encoding

- Batch inference step

- Data quality check preprocessing step, which creates a new CSV file containing both input data and model predictions to be used for the data quality check

- Data quality check step, which checks the input data against baseline statistics and constraints associated with the registered model

- Condition step to check whether ground truth data is available. If ground truth data is available, the model quality check step will be performed

- Model quality calculation step, which calculates model performance based on ground truth labels

Both the skip_check_data_quality and register_new_baseline_data_quality parameters are set to False in the inference pipeline. These parameters instruct the pipeline to perform a data quality check using the data statistics or constraints baseline associated with the registered model (supplied_baseline_statistics_data_quality and supplied_baseline_constraints_data_quality) and skip creating or registering new data statistics and constraints baselines during inference. The following figure illustrates a run of the batch inference pipeline where the model quality check step has failed due to poor performance of the model on the inference data. In this particular case, the training with HPO pipeline will be triggered automatically to fine-tune the model.

Training with HPO pipeline

The training with HPO pipeline consists of the following steps:

- Preprocessing step (feature transformation and encoding)

- Data quality check step for generating data statistics and constraints baseline using the training data

- Hyperparameter tuning step

- Training evaluation step

- Condition step to check whether the trained model meets a pre-specified accuracy threshold

- Model registration step if the best trained model meets the required accuracy threshold

Both the skip_check_data_quality and register_new_baseline_data_quality parameters are set to True in the training with HPO pipeline. The following figure depicts a successful run of the training with HPO pipeline.
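The flag settings for the three pipelines can be summarized in a small lookup. The sketch below only restates the combinations described in this post; the dictionary keys and helper name are hypothetical and are not SageMaker SDK objects.

```python
from typing import NamedTuple


class DataQualityFlags(NamedTuple):
    skip_check_data_quality: bool
    register_new_baseline_data_quality: bool


# Flag values per pipeline, as described in this post.
PIPELINE_FLAGS = {
    "training": DataQualityFlags(True, True),
    "training_with_hpo": DataQualityFlags(True, True),
    "batch_inference": DataQualityFlags(False, False),
}


def describe(flags: DataQualityFlags) -> str:
    """Explain in words what a flag combination asks the pipeline to do."""
    check = (
        "skip the data quality check"
        if flags.skip_check_data_quality
        else "check data against the supplied baseline"
    )
    baseline = (
        "register a new baseline"
        if flags.register_new_baseline_data_quality
        else "keep the existing baseline"
    )
    return f"{check}; {baseline}"


for name, flags in PIPELINE_FLAGS.items():
    print(f"{name}: {describe(flags)}")
```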

Clean up

Complete the following steps to clean up your resources:

- Use the destroy stage in the GitLab CI/CD pipeline to remove all resources provisioned by Terraform.

- Use the AWS CLI to list and remove any remaining pipelines that are created by the Python scripts.

- Optionally, delete other AWS resources such as the S3 bucket or IAM role created outside the CI/CD pipeline.
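The list-and-remove step for leftover pipelines can also be done from Python. The sketch below uses the Boto3 `list_pipelines` and `delete_pipeline` calls in place of the AWS CLI; the helper name is hypothetical, and the pipeline names to delete must be supplied explicitly so that unrelated pipelines in the account are left alone.

```python
import uuid


def delete_pipelines(sm_client, pipeline_names):
    """Delete the named SageMaker pipelines that exist; return the deleted names."""
    existing = set()
    kwargs = {}
    while True:
        page = sm_client.list_pipelines(**kwargs)
        existing.update(s["PipelineName"] for s in page.get("PipelineSummaries", []))
        token = page.get("NextToken")
        if not token:
            break
        # Continue with the next page of results.
        kwargs = {"NextToken": token}

    deleted = []
    for name in pipeline_names:
        if name in existing:
            sm_client.delete_pipeline(
                PipelineName=name,
                ClientRequestToken=str(uuid.uuid4()),  # idempotency token
            )
            deleted.append(name)
    return deleted


# Usage (requires AWS credentials; TrainingPipeline is the name mentioned above):
#   import boto3
#   delete_pipelines(boto3.client("sagemaker"), ["TrainingPipeline"])
```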

Conclusion

In this post, we demonstrated how enterprises can create MLOps workflows for their batch inference jobs using Amazon SageMaker, Amazon EventBridge, AWS Lambda, Amazon SNS, HashiCorp Terraform, and GitLab CI/CD. The presented workflow automates data and model monitoring, model retraining, as well as batch job runs, code versioning, and infrastructure provisioning. This can lead to significant reductions in the complexities and costs of maintaining batch inference jobs in production. For more information about implementation details, review the GitHub repo.

About the Authors

Hasan Shojaei is a Sr. Data Scientist with AWS Professional Services, where he helps customers across different industries such as sports, insurance, and financial services solve their business challenges through the use of big data, machine learning, and cloud technologies. Prior to this role, Hasan led multiple initiatives to develop novel physics-based and data-driven modeling techniques for top energy companies. Outside of work, Hasan is passionate about books, hiking, photography, and history.

Wenxin Liu is a Sr. Cloud Infrastructure Architect. Wenxin advises enterprise companies on how to accelerate cloud adoption and supports their innovations on the cloud. He’s a pet lover and is passionate about snowboarding and traveling.

Vivek Lakshmanan is a Machine Learning Engineer at Amazon. He has a Master’s degree in Software Engineering with specialization in Data Science and several years of experience as an MLE. Vivek is excited about applying cutting-edge technologies and building AI/ML solutions for customers on the cloud. He is passionate about Statistics, NLP and Model Explainability in AI/ML. In his spare time, he enjoys playing cricket and taking road trips.

Andy Cracchiolo is a Cloud Infrastructure Architect. With more than 15 years in IT infrastructure, Andy is an accomplished and results-driven IT professional. In addition to optimizing IT infrastructure, operations, and automation, Andy has a proven track record of analyzing IT operations, identifying inconsistencies, and implementing process improvements that increase efficiency, reduce costs, and increase income.
