Intel Particulars Extra on Granite Rapids and Sierra Forest Xeons

With the annual Scorching Chips convention happening this week, most of the trade’s greatest chip design companies are on the present, speaking about their newest and/or upcoming wares. For Intel, it’s a case of the latter, as the corporate is at Scorching Chips to speak about its subsequent era of Xeon processors, Granite Rapids and Sierra Forest, that are set to launch in 2024. Intel has beforehand revealed this processors on its information middle roadmap – most not too long ago updating it in March of this year – and for Scorching Chips the corporate is providing a bit extra in the best way of technical particulars for the chips and their shared platform.

Whereas there’s no such factor as an “unimportant” era for Intel’s Xeon processors, Granite Rapids and Sierra Forest promise to be one in all Intel’s most necessary up to date to the Xeon Scalable {hardware} ecosystem but, because of the introduction of area-efficient E-cores. Already a mainstay on Intel’s client processors since 12th era Core (Alder Lake), with the upcoming subsequent era Xeon Scalable platform will lastly carry E-cores over to Intel’s server platform. Although in contrast to client elements the place each core varieties are combined in a single chip, Intel goes for a purely homogenous technique, giving us the all P-core Granite Rapids, and the all E-core Sierra Forest.

As Intel’s first E-core Xeon Scalable chip for information middle use, Sierra Forest is arguably crucial of the 2 chips. Fittingly, it’s Intel’s lead car for his or her EUV-based Intel 3 course of node, and it’s the primary Xeon to return out. In accordance with the corporate, it stays on monitor for a H1’2024 launch. In the meantime Granite Rapids might be “shortly” behind that, on the identical Intel 3 course of node.

As Intel’s slated to ship two moderately completely different Xeons in a single era, an enormous ingredient of the following era Xeon Scalable platform is that each processors will share the identical platform. This implies the identical socket(s), the identical reminiscence, the identical chiplet-based design philosophy, the identical firmware, and so forth. Whereas there are nonetheless variations, significantly in relation to AVX-512 help, Intel is attempting to make these chips as interchangeable as attainable.

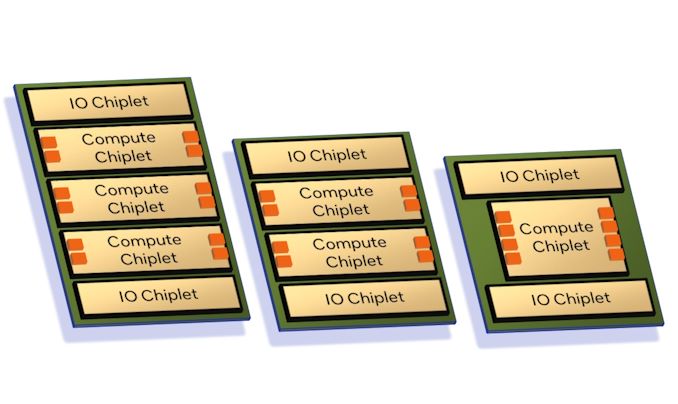

As introduced by Intel again in 2024, each Granite and Sierra are chiplet-based designs, counting on a mixture of compute and I/O chiplets which might be stitched collectively utilizing Intel’s lively EMIB bridge expertise. Whereas this isn’t Intel’s first dance with chiplets within the Xeon house (XCC Sapphire Rapids takes that honor), this can be a distinct evolution of the chiplet design through the use of distinct compute/IO chiplets as an alternative of sewing collectively in any other case “full” Xeon chiplets. Amongst different issues, which means Granite and Sierra can share the widespread I/O chiplet (constructed on the Intel 7 course of), and from a producing standpoint, whether or not a Xeon is Granite or Sierra is “merely” a matter of which sort of compute chiplet is positioned down.

Notably right here, Intel is confirming for the primary time that the following gen Xeon Scalable platform is getting self-booting capabilities, making it a real SoC. With Intel putting the entire crucial I/O options wanted for operation throughout the I/O chiplets, an exterior chipset (or FPGA) will not be wanted to function these processors. This brings Intel’s Xeon lineup nearer in performance to AMD’s EPYC lineup, which has been equally self-booting for some time now.

Altogether, the following gen Xeon Scalable platform will help as much as 12 reminiscence channels, scaling with the quantity and capabilities of the compute dies current. As beforehand revealed by Intel, this platform would be the first to help the brand new Multiplexer Mixed Ranks (MCR) DIMM, which basically gangs up two units/ranks of reminiscence chips so as to double the efficient bandwidth to and from the DIMM. With the mix of upper reminiscence bus speeds and extra reminiscence channels total, Intel says the platform can provide 2.8x as a lot bandwidth as present Sapphire Rapids Xeons.

As for I/O, a max configuration Xeon will be capable of provide as much as 136 lanes common I/O, in addition to as much as 6 UPI hyperlinks (144 lanes in whole) for multi-socket connectivity. For I/O, the platform helps PCIe 5.0 (why no PCIe 6.0? We have been informed the timing didn’t work out), in addition to the newer CXL 2.0 commonplace. As is historically the case for Intel’s big-core Xeons, Granite Rapids chips will be capable of scale as much as 8 sockets altogether. Sierra Forest, however, will solely be capable of scale as much as 2 sockets, owing to the variety of CPU cores in play in addition to the completely different use circumstances Intel is anticipating of their clients.

Together with particulars on the shared platform, Intel can be providing for the primary time a high-level overview of the architectures used for the E-cores and the P-cores. As has been the case for a lot of generations of Xeons now, Intel is leveraging the identical fundamental CPU structure that goes into their client elements. So Granite and Sierra could be regarded as a deconstructed Meteor Lake processor, with Granite getting the Redwood Cove P-cores, whereas Sierra will get the Crestmont E-Cores.

As famous earlier than, that is Intel’s first foray into providing E-cores for the Xeon market. Which for Intel, has meant tuning their E-core design for information middle workloads, versus the consumer-centric workloads that outlined the earlier era E-core design.

Whereas not a deep-dive on the structure itself, Intel is revealing that Crestmont is providing a 6-wide instruction decode pathway in addition to an 8-wide retirement backend. Whereas not as beefy as Intel’s P-cores, the E-core will not be by any means a light-weight core, and Intel’s design choices mirror this. Nonetheless, it’s designed to be way more environment friendly each by way of die house and vitality consumption than the P cores that may go into Granite.

The L1 instruction cache (I-cache) for Crestmont might be 64KB, twice the scale because the I-cache on earlier designs. It’s uncommon for Intel to the touch I-cache capability (attributable to balancing hit charges with latency), so this can be a notable change and will probably be fascinating to see the ramifications as soon as Intel talks extra about structure.

In the meantime, new to the E-core lineup with Crestmont, the cores can both be packaged into 2 or 4 core clusters, in contrast to Gracemont right this moment, which is simply out there as a 4 core cluster. That is basically how Intel goes to regulate the ratio of L2 cache to CPU cores; with 4MB of shared L2 whatever the configuration, a 2-core cluster affords every core twice as a lot L2 per core as they’d in any other case get. This basically provides Intel one other knob to regulate for chip efficiency; clients who want a barely increased performing Sierra design (moderately than simply maxing out the variety of CPU cores) can as an alternative get fewer cores with the upper efficiency that comes from the successfully bigger L2 cache.

And at last for Sierra/Crestmont, the chip will provide as near instruction parity with Granite Rapids as attainable. This implies BF16 information kind help, in addition to help for varied instruction units reminiscent of AVX-IFMA and AVX-DOT-PROD-INT8. The one factor you gained’t discover right here, in addition to an AMX matrix engine, is help for AVX-512; Intel’s ultra-wide vector format will not be part of Crestmont’s function set. Finally, AVX10 will help to take care of this problem, however for now that is as shut as Intel can get to parity between the 2 processors.

In the meantime, for Granite Rapids we’ve got the Redwood Cove P-core. The normal coronary heart of a Xeon processor, Redwood/Granite aren’t as huge of a change for Intel as Sierra Forest is. However that doesn’t imply they’re sitting idly by.

By way of microarchitecture, Redwood Cove is getting the identical 64KB I-cache as we noticed on Crestmont, which is 2x the capability of its predecessor. However most notably right here, Intel has managed to additional shave down the latency of floating-point multiplication, bringing it from 4/5 cycles down to only 3 cycles. Elementary instruction latency enhancements like these are uncommon, so that they’re at all times welcome to see.

In any other case, the remaining highlights of the Redwood Cove microarchitecture are department prediction and prefetching, that are typical optimization targets for Intel. Something they will do to enhance department prediction (and cut back the price of uncommon misses) tends to pay comparatively huge dividends by way of efficiency.

Extra relevant to the Xeon household particularly, the AMX matrix engine for Redwood Cove is gaining FP16 help. FP16 isn’t as fairly as closely used because the already-supported BF16 and INT8, but it surely’s an enchancment to AMX’s flexibility total.

Reminiscence encryption help can be being improved. Granite Rapids’ taste of Redwood Cove will help 2048, 256-bit reminiscence keys, up from 128 keys on Sapphire Rapids. Cache Allocation Expertise (CAT) and Code and Information Prioritization (CDP) performance are additionally getting some enhancements right here, with Intel extending them to have the ability to management what goes in to the L2 cache, versus simply the LLC/L3 cache in earlier implementations.

Finally, it goes with out saying that Intel believes they’re well-positioned for 2024 and past with their upcoming Xeons. By enhancing efficiency on the top-end P-core Xeons, whereas introducing E-core Xeons for patrons who simply want plenty of lighter CPU cores, Intel believes they will tackle the whole market with two CPU core varieties sharing a single widespread platform.

Whereas it’s nonetheless too early to speak about particular person SKUs for Granite Rapids and Sierra Forest, Intel has informed us that core counts total are going up. Granite Rapids elements will provide extra CPU cores than Sapphire Rapids (up from 60 for SPR XCC), and, after all, at 144 cores Sierra will provide much more than that. Notably, nonetheless, Intel gained’t be segmenting the 2 CPU traces by core counts – Sierra Forest might be out there in smaller core counts as nicely (in contrast to AMD’s EPYC Zen4c Bergamo chips). This displays the completely different efficiency capabilities of the P and E cores, and, little question, Intel seeking to totally embrace the scalability that comes from utilizing chiplets.

And whereas Sierra Forest will already go to 144 CPU cores, Intel additionally made an fascinating remark in our pre-briefing that they might have gone increased with core counts for his or her first E-core Xeon Scalable processor. However the firm determined to prioritize per-core efficiency a bit extra, ensuing within the chips and core counts we’ll be seeing subsequent 12 months.

Above all else – and, maybe, letting advertising and marketing take the wheel slightly too lengthy right here for Scorching Chips – Intel is hammering residence the truth that their next-generation Xeon processors stay on-track for his or her 2024 launch. It goes with out saying that Intel is simply now recovering from the large delays in Sapphire Rapids (and knock-on impact to Emerald Rapids), so the corporate is eager to guarantee clients that Granite Rapids and Sierra Forest is the place Intel’s timing will get again on monitor. Between earlier Xeon delays and taking so lengthy to carry to market an E-core Xeon Scalable chip, Intel hasn’t dominated within the information middle market prefer it as soon as did, so Granite Rapids and Sierra Forest are going to mark an necessary inflection level for Intel’s information middle choices going ahead.