MIT and Harvard Researchers Propose (FAn): A Comprehensive AI System that Bridges the Gap between SOTA Computer Vision and Robotic Systems - Providing an End-to-End Solution for Segmenting, Detecting, Tracking, and Following any Object

In new AI research, a team of researchers from MIT and Harvard University has introduced a groundbreaking framework called “Follow Anything” (FAn). The system addresses the limitations of current object-following robotic systems and presents an innovative solution for real-time, open-set object tracking and following.

Existing robotic object-following systems have two main shortcomings: they can only handle a fixed set of recognized object categories, so new objects cannot be accommodated, and specifying the target object is not user-friendly. FAn tackles both issues with an open-set approach that can detect, segment, track, and follow a wide range of objects, and it adapts to novel objects specified through text, images, or click queries.
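One way to picture open-set selection is as a similarity search: instead of checking a detection against a fixed label list, the system compares a query embedding against embeddings of candidate segments. The sketch below assumes that the query (text, image crop, or clicked region) and each segmented region have already been encoded into feature vectors, for example by a CLIP- or DINO-style encoder. The names `select_target` and `cosine_similarity`, the 0.5 threshold, and the plain-list vectors are hypothetical illustrations, not FAn's actual code.

```python
import math
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector carries no direction to compare
    return dot / (norm_a * norm_b)


def select_target(query: Sequence[float],
                  segment_features: Sequence[Sequence[float]],
                  threshold: float = 0.5) -> Optional[int]:
    """Return the index of the segment most similar to the query, or None
    if no segment reaches the (hypothetical) similarity threshold."""
    best_index: Optional[int] = None
    best_score = threshold
    for i, feature in enumerate(segment_features):
        score = cosine_similarity(query, feature)
        if score >= best_score:
            best_index, best_score = i, score
    return best_index
```

Because the query is just another vector, any modality that can be mapped into the same embedding space can select the target, which is what makes the set of followable objects open rather than fixed.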

The core features of the proposed FAn system can be summarized as follows:

Open-Set Multimodal Approach: FAn introduces a novel method that enables real-time detection, segmentation, tracking, and following of any object in a given environment, regardless of its category.

Unified Deployment: The system is designed for easy deployment on robotic platforms, with a focus on micro aerial vehicles, enabling efficient integration into practical applications.

Robustness: The system incorporates re-detection mechanisms to handle scenarios where tracked objects are occluded or temporarily lost during tracking.
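The re-detection behaviour can be sketched as a loop that trusts a fast tracker while its confidence stays high and falls back to the slower open-set detector when the target is occluded or lost. This is a minimal sketch under stated assumptions: the `Tracker` and `Detector` callables, the `TrackResult` and `Box` types, and the `min_confidence` value of 0.6 are hypothetical stand-ins for models such as (Seg)AOT, SiamMask, and a SAM-based detector, not FAn's real interfaces.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A bounding box as (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class TrackResult:
    box: Optional[Box]  # None when the tracker reports the target as lost
    confidence: float   # tracker confidence in [0, 1]


# Hypothetical model interfaces; frames are treated as opaque objects.
Tracker = Callable[[object, Box], TrackResult]
Detector = Callable[[object], Optional[Box]]


def follow_sequence(frames: List[object], initial_box: Box,
                    track: Tracker, detect: Detector,
                    min_confidence: float = 0.6) -> List[Optional[Box]]:
    """Return one box per frame (None if the target could not be found)."""
    boxes: List[Optional[Box]] = []
    last_box: Optional[Box] = initial_box
    for frame in frames:
        # Only run the tracker if we still have a box to track from.
        result = track(frame, last_box) if last_box is not None else None
        reliable = (result is not None and result.box is not None
                    and result.confidence >= min_confidence)
        if reliable:
            last_box = result.box     # tracker is confident: keep its estimate
        else:
            last_box = detect(frame)  # occluded or lost: re-detect from scratch
        boxes.append(last_box)
    return boxes
```

Splitting the work this way keeps the expensive detector off the per-frame critical path and only invokes it when the cheap tracker signals that it has lost the target.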

The fundamental objective of the FAn system is to enable robotic systems equipped with onboard cameras to identify and follow objects of interest. This involves keeping the object within the camera’s field of view as the robot moves.
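Keeping the object in view starts with measuring where the target sits in the image. The minimal sketch below assumes the segmentation stage yields a binary mask given as a list of rows of 0/1 values. It computes the mask centroid and its offset from the image centre, scaled to roughly [-1, 1], which is the error a follow controller would try to drive to zero. The function name `mask_offset` and the mask format are illustrative assumptions.

```python
from typing import List, Optional, Tuple


def mask_offset(mask: List[List[int]]) -> Optional[Tuple[float, float]]:
    """Return the target's normalized (x, y) offset from the image centre.

    Negative x means the target is left of centre; negative y means it is
    above centre (image rows grow downward). Returns None for an empty mask,
    i.e. the target is not visible in this frame.
    """
    height, width = len(mask), len(mask[0])
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    cx, cy = sum_x / count, sum_y / count
    # Pixel centres run from 0 to width - 1, so the image centre is at
    # (width - 1) / 2; dividing by width / 2 keeps results within [-1, 1].
    return ((cx - (width - 1) / 2) / (width / 2),
            (cy - (height - 1) / 2) / (height / 2))
```

Returning `None` for an empty mask gives the caller an explicit "target not visible" signal, which is the cue for falling back to re-detection rather than steering on stale data.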

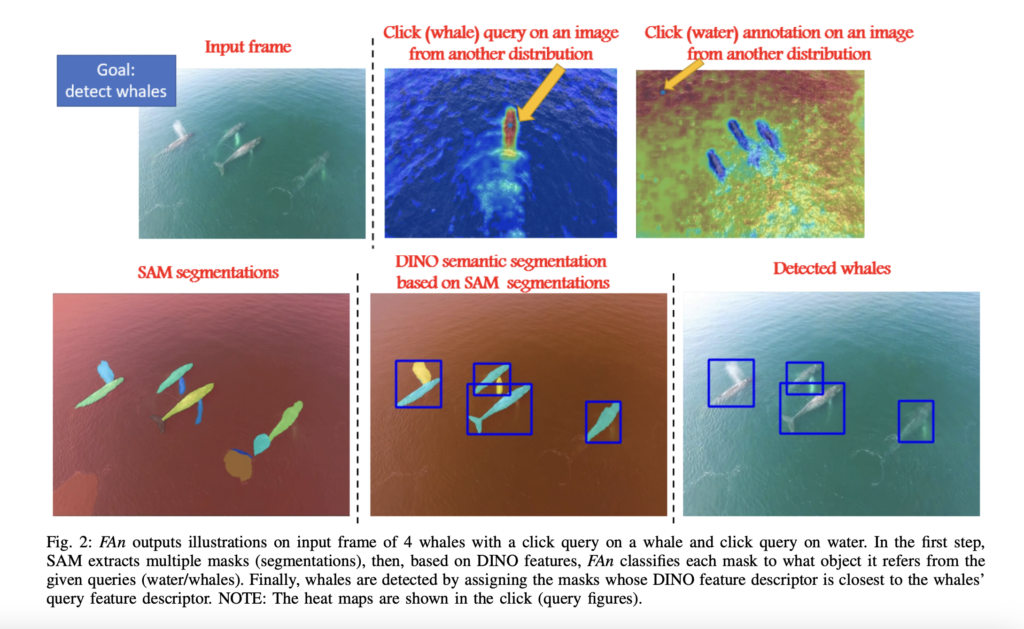

FAn leverages state-of-the-art Vision Transformer (ViT) models to achieve this objective. These models are optimized for real-time processing and combined into a cohesive system. The researchers exploit the strengths of several models: the Segment Anything Model (SAM) for segmentation, DINO and CLIP for extracting visual features (with CLIP also linking visual concepts to natural language), and a lightweight detection and semantic segmentation scheme. Real-time tracking is handled by the (Seg)AOT and SiamMask models. A lightweight visual servoing controller is also introduced to govern the object-following process.
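The role of a visual servoing controller can be illustrated with a simple proportional controller: it turns the target's offset from the image centre into yaw and vertical velocity commands, and uses the bounding box's apparent size as a rough distance cue for forward speed. This is a sketch, not FAn's controller: the gains, the desired box fraction of 0.1, the speed limits, the sign conventions, and the `VelocityCommand` type are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class VelocityCommand:
    forward: float   # m/s, positive moves toward the target
    vertical: float  # m/s, positive moves up
    yaw_rate: float  # rad/s, positive turns left (counter-clockwise)


def clamp(value: float, limit: float) -> float:
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def servo_command(box: Tuple[float, float, float, float],
                  image_width: int, image_height: int,
                  desired_area_fraction: float = 0.1,
                  k_yaw: float = 1.0, k_vertical: float = 0.8,
                  k_forward: float = 2.0, max_speed: float = 1.0,
                  max_yaw_rate: float = 1.0) -> VelocityCommand:
    """Proportional visual servoing from a box (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    # Normalized offset of the box centre from the image centre, in [-1, 1].
    ex = ((x_min + x_max) / 2 - image_width / 2) / (image_width / 2)
    ey = ((y_min + y_max) / 2 - image_height / 2) / (image_height / 2)
    # Fraction of the image covered by the box: a crude proxy for distance.
    area_fraction = (x_max - x_min) * (y_max - y_min) / (image_width * image_height)
    return VelocityCommand(
        # Target looks small (far away): move forward; looks large: back off.
        forward=clamp(k_forward * (desired_area_fraction - area_fraction), max_speed),
        # Image y grows downward, so a target below centre (ey > 0) means descend.
        vertical=clamp(-k_vertical * ey, max_speed),
        # Target right of centre (ex > 0): turn right, i.e. negative yaw rate.
        yaw_rate=clamp(-k_yaw * ex, max_yaw_rate),
    )
```

For example, `servo_command((224, 160, 416, 320), 640, 480)` describes a centred box covering exactly 10% of the image, so all three commands come out as zero. Clamping each output keeps a large pixel error from producing an unsafe velocity on a small aerial vehicle.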

The researchers conducted comprehensive experiments to evaluate FAn’s performance on a variety of objects in zero-shot detection, tracking, and following scenarios. The results showed that the system can follow objects of interest smoothly and efficiently in real time.

In conclusion, the FAn framework is a comprehensive solution for real-time object tracking and following that overcomes the limitations of closed-set systems. Its open-set nature, multimodal queries, real-time processing, and adaptability to new environments make it a significant advance in robotics. Moreover, the team’s commitment to open-sourcing the system underscores its potential to benefit a wide range of real-world applications.

Check out the Paper and GitHub. All credit for this research goes to the researchers on this project.

Niharika is a Technical Consulting Intern at Marktechpost. She is a third-year undergraduate currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a highly enthusiastic individual with a keen interest in machine learning, data science, and AI, and an avid reader of the latest developments in these fields.