Section Something Mannequin: Basis Mannequin for Picture Segmentation

Segmentation, the method of figuring out picture pixels that belong to things, is on the core of pc imaginative and prescient. This course of is utilized in functions from scientific imaging to picture modifying, and technical consultants should possess each extremely expert talents and entry to AI infrastructure with giant portions of annotated knowledge for correct modeling.

Meta AI lately unveiled its Segment Anything project? which is ?a picture segmentation dataset and mannequin with the Section Something Mannequin (SAM) and the SA-1B mask dataset?—?the biggest ever segmentation dataset assist additional analysis in basis fashions for pc imaginative and prescient. They made SA-1B out there for analysis use whereas the SAM is licensed beneath Apache 2.0 open license for anybody to attempt SAM along with your pictures utilizing this demo!

Section Something Mannequin / Picture by Meta AI

Earlier than, segmentation issues had been approached utilizing two lessons of approaches:

- Interactive segmentation by which the customers information the segmentation process by iteratively refining a masks.

- Automated segmentation allowed selective object classes like cats or chairs to be segmented mechanically but it surely required giant numbers of annotated objects for coaching (i.e. hundreds and even tens of hundreds of examples of segmented cats) together with computing sources and technical experience to coach a segmentation mannequin neither strategy offered a normal, absolutely automated answer to segmentation.

SAM makes use of each interactive and automated segmentation in a single mannequin. The proposed interface permits versatile utilization, making a variety of segmentation duties doable by engineering the suitable immediate (equivalent to clicks, bins, or textual content).

SAM was developed utilizing an expansive, high-quality dataset containing multiple billion masks collected as a part of this undertaking, giving it the aptitude of generalizing to new varieties of objects and pictures past these noticed throughout coaching. In consequence, practitioners not want to gather their segmentation knowledge and tailor a mannequin particularly to their use case.

These capabilities allow SAM to generalize each throughout duties and domains one thing no different picture segmentation software program has executed earlier than.

SAM comes with highly effective capabilities that make the segmentation process simpler:

- Number of enter prompts: Prompts that direct segmentation enable customers to simply carry out completely different segmentation duties with out extra coaching necessities. You possibly can apply segmentation utilizing interactive factors and bins, mechanically section every little thing in a picture, and generate a number of legitimate masks for ambiguous prompts. Within the determine beneath we will see the segmentation is completed for sure objects utilizing an enter textual content immediate.

Bounding field utilizing textual content immediate.

- Integration with different methods: SAM can settle for enter prompts from different methods, equivalent to sooner or later taking the consumer’s gaze from an AR/VR headset and deciding on objects.

- Extensible outputs: The output masks can function inputs to different AI methods. As an illustration, object masks might be tracked in movies, enabled imaging modifying functions, lifted into 3D area, and even used creatively equivalent to collating

- Zero-shot generalization: SAM has developed an understanding of objects which permits him to shortly adapt to unfamiliar ones with out extra coaching.

- A number of masks technology: SAM can produce a number of legitimate masks when confronted with uncertainty concerning an object being segmented, offering essential help when fixing segmentation in real-world settings.

- Actual-time masks technology: SAM can generate a segmentation masks for any immediate in actual time after precomputing the picture embedding, enabling real-time interplay with the mannequin.

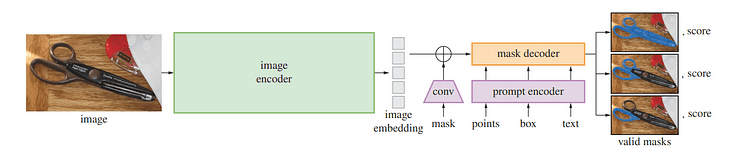

Overview of SAM mannequin / Picture by Segment Anything

One of many current advances in pure language processing and pc imaginative and prescient has been basis fashions that allow zero-shot and few-shot studying for brand spanking new datasets and duties by way of “prompting”. Meta AI researchers skilled SAM to return a sound segmentation masks for any immediate, equivalent to foreground/background factors, tough bins/masks or masks, freeform textual content, or any data indicating the goal object inside a picture.

A sound masks merely implies that even when the immediate might seek advice from a number of objects (as an example: one level on a shirt might characterize each itself or somebody sporting it), its output ought to present an inexpensive masks for one object solely?—?thus pre-training the mannequin and fixing normal downstream segmentation duties through prompting.

The researchers noticed that pretraining duties and interactive knowledge assortment imposed particular constraints on mannequin design. Most importantly, real-time simulation should run effectively on a CPU in an internet browser to permit annotators to make use of SAM interactively in real-time for environment friendly annotation. Though runtime constraints resulted in tradeoffs between high quality and runtime constraints, easy designs produced passable ends in apply.

Beneath SAM’s hood, a picture encoder generates a one-time embedding for pictures whereas a light-weight encoder converts any immediate into an embedding vector in real-time. These data sources are then mixed by a light-weight decoder that predicts segmentation masks primarily based on picture embeddings computed with SAM, so SAM can produce segments in simply 50 milliseconds for any given immediate in an internet browser.

Constructing and coaching the mannequin requires entry to an unlimited and various pool of information that didn’t exist initially of coaching. Right this moment’s segmentation dataset launch is by far the biggest to this point. Annotators used SAM interactively annotate pictures earlier than updating SAM with this new knowledge?—?repeating this cycle many instances to repeatedly refine each the mannequin and dataset.

SAM makes amassing segmentation masks quicker than ever, taking solely 14 seconds per masks annotated interactively; that course of is barely two instances slower than annotating bounding bins which take solely 7 seconds utilizing quick annotation interfaces. Comparable large-scale segmentation knowledge assortment efforts embody COCO absolutely handbook polygon-based masks annotation which takes about 10 hours; SAM model-assisted annotation efforts had been even quicker; its annotation time per masks annotated was 6.5x quicker versus 2x slower when it comes to knowledge annotation time than earlier mannequin assisted giant scale knowledge annotations efforts!

Interactively annotating masks is inadequate to generate the SA-1B dataset; thus a knowledge engine was developed. This knowledge engine accommodates three “gears”, beginning with assisted annotators earlier than shifting onto absolutely automated annotation mixed with assisted annotation to extend the variety of collected masks and at last absolutely automated masks creation for the dataset to scale.

SA-1B’s closing dataset options greater than 1.1 billion segmentation masks collected on over 11 million licensed and privacy-preserving pictures, making up 4 instances as many masks than any current segmentation dataset, based on human analysis research. As verified by these human assessments, these masks exhibit top quality and variety in contrast with earlier manually annotated datasets with a lot smaller pattern sizes.

Pictures for SA-1B had been obtained through a picture supplier from a number of international locations that represented completely different geographic areas and earnings ranges. Whereas sure geographic areas stay underrepresented, SA-1B supplies better illustration as a consequence of its bigger variety of pictures and general higher protection throughout all areas.

Researchers carried out assessments aimed toward uncovering any biases within the mannequin throughout gender presentation, pores and skin tone notion, the age vary of individuals in addition to the perceived age of individuals introduced, discovering that the SAM mannequin carried out equally throughout numerous teams. They hope this may make the ensuing work extra equitable when utilized in real-world use circumstances.

Whereas SA-1B enabled the analysis output, it may well additionally allow different researchers to coach basis fashions for picture segmentation. Moreover, this knowledge might change into the muse for brand spanking new datasets with extra annotations.

Meta AI researchers hope that by sharing their analysis and dataset, they will speed up the analysis in picture segmentation and picture and video understanding. Since this segmentation mannequin can carry out this operate as a part of bigger methods.

On this article, we lined what’s SAM and its functionality and use circumstances. After that, we went by way of the way it works, and the way it was skilled in order to provide an summary of the mannequin. Lastly, we conclude the article with the longer term imaginative and prescient and work. If you need to know extra about SAM be certain to learn the paper and take a look at the demo.

Youssef Rafaat is a pc imaginative and prescient researcher & knowledge scientist. His analysis focuses on growing real-time pc imaginative and prescient algorithms for healthcare functions. He additionally labored as a knowledge scientist for greater than 3 years within the advertising and marketing, finance, and healthcare area.