Reworking AI Interplay: LLaVAR Outperforms in Visible and Textual content-Based mostly Comprehension, Marking a New Period in Multimodal Instruction-Following Fashions

By combining a number of actions into one instruction, instruction tuning enhances generalization to new duties. Such capability to answer open-ended questions has contributed to the latest chatbot explosion since ChatGPT 2. Visible encoders like CLIP-ViT have just lately been added to dialog brokers as a part of visible instruction-tuned fashions, permitting for human-agent interplay primarily based on footage. Nonetheless, they need assistance comprehending textual content inside photos, perhaps as a result of coaching information’s predominance of pure imagery (e.g., Conceptual Captions and COCO). Nonetheless, studying comprehension is crucial for every day visible notion in people. Luckily, OCR methods make it attainable to acknowledge phrases from photographs.

The computation (bigger context lengths) is elevated (naively) by including acknowledged texts to the enter of visible instruction-tuned fashions with out fully utilizing the encoding capability of visible encoders. To do that, they recommend gathering instruction-following information that necessitates comprehension of phrases inside footage to enhance the visible instruction-tuned mannequin end-to-end. By combining manually given instructions (akin to “Establish any textual content seen within the picture supplied.”) with the OCR outcomes, they particularly first collect 422K noisy instruction-following information utilizing text-rich3 photos.

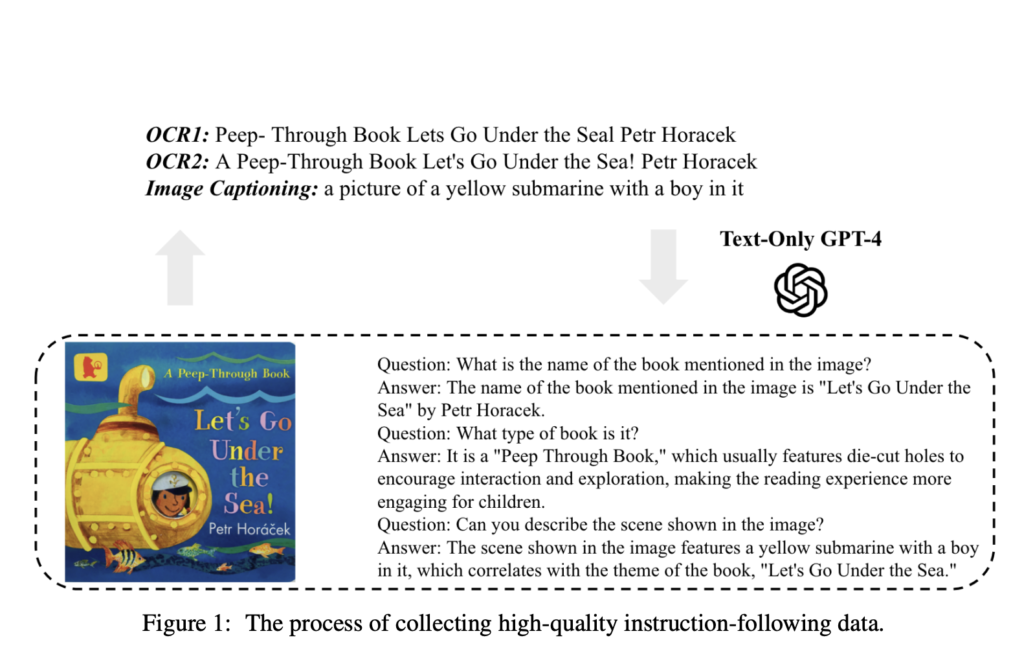

These large noisy-aligned information considerably improve the characteristic alignment between the language decoder and the visible options. Moreover, they ask text-only GPT-4 to provide 16K conversations utilizing OCR outcomes and picture captions as high-quality examples of tips on how to observe directions. Every dialog might comprise many turns of question-and-answer pairs. To provide subtle directions relying on the enter, this method necessitates that GPT-4 denoise the OCR information and create distinctive questions (Determine 1). They complement the pretraining and finetuning levels of LLaVA correspondingly utilizing noisy and high-quality examples to evaluate the efficacy of the information that has been obtained.

Researchers from Georgia Tech, Adobe Analysis, and Stanford College develop LLaVAR, which stands for Massive Language and Imaginative and prescient Assistant that Can Learn. To raised encode minute textual options, they experiment with scaling the enter decision from 2242 to 3362 in comparison with the unique LLaVA. Based on the evaluation method, empirically, they provide the findings on 4 text-based VQA datasets along with the ScienceQA finetuning outcomes. Moreover, they use 50 text-rich footage from LAION and 30 pure photos from COCO within the GPT-4-based instruction-following evaluation. Moreover, they provide qualitative evaluation to measure extra subtle instruction-following talents (e.g., on posters, web site screenshots, and tweets).

In conclusion, their contributions embody the next:

• They collect 16K high-quality and 422K noisy instruction-following information. Each have been demonstrated to enhance visible instruction tuning. The improved capability permits their mannequin, LLaVAR, to ship end-to-end interactions primarily based on various on-line materials, together with textual content and pictures, whereas solely modestly enhancing the mannequin’s efficiency on pure photographs.

• The coaching and evaluation information, in addition to the mannequin milestones, are made publicly accessible.

Aneesh Tickoo is a consulting intern at MarktechPost. He’s at present pursuing his undergraduate diploma in Knowledge Science and Synthetic Intelligence from the Indian Institute of Know-how(IIT), Bhilai. He spends most of his time engaged on tasks geared toward harnessing the facility of machine studying. His analysis curiosity is picture processing and is enthusiastic about constructing options round it. He loves to attach with individuals and collaborate on attention-grabbing tasks.