Safe image generation and diffusion models with Amazon AI content moderation services

Generative AI technology is improving rapidly, and it’s now possible to generate text and images based on text input. Stable Diffusion is a text-to-image model that empowers you to create photorealistic applications. You can easily generate images from text using Stable Diffusion models through Amazon SageMaker JumpStart.

The following are examples of input texts and the corresponding output images generated by Stable Diffusion. The inputs are “A boxer dancing on a table,” “A woman on the beach in swimwear, watercolor style,” and “A dog in a suit.”

Although generative AI solutions are powerful and useful, they can also be vulnerable to manipulation and abuse. Customers using them for image generation must prioritize content moderation: strong moderation practices create a safe and positive user experience and protect the platform and brand reputation.

In this post, we explore using the AWS AI services Amazon Rekognition and Amazon Comprehend, along with other techniques, to effectively moderate Stable Diffusion model-generated content in near-real time. To learn how to launch and generate images from text using a Stable Diffusion model on AWS, refer to Generate images from text with the stable diffusion model on Amazon SageMaker JumpStart.

Solution overview

Amazon Rekognition and Amazon Comprehend are managed AI services that provide pre-trained and customizable ML models via an API interface, eliminating the need for machine learning (ML) expertise. Amazon Rekognition Content Moderation automates and streamlines image and video moderation. Amazon Comprehend uses ML to analyze text and uncover valuable insights and relationships.

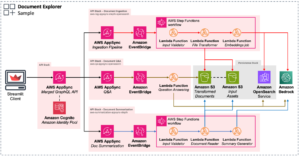

The following reference illustrates the creation of a RESTful proxy API for moderating Stable Diffusion text-to-image model-generated images in near-real time. In this solution, we launched and deployed a Stable Diffusion model (v2-1 base) using JumpStart. The solution uses negative prompts and text moderation solutions such as Amazon Comprehend and a rule-based filter to moderate input prompts. It also uses Amazon Rekognition to moderate the generated images. The RESTful API returns the generated image to the client, along with moderation warnings if unsafe information is detected.

The steps in the workflow are as follows:

- The user sends a prompt to generate an image.

- An AWS Lambda function coordinates image generation and moderation using Amazon Comprehend, JumpStart, and Amazon Rekognition:

  - Apply a rule-based condition to input prompts in the Lambda function, enforcing content moderation with forbidden word detection.

  - Use the Amazon Comprehend custom classifier to analyze the prompt text for toxicity classification.

  - Send the prompt to the Stable Diffusion model through the SageMaker endpoint, passing both the prompts from user input and the negative prompts from a predefined list.

  - Send the image bytes returned from the SageMaker endpoint to the Amazon Rekognition DetectModerationLabels API for image moderation.

  - Construct a response message that includes the image bytes and warnings if the previous steps detected any inappropriate information in the prompt or generated image.

- Send the response back to the client.
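The workflow steps above can be sketched as a single coordinating function. This is a minimal illustration rather than the code of the sample solution: the function name `moderate_and_generate` and the four callables it receives are hypothetical stand-ins for the rule-based filter, the Amazon Comprehend classifier, the SageMaker endpoint call, and the Amazon Rekognition call.

```python
import base64
from typing import Callable, Dict, List


def moderate_and_generate(
    prompt: str,
    check_keywords: Callable[[str], List[str]],
    check_toxicity: Callable[[str], List[str]],
    generate_image: Callable[[str], bytes],
    check_image: Callable[[bytes], List[str]],
) -> Dict[str, object]:
    """Run the moderation and generation steps in order, collecting warnings.

    All four callables are hypothetical hooks; each checker returns a list of
    warning strings (empty when nothing was detected).
    """
    warnings: List[str] = []
    warnings += check_keywords(prompt)    # rule-based forbidden word detection
    warnings += check_toxicity(prompt)    # ML-based toxicity classification
    image_bytes = generate_image(prompt)  # Stable Diffusion inference
    warnings += check_image(image_bytes)  # moderation of the generated image

    # The response carries both the image and any warnings, so the client can
    # decide how to present the result (for example, by blurring the image).
    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "warnings": warnings,
    }
```

Passing the steps in as callables keeps the sketch independent of any AWS SDK setup; in a Lambda function they would wrap the corresponding service calls.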

The following screenshot shows a sample app built using the described architecture. The web UI sends user input prompts to the RESTful proxy API and displays the image and any moderation warnings received in the response. The demo app blurs the actual generated image if it contains unsafe content. We tested the app with the sample prompt “An attractive woman.”

You can implement more sophisticated logic for a better user experience, such as rejecting the request if the prompts contain unsafe information. Additionally, you could have a retry policy to regenerate the image if the prompt is safe but the output is unsafe.
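One way to express the reject-and-retry logic described above is sketched here. It is an assumption-laden illustration: `generate_with_retry`, its parameters, and the default of three attempts are hypothetical and are not taken from the demo app.

```python
from typing import Callable, List, Optional, Tuple


def generate_with_retry(
    prompt: str,
    prompt_warnings: List[str],
    generate_image: Callable[[str], bytes],
    check_image: Callable[[bytes], List[str]],
    max_attempts: int = 3,  # hypothetical default
) -> Tuple[Optional[bytes], List[str]]:
    """Reject unsafe prompts up front; regenerate when only the output is unsafe."""
    if prompt_warnings:
        # The prompt itself was flagged, so skip generation entirely.
        return None, prompt_warnings

    image_warnings: List[str] = []
    for _ in range(max_attempts):
        image_bytes = generate_image(prompt)
        image_warnings = check_image(image_bytes)
        if not image_warnings:
            return image_bytes, []  # first safe image wins

    # Every attempt produced a flagged image; return the last set of warnings.
    return None, image_warnings
```

Because each retry triggers another Stable Diffusion inference, a small attempt limit keeps both latency and cost bounded.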

Predefine a list of negative prompts

Stable Diffusion supports negative prompts, which let you specify prompts to avoid during image generation. Creating a predefined list of negative prompts is a practical and proactive approach to prevent the model from producing unsafe images. By including prompts like “naked,” “sexy,” and “nudity,” which are known to lead to inappropriate or offensive images, you steer the model away from them, reducing the risk of generating unsafe content.

The implementation can be managed in the Lambda function when calling the SageMaker endpoint to run inference on the Stable Diffusion model, passing both the prompts from user input and the negative prompts from a predefined list.
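A minimal sketch of that call follows. The payload field names `prompt` and `negative_prompt` are assumptions based on the JumpStart text-to-image examples, so check them against the model you deploy. The helper names are hypothetical, and `runtime_client` is expected to be a Boto3 SageMaker Runtime client created by the caller.

```python
import json
from typing import Any, Dict, Optional, Sequence

# The three terms quoted in the text; a real deployment would maintain a longer list.
NEGATIVE_PROMPTS = ("naked", "sexy", "nudity")


def build_payload(
    prompt: str, negative_prompts: Optional[Sequence[str]] = None
) -> Dict[str, Any]:
    """Combine the user prompt with the predefined negative prompts."""
    terms = NEGATIVE_PROMPTS if negative_prompts is None else negative_prompts
    return {"prompt": prompt, "negative_prompt": ", ".join(terms)}


def invoke_stable_diffusion(runtime_client: Any, endpoint_name: str, prompt: str) -> bytes:
    """Send the payload to the SageMaker endpoint and return the raw response body.

    Decoding the body into an image depends on the response format of the
    deployed model, so it is left to the caller.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)).encode("utf-8"),
    )
    return response["Body"].read()
```

In a Lambda function, the client would typically be created once outside the handler with `boto3.client("sagemaker-runtime")`.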

Although this approach is effective, it can affect the results generated by the Stable Diffusion model and limit its functionality. It’s important to treat it as one of several moderation techniques, combined with other approaches such as text and image moderation using Amazon Comprehend and Amazon Rekognition.

Moderate input prompts

A common approach to text moderation is to use a rule-based keyword lookup method to identify whether the input text contains any forbidden words or phrases from a predefined list. This method is relatively easy to implement, with minimal performance impact and lower costs. However, the major drawback of this approach is that it only detects words included in the predefined list and can’t detect new or modified variations of forbidden words. Users can also attempt to bypass the rules by using alternative spellings or special characters to replace letters.
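A keyword lookup of this kind might look like the following sketch. The term list and the function name `find_forbidden_terms` are illustrative assumptions, not the list used in the solution.

```python
import re
from typing import Iterable, List

# Hypothetical forbidden-term list for illustration only.
FORBIDDEN_TERMS = ("naked", "nudity")


def find_forbidden_terms(
    prompt: str, forbidden: Iterable[str] = FORBIDDEN_TERMS
) -> List[str]:
    """Return the forbidden terms that appear in the prompt as whole words."""
    found = []
    for term in forbidden:
        # \b anchors keep the match on word boundaries; re.escape lets a term
        # contain characters that are special in regular expressions.
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            found.append(term)
    return found


print(find_forbidden_terms("A NAKED statue in a park"))  # ['naked']
print(find_forbidden_terms("A n4ked statue in a park"))  # []
```

The second call shows the weakness described above: replacing a single letter is enough to slip past the lookup.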

To address the limitations of rule-based text moderation, many solutions have adopted a hybrid approach that combines rule-based keyword lookup with ML-based toxicity detection. The combination of both approaches allows for a more comprehensive and effective text moderation solution, capable of detecting a wider range of inappropriate content and improving the accuracy of moderation results.

In this solution, we use an Amazon Comprehend custom classifier to train a toxicity detection model, which we use to detect potentially harmful content in input prompts in cases where no explicit forbidden words are detected. With the power of machine learning, we can teach the model to recognize patterns in text that may indicate toxicity, even when such patterns aren’t easily detectable by a rule-based approach.
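Calling a deployed custom classifier endpoint could look like the sketch below. It uses the Amazon Comprehend `classify_document` operation; the label name `toxic`, the 0.5 threshold, and the function name `is_toxic` are assumptions, because the actual labels depend on the training dataset. `comprehend_client` is expected to be a Boto3 Comprehend client created by the caller.

```python
from typing import Any


def is_toxic(
    comprehend_client: Any,
    endpoint_arn: str,
    text: str,
    toxic_label: str = "toxic",  # hypothetical label name from the training data
    threshold: float = 0.5,      # hypothetical decision threshold
) -> bool:
    """Classify the prompt with a custom classifier endpoint."""
    response = comprehend_client.classify_document(
        Text=text,
        EndpointArn=endpoint_arn,
    )
    # Each returned class carries a label name and a confidence score in [0, 1].
    for cls in response.get("Classes", []):
        if cls["Name"] == toxic_label and cls["Score"] >= threshold:
            return True
    return False
```

In the hybrid approach, this check would run only when the keyword lookup finds nothing, which avoids an endpoint call for prompts that are already rejected.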

With Amazon Comprehend as a managed AI service, training and inference are simplified. You can train and deploy an Amazon Comprehend custom classification model in just two steps. Check out our workshop lab for more information about the toxicity detection model using an Amazon Comprehend custom classifier. The lab provides a step-by-step guide to creating and integrating a custom toxicity classifier into your application. The following diagram illustrates this solution architecture.

This sample classifier uses a social media training dataset and performs binary classification. However, if you have more specific requirements for your text moderation needs, consider using a more tailored dataset to train your Amazon Comprehend custom classifier.

Moderate output images

Although moderating input text prompts is important, it doesn’t guarantee that all images generated by the Stable Diffusion model will be safe for the intended audience, because the model’s outputs can contain a certain level of randomness. Therefore, it’s equally important to moderate the images generated by the Stable Diffusion model.

In this solution, we use Amazon Rekognition Content Moderation, which employs pre-trained ML models to detect inappropriate content in images and videos. Specifically, we use the Amazon Rekognition DetectModerationLabels API to moderate images generated by the Stable Diffusion model in near-real time. Amazon Rekognition Content Moderation provides pre-trained APIs to analyze a wide range of inappropriate or offensive content, such as violence, nudity, hate symbols, and more. For a comprehensive list of Amazon Rekognition Content Moderation taxonomies, refer to Moderating content.

The following code demonstrates how to call the Amazon Rekognition DetectModerationLabels API to moderate images within a Lambda function using the Python Boto3 library. This function takes the image bytes returned from SageMaker and sends them to the Image Moderation API for moderation.
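A minimal sketch of such a function, assuming a Boto3 Rekognition client is created by the caller and passed in. The function name `moderate_image` and the 50% default confidence are illustrative choices.

```python
from typing import Any, List


def moderate_image(
    rekognition_client: Any,
    image_bytes: bytes,
    min_confidence: float = 50.0,  # hypothetical threshold, in percent
) -> List[str]:
    """Return the names of the moderation labels detected in the image."""
    response = rekognition_client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    # An empty list means no label met the confidence threshold.
    return [label["Name"] for label in response.get("ModerationLabels", [])]
```

Inside a Lambda function, the client would typically be created once outside the handler with `boto3.client("rekognition")`, and the returned label names can be used directly as moderation warnings in the response.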

For more examples of the Amazon Rekognition Image Moderation API, refer to our Content Moderation Image Lab.

Effective image moderation techniques for fine-tuning models

Fine-tuning is a common technique used to adapt pre-trained models to specific tasks. In the case of Stable Diffusion, fine-tuning can be used to generate images that incorporate specific objects, styles, and characters. Content moderation is critical when training a Stable Diffusion model to prevent the creation of inappropriate or offensive images. This involves carefully reviewing and filtering out any data that could lead to the generation of such images. By doing so, the model learns from a more diverse and representative range of data points, improving its accuracy and preventing the propagation of harmful content.

JumpStart makes fine-tuning the Stable Diffusion model easy by providing transfer learning scripts that use the DreamBooth method. You just need to prepare your training data, define the hyperparameters, and start the training job. For more details, refer to Fine-tune text-to-image Stable Diffusion models with Amazon SageMaker JumpStart.

The dataset for fine-tuning needs to be a single Amazon Simple Storage Service (Amazon S3) directory including your images and the instance configuration file dataset_info.json, as shown in the following code. The JSON file associates the images with the instance prompt like this: {'instance_prompt':<<instance_prompt>>}.
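As a small illustration, the configuration file can be produced with the standard `json` module. The helper names and the example prompt are hypothetical; only the file name `dataset_info.json` and the `instance_prompt` key come from the description above.

```python
import json
from pathlib import Path


def build_dataset_info(instance_prompt: str) -> str:
    """Serialize the instance prompt in the shape expected for dataset_info.json."""
    return json.dumps({"instance_prompt": instance_prompt})


def write_dataset_info(dataset_dir: str, instance_prompt: str) -> Path:
    """Write dataset_info.json next to the training images in a local directory."""
    path = Path(dataset_dir) / "dataset_info.json"
    path.write_text(build_dataset_info(instance_prompt), encoding="utf-8")
    return path


# Hypothetical instance prompt, for illustration only.
print(build_dataset_info("a photo of my dog"))
```

The local directory would then be uploaded to a single Amazon S3 prefix before starting the training job.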

You can manually review and filter the images, but this can be time-consuming and even impractical when you do it at scale across many projects and teams. In such cases, you can automate a batch process to centrally check all the images against the Amazon Rekognition DetectModerationLabels API and automatically flag or remove images so that they don’t contaminate your training.
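Such a batch check could be sketched as follows, assuming the training images sit under one Amazon S3 prefix and that Boto3 S3 and Rekognition clients are passed in by the caller. The function name `flag_unsafe_images`, the suffix filter, and the confidence threshold are illustrative assumptions.

```python
from typing import Any, Dict, List

# Rekognition image operations accept JPEG and PNG input.
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png")


def flag_unsafe_images(
    s3_client: Any,
    rekognition_client: Any,
    bucket: str,
    prefix: str,
    min_confidence: float = 50.0,  # hypothetical threshold, in percent
) -> Dict[str, List[str]]:
    """Map each flagged image key to the moderation labels detected in it."""
    flagged: Dict[str, List[str]] = {}
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if not key.lower().endswith(IMAGE_SUFFIXES):
                continue  # skip dataset_info.json and other non-image objects
            response = rekognition_client.detect_moderation_labels(
                Image={"S3Object": {"Bucket": bucket, "Name": key}},
                MinConfidence=min_confidence,
            )
            labels = [label["Name"] for label in response.get("ModerationLabels", [])]
            if labels:
                flagged[key] = labels
    return flagged
```

The returned mapping can feed a review queue or a cleanup step that moves flagged objects out of the training prefix.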

Moderation latency and cost

In this solution, a sequential pattern is used to moderate text and images. A rule-based function and Amazon Comprehend are called for text moderation before invoking Stable Diffusion, and Amazon Rekognition is called for image moderation after it. Although this approach effectively moderates input prompts and output images, it may increase the overall cost and latency of the solution, which is something to consider.

Latency

Both Amazon Rekognition and Amazon Comprehend offer managed APIs that are highly available and have built-in scalability. Despite potential latency variations due to input size and network speed, the APIs used in this solution from both services offer near-real-time inference. Amazon Comprehend custom classifier endpoints can respond in less than 200 milliseconds for input text sizes of less than 100 characters, while the Amazon Rekognition Image Moderation API responds in approximately 500 milliseconds for average file sizes of less than 1 MB. (These results come from tests conducted with the sample application and meet a near-real-time requirement.)

In total, the moderation API calls to Amazon Rekognition and Amazon Comprehend add up to 700 milliseconds to the API call. It’s important to note that the Stable Diffusion request usually takes longer, depending on the complexity of the prompts and the underlying infrastructure capability. In the test account, using an instance type of ml.p3.2xlarge, the average response time for the Stable Diffusion model via a SageMaker endpoint was around 15 seconds. Therefore, the latency introduced by moderation is approximately 5% of the overall response time, making it a minimal impact on the overall performance of the system.
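The arithmetic behind the 5% figure can be checked with a few lines. The sketch uses the measurements quoted above and computes the share both ways, because the text does not say whether the 15 seconds already includes moderation; the function name is hypothetical.

```python
from typing import Tuple


def moderation_overhead(
    comprehend_ms: float, rekognition_ms: float, generation_ms: float
) -> Tuple[float, float, float]:
    """Return total moderation time and its share of generation and end-to-end time."""
    moderation_ms = comprehend_ms + rekognition_ms
    share_of_generation = moderation_ms / generation_ms
    share_of_total = moderation_ms / (generation_ms + moderation_ms)
    return moderation_ms, share_of_generation, share_of_total


total_ms, vs_generation, vs_total = moderation_overhead(200, 500, 15_000)
print(f"{total_ms} ms, {vs_generation:.1%} of generation time, {vs_total:.1%} of end-to-end time")
# prints: 700 ms, 4.7% of generation time, 4.5% of end-to-end time
```

Either way, the overhead rounds to roughly 5%, consistent with the estimate above.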

Cost

The Amazon Rekognition Image Moderation API employs a pay-as-you-go model based on the number of requests. The cost varies depending on the AWS Region used and follows a tiered pricing structure. As the volume of requests increases, the cost per request decreases. For more information, refer to Amazon Rekognition pricing.

In this solution, we used an Amazon Comprehend custom classifier and deployed it as an Amazon Comprehend endpoint to facilitate real-time inference. This implementation incurs both a one-time training cost and ongoing inference costs. For detailed information, refer to Amazon Comprehend Pricing.

JumpStart enables you to quickly launch and deploy the Stable Diffusion model as a single package. Running inference on the Stable Diffusion model incurs costs for the underlying Amazon Elastic Compute Cloud (Amazon EC2) instance as well as inbound and outbound data transfer. For detailed information, refer to Amazon SageMaker Pricing.

Summary

In this post, we provided an overview of a sample solution that showcases how to moderate Stable Diffusion input prompts and output images using Amazon Comprehend and Amazon Rekognition. Additionally, you can define negative prompts in Stable Diffusion to prevent generating unsafe content. By implementing multiple moderation layers, the risk of producing unsafe content can be greatly reduced, ensuring a safer and more dependable user experience.

Learn more about content moderation on AWS and our content moderation ML use cases, and take the first step towards streamlining your content moderation operations with AWS.

About the Authors

Lana Zhang is a Senior Solutions Architect at AWS WWSO AI Services team, specializing in AI and ML for content moderation, computer vision, and natural language processing. With her expertise, she is dedicated to promoting AWS AI/ML solutions and assisting customers in transforming their business solutions across diverse industries, including social media, gaming, e-commerce, and advertising & marketing.

James Wu is a Senior AI/ML Specialist Solution Architect at AWS, helping customers design and build AI/ML solutions. James’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. Prior to joining AWS, James was an architect, developer, and technology leader for over 10 years, including 6 years in engineering and 4 years in marketing and advertising industries.

Kevin Carlson is a Principal AI/ML Specialist with a focus on Computer Vision at AWS, where he leads Business Development and GTM for Amazon Rekognition. Prior to joining AWS, he led Digital Transformation globally at Fortune 500 Engineering company AECOM, with a focus on artificial intelligence and machine learning for generative design and infrastructure assessment. He is based in Chicago, where outside of work he enjoys time with his family, and is passionate about flying airplanes and coaching youth baseball.

John Rouse is a Senior AI/ML Specialist at AWS, where he leads global business development for AI services focused on Content Moderation and Compliance use cases. Prior to joining AWS, he held senior-level business development and leadership roles with cutting-edge technology companies. John is working to put machine learning in the hands of every developer with the AWS AI/ML stack. Small ideas bring about small impact. John’s goal for customers is to empower them with big ideas and opportunities that open doors so they can make a major impact with their customers.
